Monocular robust visual-inertial tightly coupled localization method

A positioning method using tightly coupled visual-inertial fusion, applied in navigation, instrumentation, and mapping. It addresses three problems: existing methods cannot position both in real time and robustly, their initialization accuracy is low, and their positioning performance is poor.
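The excerpt does not describe how the method initializes. As a simplified illustration of why initialization is hard for a *monocular* system: vision alone gives motion only up to an unknown scale, so one quantity a visual-inertial initializer must recover is the metric scale `s`. The sketch below fits `s` by least squares between up-to-scale visual displacements and metric displacements. It assumes those metric displacements are already available, for example from IMU integration with gravity and biases known. The function name `estimate_scale` is hypothetical. Real initializers also estimate gravity direction, velocities, and IMU biases, and this sketch does not.

```python
# Hypothetical illustration (not the patented method): recover the metric
# scale s of a monocular, up-to-scale trajectory by least-squares fitting its
# frame-to-frame displacements to metric displacements (assumed to come from
# IMU integration with gravity and biases already known).
from typing import List, Tuple

Vec3 = Tuple[float, float, float]


def estimate_scale(visual: List[Vec3], metric: List[Vec3]) -> float:
    """Minimise sum_k || s * v_k - m_k ||^2 over the scalar s.

    Setting the derivative to zero gives s = sum(v_k . m_k) / sum(v_k . v_k).
    """
    if len(visual) != len(metric) or not visual:
        raise ValueError("need equally long, non-empty displacement lists")
    num = sum(v[0] * m[0] + v[1] * m[1] + v[2] * m[2] for v, m in zip(visual, metric))
    den = sum(v[0] ** 2 + v[1] ** 2 + v[2] ** 2 for v in visual)
    if den == 0.0:
        raise ValueError("visual displacements are all zero; scale unobservable")
    return num / den


if __name__ == "__main__":
    vis = [(1.0, 0.0, 0.0), (0.0, 2.0, 0.0), (0.5, 0.5, 0.0)]  # up-to-scale
    imu = [(2.0, 0.0, 0.0), (0.0, 4.0, 0.0), (1.0, 1.0, 0.0)]  # metric
    print(estimate_scale(vis, imu))  # 2.0
```

The closed form follows from the scalar least-squares problem having a single unknown. With noisy IMU displacements the same formula gives the best-fit scale rather than an exact one.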

Examples

Embodiment 1

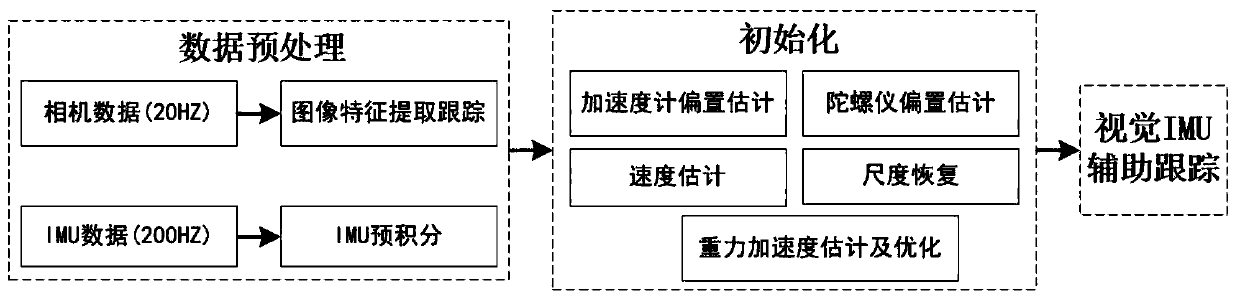

[0190] The method proposed by the present invention can, in principle, be applied to existing conventional visual-inertial fusion positioning frameworks (VIO). Such a framework consists of two modules: a front-end and a back-end. The front-end estimates the camera motion between adjacent images from the IMU measurements and the images. The back-end receives the camera motion estimated by the front-end at different times and performs local and global optimization to obtain a globally consistent trajectory.
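The front-end/back-end split described above can be illustrated with a toy, translation-only 2D pose graph. This is a sketch under strong simplifying assumptions, not the patent's or ORB_SLAM2's implementation. The "front-end" output is taken as a given list of relative motions between adjacent frames. `dead_reckon` chains them, which accumulates drift. `back_end` adds a loop-closure constraint and solves a global linear least-squares problem to redistribute the error. All function names are hypothetical. A real VIO back-end optimizes full 6-DoF poses, velocities, and IMU biases with nonlinear solvers.

```python
# Toy translation-only pose graph (hypothetical names): sequential front-end
# motions plus loop closures, solved per axis by linear least squares.
from typing import Dict, List, Tuple

Vec2 = Tuple[float, float]
Edge = Tuple[int, int, float]  # (i, j, measured p_j - p_i) along one axis


def dead_reckon(odometry: List[Vec2]) -> List[Vec2]:
    """Chain front-end relative motions into a trajectory starting at the origin."""
    poses = [(0.0, 0.0)]
    for dx, dy in odometry:
        x, y = poses[-1]
        poses.append((x + dx, y + dy))
    return poses


def solve_axis(num_poses: int, edges: List[Edge]) -> List[float]:
    """Least-squares positions along one axis, with pose 0 fixed at 0."""
    n = num_poses - 1  # unknowns p_1 .. p_{num_poses-1}
    H = [[0.0] * n for _ in range(n)]  # normal matrix A^T A
    g = [0.0] * n                      # right-hand side A^T z
    for i, j, z in edges:
        # Residual (p_j - p_i) - z: coefficient +1 on p_j, -1 on p_i.
        coeffs: Dict[int, float] = {}
        if j > 0:
            coeffs[j - 1] = coeffs.get(j - 1, 0.0) + 1.0
        if i > 0:
            coeffs[i - 1] = coeffs.get(i - 1, 0.0) - 1.0
        for a, ca in coeffs.items():
            g[a] += ca * z
            for b, cb in coeffs.items():
                H[a][b] += ca * cb
    # Gaussian elimination with partial pivoting, then back-substitution.
    for col in range(n):
        piv = max(range(col, n), key=lambda r: abs(H[r][col]))
        H[col], H[piv] = H[piv], H[col]
        g[col], g[piv] = g[piv], g[col]
        for r in range(col + 1, n):
            f = H[r][col] / H[col][col]
            for c in range(col, n):
                H[r][c] -= f * H[col][c]
            g[r] -= f * g[col]
    x = [0.0] * n
    for r in range(n - 1, -1, -1):
        x[r] = (g[r] - sum(H[r][c] * x[c] for c in range(r + 1, n))) / H[r][r]
    return [0.0] + x


def back_end(odometry: List[Vec2], loops: List[Tuple[int, int, Vec2]]) -> List[Vec2]:
    """Fuse sequential front-end motions and loop closures into one trajectory."""
    edges = [(k, k + 1, d) for k, d in enumerate(odometry)] + loops
    num_poses = len(odometry) + 1
    xs = solve_axis(num_poses, [(i, j, d[0]) for i, j, d in edges])
    ys = solve_axis(num_poses, [(i, j, d[1]) for i, j, d in edges])
    return list(zip(xs, ys))


if __name__ == "__main__":
    # Unit square driven counter-clockwise; each x-step carries +0.1 drift.
    odo = [(1.1, 0.0), (0.1, 1.0), (-0.9, 0.0), (0.1, -1.0)]
    print(dead_reckon(odo)[-1])     # drifted end pose, x close to 0.4
    loop = [(0, 4, (0.0, 0.0))]      # loop closure: pose 4 revisits pose 0
    print(back_end(odo, loop)[-1])   # x close to 0.08 after optimization
```

With equal weights, the 0.4 loop inconsistency is spread evenly over the five edges of the cycle, so each edge absorbs 0.08. This is the sense in which global optimization yields a *consistent* trajectory: errors are distributed instead of accumulating at the end.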

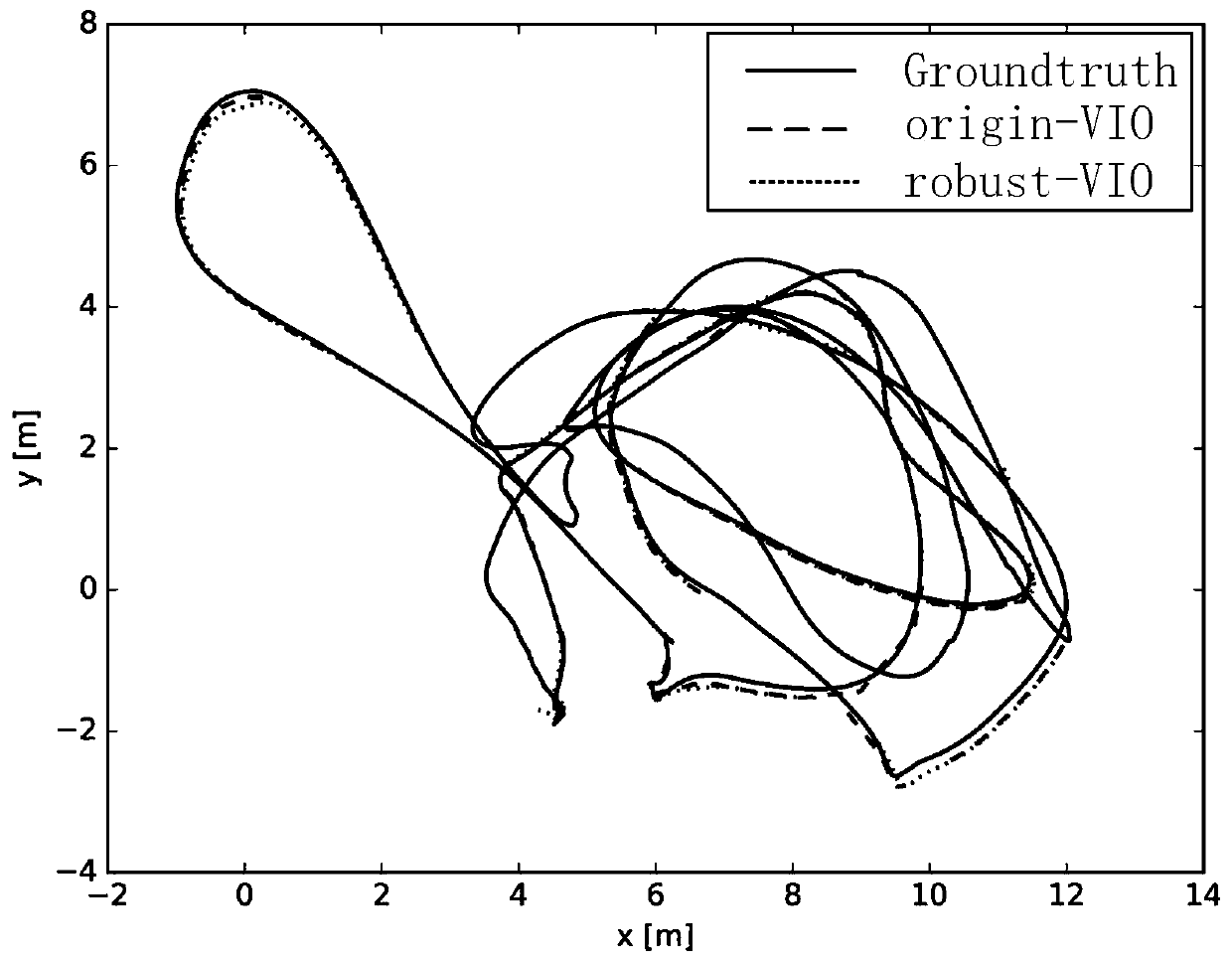

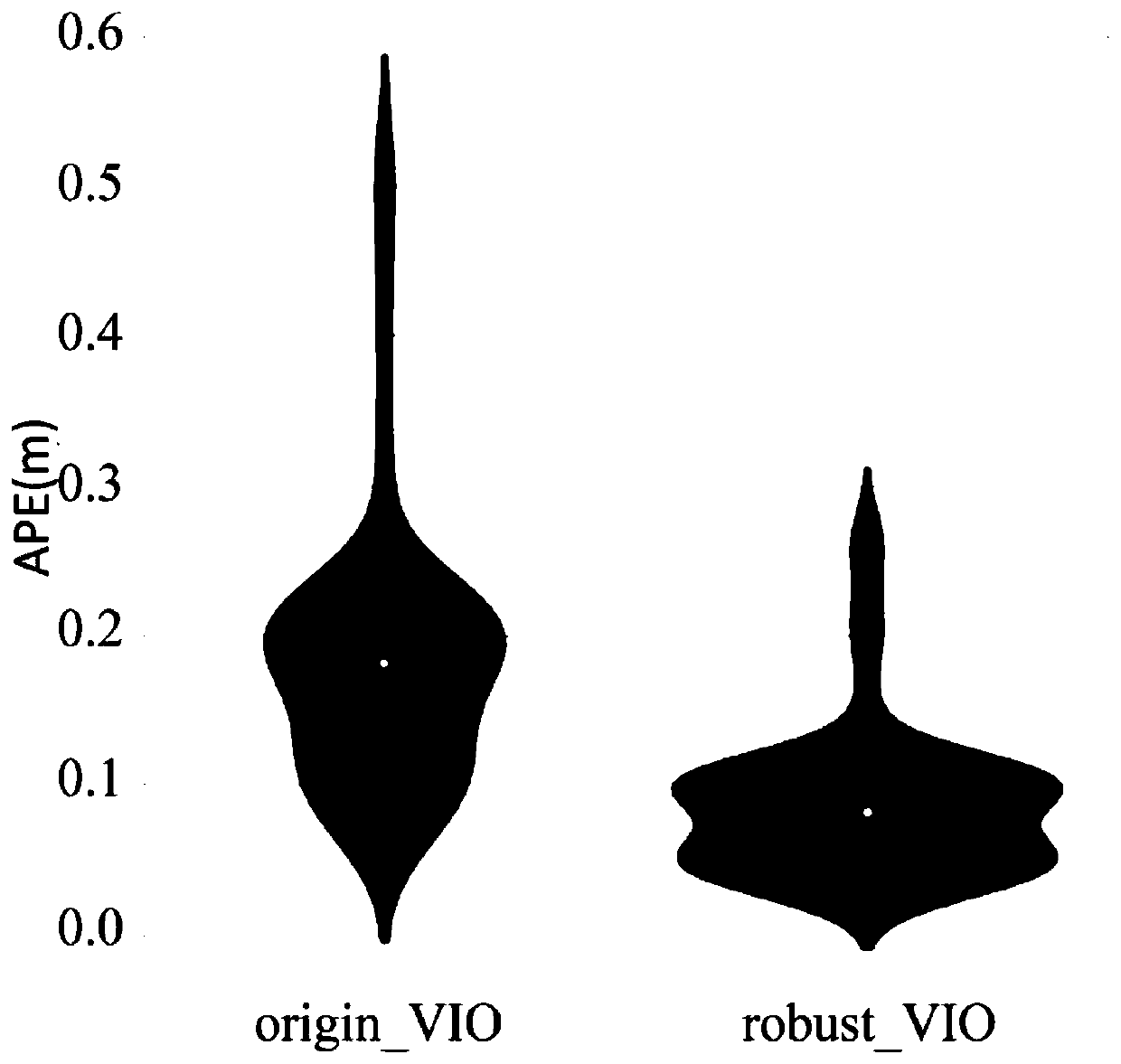

[0191] Existing VIO systems include OKVIS, the ORB_SLAM2-based monocular visual-inertial fusion positioning system, and VINS. The experiments take visual-inertial ORB_SLAM2 (hereinafter origin_VIO) as the base system and use nine sequences of the EuRoC dataset for testing. The dataset records the dynamic motion of a UAV carrying a VI-Sensor stereo visual-inertial camera in different rooms and industrial environments. The image acquis...
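The excerpt is cut off before it names its evaluation metric. Absolute trajectory error (ATE) as a root-mean-square error is a common choice for EuRoC, so the sketch below assumes it. It also assumes the estimated and ground-truth positions are already time-associated (same length, matching timestamps), and it aligns the trajectories only by removing their mean offset. Full evaluations normally also solve for rotation, and for monocular systems often scale, for example with Umeyama alignment. The function name `ate_rmse` is hypothetical.

```python
# Hypothetical ATE-RMSE sketch with translation-only (mean-offset) alignment.
import math
from typing import List, Tuple

Vec3 = Tuple[float, float, float]


def ate_rmse(estimated: List[Vec3], ground_truth: List[Vec3]) -> float:
    """RMSE of position error after removing the mean offset between trajectories.

    Assumes both lists are already time-associated. Rotation and scale are
    NOT aligned here; a full evaluation would typically do so.
    """
    if len(estimated) != len(ground_truth) or not estimated:
        raise ValueError("trajectories must be non-empty and time-associated")
    n = len(estimated)
    mean_e = [sum(p[k] for p in estimated) / n for k in range(3)]
    mean_g = [sum(p[k] for p in ground_truth) / n for k in range(3)]
    sq = 0.0
    for e, g in zip(estimated, ground_truth):
        for k in range(3):
            d = (e[k] - mean_e[k]) - (g[k] - mean_g[k])
            sq += d * d
    return math.sqrt(sq / n)


if __name__ == "__main__":
    gt = [(0.0, 0.0, 0.0), (1.0, 0.0, 0.0)]
    est = [(0.0, 0.0, 0.0), (1.0, 0.0, 2.0)]
    print(ate_rmse(est, gt))  # 1.0
```

A constant offset between the two trajectories yields zero error under this alignment. Only the shape of the trajectory is compared, which is why the choice of alignment must be reported alongside the number.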

