Target space positioning method and device based on neural network

A neural network and spatial positioning technology in the information field. It addresses problems such as low processing efficiency, improves positioning accuracy, and enables real-time, intuitive positioning.

Examples

Embodiment 1

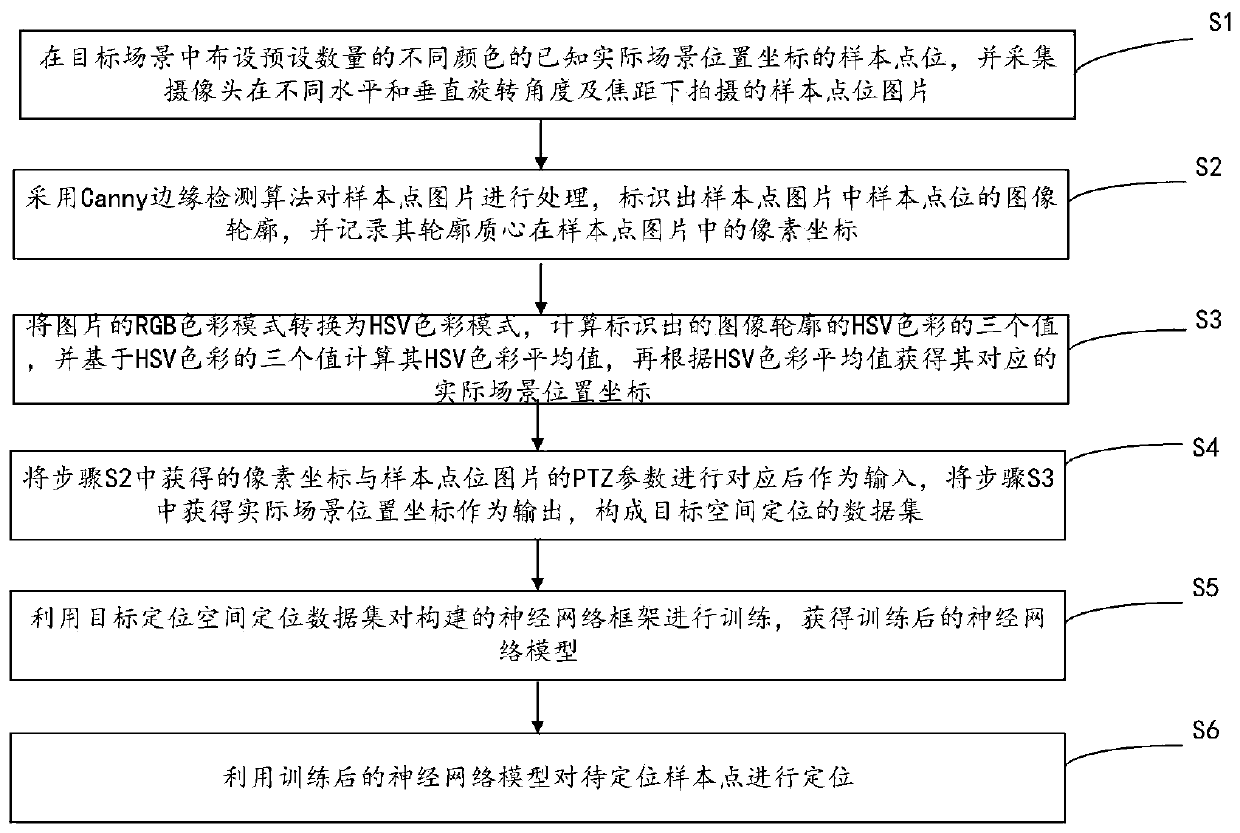

[0056] This embodiment of the present invention provides a neural network-based target space positioning method. Referring to Figure 1, the method includes:

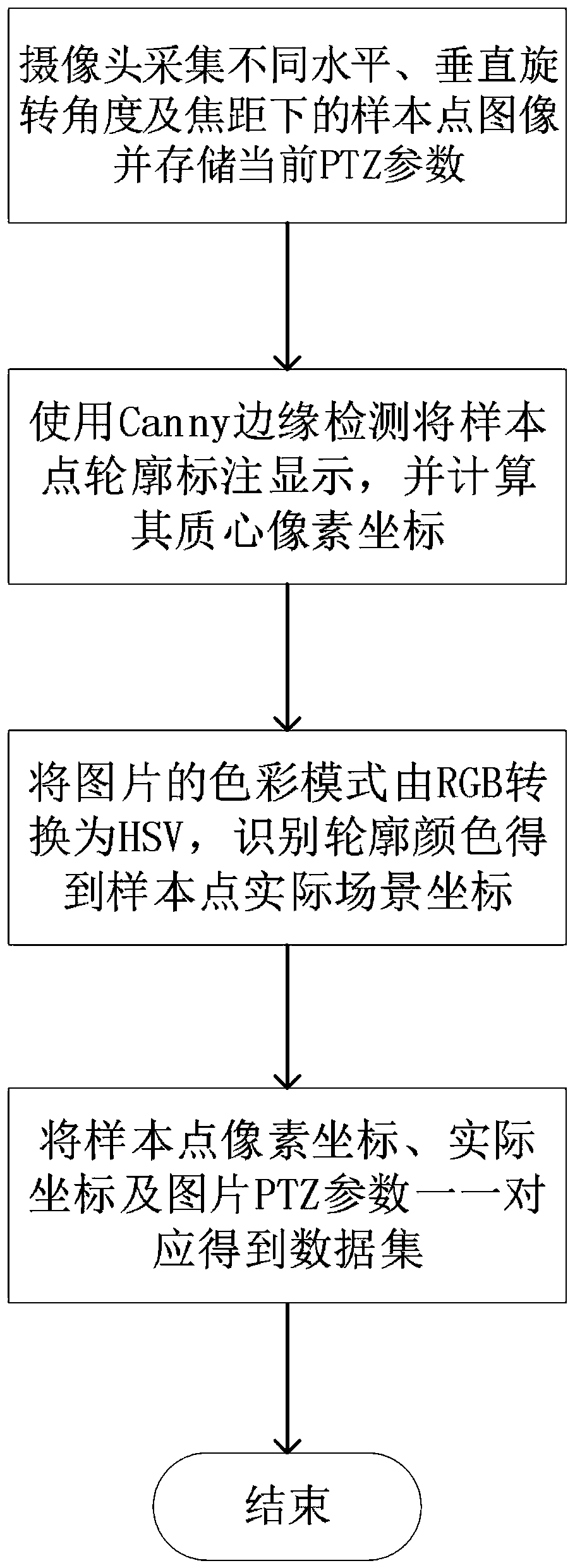

[0057] Step S1: Arrange a preset number of sample points of different colors, each with known actual-scene position coordinates, in the target scene, and collect pictures of the sample points taken by the camera at different horizontal and vertical rotation angles and focal lengths.

[0058] Specifically, the preset number can be set according to actual conditions. "Sample points of different colors with known actual-scene position coordinates" means that each color corresponds to a different known actual coordinate, so a sample point's color identifies its position in the scene.

[0059] As shown in Figure 4, circular sample points printed in black, white, gray, red, orange, yellow, green, blue, purple and other colors are pasted on the wall, and photos are taken at different camera angles. The sample image is then moved continuously, and photos of the sample...
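The observations gathered in Step S1 can be organized as one record per detected sample point per camera setting. The sketch below is a minimal illustration under an assumption the source does not state: that the network input is the horizontal angle, vertical angle, focal length and pixel centroid of a point, and that the target is the point's known scene coordinate. The names `SampleRecord`, `capture_schedule`, `build_records` and `to_training_pairs` are hypothetical.

```python
from dataclasses import dataclass
from itertools import product


@dataclass(frozen=True)
class SampleRecord:
    """One colored sample point seen under one camera setting (hypothetical layout)."""
    pan_deg: float      # horizontal rotation angle of the camera
    tilt_deg: float     # vertical rotation angle of the camera
    focal: float        # focal length (or zoom step)
    u: float            # pixel x of the contour centroid
    v: float            # pixel y of the contour centroid
    color: str          # color label of the sample point
    scene_xyz: tuple    # known actual-scene coordinates for that color


def capture_schedule(pans, tilts, focals):
    """Enumerate every (pan, tilt, focal) combination to photograph."""
    return list(product(pans, tilts, focals))


def build_records(observations, known_coords):
    """Join per-picture detections with the color -> coordinate table.

    observations: iterable of (pan, tilt, focal, color, (u, v)) tuples.
    Detections whose color has no known coordinate are skipped.
    """
    records = []
    for pan, tilt, focal, color, (u, v) in observations:
        if color not in known_coords:
            continue
        records.append(
            SampleRecord(pan, tilt, focal, u, v, color, known_coords[color])
        )
    return records


def to_training_pairs(records):
    """Split records into assumed network inputs and regression targets."""
    inputs = [(r.pan_deg, r.tilt_deg, r.focal, r.u, r.v) for r in records]
    targets = [r.scene_xyz for r in records]
    return inputs, targets


if __name__ == "__main__":
    known = {"red": (1.0, 0.5, 0.0), "blue": (2.0, 0.5, 0.0)}
    schedule = capture_schedule([-10, 0, 10], [0, 5], [4.0, 8.0])
    obs = [(0, 0, 4.0, "red", (320.0, 240.0)), (0, 0, 4.0, "green", (100.0, 50.0))]
    X, Y = to_training_pairs(build_records(obs, known))
    print(len(schedule), X, Y)
```

Keeping the color-to-coordinate table separate from the detections mirrors paragraph [0058]: the color alone identifies which known coordinate a detection belongs to.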

Embodiment 2

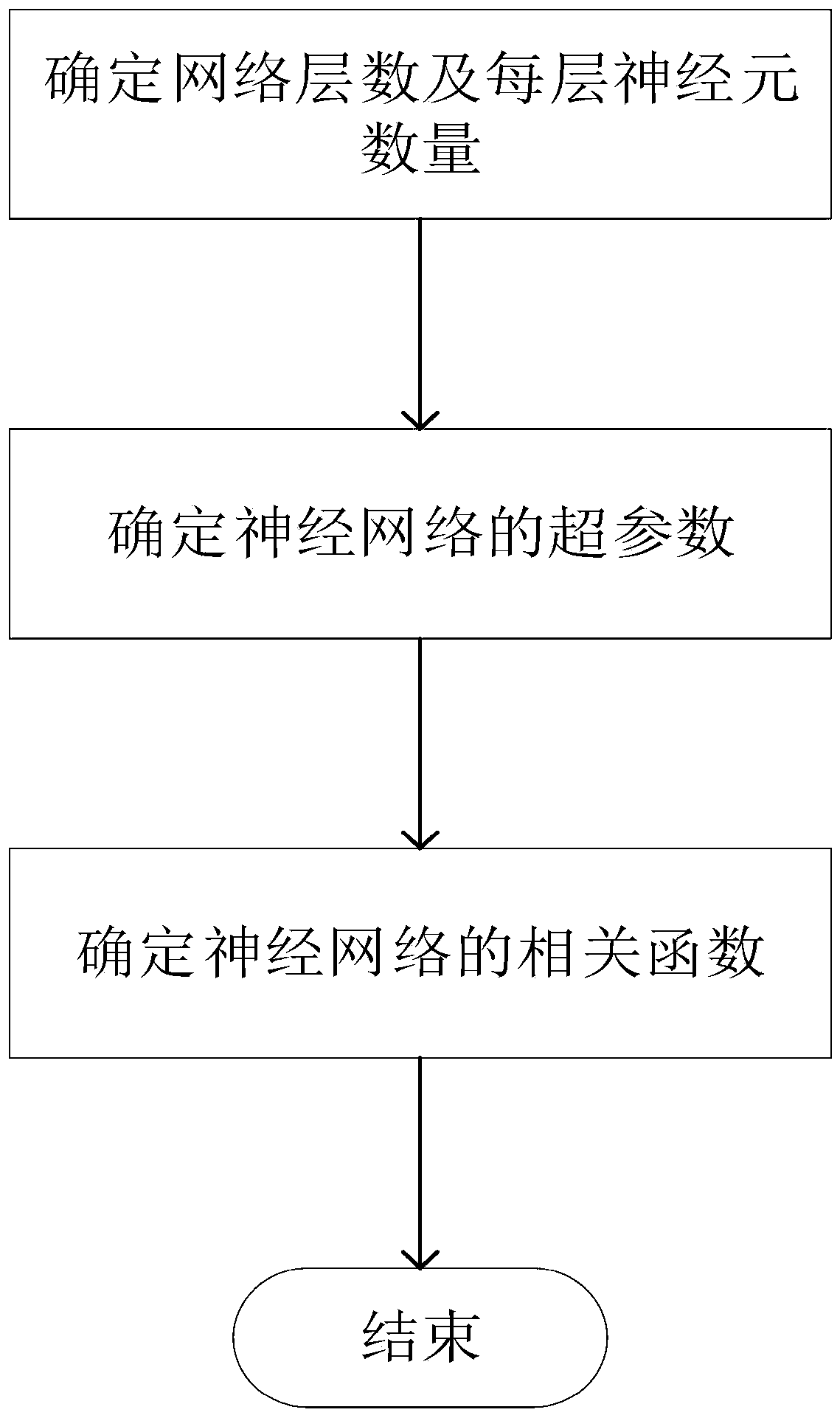

[0113] This embodiment provides a neural network-based target space positioning device. Referring to Figure 8, the device comprises:

[0114] The sample point picture collection module 201 is used to arrange a preset number of sample points of different colors, each with known actual-scene position coordinates, in the target scene, and to collect pictures of the sample points taken by the camera at different horizontal and vertical rotation angles and focal lengths;

[0115] The image contour identification module 202 is used to process the sample point pictures with the Canny edge detection algorithm, identify the image contour of each sample point in the picture, and record the pixel coordinates of the contour's centroid in the sample point picture;
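With OpenCV this step would typically be `cv2.Canny` followed by `cv2.findContours`, with the centroid taken from `cv2.moments` as (m10/m00, m01/m00). The pure-Python sketch below reproduces only the centroid calculation for a contour given as a list of pixel points, so it runs without OpenCV. The function names and the `min_area` noise filter are hypothetical additions, not taken from the source.

```python
def contour_area(contour):
    """Absolute area of a closed polygonal contour (shoelace formula)."""
    n = len(contour)
    s = 0.0
    for i in range(n):
        x0, y0 = contour[i]
        x1, y1 = contour[(i + 1) % n]
        s += x0 * y1 - x1 * y0
    return abs(s) / 2.0


def polygon_centroid(contour):
    """Centroid (cx, cy) of a closed contour given as [(x, y), ...].

    Uses the Green's-theorem form of the moments m00, m10, m01, so
    cx = m10 / m00 and cy = m01 / m00; works for either winding order.
    """
    n = len(contour)
    if n < 3:
        raise ValueError("a contour needs at least 3 points")
    a = cx = cy = 0.0
    for i in range(n):
        x0, y0 = contour[i]
        x1, y1 = contour[(i + 1) % n]
        cross = x0 * y1 - x1 * y0
        a += cross
        cx += (x0 + x1) * cross
        cy += (y0 + y1) * cross
    if a == 0:
        # Degenerate (zero-area) contour: fall back to the mean of its points.
        return (sum(p[0] for p in contour) / n, sum(p[1] for p in contour) / n)
    a *= 0.5  # signed area
    return (cx / (6.0 * a), cy / (6.0 * a))


def centroids_of(contours, min_area=20.0):
    """Centroids of contours large enough to be sample points, not noise."""
    return [
        polygon_centroid(c)
        for c in contours
        if len(c) >= 3 and contour_area(c) >= min_area
    ]


if __name__ == "__main__":
    square = [(0, 0), (4, 0), (4, 4), (0, 4)]
    speck = [(0, 0), (1, 0), (0, 1)]
    print(centroids_of([square, speck], min_area=1.0))
```

The area threshold is one simple way to discard small edge fragments that Canny may produce around the printed circles; its value would need tuning to the picture resolution.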

[0116] The color mode conversion module 203 is used to convert the picture from the RGB color mode to the HSV color mode, calculate the three HSV values of each identified image contour, and calculate the HSV color av...
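Because hue is an angle, a red contour can contain pixels with hue near 0 and near 1, and a plain arithmetic mean of H can land on the opposite color (cyan). The sketch below uses only Python's standard `colorsys` module (H, S, V all in [0, 1], unlike OpenCV's 0 to 179 hue scale). It averages hue as a saturation-weighted unit vector and matches the result to the nearest entry of a reference palette. The averaging scheme, the distance function and the values in `REFERENCE_HSV` are assumptions for illustration; the source's exact averaging procedure is truncated.

```python
import colorsys
import math

# Hypothetical reference colors (h, s, v) in [0, 1]; in practice they would be
# measured from the printed sample points under the scene's lighting.
REFERENCE_HSV = {
    "black": (0.0, 0.0, 0.0),
    "white": (0.0, 0.0, 1.0),
    "gray": (0.0, 0.0, 0.5),
    "red": (0.0, 1.0, 1.0),
    "orange": (30 / 360, 1.0, 1.0),
    "yellow": (60 / 360, 1.0, 1.0),
    "green": (120 / 360, 1.0, 1.0),
    "blue": (240 / 360, 1.0, 1.0),
    "purple": (280 / 360, 1.0, 1.0),
}


def mean_hsv(rgb_pixels):
    """Average HSV of the (r, g, b) pixels (0..255) inside one contour."""
    sx = sy = s_sum = v_sum = 0.0
    n = 0
    for r, g, b in rgb_pixels:
        h, s, v = colorsys.rgb_to_hsv(r / 255.0, g / 255.0, b / 255.0)
        angle = 2.0 * math.pi * h
        # Saturation-weighted unit vector: gray pixels (s == 0) have no
        # meaningful hue and contribute nothing to the hue average.
        sx += s * math.cos(angle)
        sy += s * math.sin(angle)
        s_sum += s
        v_sum += v
        n += 1
    if n == 0:
        raise ValueError("contour contains no pixels")
    h_mean = (math.atan2(sy, sx) / (2.0 * math.pi)) % 1.0
    return (h_mean, s_sum / n, v_sum / n)


def hsv_distance(a, b):
    """Distance between two HSV colors, treating hue as circular."""
    dh = abs(a[0] - b[0])
    dh = min(dh, 1.0 - dh)  # wrap-around hue difference, at most 0.5
    # Scale the hue term by the smaller saturation: hue differences between
    # near-gray colors are not meaningful.
    hue_term = 2.0 * dh * min(a[1], b[1])
    return math.sqrt(hue_term ** 2 + (a[1] - b[1]) ** 2 + (a[2] - b[2]) ** 2)


def classify_color(hsv, palette=REFERENCE_HSV):
    """Return the palette color name nearest to an averaged HSV value."""
    return min(palette, key=lambda name: hsv_distance(hsv, palette[name]))


if __name__ == "__main__":
    # Two reddish pixels on either side of hue 0: a naive mean would give ~0.5.
    avg = mean_hsv([(255, 0, 10), (255, 10, 0)])
    print(avg, classify_color(avg))
```

Once a contour's color is classified, the color-to-coordinate correspondence of paragraph [0058] gives the known scene coordinate to pair with that contour's pixel centroid.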
