Dynamic gesture tracking method based on convolutional neural network

A dynamic gesture tracking method based on a convolutional neural network. It addresses the problems of insufficient real-time performance and poor tracking quality, and aims for good generalization ability, sufficient real-time performance, and strong tracking results.


Examples

Specific Embodiment 1

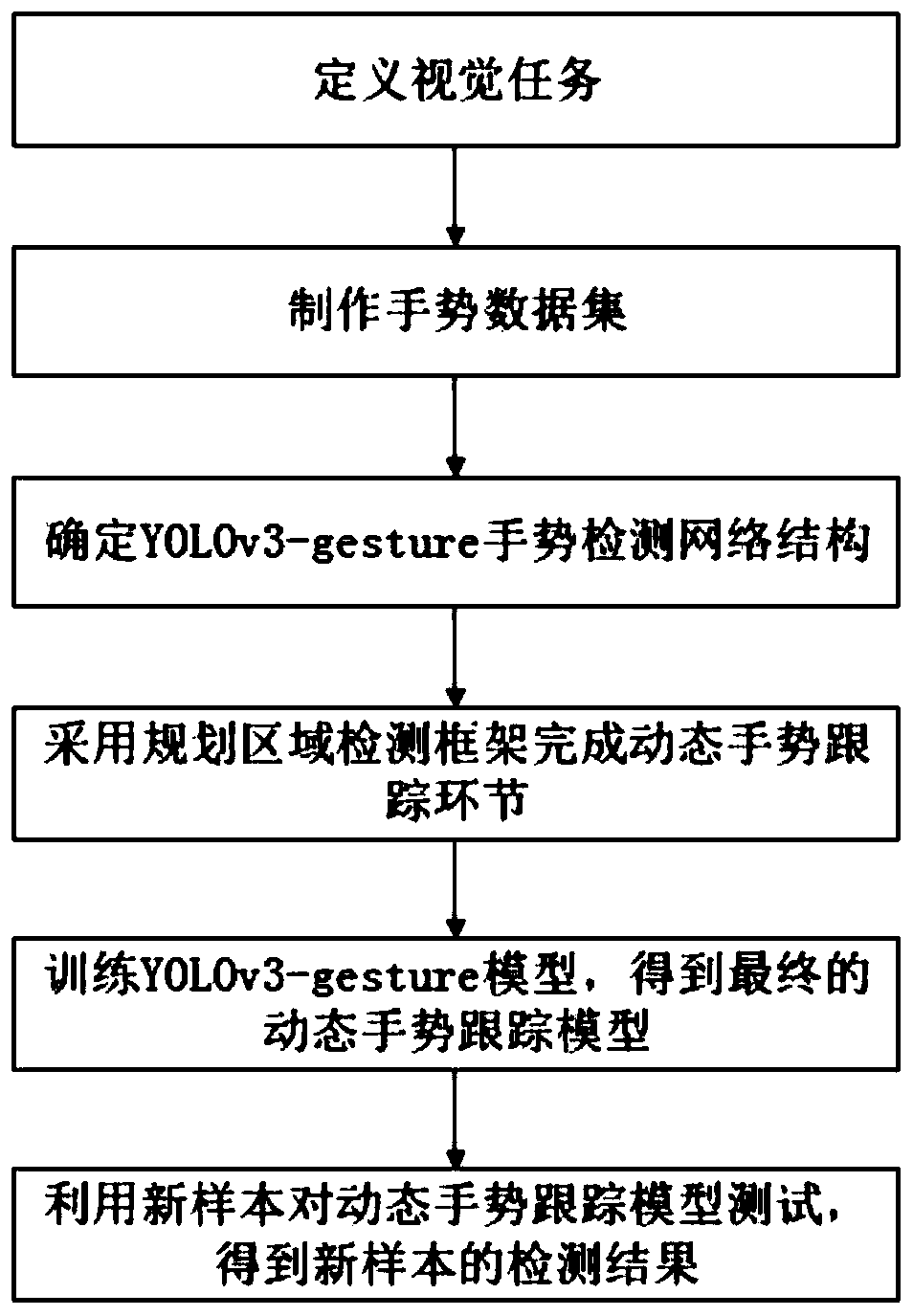

[0020] Embodiment 1: This embodiment is described with reference to Figure 1 and Figure 2. The convolutional neural network-based dynamic gesture tracking method of this embodiment includes the following steps:

[0021] Step 1: Treat the tracking of dynamic gestures in complex backgrounds as a vision task;

[0022] Step 2: Select gesture image samples, filter them, and build a gesture training set;

[0023] Step 3: Determine the YOLOv3-gesture gesture detection network structure;

[0024] Step 4: Use the planning area detection framework to complete dynamic gesture tracking;

[0025] Step 5: Train the YOLOv3-gesture model to obtain a dynamic gesture tracking model;

[0026] Step 6: Use the obtained model to complete dynamic gesture tracking.

[0027] The resulting dynamic gesture tracking model is then tested on new samples to obtain detection results for those samples.
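The text does not say how the detection results on new samples are scored. One common way to check a detector's boxes is intersection over union (IoU) against labeled boxes, so the following is a minimal sketch under that assumption. The function names, the `(cx, cy, w, h)` box layout, and the 0.5 threshold are illustrative choices, not taken from the patent.

```python
def to_corners(box):
    """Convert a (cx, cy, w, h) box to (x1, y1, x2, y2) corners."""
    cx, cy, w, h = box
    return (cx - w / 2, cy - h / 2, cx + w / 2, cy + h / 2)


def iou(pred, truth):
    """Intersection over union of two (cx, cy, w, h) boxes."""
    px1, py1, px2, py2 = to_corners(pred)
    tx1, ty1, tx2, ty2 = to_corners(truth)
    # Overlap extent along each axis, zero when the boxes do not overlap.
    inter_w = max(0.0, min(px2, tx2) - max(px1, tx1))
    inter_h = max(0.0, min(py2, ty2) - max(py1, ty1))
    inter = inter_w * inter_h
    union = pred[2] * pred[3] + truth[2] * truth[3] - inter
    return inter / union if union > 0 else 0.0


def detection_rate(preds, truths, threshold=0.5):
    """Fraction of samples whose predicted box matches the labeled box.

    A prediction of None means the model found no gesture in that sample.
    The threshold is an assumed, commonly used value.
    """
    hits = sum(
        1 for p, t in zip(preds, truths)
        if p is not None and iou(p, t) >= threshold
    )
    return hits / len(truths) if truths else 0.0
```

For example, `detection_rate(model_boxes, labeled_boxes)` would summarize a batch of new samples as a single number, where `model_boxes` and `labeled_boxes` are hypothetical lists of boxes in the same order.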

[002...

Specific Embodiment 2

[0032] Embodiment 2: This embodiment further describes Embodiment 1. It differs from Embodiment 1 in the details of Step 3: first, keep the residual modules of Darknet-53, add a 1×1 convolution kernel after each residual module, and use a linear activation function in the first convolution layer; then adjust the number of residual network layers in each residual module.

[0033] This embodiment structurally addresses a shortcoming of the standard Darknet-53 network: for detecting a single object class such as gestures, it is overly complex and redundant. The specific implementation steps are as follows:

[0034] 1. Keep the residual modules of Darknet-53, add a 1×1 convolution kernel after each residual module to further reduce the output dimension, and use a linear activation function in the first convolution layer to avoid the problem of low-dimensional convolution layer fe...
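To illustrate why a 1×1 convolution reduces the output dimension, the sketch below computes one in plain Python: each output channel is a weighted sum of the input channels at the same pixel, so the spatial size is unchanged while the channel count drops. No non-linearity is applied, which is what a linear activation amounts to. This is only an arithmetic illustration, not the YOLOv3-gesture network; the function name and the nested-list data layout are assumptions made for the example.

```python
def conv1x1(feature_map, weights):
    """Apply a 1x1 convolution with linear (identity) activation.

    feature_map: list of C_in channels, each an H x W list of lists.
    weights:     C_out x C_in list of per-channel mixing weights.
    Returns a list of C_out channels with the same H x W size.
    """
    c_in = len(feature_map)
    height = len(feature_map[0])
    width = len(feature_map[0][0])
    output = []
    for w_row in weights:
        # One output channel: per-pixel weighted sum over input channels.
        channel = [
            [sum(w_row[c] * feature_map[c][y][x] for c in range(c_in))
             for x in range(width)]
            for y in range(height)
        ]
        output.append(channel)
    return output


# Two 1x2 input channels mixed down to a single output channel.
features = [[[1, 2]], [[3, 4]]]
reduced = conv1x1(features, [[1, -1]])
# Negative values pass through unchanged because the activation is linear.
```

A ReLU-style activation would clip the negative outputs above to zero, which is one way information can be lost when the channel count is already small.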

Specific Embodiment 3

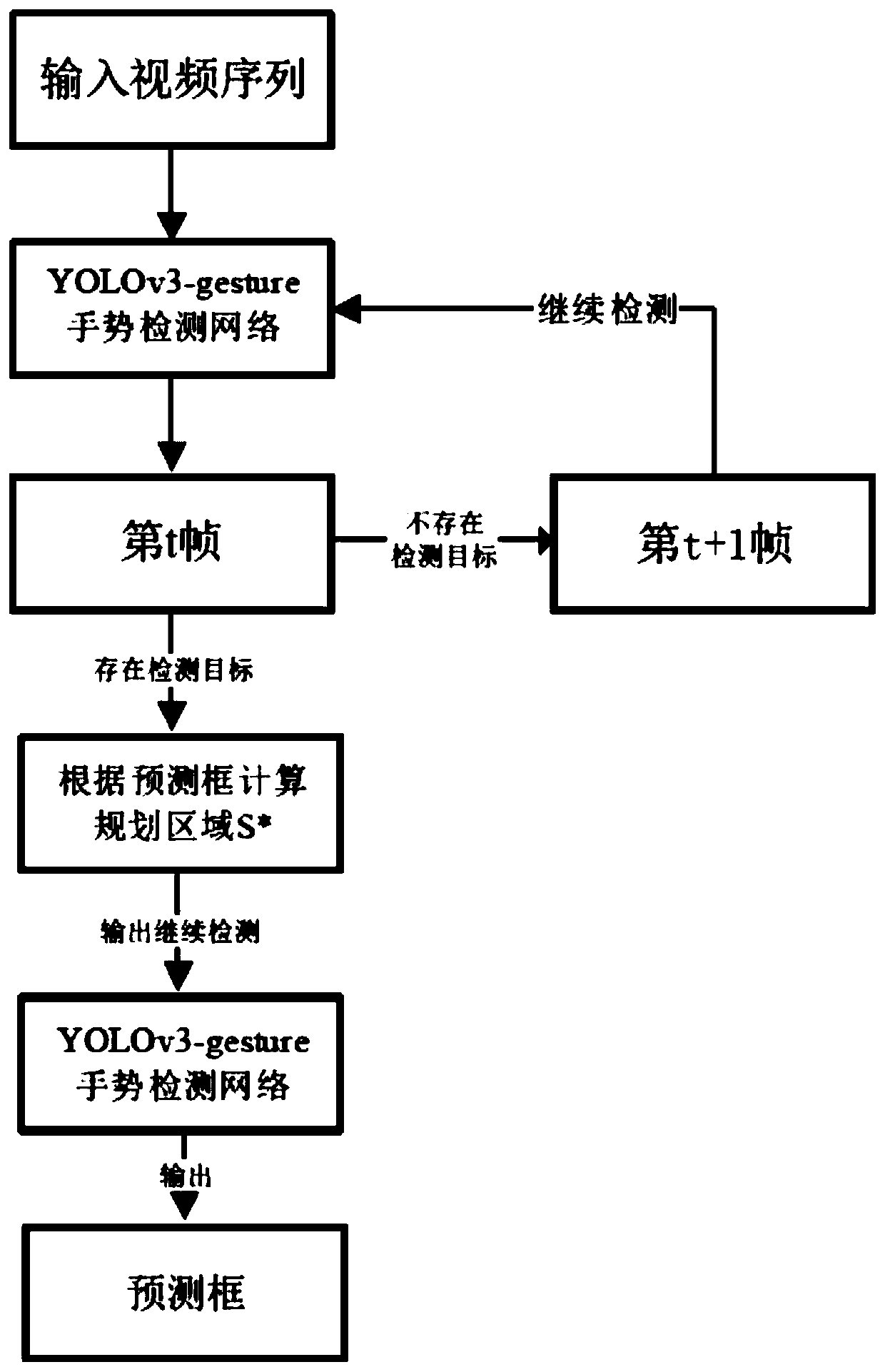

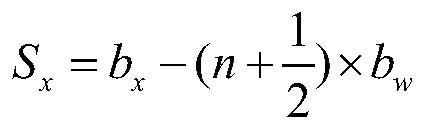

[0036] Embodiment 3: This embodiment further describes Embodiment 1. It differs from Embodiment 1 in the details of Step 4, where the planning area detection framework is used to complete dynamic gesture tracking. First, assume that a gesture target $Object_1$ is detected in the $t$-th frame image. The YOLOv3-gesture network then outputs a prediction box $X_1$ with center coordinates $(b_x, b_y)$, width $b_w$, and height $b_h$. On entering frame $t+1$, a planning area is generated near the center point from frame $t$ and used for detection; that is, at frame $t+1$ the input to the YOLOv3-gesture network is the planning area $S^*$. The width $S_w$ and height $S_h$ of $S^*$ are determined by the prediction box width $b_w$ and height $b_h$. Taking the center point from frame $t$ as the origin, the formula for the upper-left vertex $(S_x, S_y)$ is as fol...
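The exact formula for the planning area is cut off in this text, so the following is only a rough sketch of the general idea: build a region around the previous frame's box center whose size depends on the previous box width and height, then map detections made inside that region back to full-frame coordinates. The `scale` factor, the clamping to image bounds, and both function names are assumptions for illustration and are not the patent's formula.

```python
def planning_region(prev_box, image_size, scale=2.0):
    """Return (S_x, S_y, S_w, S_h) for the next frame's search region.

    prev_box:   (b_x, b_y, b_w, b_h) predicted in frame t (center, size).
    image_size: (image_width, image_height) in pixels.
    scale:      hypothetical enlargement factor; the source formula is
                truncated, so this value is an assumption.
    """
    b_x, b_y, b_w, b_h = prev_box
    img_w, img_h = image_size
    # Region size grows with the previous box, capped at the image size.
    s_w = min(img_w, scale * b_w)
    s_h = min(img_h, scale * b_h)
    # Center the region on the previous box center, then clamp the
    # upper-left corner so the region stays inside the image.
    s_x = max(0.0, min(b_x - s_w / 2, img_w - s_w))
    s_y = max(0.0, min(b_y - s_h / 2, img_h - s_h))
    return (s_x, s_y, s_w, s_h)


def to_frame_coords(box_in_region, region):
    """Shift a (cx, cy, w, h) box detected inside the region back to
    full-frame coordinates."""
    cx, cy, w, h = box_in_region
    s_x, s_y, _, _ = region
    return (s_x + cx, s_y + cy, w, h)
```

In a tracking loop, frame `t+1` would be cropped to the returned region before detection, and the detected box would be passed through `to_frame_coords` to become the `prev_box` for frame `t+2`. Searching a smaller input is what makes this kind of scheme cheaper than running the detector on the full frame every time.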
