Implementation method from 3D sparse point cloud to 2D grid map based on VSLAM

An implementation method based on VSLAM that uses sparse point cloud technology to convert a 3D sparse point cloud into a 2D grid map. It applies to image enhancement, image analysis, photo interpretation and similar fields. It addresses positioning and navigation needs while avoiding the high cost of laser radar, and improves operating efficiency.
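The excerpt does not spell out the conversion steps. As a hedged illustration only, the Python sketch below shows one common way to flatten a sparse VSLAM point cloud into a 2D grid: keep points within an obstacle height band, discretize x and y by a cell size, and count points per cell. The function names `project_to_grid` and `occupied_cells`, the height band, the 0.05 m default resolution and the `min_hits` threshold are hypothetical assumptions, not parameters taken from the patent.

```python
import math
from typing import Dict, Iterable, Set, Tuple

Point3 = Tuple[float, float, float]
Cell = Tuple[int, int]


def project_to_grid(points: Iterable[Point3],
                    resolution: float = 0.05,
                    z_min: float = 0.1,
                    z_max: float = 1.5) -> Dict[Cell, int]:
    """Count how many map points fall into each 2D grid cell.

    Hypothetical helper: assumes points are already expressed in a
    gravity-aligned world frame (z up, metres). Points outside
    [z_min, z_max] (floor, ceiling) are dropped; the rest are
    flattened onto the x-y plane.
    """
    hits: Dict[Cell, int] = {}
    for x, y, z in points:
        if not (z_min <= z <= z_max):
            continue  # ignore floor/ceiling points that are not obstacles
        # math.floor keeps negative coordinates in the correct cell
        cell = (math.floor(x / resolution), math.floor(y / resolution))
        hits[cell] = hits.get(cell, 0) + 1
    return hits


def occupied_cells(hits: Dict[Cell, int], min_hits: int = 2) -> Set[Cell]:
    """Mark a cell occupied only if enough points support it (noise filter)."""
    return {cell for cell, n in hits.items() if n >= min_hits}
```

For example, with `resolution=0.5`, the points `(1.2, 0.7, 0.5)` and `(1.4, 0.9, 0.8)` both land in cell `(2, 1)`, while a floor point at `z = 0.05` is discarded. Requiring several hits per cell is one simple way to suppress isolated outlier points, which are common in sparse feature maps.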

Embodiment Construction

[0031] The present invention will be further described below in conjunction with the accompanying drawings and specific embodiments.

[0032] Table 1 lists the system platform parameters. The hardware platform used for implementation is the Jetson TX2, running Linux. The experiments include both dataset testing and field testing. For field testing, a MYNTEYE camera was used for data collection, and performance was evaluated under different lighting conditions in different scenes: the experimental corridors and the rooftop balconies.

[0033]

| Parameter | Implementation conditions |
|---|---|
| System hardware platform | Jetson TX2 |
| Vision sensor | MYNTEYE S1030 |
| Operating environment | Ubuntu 16.04 |
| Programming language | C++, Python |
| Test environment | Laboratory building (30 × 20 m²) |

[0034] Table 1

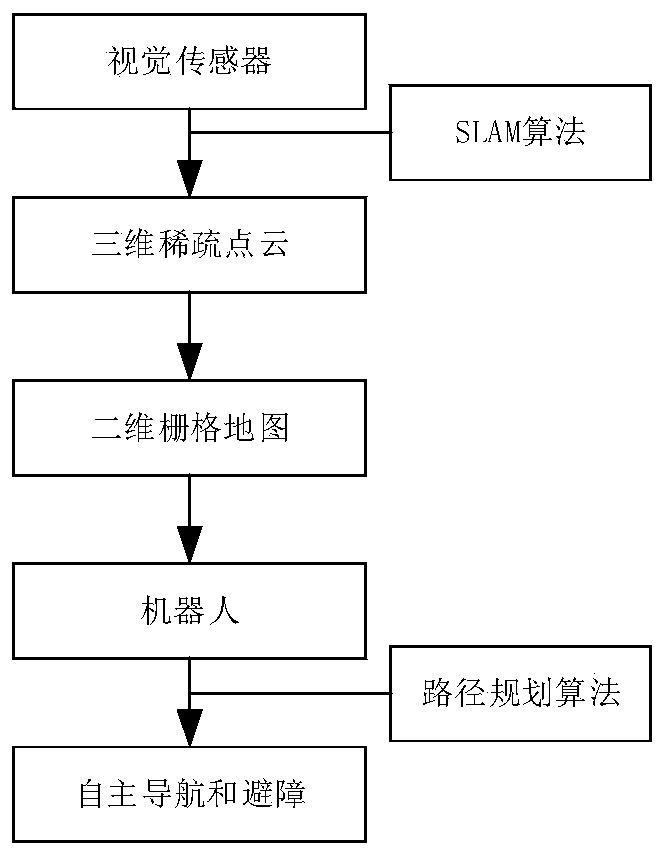

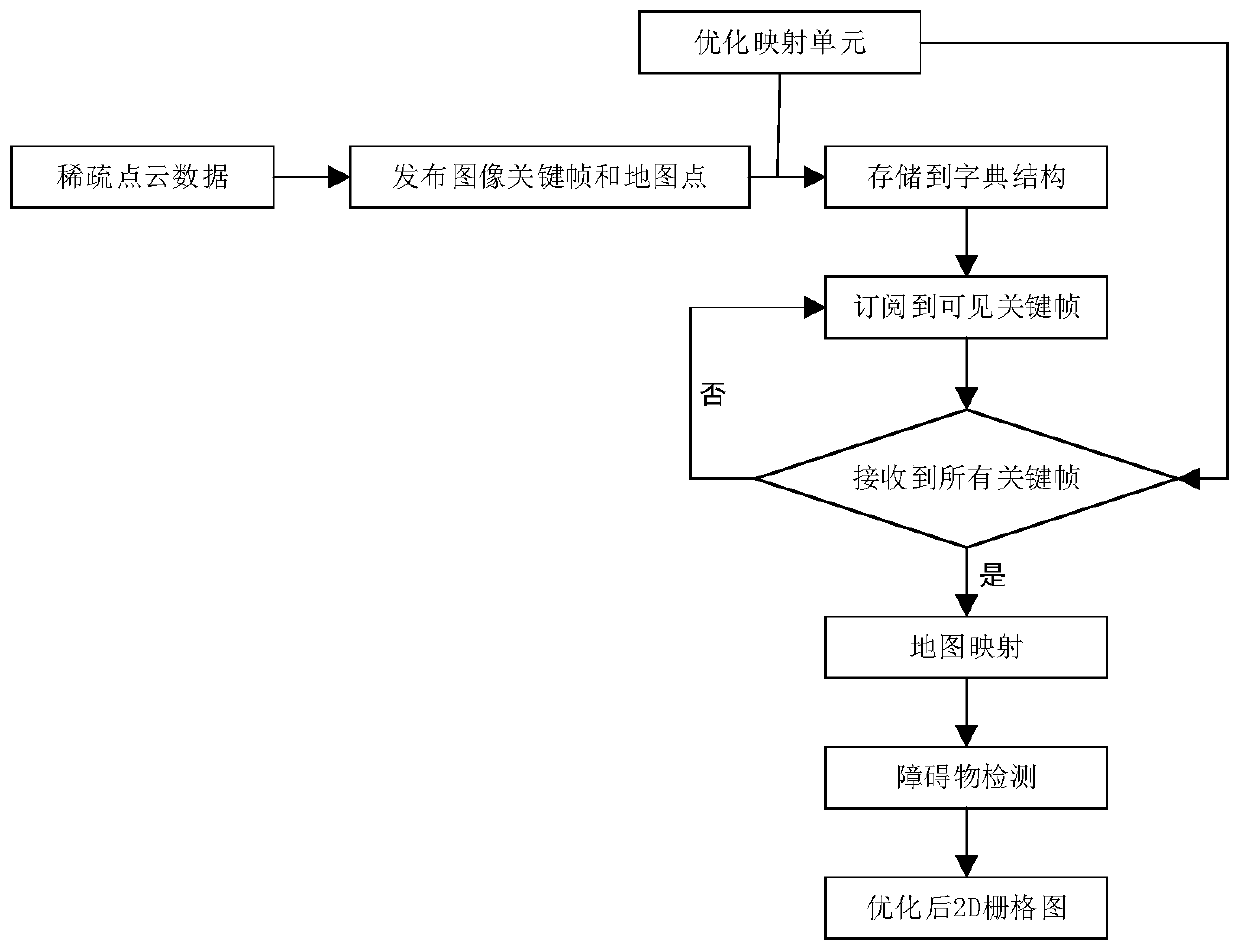

[0035] As shown in Figure 1, the implementation method of the present invention based on 3D sparse point cloud gener...
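Counting projected points marks obstacles but leaves free space unknown. A widely used complement, shown here as an assumption rather than as the patent's disclosed step, is to trace a ray from the camera's grid cell to each observed point's cell with Bresenham's line algorithm, lowering the log-odds of the cells the ray passes through and raising it at the endpoint. The names `bresenham` and `update_grid` and the log-odds increments are illustrative and hypothetical.

```python
from typing import Dict, Iterable, List, Tuple

Cell = Tuple[int, int]


def bresenham(start: Cell, end: Cell) -> List[Cell]:
    """Return the integer grid cells on the line from start to end, inclusive.

    Standard all-octant Bresenham line algorithm.
    """
    x0, y0 = start
    x1, y1 = end
    dx, dy = abs(x1 - x0), -abs(y1 - y0)
    sx = 1 if x0 < x1 else -1
    sy = 1 if y0 < y1 else -1
    err = dx + dy
    cells: List[Cell] = []
    while True:
        cells.append((x0, y0))
        if (x0, y0) == (x1, y1):
            break
        e2 = 2 * err
        if e2 >= dy:  # step in x
            err += dy
            x0 += sx
        if e2 <= dx:  # step in y
            err += dx
            y0 += sy
    return cells


def update_grid(log_odds: Dict[Cell, float], camera: Cell,
                points: Iterable[Cell],
                l_free: float = -0.4, l_occ: float = 0.85) -> None:
    """Hypothetical log-odds update for one keyframe (increments are assumed).

    Every cell strictly between the camera and an observed point is
    treated as free; the point's own cell is treated as occupied.
    """
    for p in points:
        ray = bresenham(camera, p)
        for cell in ray[:-1]:
            log_odds[cell] = log_odds.get(cell, 0.0) + l_free
        log_odds[p] = log_odds.get(p, 0.0) + l_occ
```

A cell with positive accumulated log-odds can then be rendered as occupied, a negative one as free, and a cell with no entry as unknown. Accumulating evidence over many keyframes, instead of overwriting cells, makes the map tolerant of occasional spurious points.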
