SLAM-based visual perception mapping algorithm and mobile robot

A visual perception and robotics technology in the field of visual perception and mobile robots that addresses problems such as the inability to build large-scale maps, inaccurate pose estimation, and limited functionality.

Embodiment Construction

[0057] Part I, Algorithms

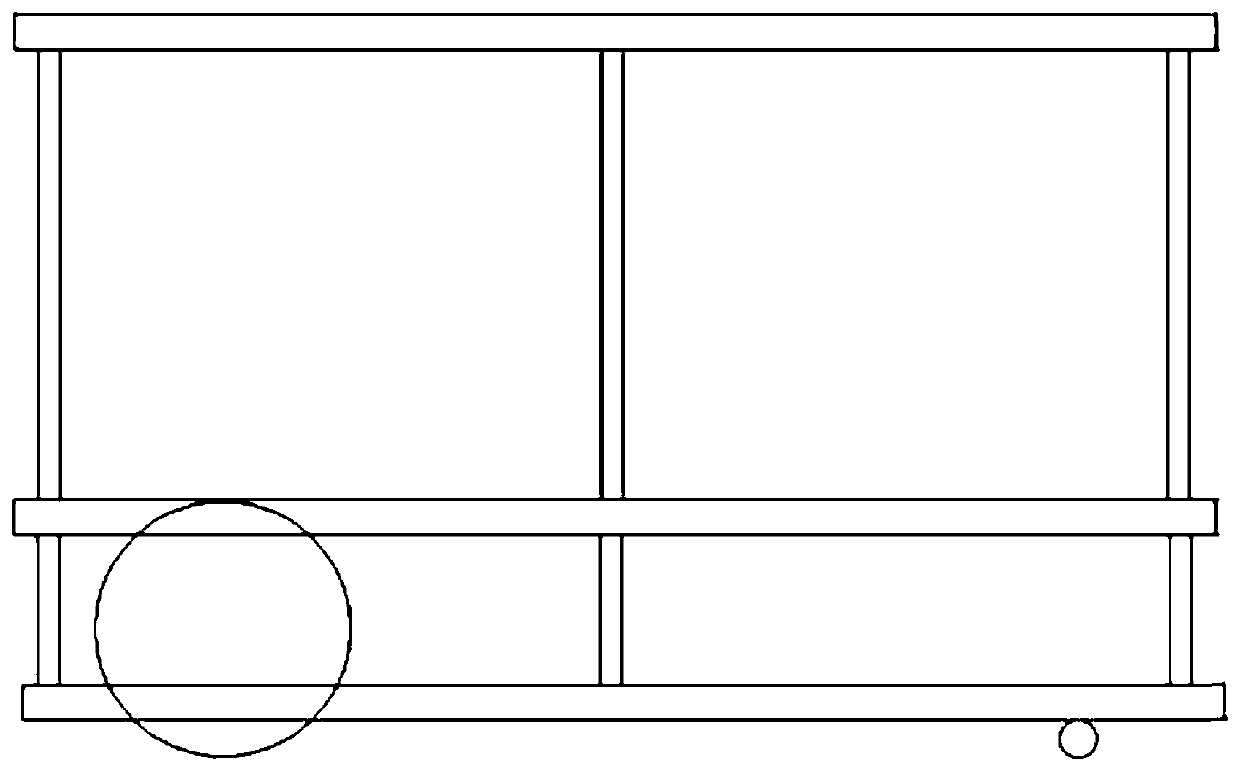

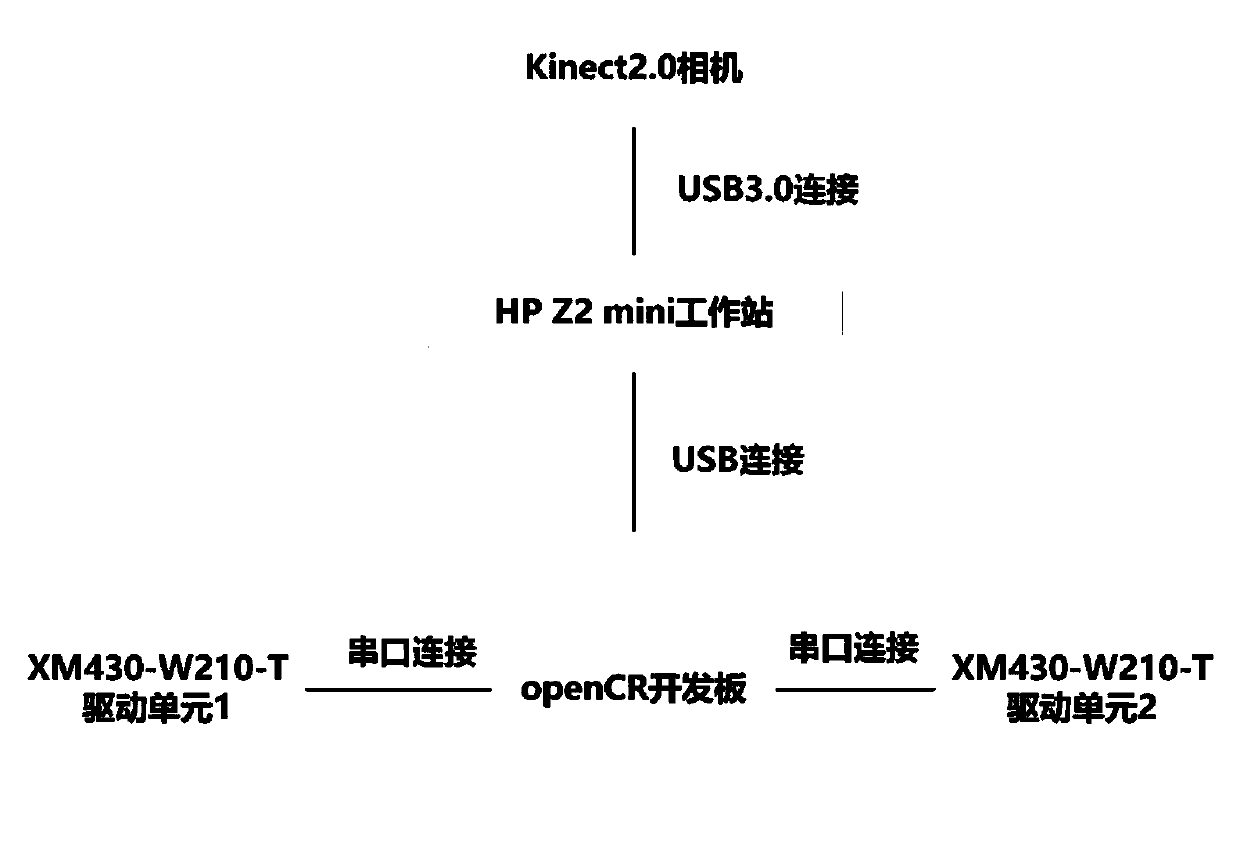

[0058] The invention discloses a SLAM-based visual perception mapping algorithm. First, the image data collected by the depth camera mounted on the robot is fused with the rotational speed data collected by the encoder installed at the robot's driving wheel. The depth camera used is a Kinect 2.0, and the image data it collects is divided into two parts, a color image and a depth image, which are put into correspondence through a unified timestamp; image features are then extracted from the color image. The rotational speed data is processed according to the kinematic equations and fused and optimized together with the depth image: by minimizing the encoder and reprojection errors, the pose of the robot at a given moment is obtained and the pose information is output. The image data of the current frame obtained while the robot moves through the area to be mapped is then screened, obtaining key ...
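The paragraph states only that the color and depth images are matched through a unified timestamp; it does not say how. A minimal sketch, assuming timestamps in seconds and simple nearest-neighbour matching with a tolerance (the name `associate_by_timestamp` and the 20 ms default for `max_dt` are hypothetical choices, not from the source):

```python
from bisect import bisect_left
from typing import List, Tuple


def associate_by_timestamp(
    color_stamps: List[float],
    depth_stamps: List[float],
    max_dt: float = 0.02,  # hypothetical tolerance in seconds
) -> List[Tuple[int, int]]:
    """Pair each color frame with the depth frame closest in time.

    Returns (color_index, depth_index) pairs. A color frame is dropped when no
    depth frame lies within max_dt seconds. depth_stamps must be sorted ascending.
    """
    pairs = []
    for ci, t in enumerate(color_stamps):
        j = bisect_left(depth_stamps, t)
        best = None  # (depth_index, time_difference)
        # Only the depth stamps just before and just after t can be nearest.
        for k in (j - 1, j):
            if 0 <= k < len(depth_stamps):
                dt = abs(depth_stamps[k] - t)
                if dt <= max_dt and (best is None or dt < best[1]):
                    best = (k, dt)
        if best is not None:
            pairs.append((ci, best[0]))
    return pairs
```

For example, color stamps `[0.0, 0.033, 0.066]` against depth stamps `[0.001, 0.035, 0.100]` pair the first two frames and drop the third, whose nearest depth frame is more than 20 ms away. If the camera driver already delivers both images with one shared stamp, this step reduces to an exact-match lookup.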
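The source says the wheel rotational speeds are "processed according to the kinematic equations" without giving them. One common choice is a two-wheel differential-drive model; the sketch below assumes that model, wheel angular speeds in rad/s, and hypothetical parameters `wheel_radius` and `wheel_base`. It is an illustration, not the patent's own equations.

```python
import math
from dataclasses import dataclass


@dataclass
class Pose2D:
    x: float = 0.0      # metres
    y: float = 0.0      # metres
    theta: float = 0.0  # heading, radians


def integrate_wheel_odometry(pose: Pose2D, omega_left: float, omega_right: float,
                             dt: float, wheel_radius: float, wheel_base: float) -> Pose2D:
    """Advance a planar pose from wheel angular speeds (rad/s) over dt seconds.

    Assumes a two-wheel differential drive; wheel_base is the distance between
    the driving wheels. Position is integrated along the midpoint heading.
    """
    v = wheel_radius * (omega_right + omega_left) / 2.0        # linear speed (m/s)
    w = wheel_radius * (omega_right - omega_left) / wheel_base  # yaw rate (rad/s)
    mid_heading = pose.theta + 0.5 * w * dt
    x = pose.x + v * dt * math.cos(mid_heading)
    y = pose.y + v * dt * math.sin(mid_heading)
    new_theta = pose.theta + w * dt
    theta = math.atan2(math.sin(new_theta), math.cos(new_theta))  # wrap to (-pi, pi]
    return Pose2D(x, y, theta)
```

Equal wheel speeds give straight-line motion, and opposite speeds rotate the robot in place. Calling the function once per encoder sample yields the encoder-based pose prediction that the paragraph above fuses with the camera data.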
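The source gives no cost function, state parameterization, or solver for "minimizing the encoder and re-projection errors". The toy sketch below shows the idea under loudly stated assumptions: a planar 3-DoF pose `(x, y, theta)`; a camera at the robot origin facing the heading; hypothetical intrinsics `FX, FY, CX, CY`; landmarks with known world coordinates (for example, back-projected from an earlier depth image); hypothetical weights `w_enc` and `w_proj`; and a Gauss-Newton solver with a numerical Jacobian. All function names are illustrative.

```python
import math
from typing import List, Sequence, Tuple

# Hypothetical pinhole intrinsics in pixels (not taken from the source).
FX, FY, CX, CY = 525.0, 525.0, 319.5, 239.5

Pose = Sequence[float]               # (x, y, theta) of a planar robot
Point3 = Tuple[float, float, float]  # landmark (X, Y, Z) in the world frame


def wrap(a: float) -> float:
    """Wrap an angle to (-pi, pi]."""
    return math.atan2(math.sin(a), math.cos(a))


def project(pose: Pose, lm: Point3) -> Tuple[float, float]:
    """Pinhole projection for a camera at the robot origin facing its heading.

    Image u grows to the robot's right, v grows downward; Z is height
    relative to the camera.
    """
    x, y, th = pose
    dx, dy = lm[0] - x, lm[1] - y
    forward = math.cos(th) * dx + math.sin(th) * dy
    left = -math.sin(th) * dx + math.cos(th) * dy
    return FX * (-left) / forward + CX, FY * (-lm[2]) / forward + CY


def residuals(pose, odom, landmarks, pixels, w_enc, w_proj) -> List[float]:
    """Stack weighted encoder (pose vs. odometry) and reprojection residuals."""
    se, sp = math.sqrt(w_enc), math.sqrt(w_proj)
    r = [se * (pose[0] - odom[0]), se * (pose[1] - odom[1]),
         se * wrap(pose[2] - odom[2])]
    for lm, (u_obs, v_obs) in zip(landmarks, pixels):
        u, v = project(pose, lm)
        r += [sp * (u - u_obs), sp * (v - v_obs)]
    return r


def cost(r: List[float]) -> float:
    """Sum of squared residuals."""
    return sum(e * e for e in r)


def solve3(A, b) -> List[float]:
    """Solve a 3x3 system by Gaussian elimination with partial pivoting."""
    M = [list(row) + [bi] for row, bi in zip(A, b)]
    for c in range(3):
        p = max(range(c, 3), key=lambda i: abs(M[i][c]))
        M[c], M[p] = M[p], M[c]
        for i in range(c + 1, 3):
            f = M[i][c] / M[c][c]
            for j in range(c, 4):
                M[i][j] -= f * M[c][j]
    x = [0.0, 0.0, 0.0]
    for i in (2, 1, 0):
        x[i] = (M[i][3] - sum(M[i][j] * x[j] for j in range(i + 1, 3))) / M[i][i]
    return x


def fuse_pose(odom, landmarks, pixels, w_enc=100.0, w_proj=1.0, iters=10):
    """Gauss-Newton over (x, y, theta), starting from the odometry prediction."""
    pose, h = list(odom), 1e-6
    for _ in range(iters):
        r = residuals(pose, odom, landmarks, pixels, w_enc, w_proj)
        cols = []  # numerical Jacobian, stored one column per pose parameter
        for k in range(3):
            p = pose[:]
            p[k] += h
            rk = residuals(p, odom, landmarks, pixels, w_enc, w_proj)
            cols.append([(a - b) / h for a, b in zip(rk, r)])
        # Normal equations: (J^T J) delta = -J^T r
        A = [[sum(a * b for a, b in zip(cols[i], cols[j])) for j in range(3)]
             for i in range(3)]
        g = [-sum(a * e for a, e in zip(cols[i], r)) for i in range(3)]
        delta = solve3(A, g)
        pose = [pose[0] + delta[0], pose[1] + delta[1], wrap(pose[2] + delta[2])]
        if max(abs(d) for d in delta) < 1e-9:
            break
    return tuple(pose)
```

With a drifted odometry estimate and accurate pixel observations, the optimized pose moves toward the pose the observations imply. The weights set the balance between the two terms and would normally come from the encoder and feature-detection noise levels. A full system would more likely optimize 6-DoF poses over several frames; the source does not specify this.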
