Video object segmentation method based on a dual-module neural network structure

A video object segmentation technique based on a neural network structure, applied in the field of computer vision. It addresses problems such as complex backgrounds, occlusion, and the inability to segment efficiently, and achieves the effects of enhancing discrimination, suppressing the influence of noise, and saving costs.


Embodiment

[0105] The experimental hardware environment of the present invention is a PC with a 3.4 GHz Intel(R) Core(TM) i5-7500 CPU, a GTX 1080 Ti GPU, and 16 GB of memory, running the Ubuntu 18.04 operating system, with the implementation based on the open-source PyTorch deep learning framework. Images of size 854x480 are used for training and testing. The data for the test results (see Figures 4 and 5) come from the public DAVIS video image segmentation dataset.

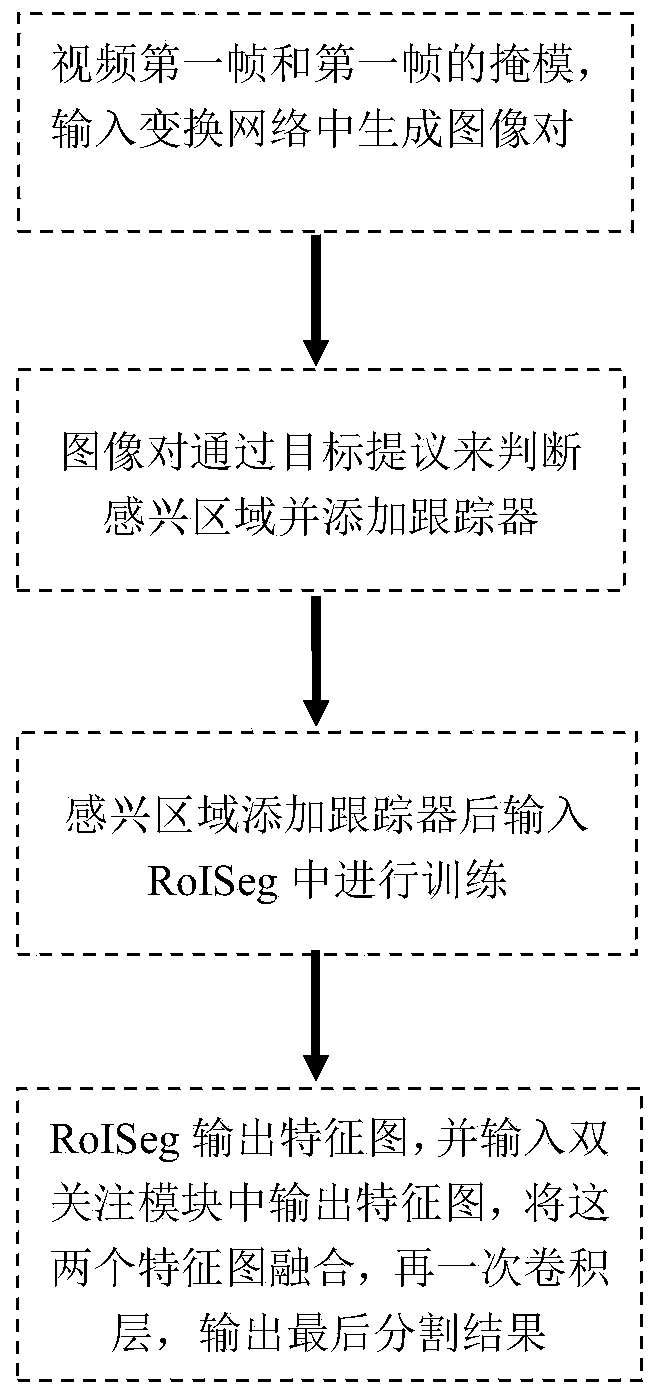

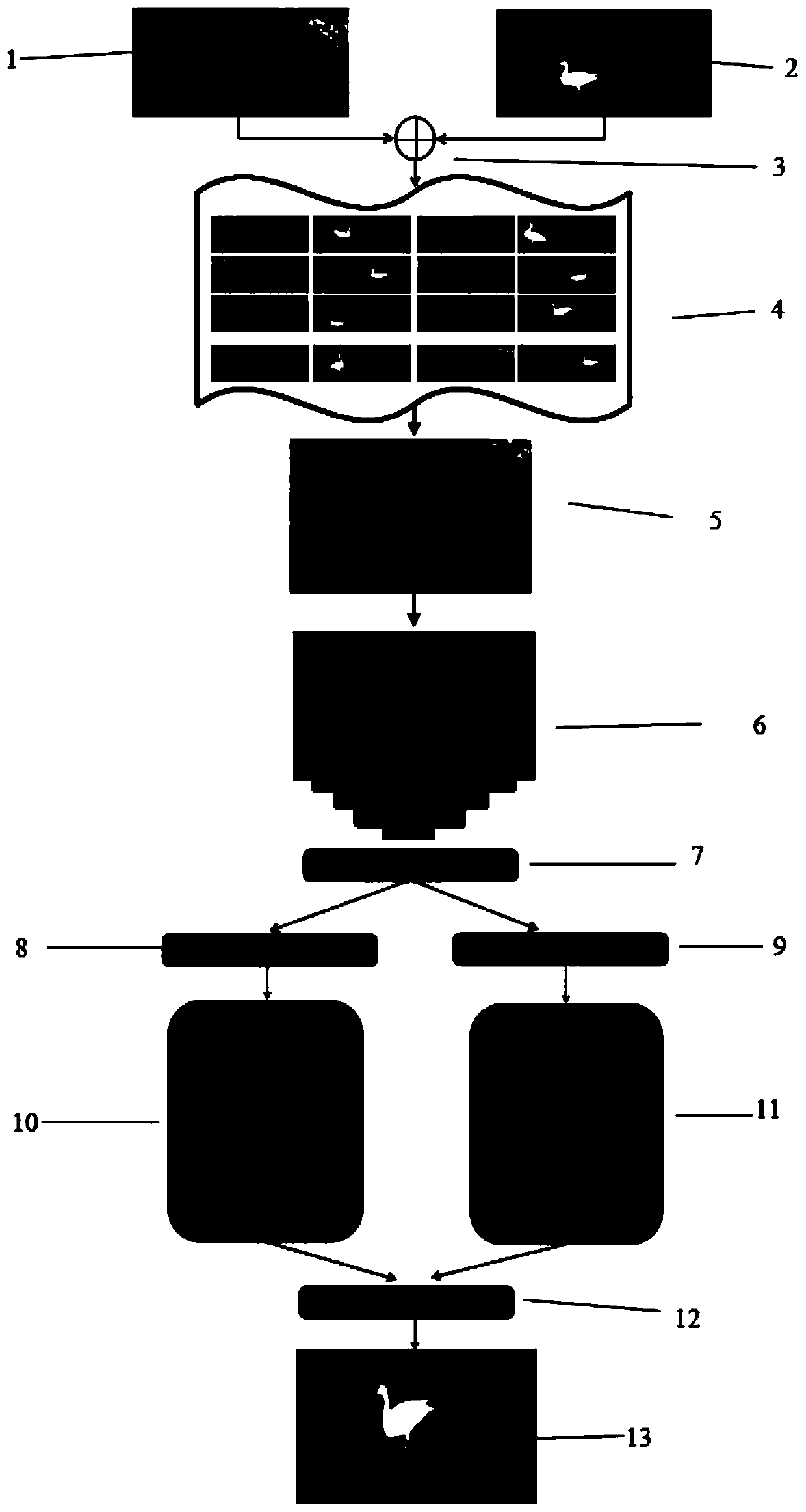

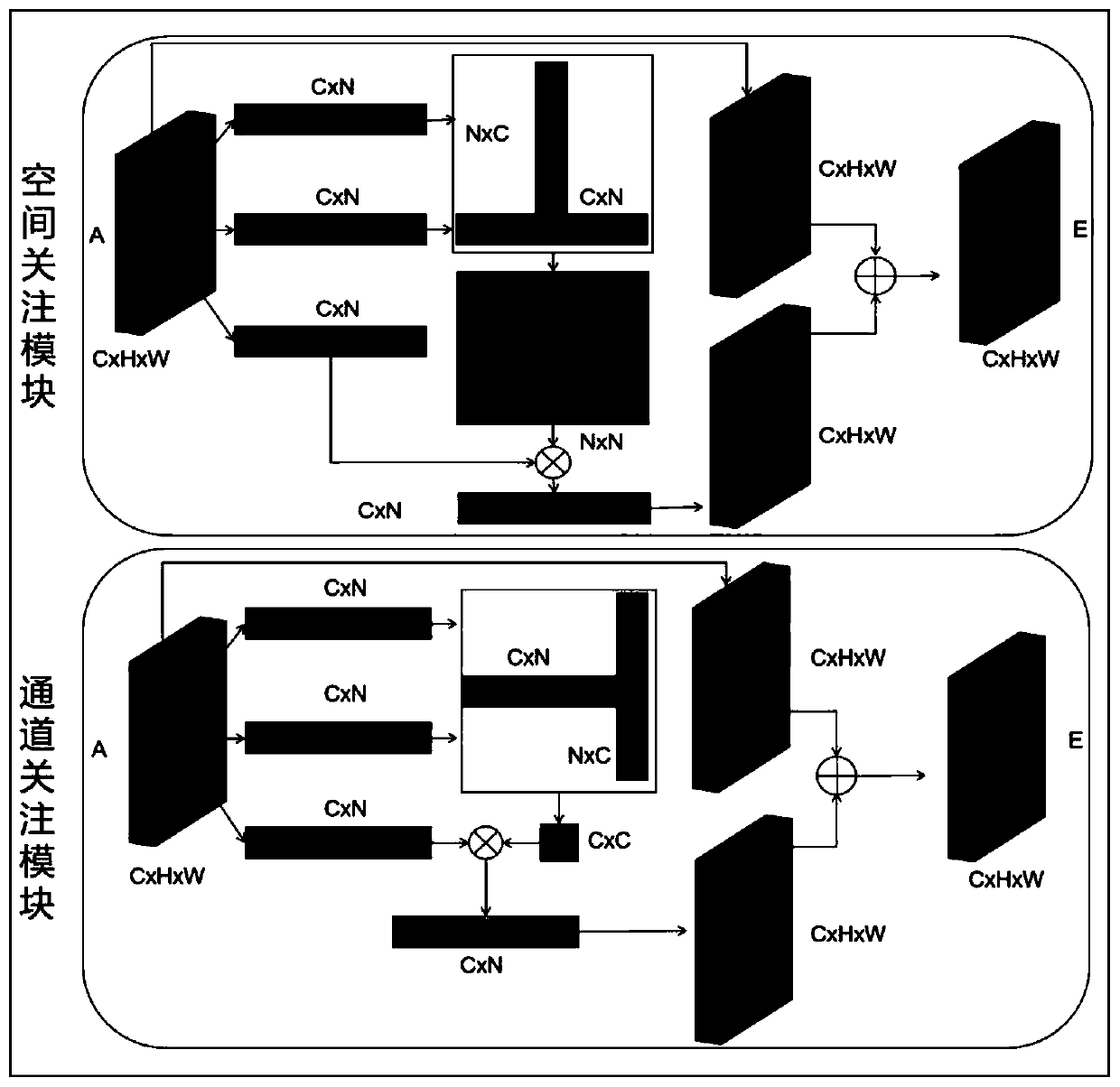

[0106] First, the first frame and the mask of the first frame are given (1 and 2 in Figure 2). Image pairs 1 to 100 are generated by the transformation network (4 in Figure 2). Candidate regions of interest are selected using the target proposal box (5 in Figure 2). After the tracker is added to the region of interest, the result is input into the RoISeg network for training (6 in Figure 2). The output feature maps from the last convolutional layer in the RoISeg network (7 in Figure 2) are respectively input into the spatial attention mo...
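The first two steps of this pipeline (generating 100 image pairs from the first frame and its mask, then selecting a region of interest with a proposal box) can be illustrated with a minimal sketch. It rests on several assumptions: the text does not say which transformations are used, so random horizontal flips and small integer shifts stand in for them; the proposal box is approximated by a padded bounding box around the mask; and plain Python lists are used instead of PyTorch tensors to keep the example self-contained. All function names (`transform_pair`, `generate_pairs`, `proposal_box`, `crop`) are hypothetical and do not come from the patent.

```python
import random


def transform_pair(image, mask, rng, max_shift=2):
    """Apply one random flip and shift identically to an image and its mask.

    Assumption: flips and integer shifts stand in for the unspecified
    transformations mentioned in the text.
    """
    flip = rng.random() < 0.5
    dy = rng.randint(-max_shift, max_shift)
    dx = rng.randint(-max_shift, max_shift)

    def warp(grid):
        h, w = len(grid), len(grid[0])
        out = [[0] * w for _ in range(h)]  # pixels shifted in from outside are 0
        for y in range(h):
            for x in range(w):
                src_x = w - 1 - x if flip else x
                ty, tx = y + dy, x + dx
                if 0 <= ty < h and 0 <= tx < w:
                    out[ty][tx] = grid[y][src_x]
        return out

    # The same flip and shift are used for both, so the pair stays aligned.
    return warp(image), warp(mask)


def generate_pairs(image, mask, count=100, seed=0):
    """Generate `count` aligned (image, mask) pairs from the first frame."""
    rng = random.Random(seed)
    return [transform_pair(image, mask, rng) for _ in range(count)]


def proposal_box(mask, margin=1):
    """Return an inclusive (top, left, bottom, right) box around the mask.

    Assumption: a padded bounding box approximates the target proposal box.
    Returns None when the mask is empty.
    """
    h, w = len(mask), len(mask[0])
    rows = [y for y in range(h) if any(mask[y])]
    cols = [x for x in range(w) if any(mask[y][x] for y in range(h))]
    if not rows:
        return None
    return (
        max(rows[0] - margin, 0),
        max(cols[0] - margin, 0),
        min(rows[-1] + margin, h - 1),
        min(cols[-1] + margin, w - 1),
    )


def crop(grid, box):
    """Cut the region of interest described by an inclusive box."""
    top, left, bottom, right = box
    return [row[left:right + 1] for row in grid[top:bottom + 1]]
```

In a real setting the cropped regions, rather than full 854x480 frames, would be what the segmentation network receives; applying the identical transformation to the image and the mask is what keeps each generated pair usable as a training sample.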
