An optimized cache system based on edge computing framework and its application

An edge computing and caching system technology, applied in transmission systems, wireless communications, electrical components, etc., that addresses problems such as the lack of cache life cycle considerations and resource waste, with the effect of shortening network response time and reducing latency.

Examples

Embodiment 1

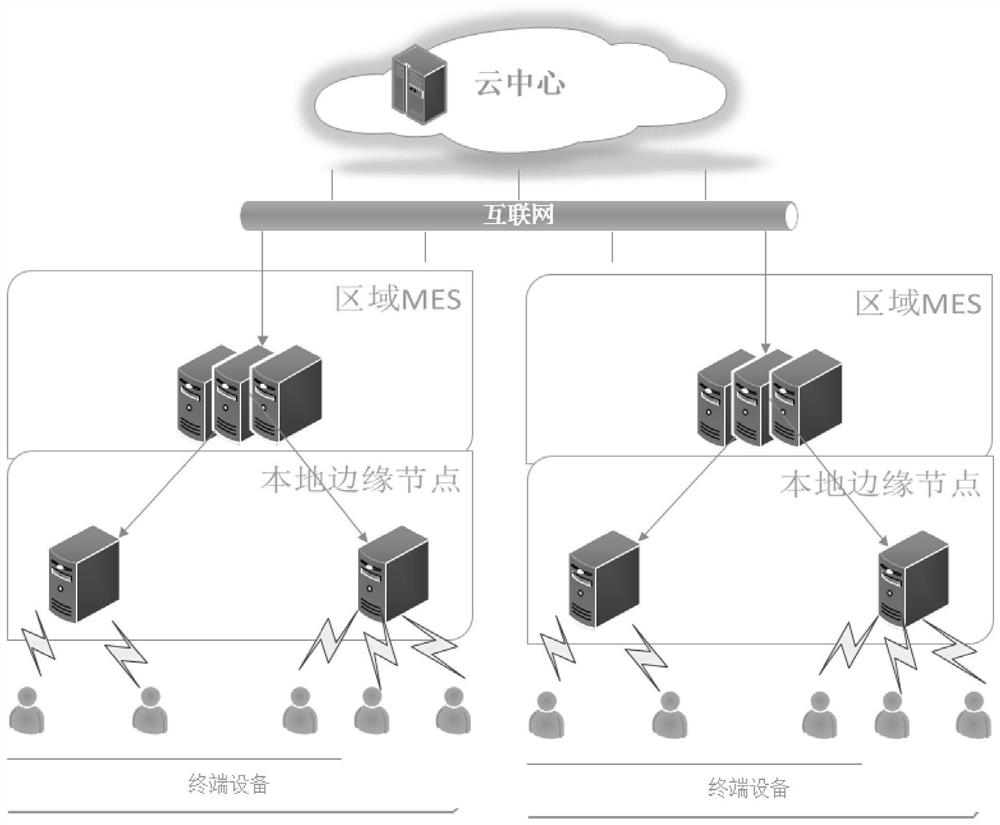

[0065] An optimized caching system based on an edge computing framework, including multiple local area networks, all of which are connected to the cloud center through the Internet;

[0066] Each local area network is an independent edge node cluster. The edge node cluster includes routers, first-level switches, second-level switches and regional edge servers connected in sequence from top to bottom. The second-level switches are connected downward to local edge nodes and user terminal equipment;

[0067] The router connects upward to the Internet, and the first-level switch connects downward to the second-level switch and the regional edge server;

[0068] The router connects the local area network to the Internet; the first-level switch and the second-level switch provide connection and networking within the local area network; the regional edge server stores the hot content of the entire local area network, and is responsible for the data tag ...

Embodiment 2

[0072] The optimized caching system based on an edge computing framework is as described in Embodiment 1, except that:

[0073] Redis is installed on the regional edge server as a database to store the data tags of cache resources and the reachability table of other nearby edge node clusters;

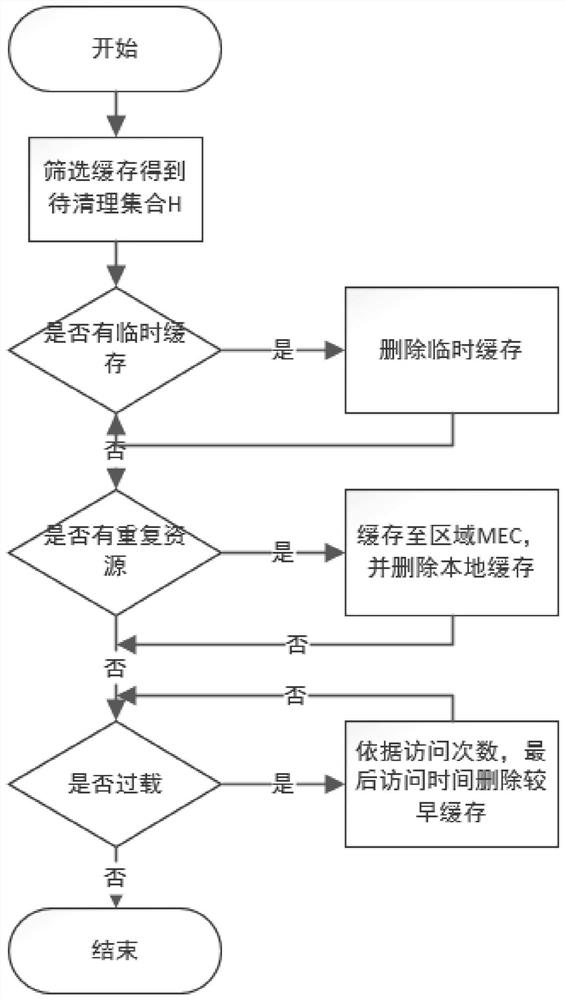

[0074] The data tag records information about a cache resource: its URI, file size, number of visits, storage path, whether it is a temporary cache, and the generation time of the data tag. The number of visits comprises the total number of visits across the entire local area network and the number of visits at each local edge node. The threshold p0 for total visits across the entire local area network is 125% of the number of users in the entire local area network; the threshold pi for visits at each local edge node is the number of users in the network where the i-th local edge node is located; through the whole local area network The...
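The data tag fields and the two thresholds can be illustrated with a minimal Python sketch. The class name, field names, the byte unit for file size, and the `to_hash` flattening (a layout that could be written to a Redis hash) are assumptions made for illustration; the source only lists the recorded information and the threshold definitions.

```python
import time
from dataclasses import dataclass, field
from typing import Dict


@dataclass
class DataTag:
    """Metadata for one cache resource (names here are illustrative)."""
    uri: str
    file_size: int                      # assumed to be in bytes
    storage_path: str
    is_temporary: bool
    created_at: float = field(default_factory=time.time)  # tag generation time
    total_visits: int = 0               # visits across the entire LAN
    node_visits: Dict[str, int] = field(default_factory=dict)  # per local edge node

    def record_visit(self, node_id: str) -> None:
        """Count one visit arriving through the given local edge node."""
        self.total_visits += 1
        self.node_visits[node_id] = self.node_visits.get(node_id, 0) + 1

    def to_hash(self) -> Dict[str, str]:
        """Flatten to string fields (assumed layout, e.g. for a Redis hash)."""
        flat = {
            "uri": self.uri,
            "file_size": str(self.file_size),
            "storage_path": self.storage_path,
            "is_temporary": "1" if self.is_temporary else "0",
            "created_at": repr(self.created_at),
            "total_visits": str(self.total_visits),
        }
        for node_id, count in self.node_visits.items():
            flat[f"visits:{node_id}"] = str(count)
        return flat


def lan_threshold(lan_users: int) -> float:
    """p0: 125% of the number of users in the entire LAN."""
    return 1.25 * lan_users


def node_threshold(node_users: int) -> int:
    """p_i: the number of users in the i-th local edge node's network."""
    return node_users
```

For example, a LAN with 80 users gives p0 = 100 total visits, while a local edge node serving 20 users gives pi = 20.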

Embodiment 3

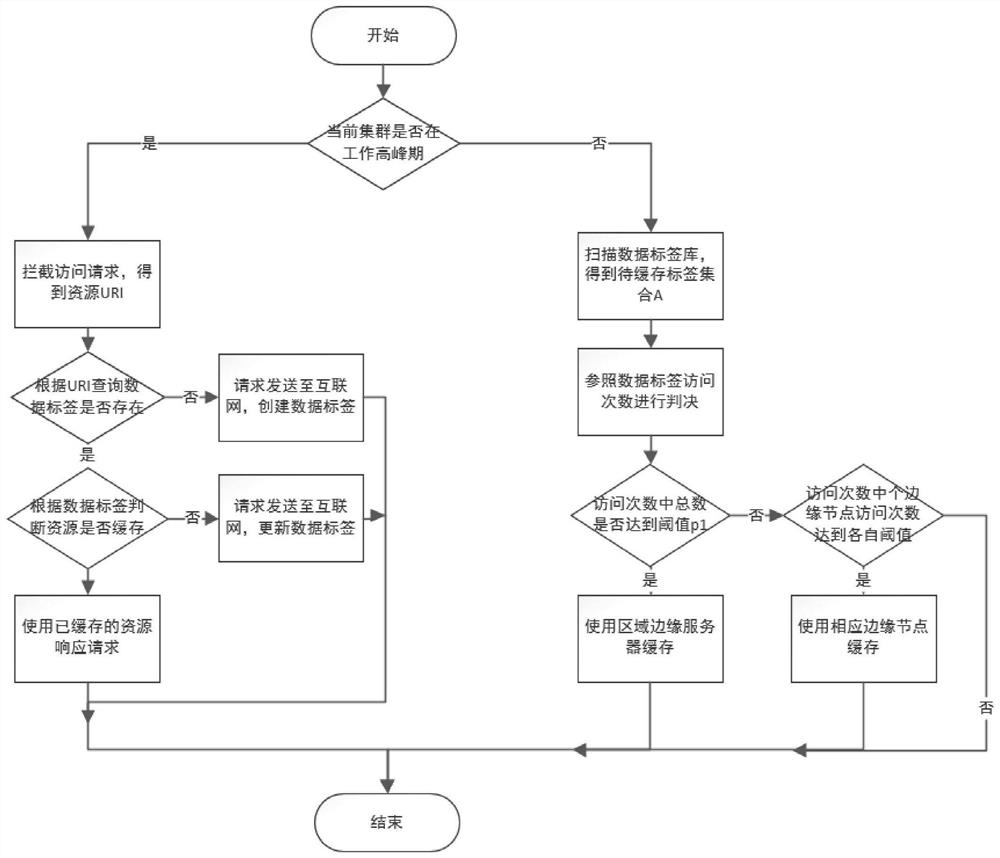

[0077] A cache placement and usage method for the optimized cache system based on an edge computing framework described in Embodiment 2. As shown in Figure 2, the operation of the optimized caching system is divided into two periods, a peak working period and a non-peak working period, and the method includes the following steps:

[0078] (1) Determine whether the current edge node cluster is in the peak working period. If it is, the system is responsible for serving requests from the cache during the peak period, and proceeds to step (4); otherwise, during the non-peak period, the system is responsible for cache placement, and proceeds to step (2);

[0079] (2) The optimized caching system automatically polls the database and selects the data tags whose access counts reach the threshold, including the data tags whose total access count in the entire local area network reaches the threshold p0 and ...
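Steps (1) and (2) can be sketched roughly as follows. This is an illustration under stated assumptions, not the patented procedure: the source does not say how the peak period is detected, so the fixed hour window in `is_peak` is hypothetical, and because the text of step (2) is truncated after "p0 and", the per-node comparison against pi is inferred from the threshold definitions in Embodiment 2. All names are made up for the example.

```python
from typing import Dict, Iterable, List, NamedTuple, Tuple


class TagCounts(NamedTuple):
    """Visit counters of one data tag (illustrative structure)."""
    uri: str
    total_visits: int
    node_visits: Dict[str, int]


def is_peak(hour: int, peak_hours: Tuple[int, int] = (8, 18)) -> bool:
    """Hypothetical peak test: True when start <= hour < end."""
    start, end = peak_hours
    return start <= hour < end


def next_step(hour: int) -> int:
    """Step (1): go to step 4 (serve from cache) at peak, else step 2 (placement)."""
    return 4 if is_peak(hour) else 2


def select_hot_tags(
    tags: Iterable[TagCounts],
    lan_users: int,
    node_users: Dict[str, int],
) -> Tuple[List[str], Dict[str, List[str]]]:
    """Step (2): pick the tags whose visit counts reach their thresholds."""
    p0 = 1.25 * lan_users                   # LAN-wide threshold
    lan_hot: List[str] = []
    node_hot: Dict[str, List[str]] = {}
    for tag in tags:
        if tag.total_visits >= p0:
            lan_hot.append(tag.uri)
        for node_id, visits in tag.node_visits.items():
            p_i = node_users.get(node_id)   # per-node threshold (assumed use)
            if p_i is not None and visits >= p_i:
                node_hot.setdefault(node_id, []).append(tag.uri)
    return lan_hot, node_hot
```

In this sketch a tag can qualify at both levels at once; how the system treats that case is not visible in the truncated text.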
