Virtual reality system space positioning method based on depth perception

Depth perception and virtual reality technology, applied in the field of virtual reality, addresses problems such as the inability to interact correctly, the inability to correct the relationship between the user's own position and the virtual target position, and the inability to position the virtual camera.

Embodiment

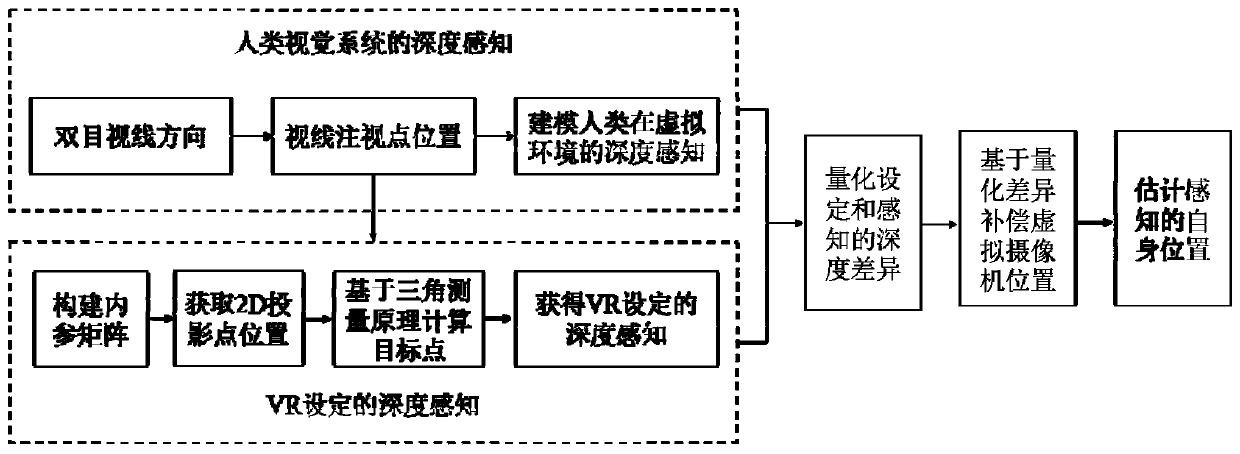

[0061] A spatial positioning method for virtual reality systems based on depth perception, as shown in Figure 2, includes the following steps:

[0062] Step S1: Model the depth perception of the human visual system in the virtual space based on the visual fixation point. The specific implementation steps are as follows:

[0063] S1.1. Real-time tracking of the gaze point:

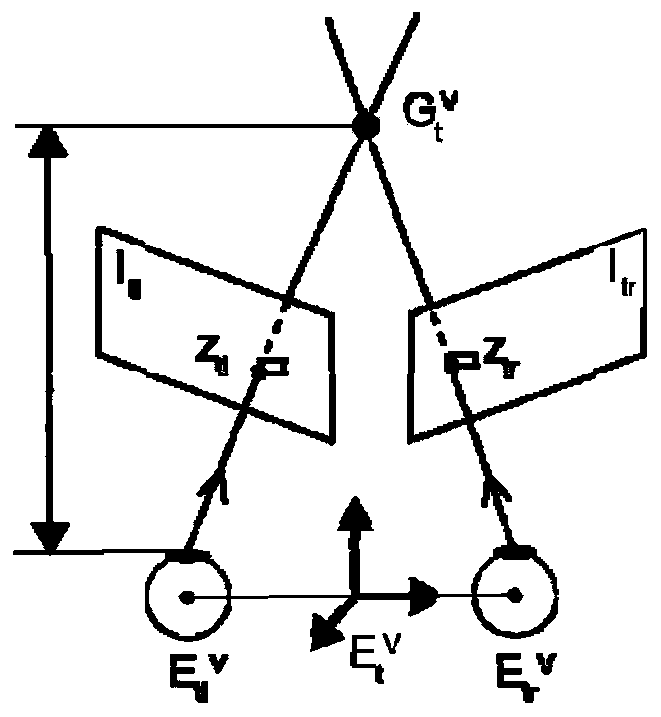

[0064] At time t, when the human eyes gaze at a target point in virtual space through the binocular parallax images $I_{tl}$ and $I_{tr}$ displayed on the left and right displays of the VR helmet, the binocular line-of-sight directions are obtained by a gaze-tracking algorithm based on the pupil-corneal reflection technique. The point in space at which the two lines of sight are closest to each other is then solved using spatial analytic geometry; this point is the visual fixation point. The coordinates of the 3D gaze point in the space coordinate system are […]. The superscript V represents ...
