328 results about "Virtual cinematography" patented technology

Virtual cinematography is the set of cinematographic techniques performed in a computer graphics environment. It covers a wide range of subjects, including photographing real objects, often with a stereo or multi-camera setup, in order to recreate them as three-dimensional objects, and algorithms for automatically creating real and simulated camera angles.

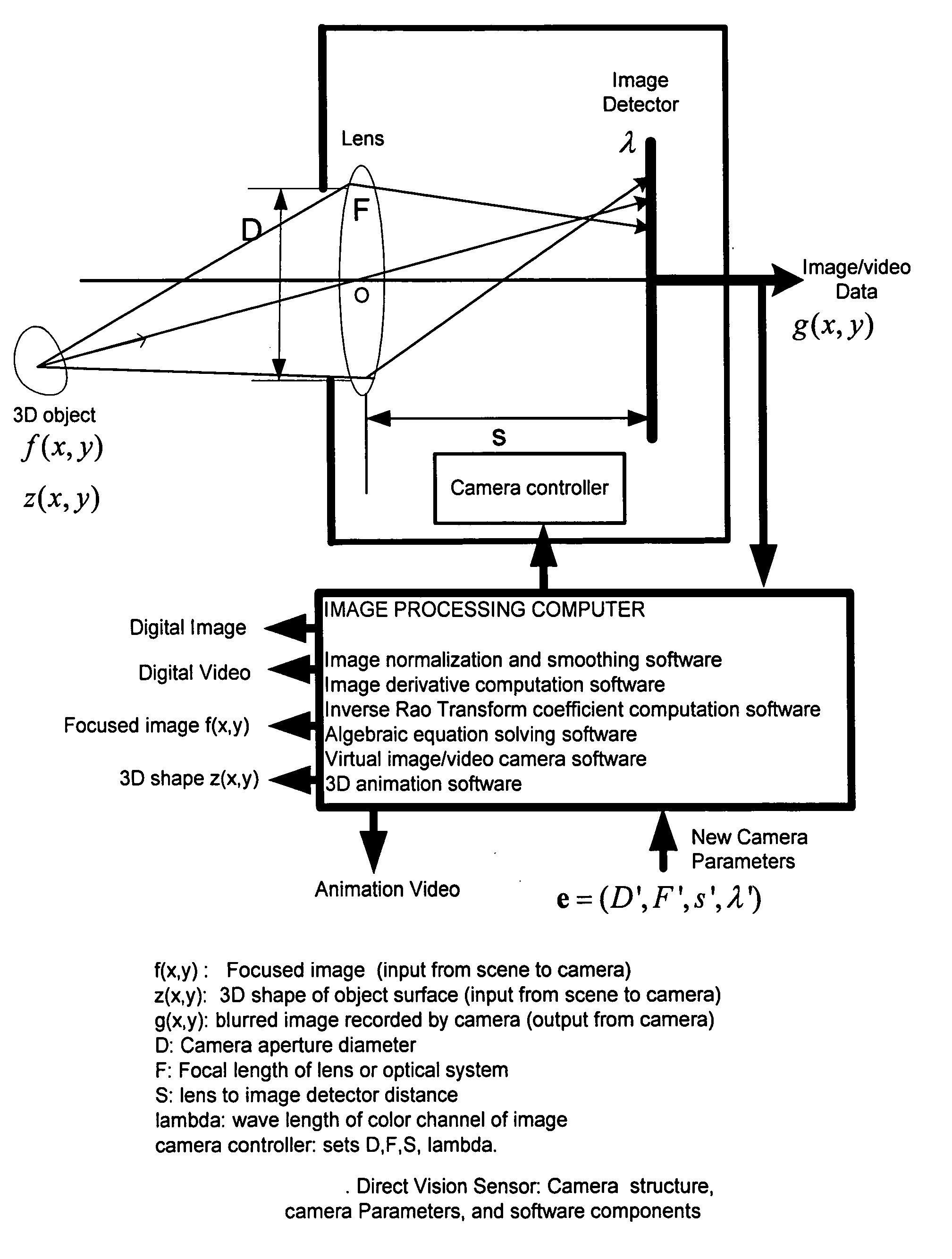

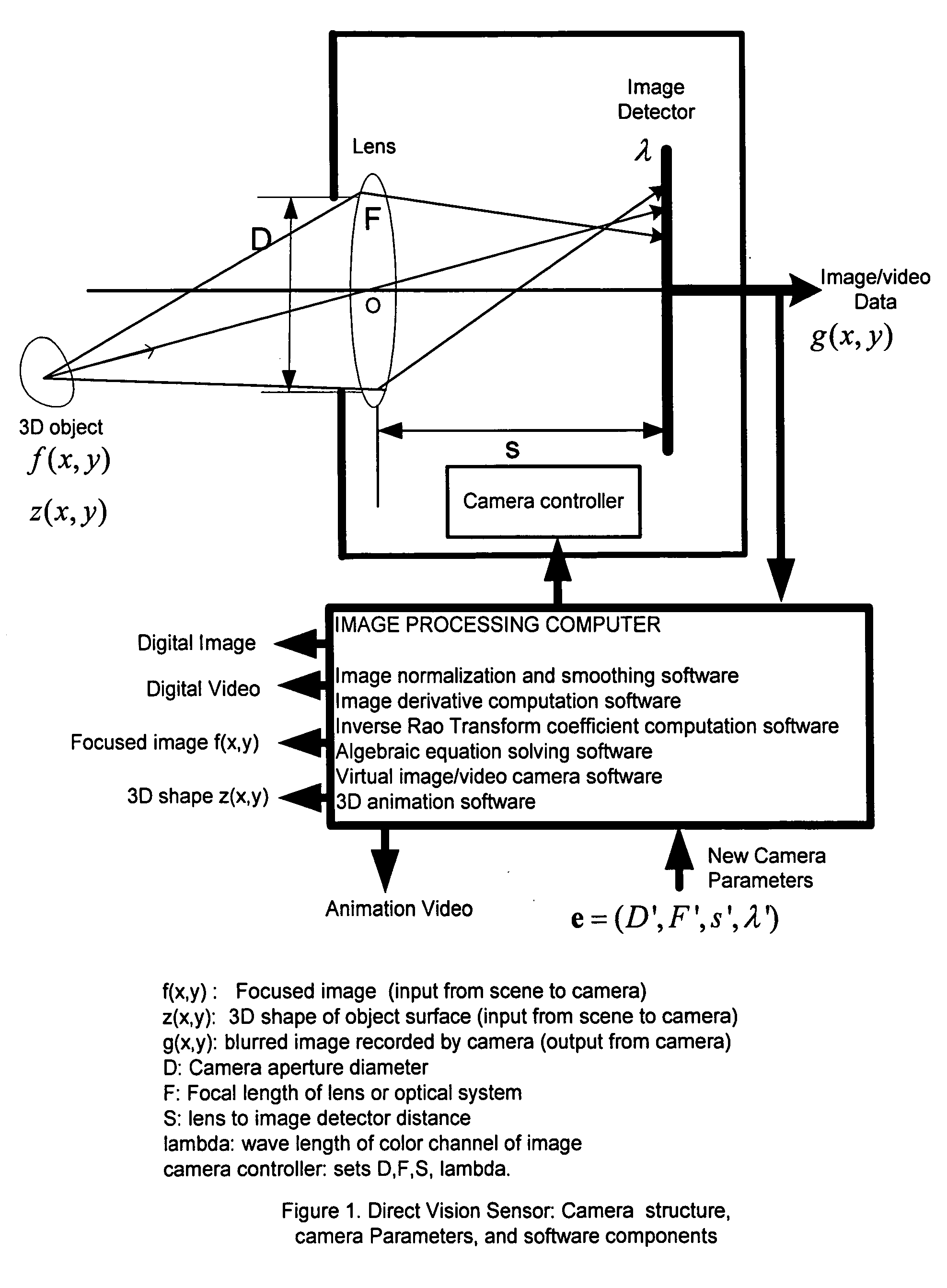

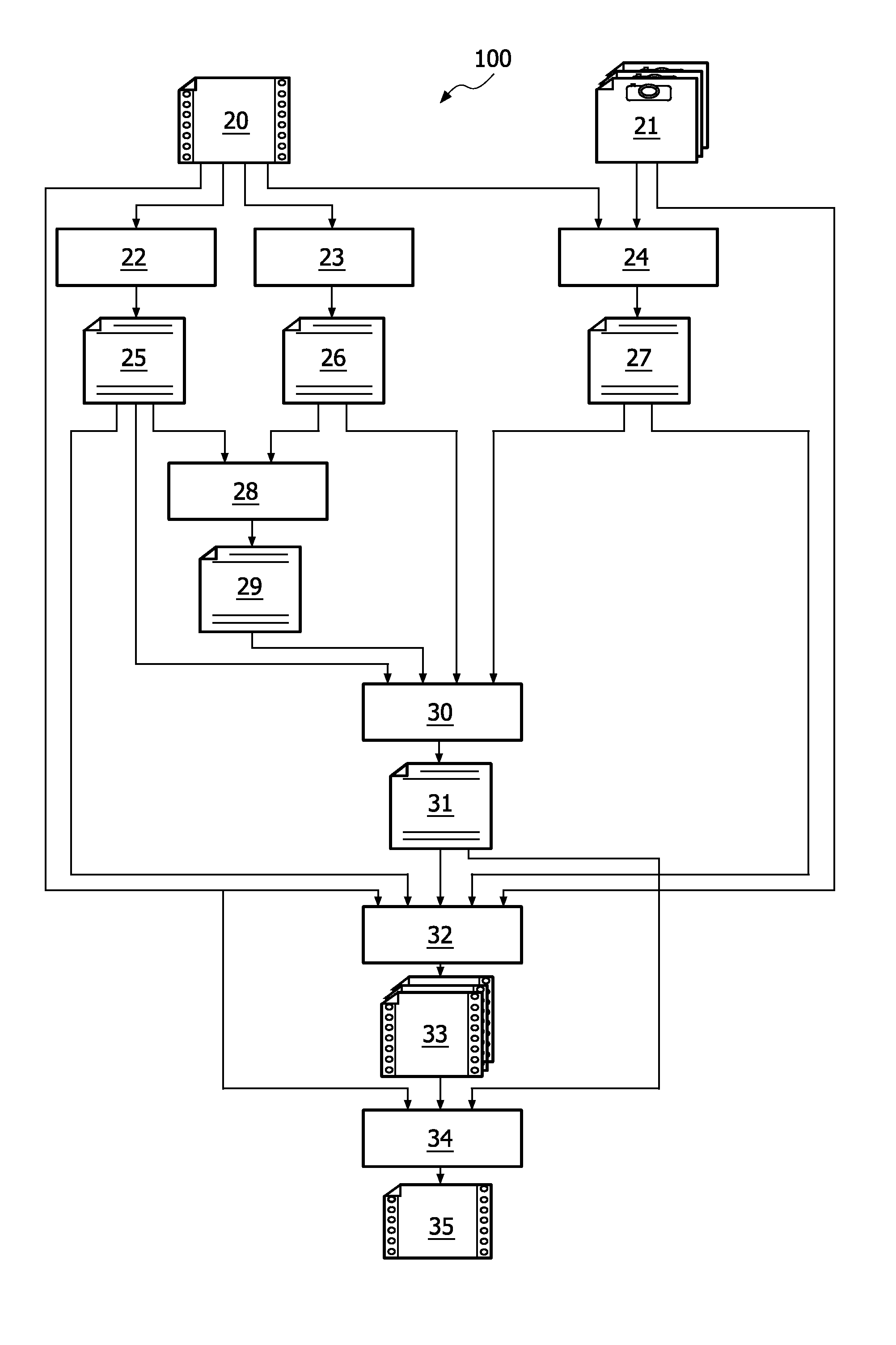

Direct vision sensor for 3D computer vision, digital imaging, and digital video

Inactive · US20060285741A1 · Accurate modeling · Apparent advantage · Image enhancement · Details involving processing steps · Digital video · Digital imaging

A method and apparatus for directly sensing both the focused image and the three-dimensional shape of a scene are disclosed. This invention is based on a novel mathematical transform named Rao Transform (RT) and its inverse (IRT). RT and IRT are used for accurately modeling the forward and reverse image formation process in a camera as a linear shift-variant integral operation. Multiple images recorded by a camera with different camera parameter settings are processed to obtain 3D scene information. This 3D scene information is used in computer vision applications and as input to a virtual digital camera which computes a digital still image. This same 3D information for a time-varying scene can be used by a virtual video camera to compute and produce digital video data.

Owner:SUBBARAO MURALIDHARA

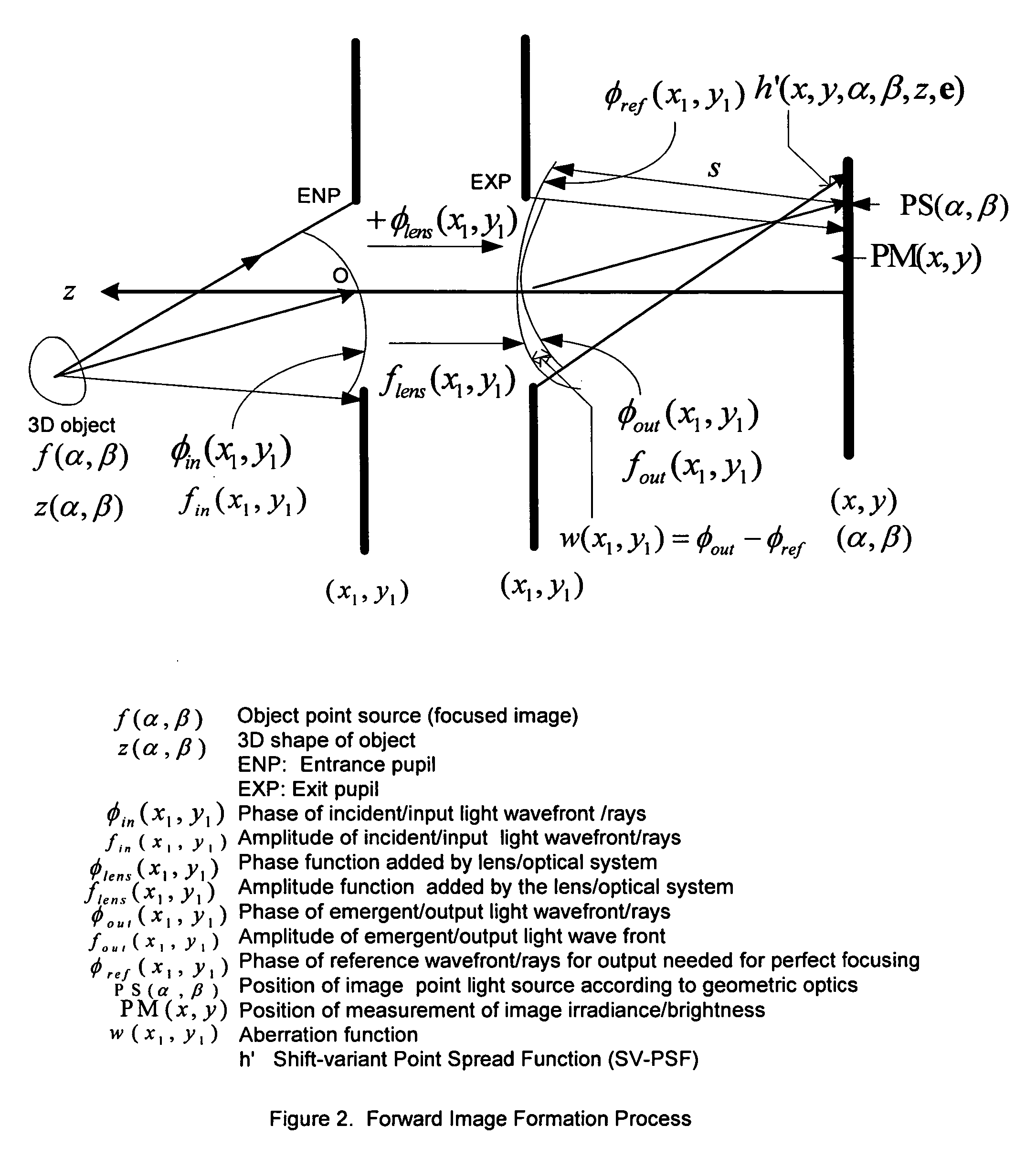

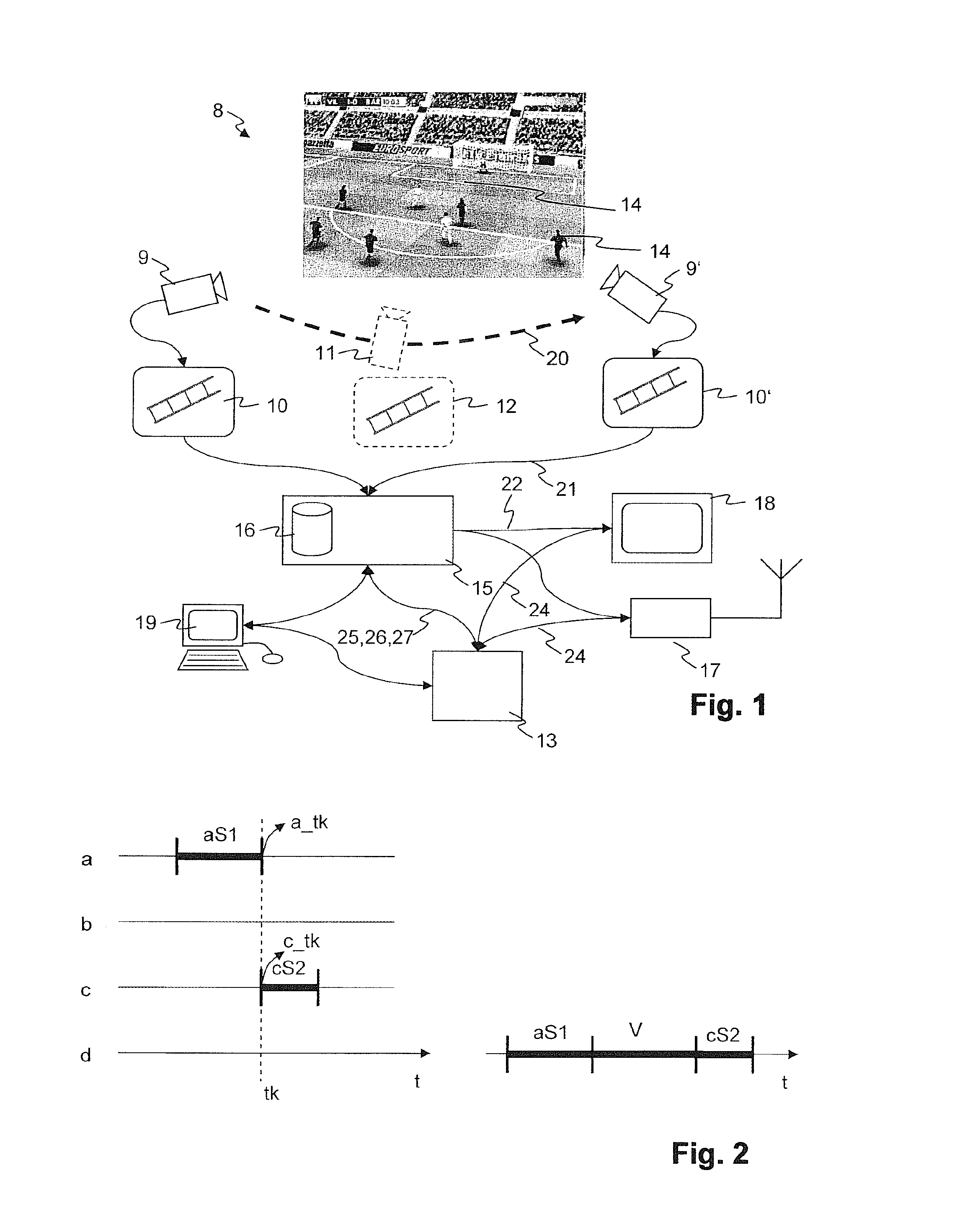

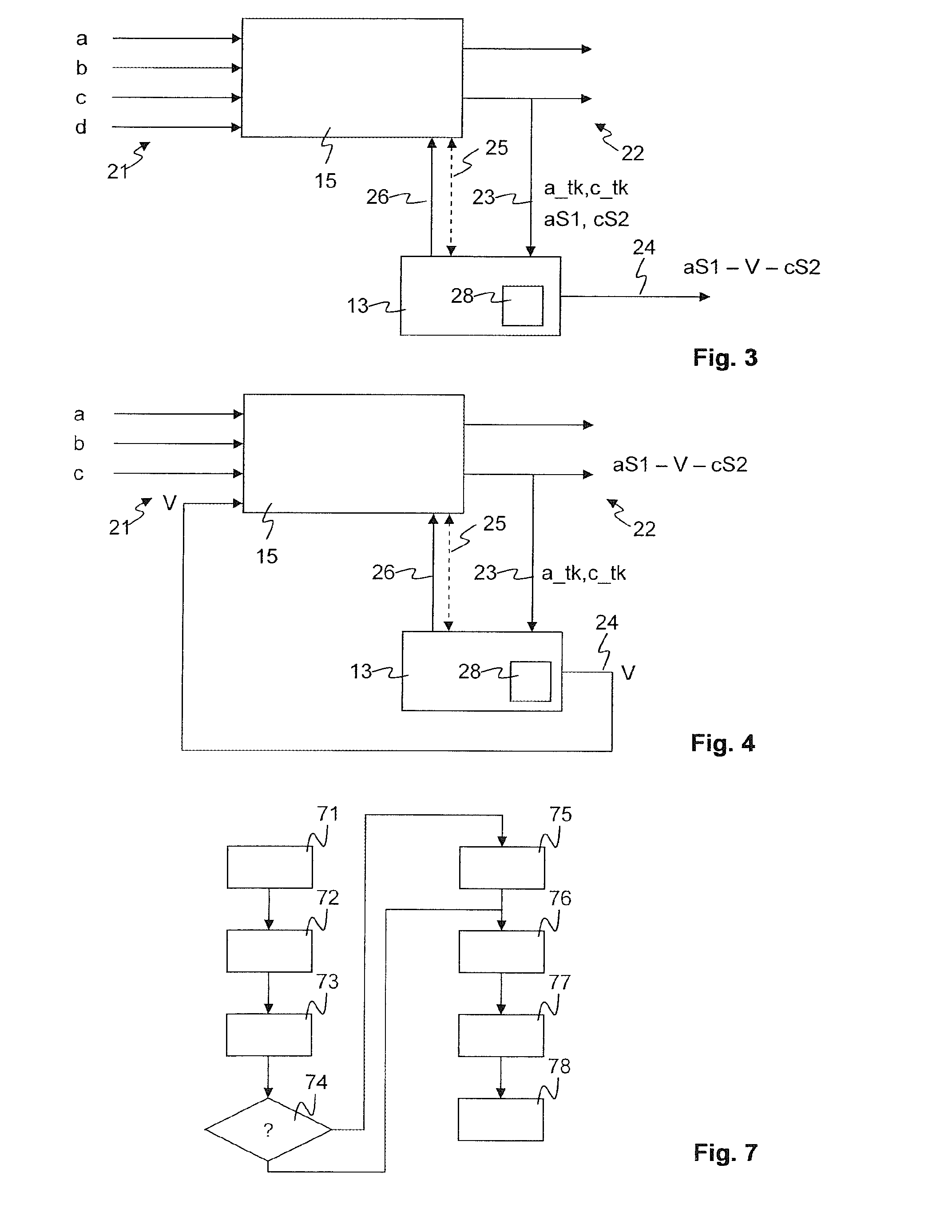

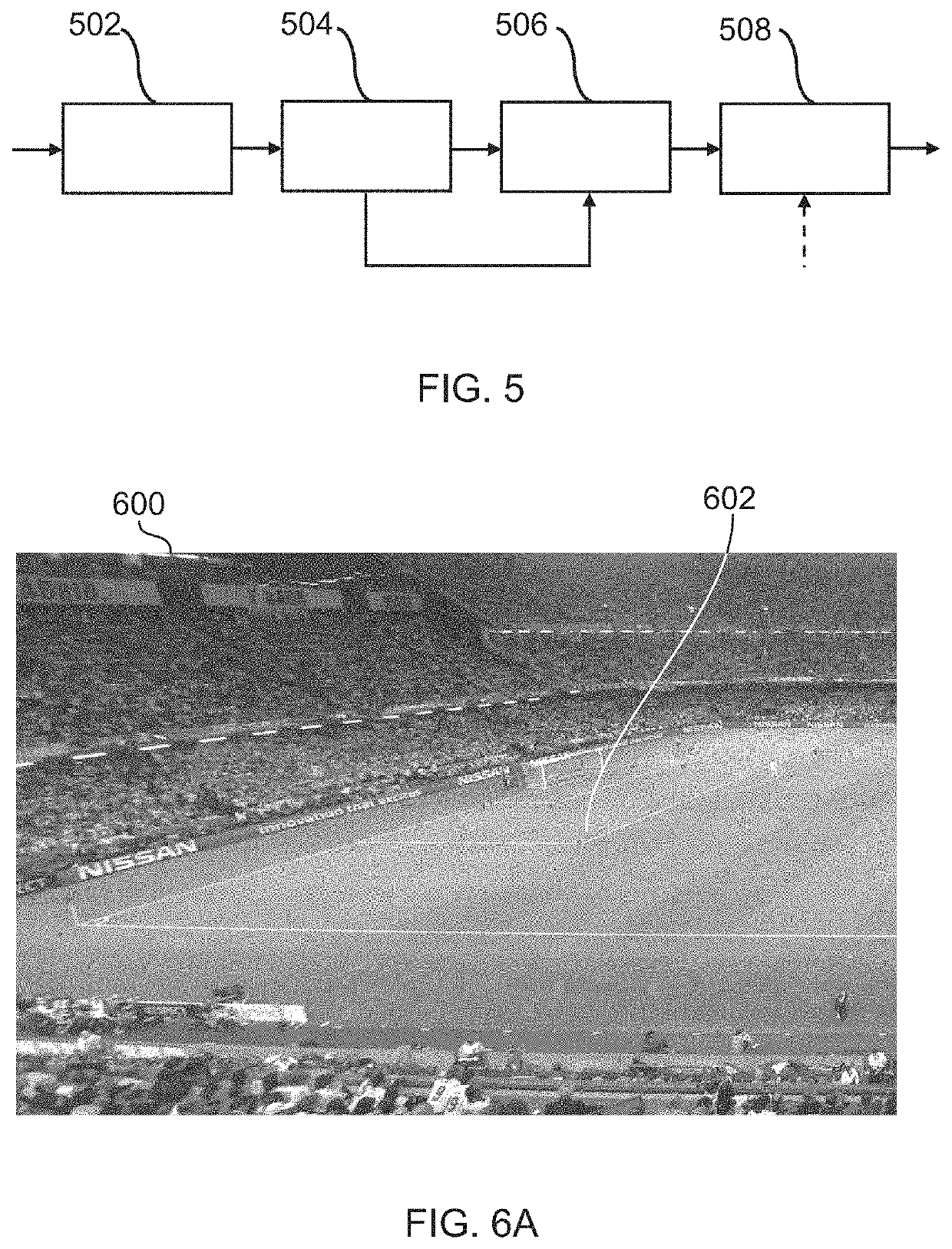

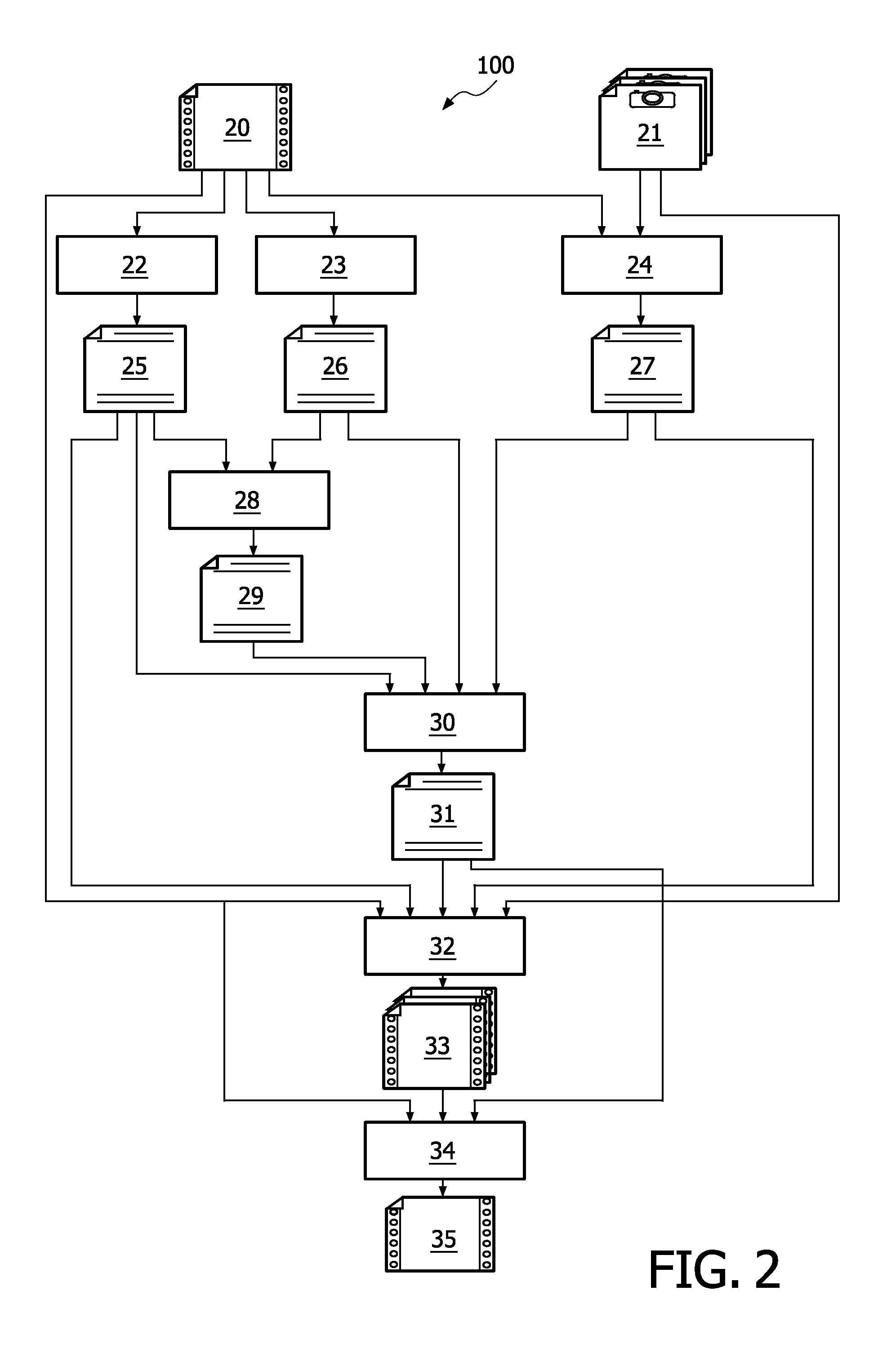

Image processing method and device for instant replay

Active · US20120188452A1 · Eliminate need · Quick interaction · Television system details · Color television details · Imaging processing · Key frame

What is disclosed is a computer-implemented image-processing system and method for the automatic generation of video sequences that can be associated with a televised event. The methods can include the steps of: defining a reference keyframe from a reference view from a source image sequence; from one or more keyframes, automatically computing one or more sets of virtual camera parameters; generating a virtual camera flight path, which is described by a change of virtual camera parameters over time, and which defines a movement of a virtual camera and a corresponding change of a virtual view; and rendering and storing a virtual video stream defined by the virtual camera flight path.

Owner:VIZRT AG
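
The flight-path step in the abstract above (a change of virtual camera parameters over time) can be illustrated with a minimal sketch. It assumes plain linear interpolation between two keyframe parameter sets; the `CameraParams` fields, the `flight_path` helper, and the sample values are hypothetical and are not taken from the patent.

```python
from dataclasses import dataclass


@dataclass
class CameraParams:
    """Illustrative virtual camera parameters (field names are assumptions)."""
    x: float
    y: float
    z: float
    pan_deg: float
    fov_deg: float


def lerp(a: float, b: float, t: float) -> float:
    """Linear interpolation between a and b for t in [0, 1]."""
    return a + (b - a) * t


def flight_path(start: CameraParams, end: CameraParams, n_frames: int) -> list:
    """Return one CameraParams per frame, moving linearly from start to end."""
    if n_frames < 2:
        raise ValueError("a flight path needs at least two frames")
    path = []
    for i in range(n_frames):
        t = i / (n_frames - 1)  # normalized time along the path
        path.append(CameraParams(
            x=lerp(start.x, end.x, t),
            y=lerp(start.y, end.y, t),
            z=lerp(start.z, end.z, t),
            pan_deg=lerp(start.pan_deg, end.pan_deg, t),
            fov_deg=lerp(start.fov_deg, end.fov_deg, t),
        ))
    return path


# Two hypothetical keyframe views of the same scene.
start = CameraParams(x=0.0, y=0.0, z=10.0, pan_deg=0.0, fov_deg=40.0)
end = CameraParams(x=10.0, y=0.0, z=10.0, pan_deg=90.0, fov_deg=20.0)
path = flight_path(start, end, n_frames=5)
```

Each element of `path` would then be handed to a renderer to produce one frame of the virtual video stream; a production system would more likely use smooth splines than straight linear interpolation.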

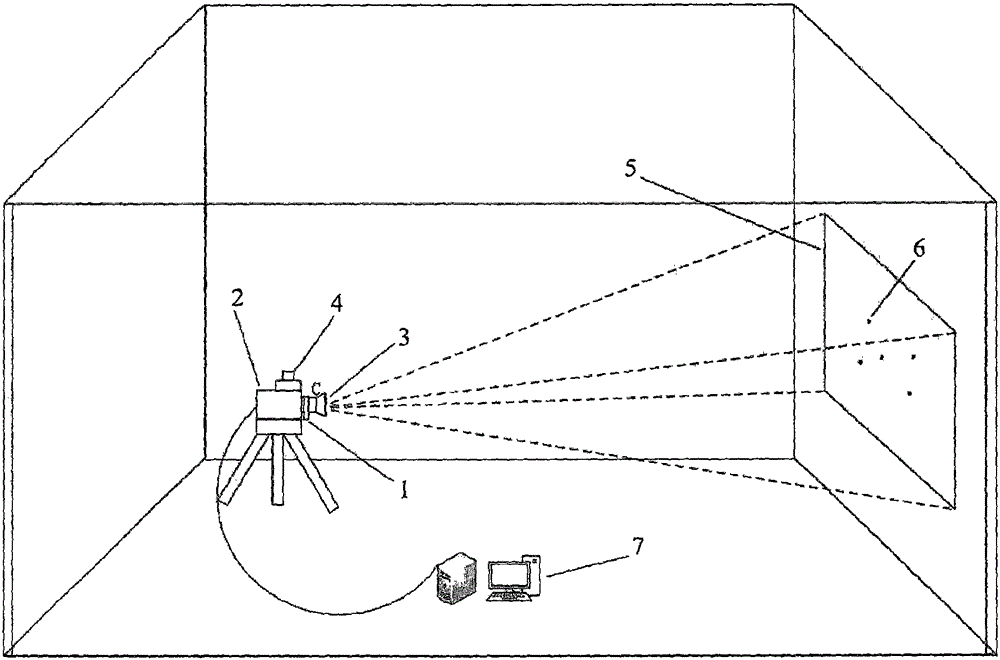

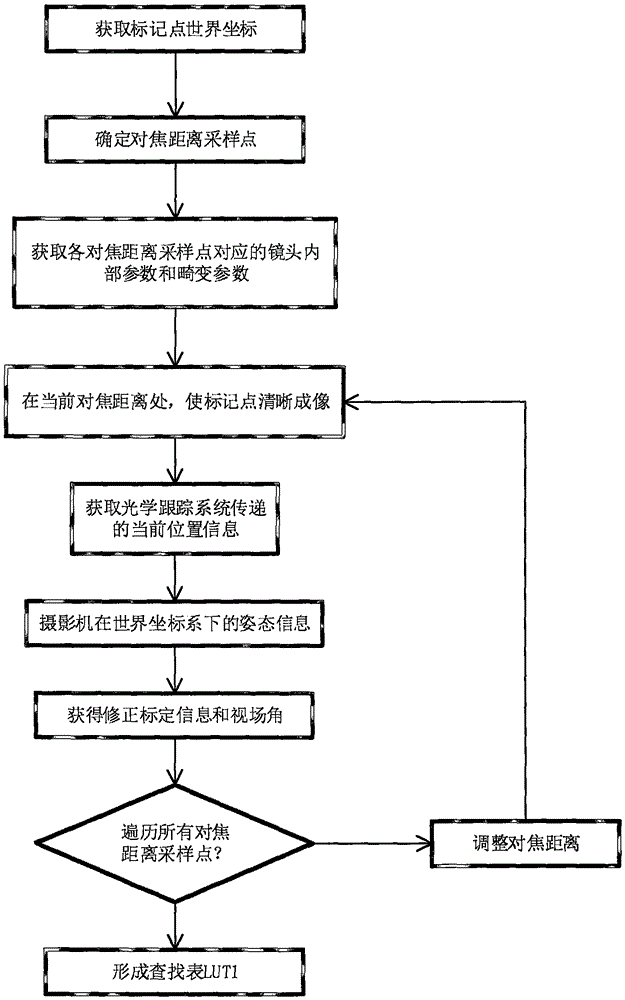

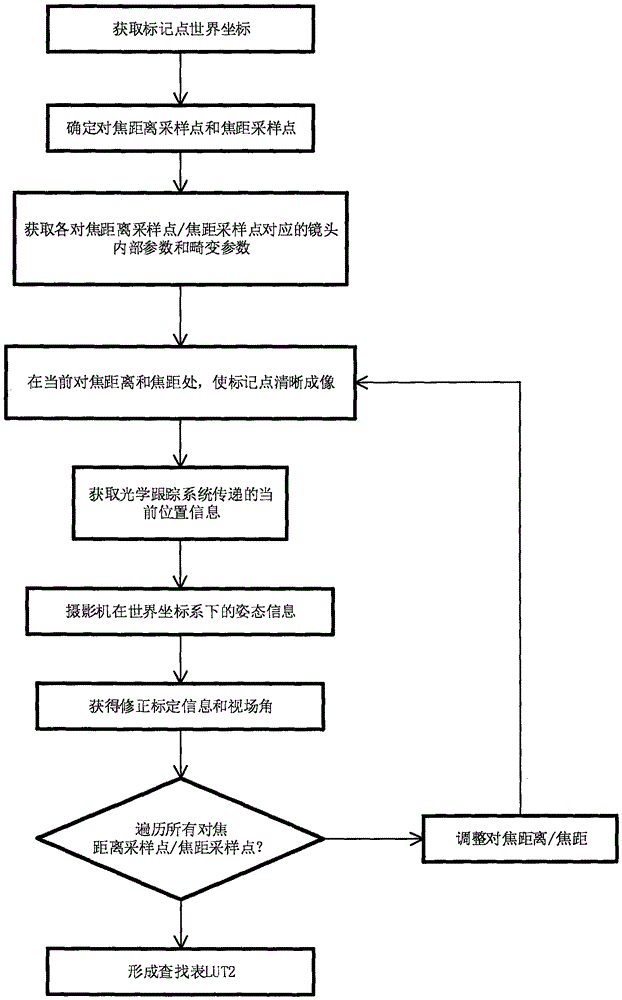

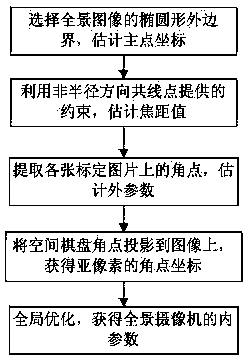

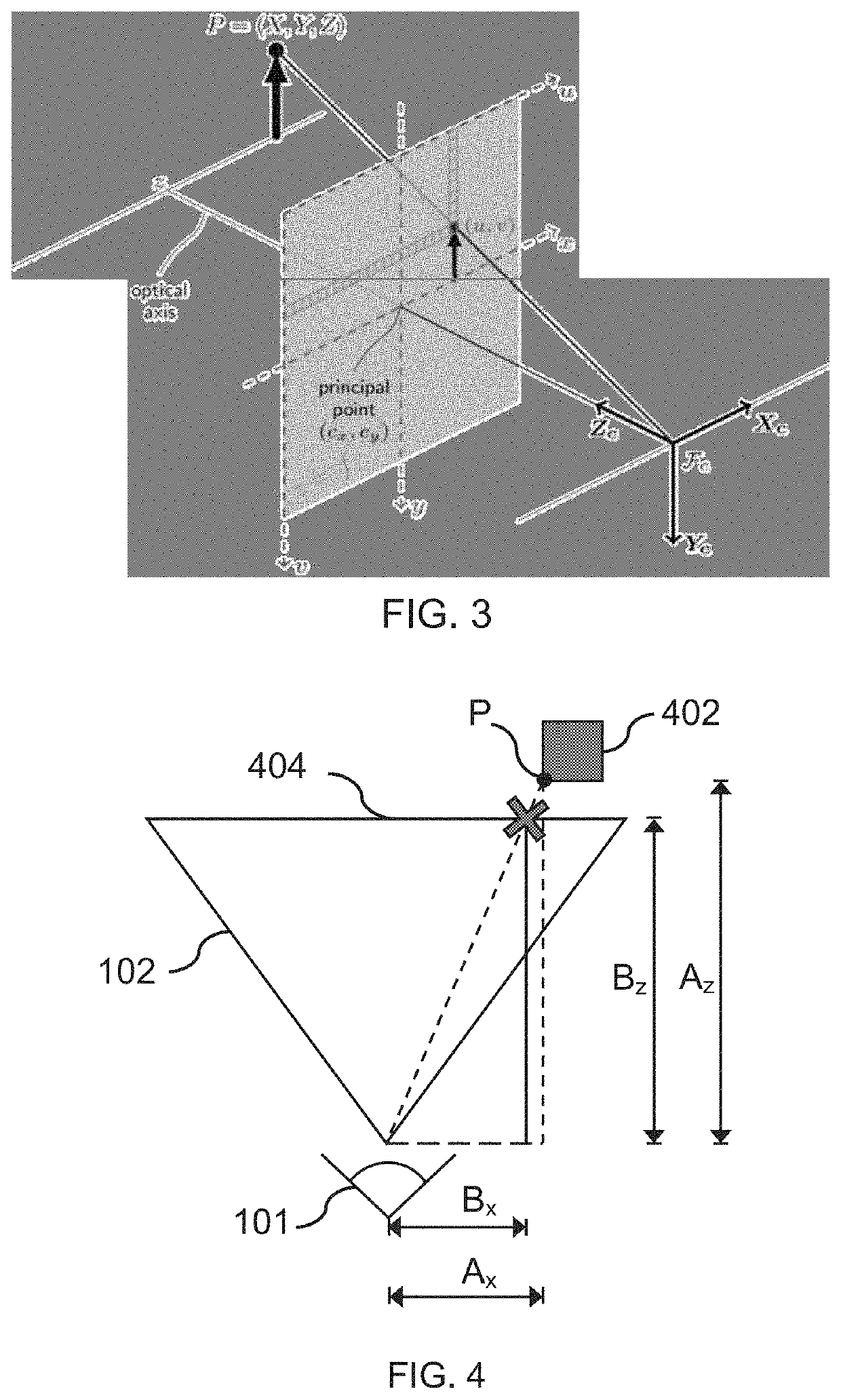

Camera positioning correction calibration method and system

Active · CN105118055A · Fully automated · Real-time computing · Image enhancement · Image analysis · Camera lens · Optical tracking

The present invention discloses a camera positioning correction calibration method and a system for realizing the method, belonging to the technical field of image virtual production. The method exploits the intrinsic relationship among the lens parameters, the imaging surface, and an optical tracking device. Using the world coordinates and image point coordinates of N mark points on a background screen, together with the internal parameters and lens distortion parameters of the camera lens, it obtains the rotation matrix between the camera coordinate system and the world coordinate system and the translation vector of the camera perspective center in the world coordinate system. Combining these with the current position information given by an external camera-pose tracking device, it obtains the camera correction calibration information and viewing angle, and establishes a lookup table relating focusing distance to focal length. When the camera position, lens focal length, or focusing distance changes, the position of the virtual camera of the virtual production system is then positioned and corrected fully automatically, so that the real video frame and the computer-generated virtual frame can be matched perfectly.

Owner:BEIJING FILM ACAD

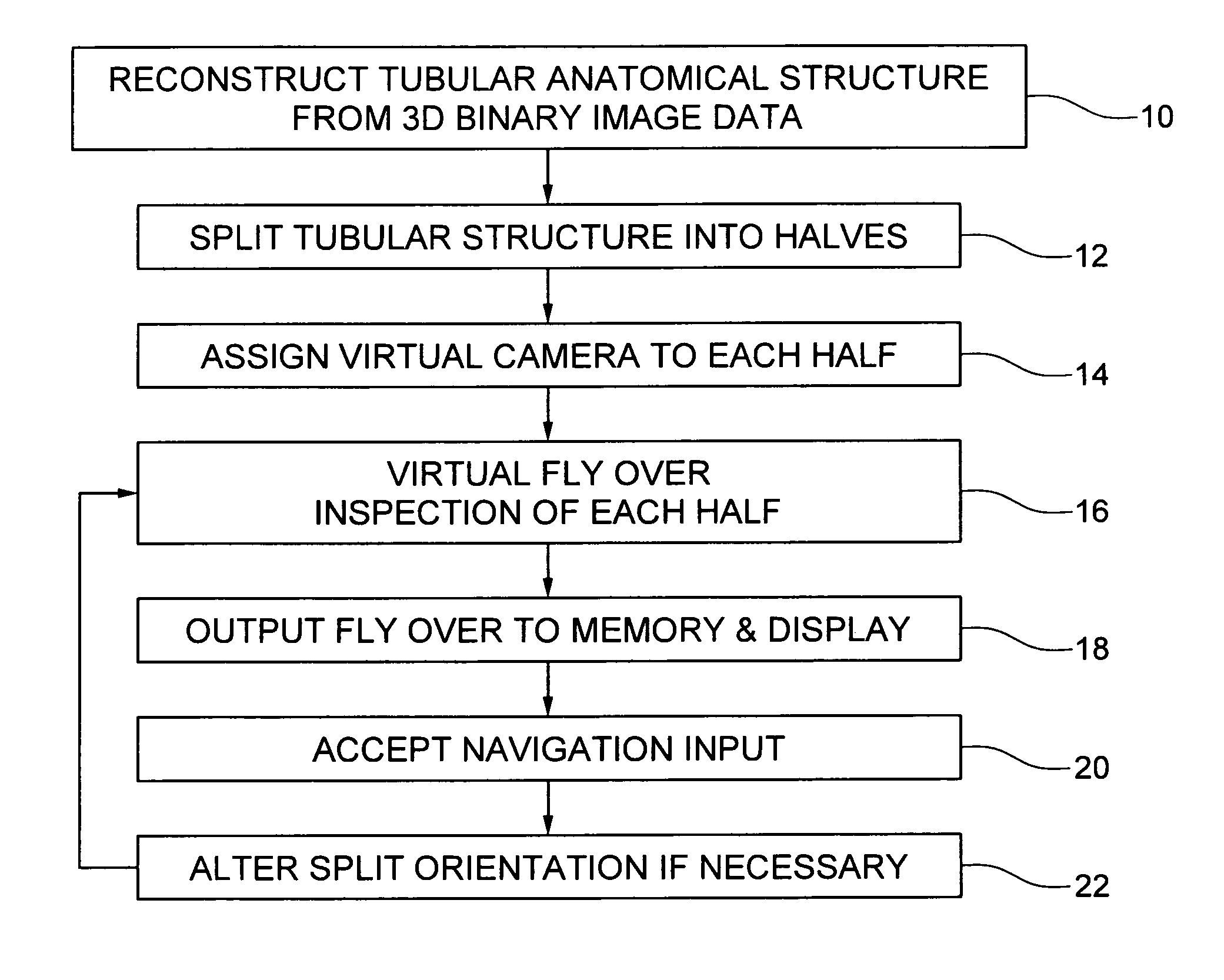

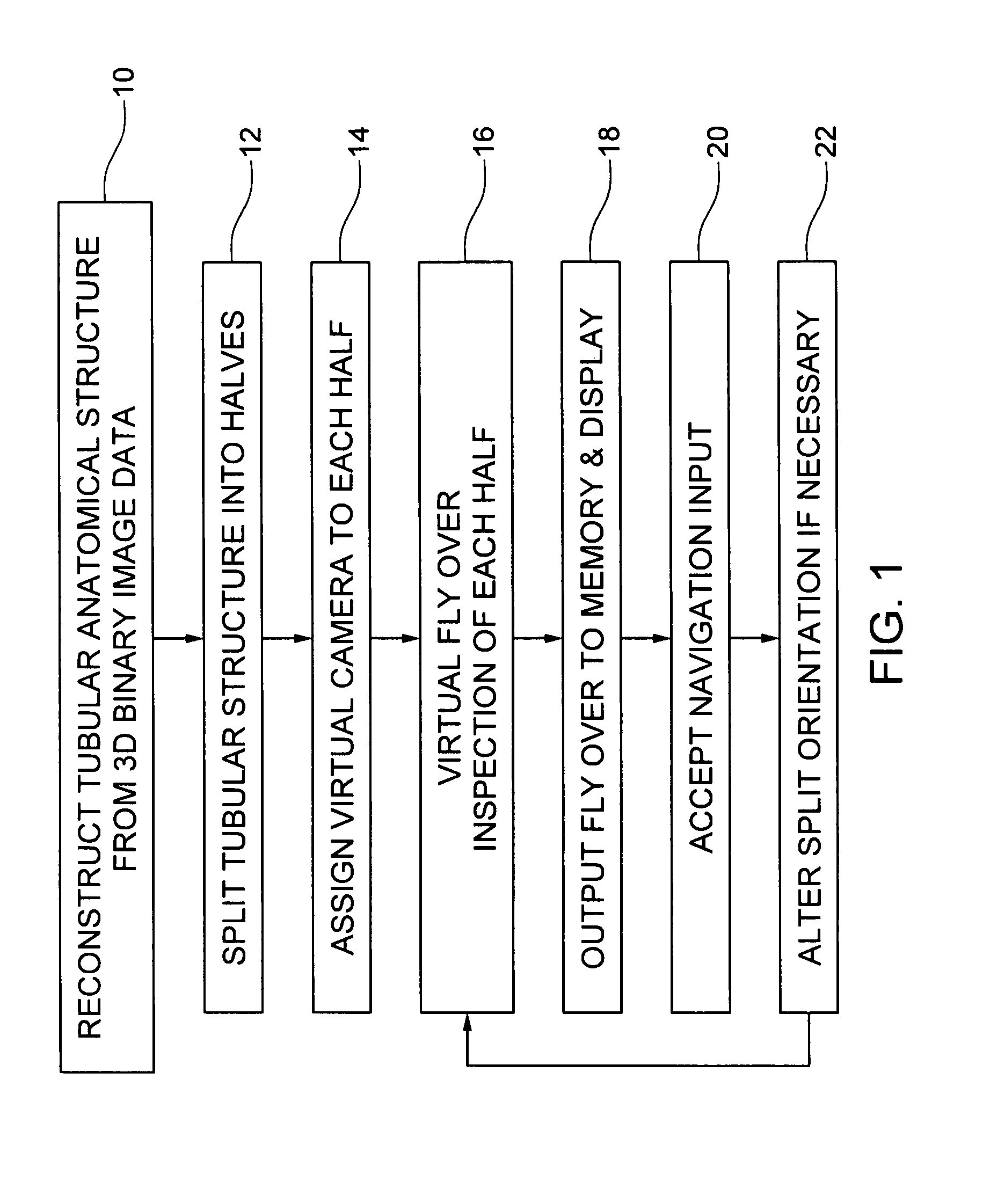

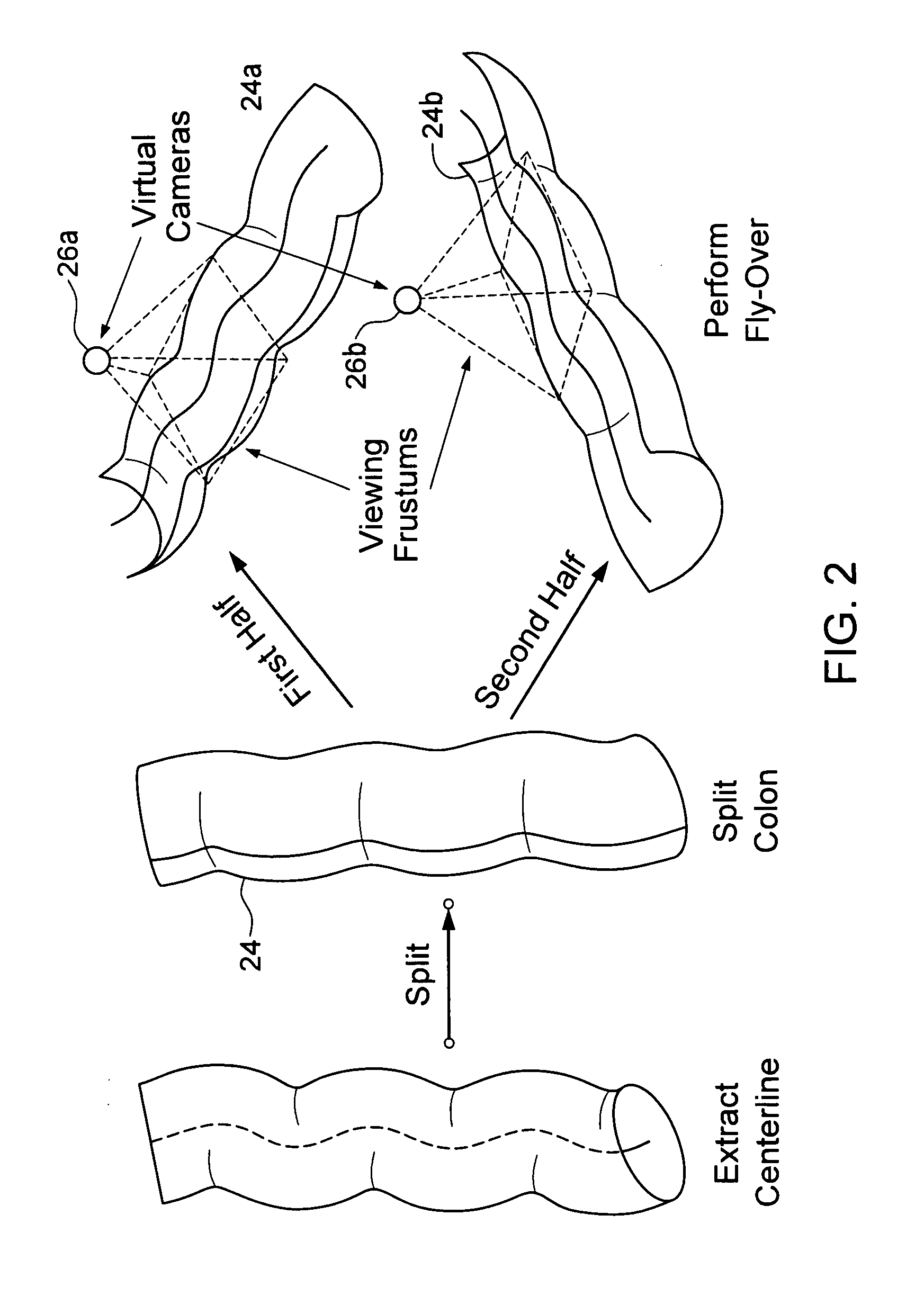

Virtual fly over of complex tubular anatomical structures

Inactive · US20080069419A1 · Avoid possibility · Ultrasonic/sonic/infrasonic diagnostics · Medical simulation · Anatomical structures · Data set

An embodiment of the invention is a method, which can be implemented in software, firmware, hardware, etc., for virtual fly-over inspection of complex anatomical tubular structures. In a preferred embodiment, the method is implemented in software, and the software reconstructs the tubular anatomical structure from binary imaging data originally acquired from a computer-aided tomography scan or comparable biological imaging system. The software of the invention splits the entire tubular anatomy into exactly two halves. The software assigns a virtual camera to each half to perform fly-over navigation. Because the elevation of the virtual camera is controlled, there is no restriction on its field of view (FOV) angle, which can be greater than 90 degrees, for example. The camera viewing volume is perpendicular to each half of the tubular anatomical structure, so potential structures of interest, e.g., polyps hidden behind haustral folds in a colon, are easily found. The orientation of the splitting surface is controllable, so the navigation can be repeated at one or more other split orientations. This avoids the possibility that a structure of interest, e.g., a polyp that is divided between the two halves of the anatomical structure in a first fly-over, is missed during a virtual inspection. Preferred embodiment software conducts virtual colonoscopy fly-over. Experimental virtual fly-over colonoscopy software of the invention, run on 15 clinical datasets, demonstrated an average surface visibility coverage of 99.59 ± 0.2%.

Owner:UNIV OF LOUISVILLE RES FOUND INC

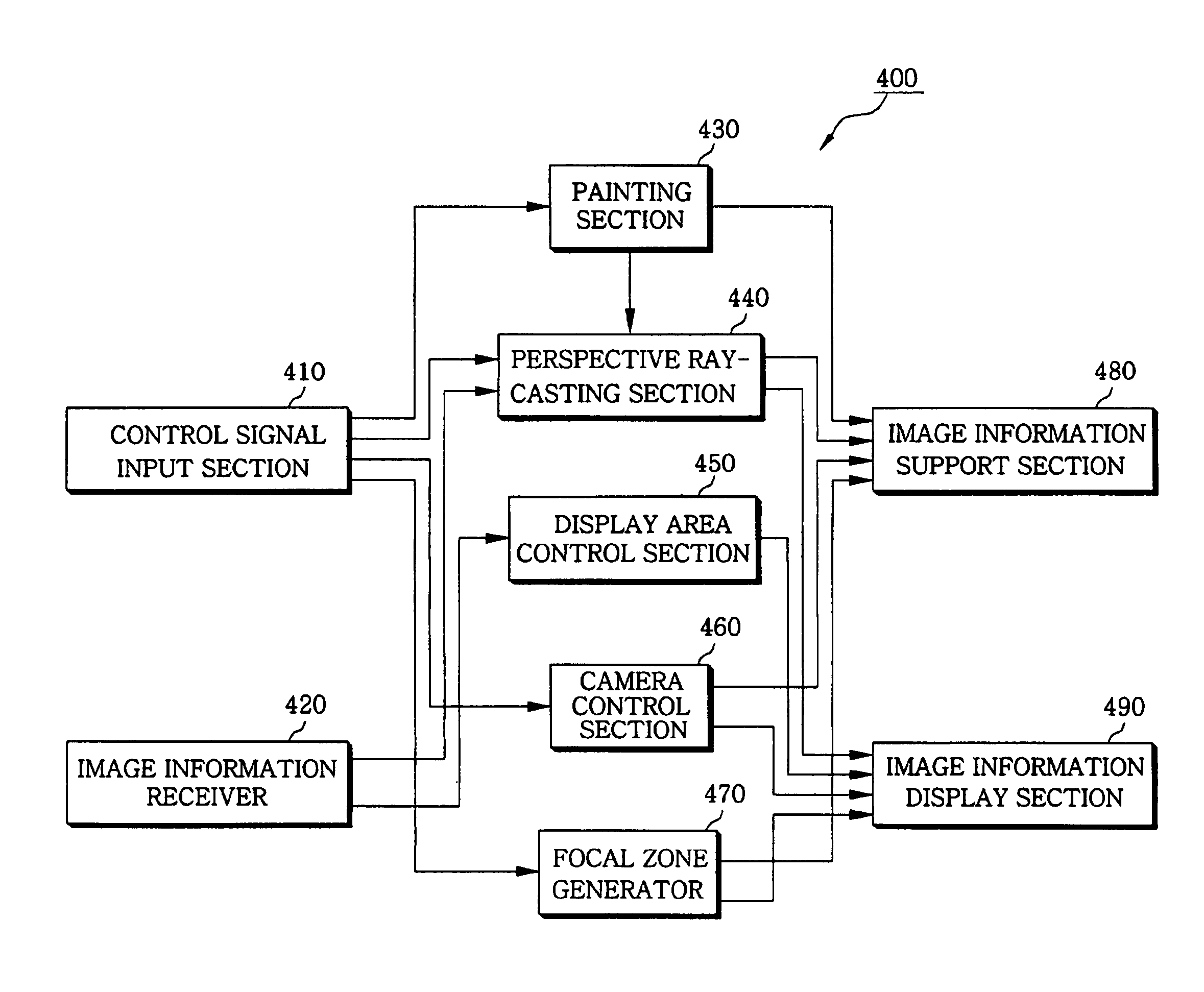

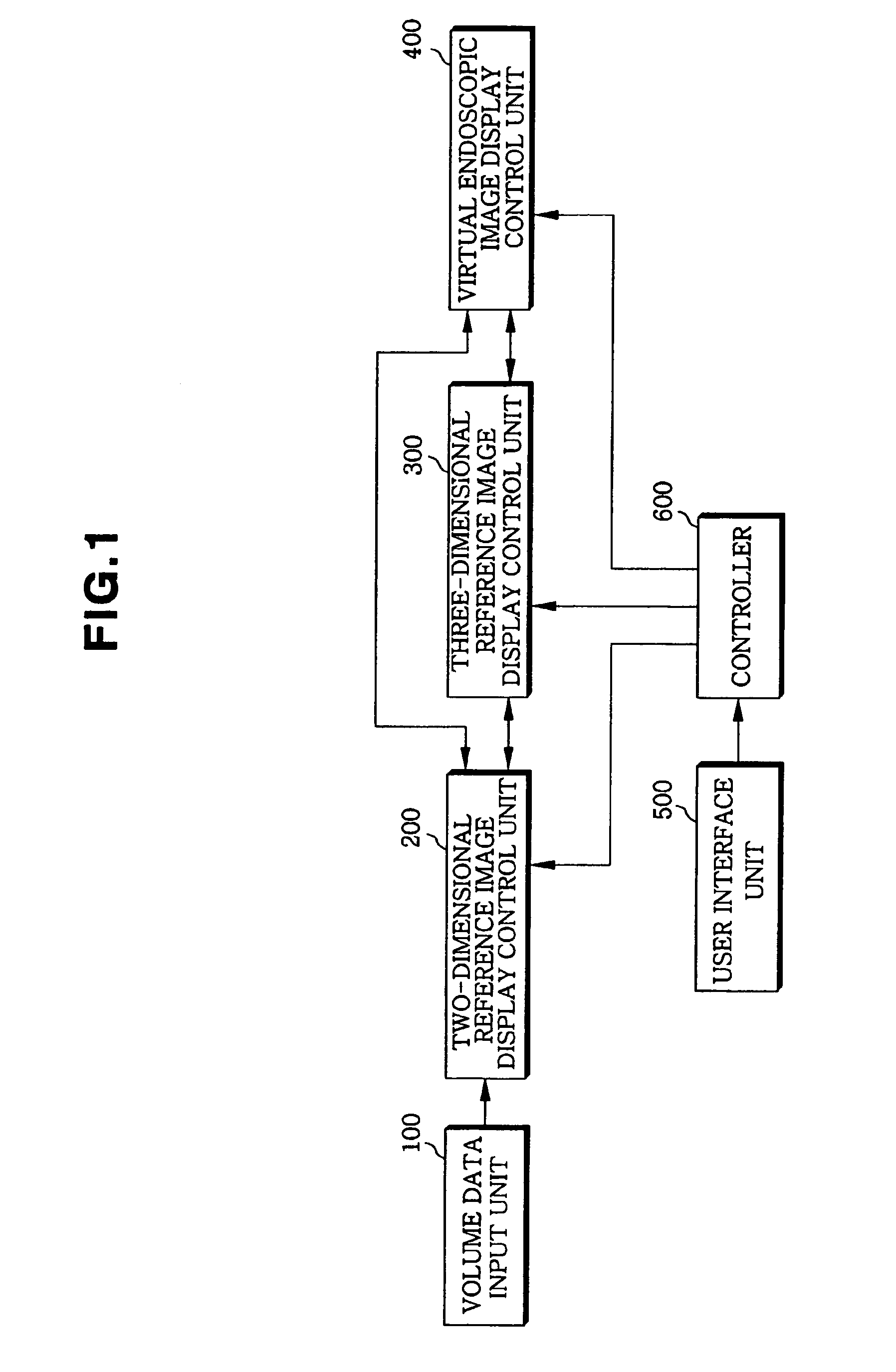

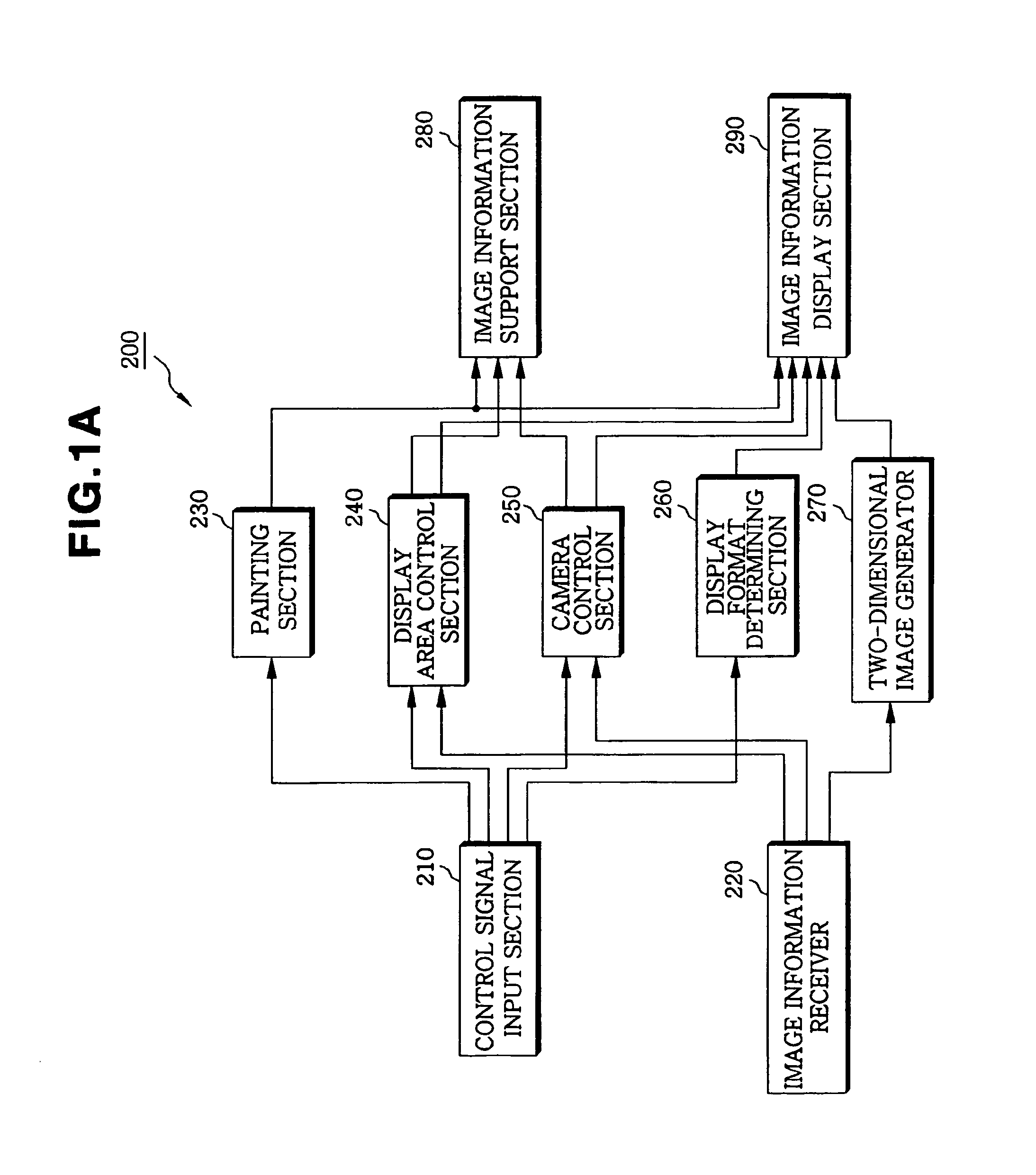

Apparatus and method for displaying virtual endoscopy display

Inactive · US7102634B2 · Easy to operate · Surgery · Character and pattern recognition · Reference image · Computer science

An apparatus and method for displaying a three-dimensional virtual endoscopic image are provided. In the method, information on a virtual endoscopic image is input in the form of volume data expressed as a function of three-dimensional position. A two-dimensional reference image, a three-dimensional reference image, and a virtual endoscopic image are detected from the volume data. The detected images are displayed on one screen. Virtual cameras are displayed in the areas in which the two- and three-dimensional reference images are respectively displayed. Here, a camera display sphere and a camera display circle are defined on the basis of the current position of each virtual camera. When information regarding one image, among the two- and three-dimensional reference images and the virtual endoscopic image on one screen, is changed by a user's operation, information regarding the other images is changed based on the information changed by the user's operation.

Owner:INFINITT HEALTHCARE CO LTD

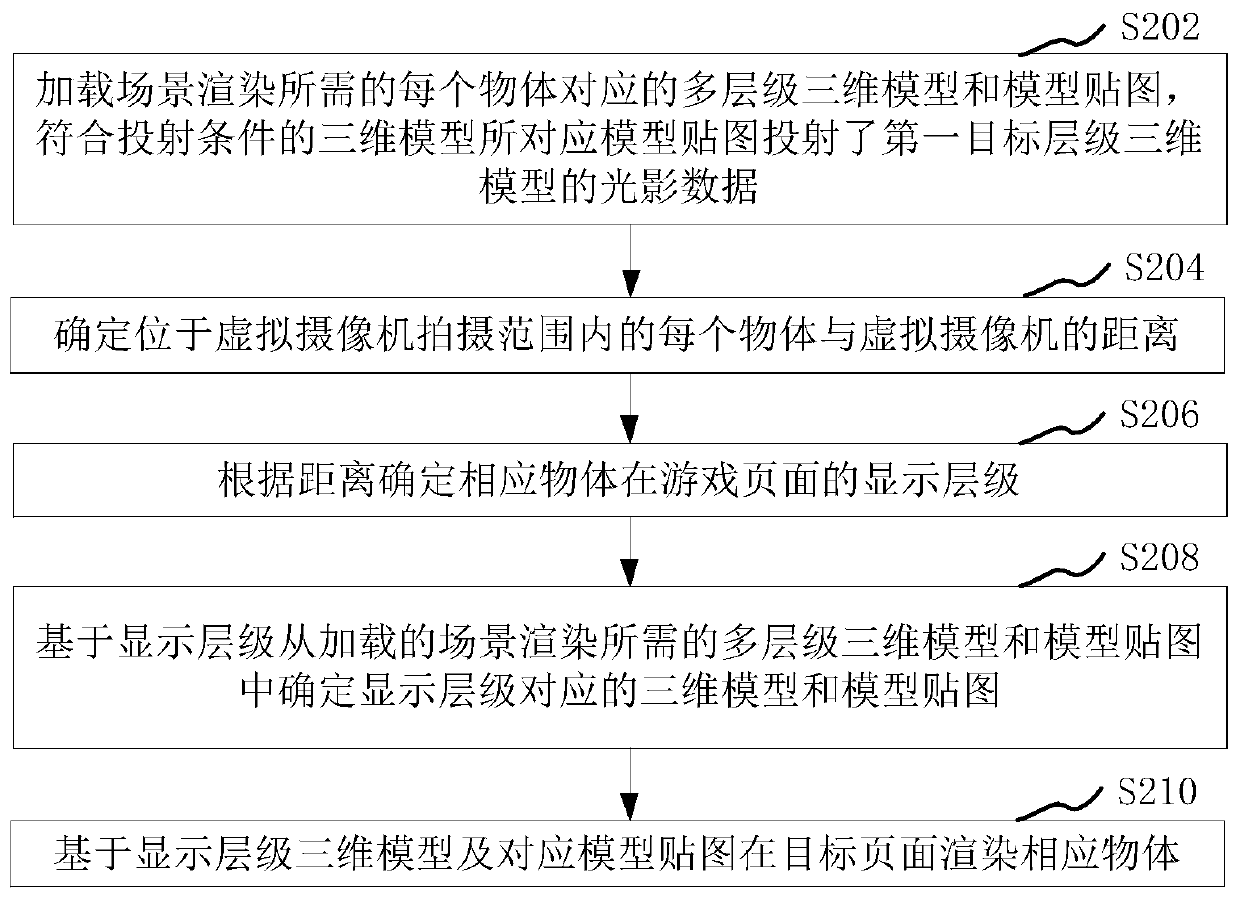

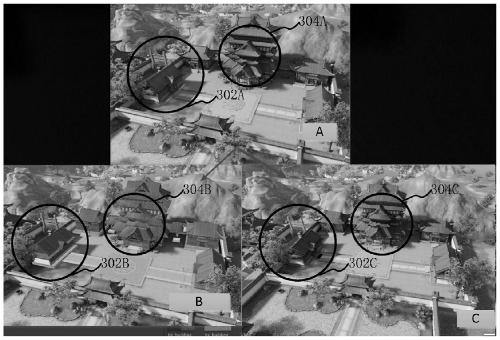

Scene rendering method and device, computer readable storage medium and computer equipment

Active · CN111105491A · Improve light and shadow effects · Extended line of sight · 3D-image rendering · 3D modelling · Engineering · Virtual cinematography

The invention relates to a scene rendering method and device, a computer readable storage medium and computer equipment. The method comprises the steps of: loading a multi-level three-dimensional model and a model map corresponding to each object required for scene rendering, wherein a model map corresponding to a three-dimensional model meeting the projection condition projects light and shadow data of a first target level three-dimensional model; determining the distance between each object in the shooting range of the virtual camera and the virtual camera; determining a display level of the corresponding object on the target page according to the distance; and rendering the corresponding object on the target page based on the three-dimensional model of the display level and the corresponding model map. According to the scheme provided by the invention, the rendering effect of the virtual scene can be improved while increasing the sight distance range and preserving the loading speed of the virtual scene.

Owner:TENCENT TECH (SHENZHEN) CO LTD
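
The distance-to-display-level step in the abstract above resembles conventional level-of-detail (LOD) selection. The sketch below shows that general technique only; the threshold values, the `LOD_THRESHOLDS` table, and the `select_lod` helper are hypothetical and not taken from the patent.

```python
import math

# Hypothetical thresholds: (maximum distance in scene units, display level).
# Level 0 is the most detailed model; higher levels are coarser.
LOD_THRESHOLDS = [(20.0, 0), (60.0, 1), (150.0, 2)]
LOWEST_LOD = 3  # used for anything farther than the last threshold


def select_lod(camera_pos, object_pos):
    """Pick a display level from the camera-to-object distance."""
    d = math.dist(camera_pos, object_pos)
    for max_dist, level in LOD_THRESHOLDS:
        if d <= max_dist:
            return level
    return LOWEST_LOD


near_level = select_lod((0.0, 0.0, 0.0), (3.0, 4.0, 0.0))    # distance 5
mid_level = select_lod((0.0, 0.0, 0.0), (0.0, 0.0, 100.0))   # distance 100
far_level = select_lod((0.0, 0.0, 0.0), (0.0, 200.0, 0.0))   # distance 200
```

A renderer would then draw each object with the model and model map stored for the returned level.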

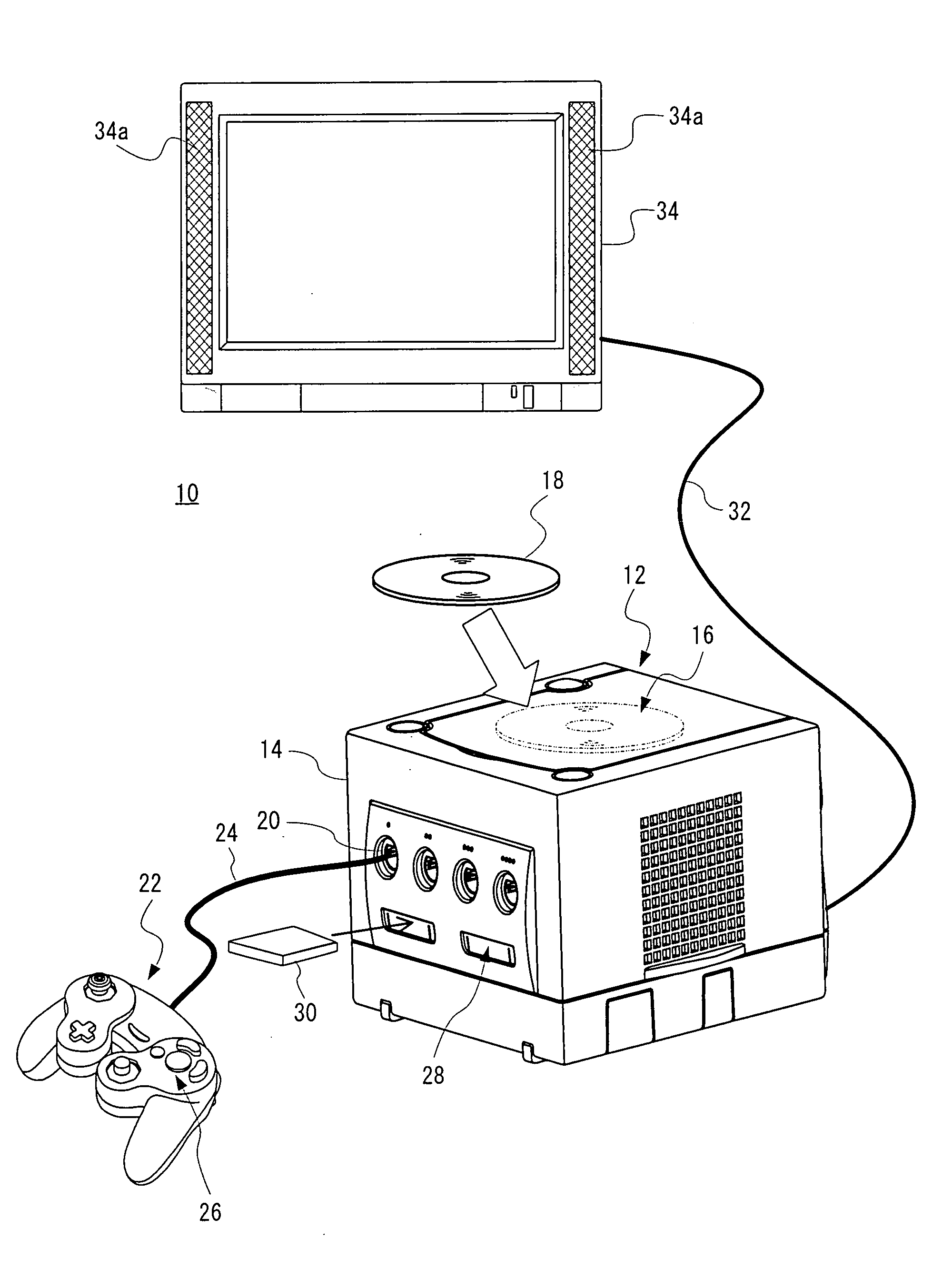

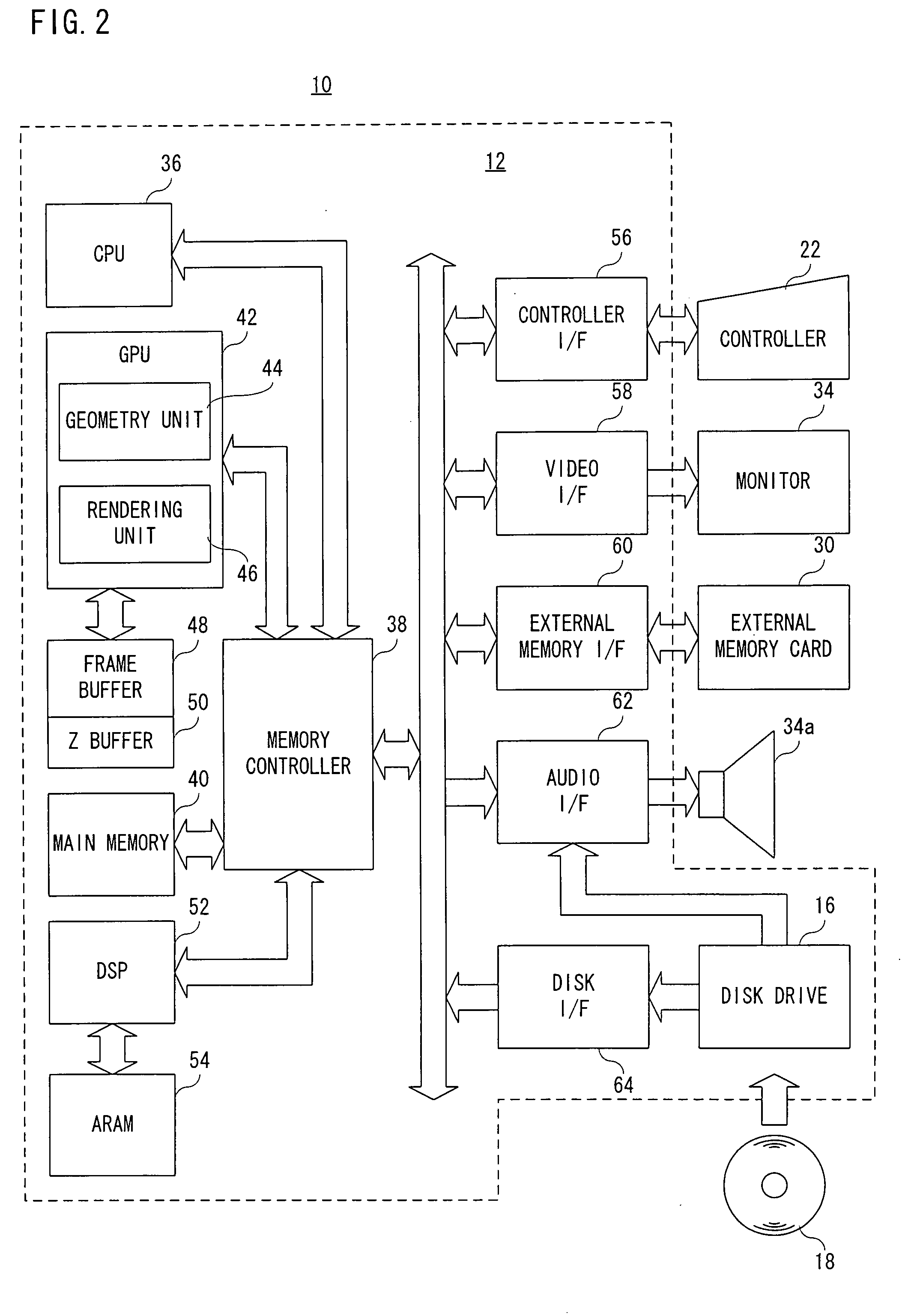

Storage medium storing game program and game apparatus

Active · US20080113792A1 · Easy to observe · Appropriate speed of movement · Video games · Special data processing applications · Right visual field · Visual field loss

A game apparatus includes a CPU, and displays on a monitor a scene viewed from a virtual camera moving in a virtual game space. In the game apparatus, an aspect ratio of an image to be displayed on the monitor is acquired, and a moving speed of the virtual camera is set based on the aspect ratio. For example, a visual field of the virtual camera is set based on the aspect ratio: as the image becomes wider relative to its height, the visual field in the crosswise direction is made wider, and the crosswise moving speed of the virtual camera is therefore made smaller.

Owner:NINTENDO CO LTD
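
One way to picture the relationship in the abstract above is the sketch below. The horizontal field of view follows from the standard pinhole relation between vertical field of view and aspect ratio. The inverse-proportional speed rule and the 4:3 reference aspect are assumptions made for illustration, since the abstract only states that a wider image leads to a smaller crosswise speed.

```python
import math


def horizontal_fov_deg(vertical_fov_deg, aspect_ratio):
    """Horizontal field of view for a pinhole camera with the given aspect ratio."""
    half_v = math.radians(vertical_fov_deg) / 2.0
    return math.degrees(2.0 * math.atan(aspect_ratio * math.tan(half_v)))


def crosswise_speed(base_speed, aspect_ratio, reference_aspect=4.0 / 3.0):
    """Assumed rule: sideways speed shrinks in proportion to image width."""
    if aspect_ratio <= 0:
        raise ValueError("aspect ratio must be positive")
    return base_speed * reference_aspect / aspect_ratio


speed_4_3 = crosswise_speed(10.0, 4.0 / 3.0)
speed_16_9 = crosswise_speed(10.0, 16.0 / 9.0)
fov_4_3 = horizontal_fov_deg(60.0, 4.0 / 3.0)
fov_16_9 = horizontal_fov_deg(60.0, 16.0 / 9.0)
```

With these assumptions, the 16:9 image gets a wider horizontal field of view and a lower sideways camera speed than the 4:3 image.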

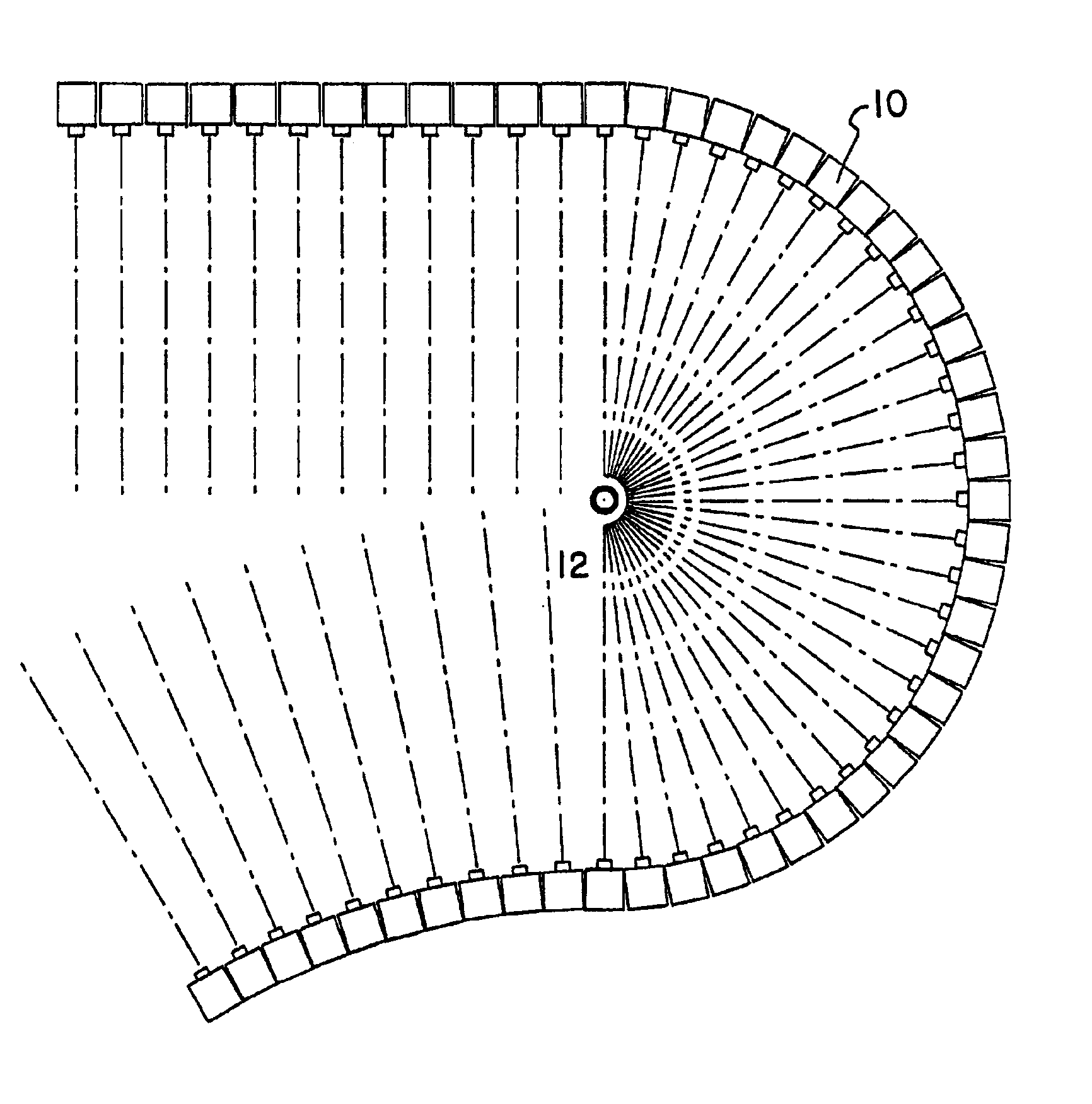

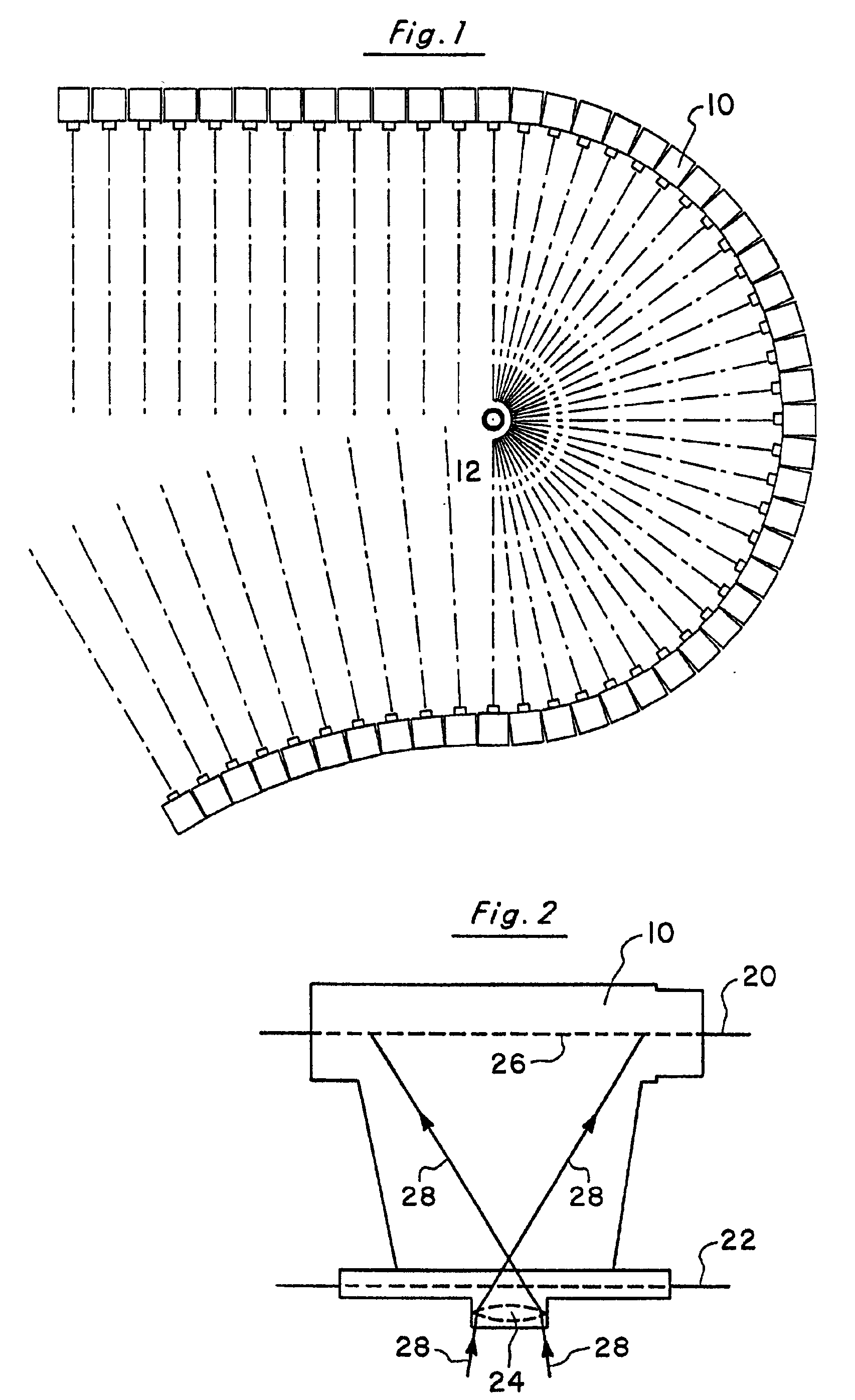

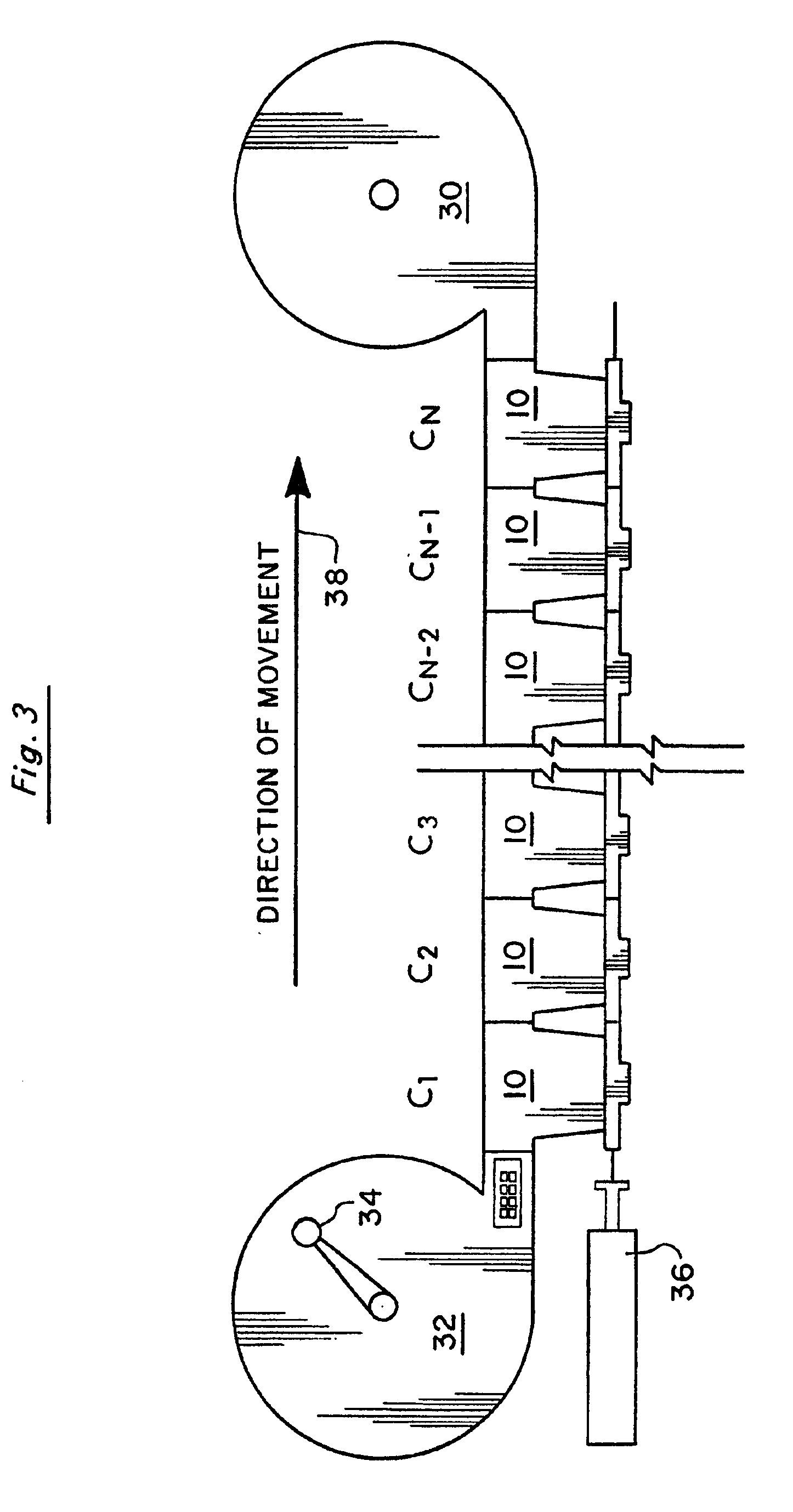

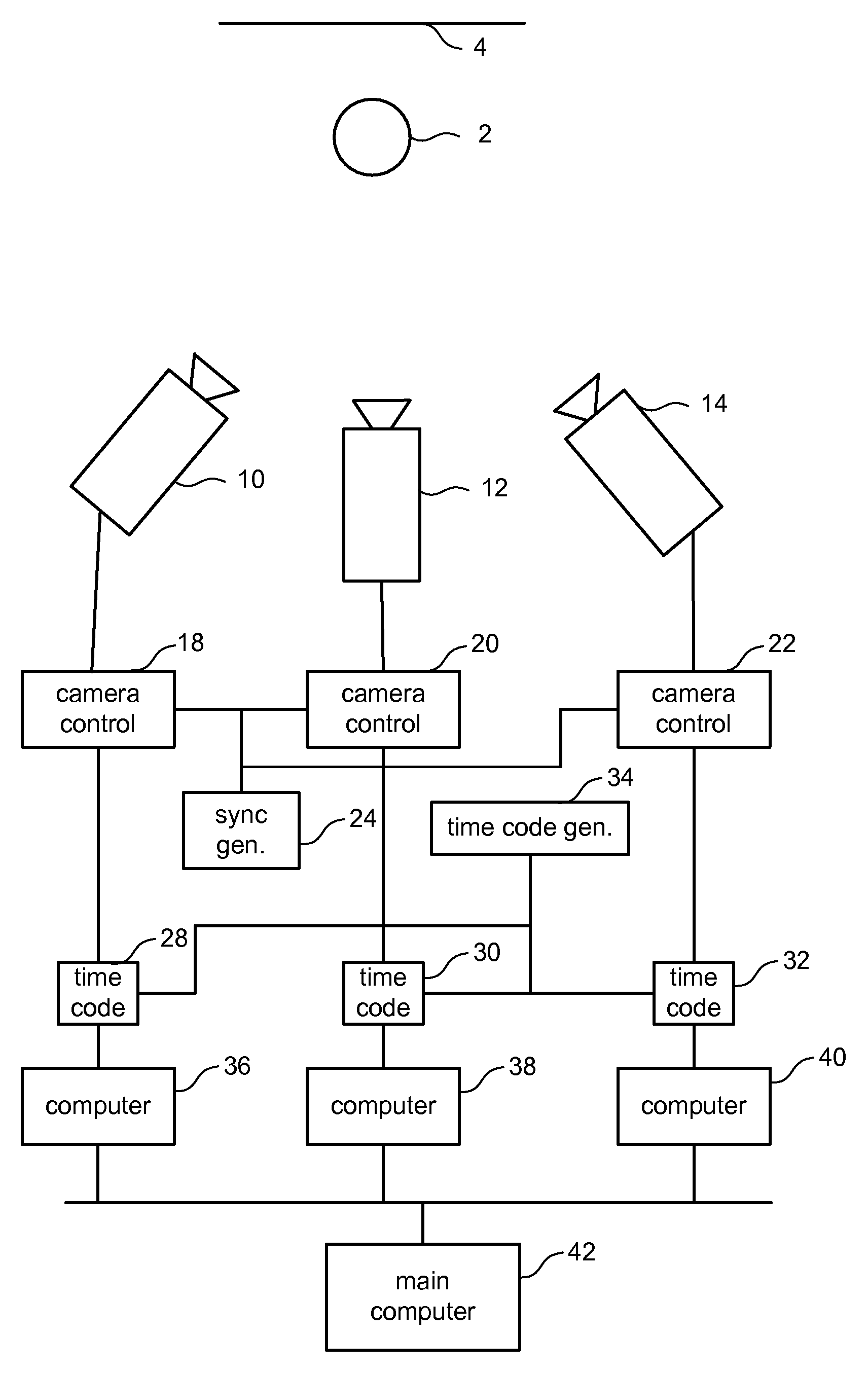

System for producing time-independent virtual camera movement in motion pictures and other media

Inactive · US6933966B2 · Provides illusion · Smooth transition · Television system details · Cameras · Picture books · Video tape

A system for producing virtual camera motion in a motion picture medium in which an array of cameras is deployed along a preselected path with each camera focused on a common scene. Each camera is triggered simultaneously to record a still image of the common scene, and the images are transferred from the cameras in a preselected order along the path onto a sequence of frames in the motion picture medium such as motion picture film or video tape. Because each frame shows the common scene from a different viewpoint, placing the frames in sequence gives the illusion that one camera has moved around a frozen scene (i.e., virtual camera motion). In another embodiment, a two-dimensional array of video cameras is employed. Each camera synchronously captures a series of images in rapid succession over time. The resulting array of images can be combined in any order to create motion pictures having a combination of virtual camera motion and time-sequence images.

Owner:TAYLOR DAYTON V
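
The frame-ordering idea in the abstract above can be sketched as index selection over a grid of captured images. The `grid[camera][time]` layout, the helper names, and the string placeholders standing in for images are all hypothetical.

```python
def frozen_sweep(num_cameras, time_index):
    """Frame order for a frozen moment: every camera in path order, one instant."""
    return [(cam, time_index) for cam in range(num_cameras)]


def moving_sweep(num_cameras, start_time):
    """Frame order that advances one camera and one time step per frame."""
    return [(cam, start_time + cam) for cam in range(num_cameras)]


def assemble(grid, order):
    """Pick frames from grid[camera][time] in the given (camera, time) order."""
    return [grid[cam][t] for cam, t in order]


# Placeholder captures: 4 cameras along the path, 6 time steps each.
grid = [[f"cam{c}_t{t}" for t in range(6)] for c in range(4)]
frozen = assemble(grid, frozen_sweep(4, 2))
moving = assemble(grid, moving_sweep(4, 1))
```

Here `frozen` corresponds to the first embodiment (virtual camera motion around a frozen scene), while `moving` mixes virtual camera motion with passing time, as the second embodiment allows.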

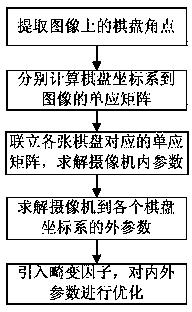

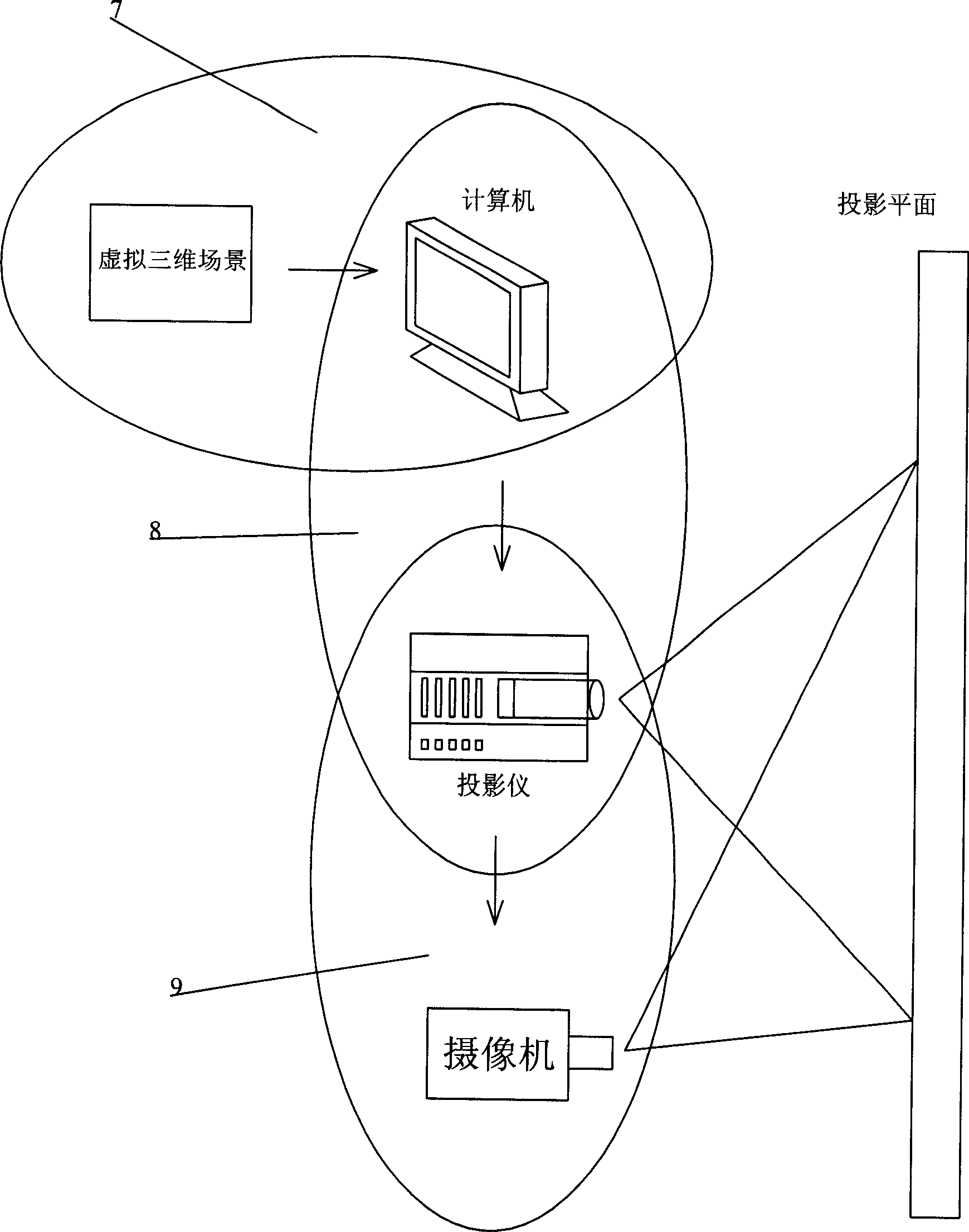

Mixed visual system calibration method based on Kinect camera

Active · CN103646394A · Full Imaging Guaranteed · No special requirements for space configuration · Image analysis · Virtual camera · Projection plane

The invention relates to a mixed visual system calibration method based on a Kinect camera. The mixed visual system comprises the Kinect camera and a panoramic camera. The calibration method comprises the steps of: calibrating the RGB camera of the Kinect camera and the panoramic camera to acquire the internal parameters of both; setting up a calibration board based on a checkerboard and establishing a checkerboard coordinate system; and constructing a virtual camera projection plane located in front of the panoramic camera coordinate system in space, calculating a transformation matrix from the virtual camera projection plane to the checkerboard coordinate system, and further acquiring the external parameters from the IR camera of the Kinect camera to the panoramic camera. The method can acquire the internal parameters of the Kinect camera and the external parameters of the mixed visual system, places low requirements on calibration conditions and on the spatial configuration of the mixed visual system, and is flexible in use.

Owner:福建旗山湖医疗科技有限公司

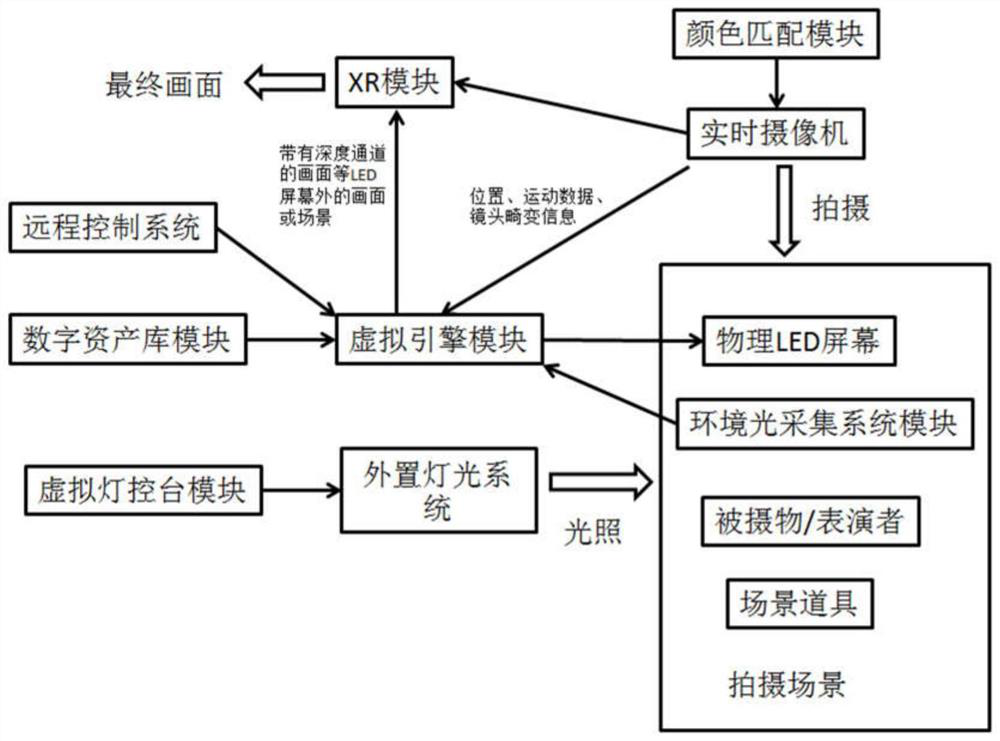

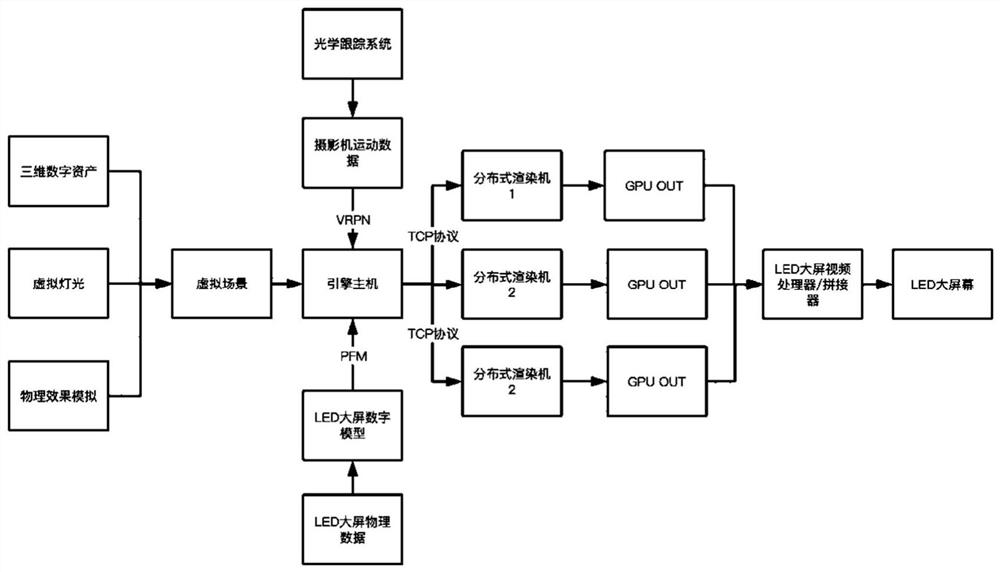

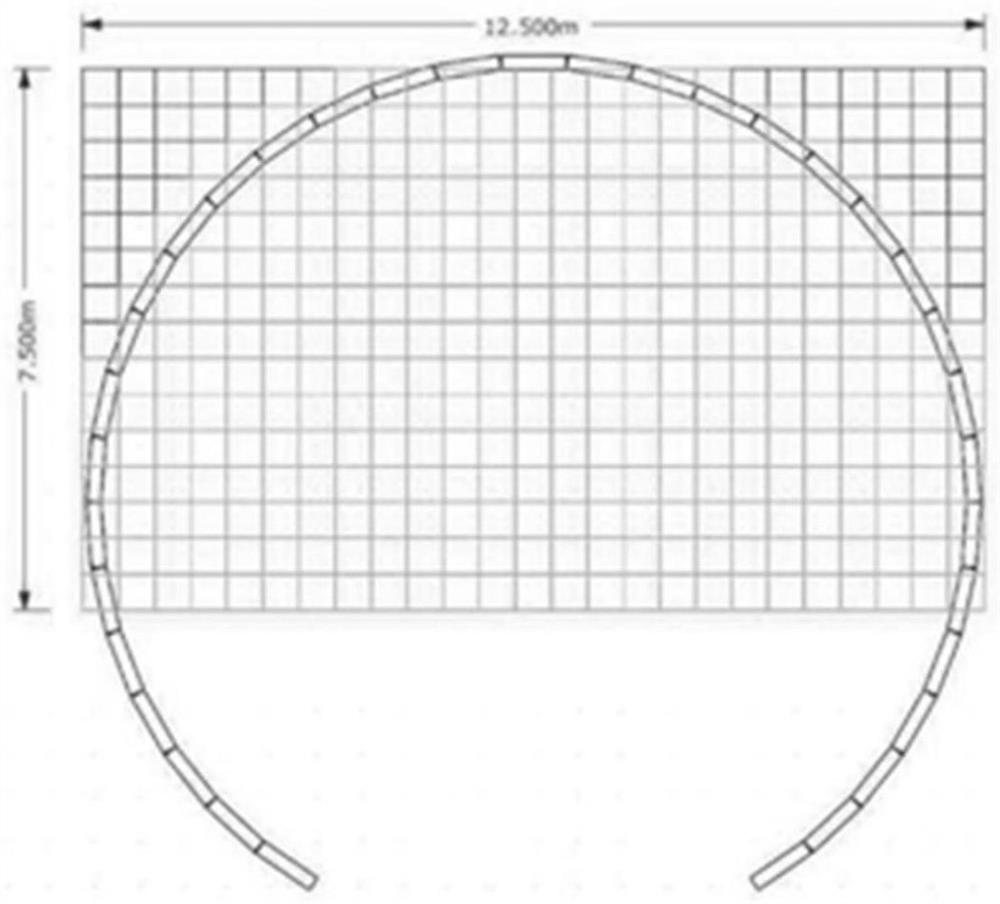

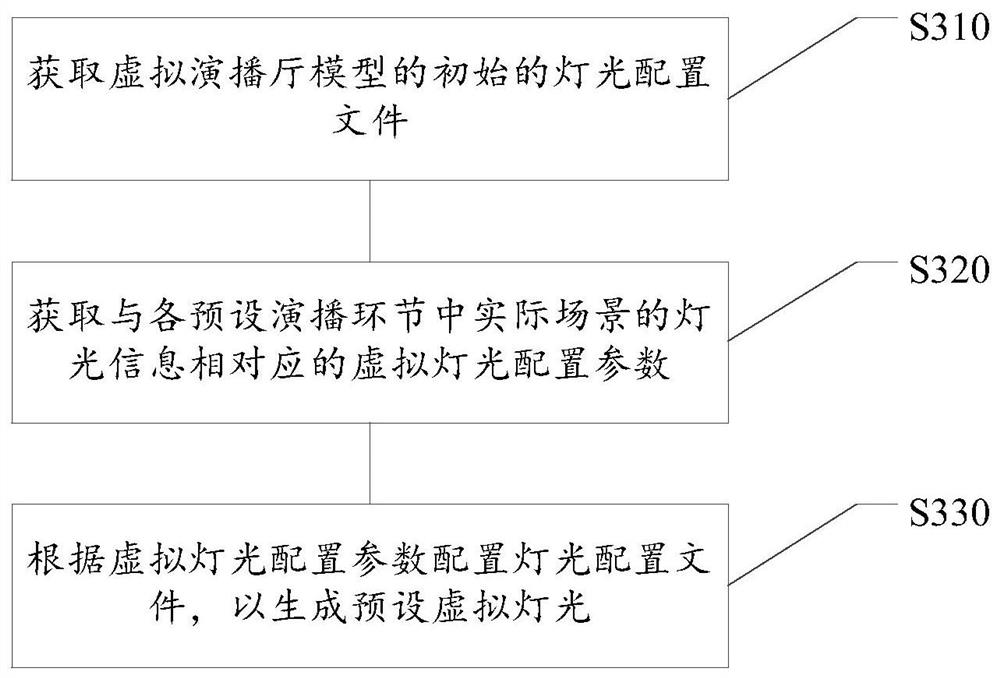

Real-time virtual scene LED shooting system and method

Active · CN112040092A · See the full effect in real time · Easy to correct · Television system details · Color television details · Virtual screen · Engineering

The invention discloses a real-time virtual scene LED shooting system and method, and belongs to the field of movie and television shooting. According to the invention, digital assets are called to construct the virtual scene according to the content to be presented in the shot, the virtual LED screen and the virtual camera are reconstructed in a virtual engine module, and the real environment illumination information in the photo studio is synchronized to the virtual engine in real time. Distributed real-time rendering is performed on the virtual scene through the virtual engine module and the virtual scene is displayed on a virtual LED screen; the virtual engine further overlaps a picture with a depth channel rendered by the virtual engine in real time according to the position of the real-time camera and lens distortion information outside the virtual LED screen. The physical LED screen displays the virtual LED screen, the real-time camera completes shooting, the XR module obtains the picture with the depth channel and the picture shot by the real-time camera, and a final picture is obtained through synthesis. The method can replace green screen keying to achieve the effect of direct film formation in most environments, optimize the film production process, and save the cost of complex visual special effects.

Owner:浙江时光坐标科技股份有限公司

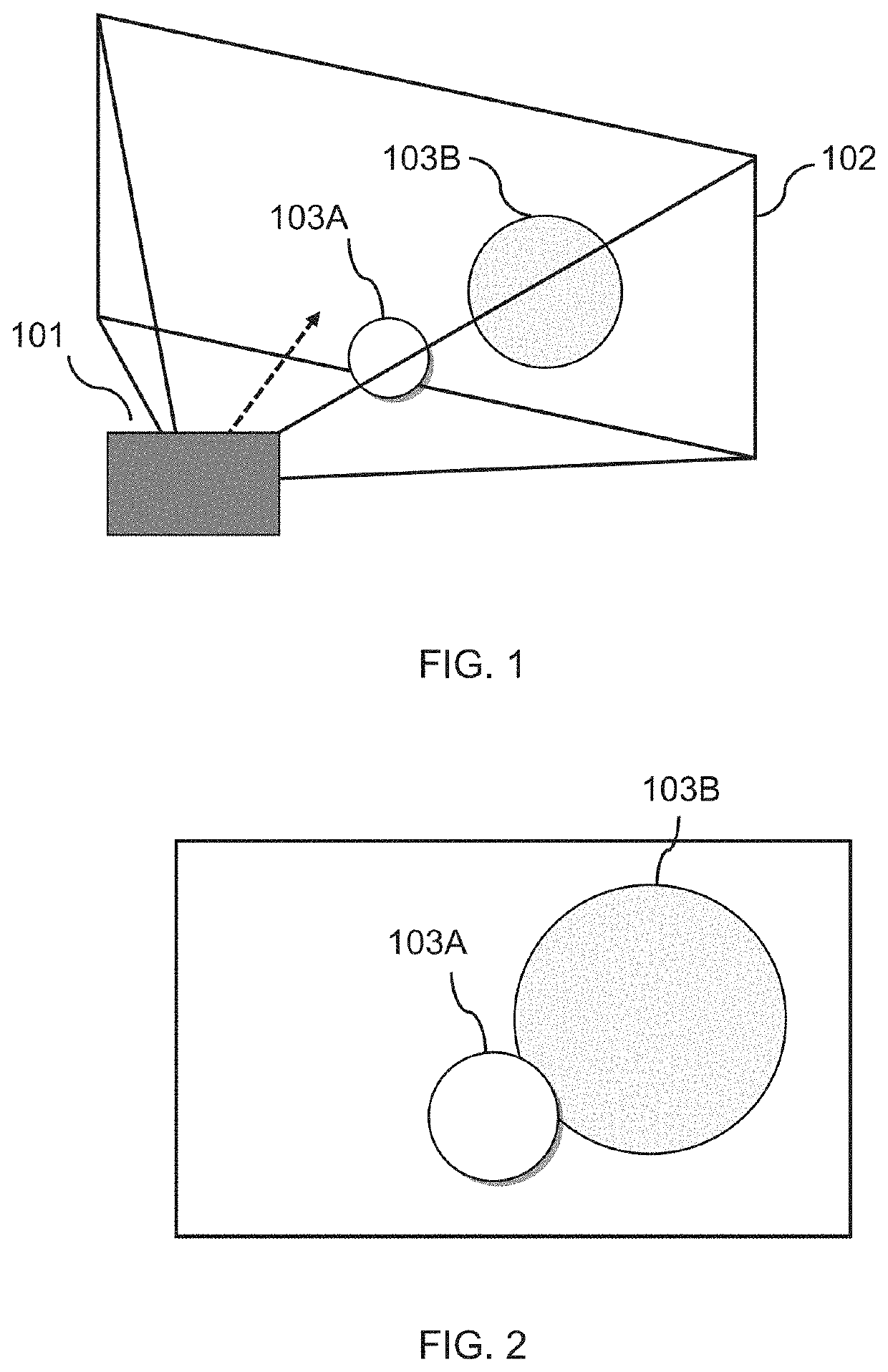

Method and system for generating an image

A method of generating an image includes receiving a video stream, the video stream having a two-dimensional video of a three-dimensional scene captured by a video camera; determining a mapping between locations in the two-dimensional video of the scene and locations in a three-dimensional representation of the scene, the mapping being determined based on a known parameter of the video camera and a known size of a feature in the three-dimensional scene; generating a three-dimensional graphical representation of the scene based on the determined mapping; determining a virtual camera angle from which the three-dimensional graphical representation of the scene is to be viewed; rendering an image corresponding to the graphical representation of the scene viewed from the determined virtual camera angle, and outputting the rendered image for display.

Owner:SONY COMPUTER ENTERTAINMENT INC

Cartoon expression based auxiliary entertainment system for video chatting

Inactive · CN102455898A · Increase the fun of chatting · Increase entertainment · 2D-image generation · Character and pattern recognition · Virtual camera · Anger

The invention relates to an auxiliary entertainment system for video chatting. The system can add cartoon expression pictures to the video images of a user in a video chat in real time, making the chat more enjoyable. Using pattern recognition technology, the system locates various body parts of the user in the video, such as the face and fingers, and draws cartoonized expression patterns on them; a specific expression pattern can also be drawn at an appointed position of the user in the video picture. The patterns can change according to emotions selected by the user, such as pleasure, anger, sorrow and joy, or according to the user's expressions and actions as recognized automatically by pattern recognition. The system is also compatible with expression patterns in the general formats used in text chatting software, and can draw them in the video according to user settings. All the cartoon drawing is completed in real time, and the modified video stream is provided, in the form of a virtual camera, to any video chatting system used by the user.

Owner:张明

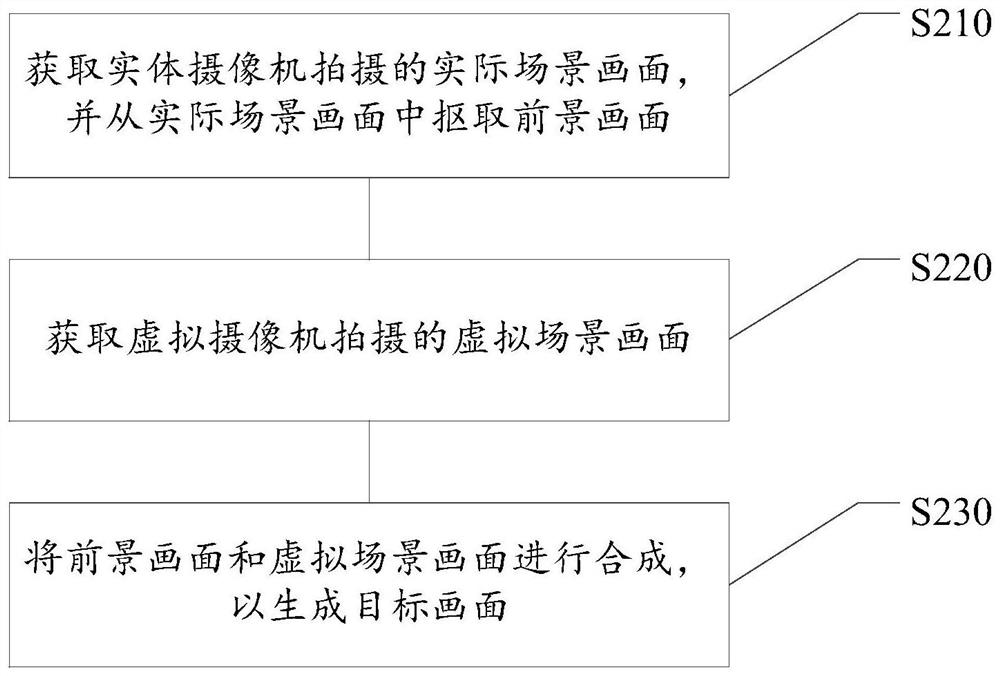

Control method and device of virtual camera in virtual studio, implementation method of virtual studio, virtual studio system, computer readable storage medium and electronic equipment

Active · CN111698390A · Flexible and simple operation control · Improve realism · Television system details · Color television details · Engineering · Virtual cinematography

The invention relates to the technical field of computers, and provides a control method and device of a virtual camera in a virtual studio, an implementation method of the virtual studio, a virtual studio system, a computer readable storage medium and electronic equipment. The control method of the virtual camera in the virtual studio comprises the steps of: obtaining preset parameters of the virtual camera in different preset studio links, wherein the preset parameters comprise at least one of the position, the posture and the focal length; generating a corresponding lens moving control according to each preset parameter; and in response to a triggering operation on any lens moving control, adjusting the virtual camera according to the preset parameter corresponding to that lens moving control. According to the scheme, based on the generated lens moving controls, the virtual camera can shoot the virtual background pictures corresponding to the different preset studio links, so that the virtual background pictures and the actual pictures shot by the physical camera can be better fused, and the realism of the broadcast pictures is improved.

Owner:NETEASE (HANGZHOU) NETWORK CO LTD

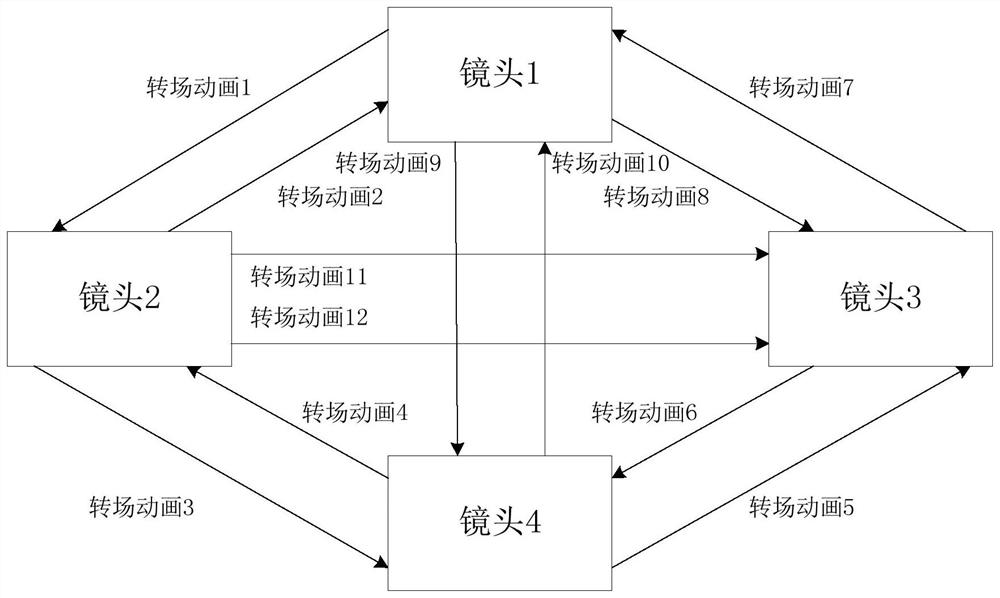

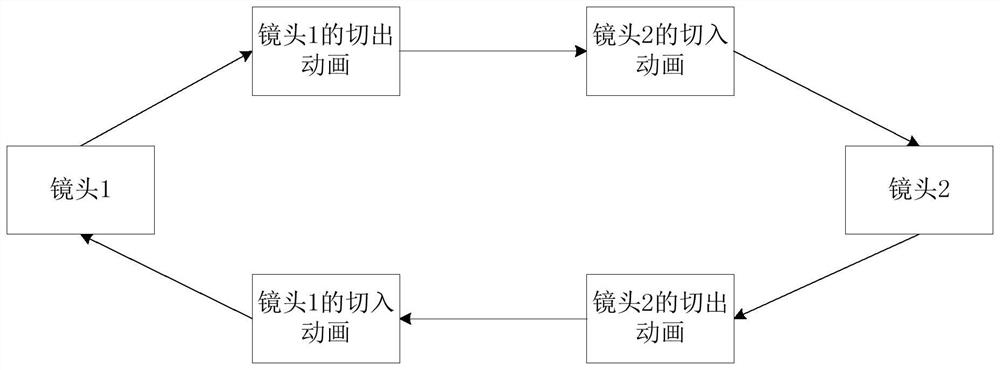

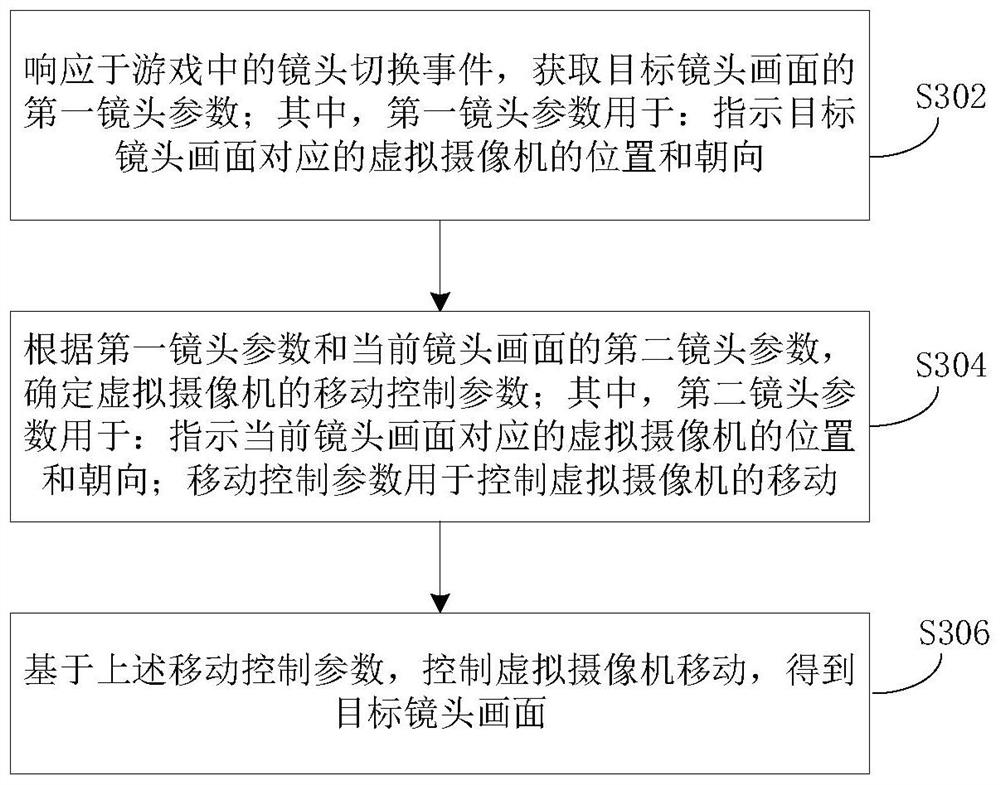

Lens switching method and device in game, and electronic equipment

Pending · CN111803946A · Smooth switching · Improve game visual experience · Video games · Ophthalmology · Game player

The invention provides a lens switching method and device in a game, and electronic equipment. The method comprises the steps of: obtaining a first lens parameter of a target lens picture in response to a lens switching event in a game, wherein the first lens parameter indicates the position and orientation of a virtual camera corresponding to the target lens picture; determining a movement control parameter of the virtual camera according to the first lens parameter and a second lens parameter of a current lens picture, wherein the second lens parameter indicates the position and orientation of the virtual camera corresponding to the current lens picture, and the movement control parameter is used for controlling movement of the virtual camera; and controlling the virtual camera to move based on the movement control parameter to obtain the target lens picture. According to the method, the virtual camera is controlled to move based on the lens parameters, and lens switching is achieved as a single continuous shot, so no transition animation needs to be produced. Only the lens parameters of the lenses need to be set and maintained, the maintenance cost is low, lens switching is smooth, and the visual experience of game players is improved.

Owner:NETEASE (HANGZHOU) NETWORK CO LTD
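
As a rough illustration of moving a virtual camera from the current lens parameters to the target lens parameters without a pre-made transition animation, the sketch below interpolates position linearly and yaw along the shortest rotation, with smoothstep easing. The representation of a lens parameter as a position tuple plus a yaw angle, and all function names, are assumptions rather than the patent's movement control parameter.

```python
def shortest_angle_delta(a_deg, b_deg):
    """Signed smallest rotation (degrees) that takes heading a to heading b."""
    return (b_deg - a_deg + 180.0) % 360.0 - 180.0


def smoothstep(t):
    """Ease-in/ease-out curve on [0, 1]."""
    return t * t * (3.0 - 2.0 * t)


def move_camera(cur_pos, cur_yaw, tgt_pos, tgt_yaw, steps):
    """Return (position, yaw) per step, from the current lens to the target lens."""
    if steps < 2:
        raise ValueError("need at least two steps")
    delta = shortest_angle_delta(cur_yaw, tgt_yaw)
    frames = []
    for i in range(steps):
        s = smoothstep(i / (steps - 1))
        pos = tuple(c + (t - c) * s for c, t in zip(cur_pos, tgt_pos))
        yaw = (cur_yaw + delta * s) % 360.0
        frames.append((pos, yaw))
    return frames


# Hypothetical lens parameters: turn from heading 350 to heading 10 while moving.
frames = move_camera((0.0, 0.0, 5.0), 350.0, (10.0, 0.0, 5.0), 10.0, steps=3)
```

Interpolating along the shortest rotation keeps the camera from spinning the long way round when the headings straddle 0 degrees.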

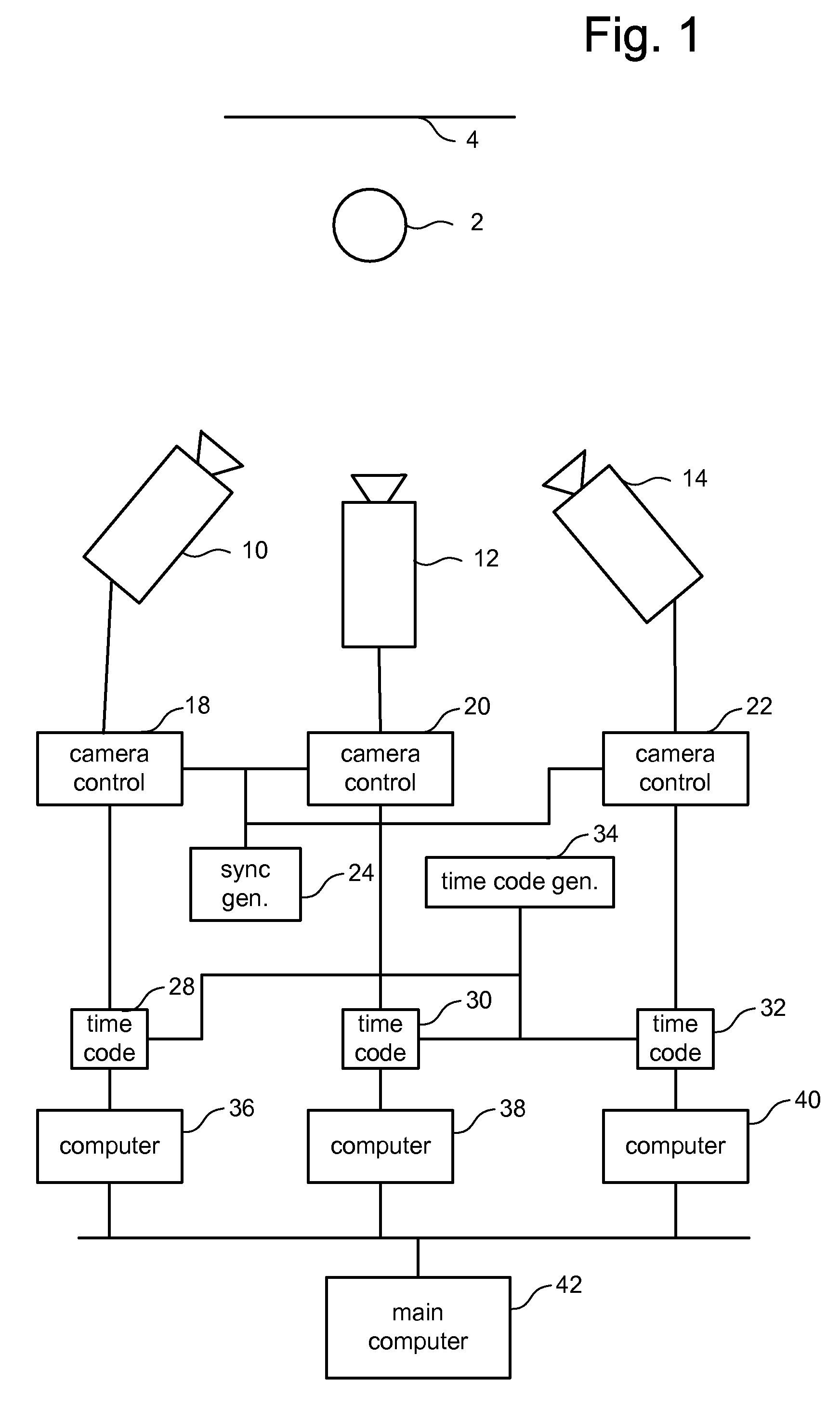

Foreground detection

Active · US20070285575A1 · Reduce errors · Shorten the time · Image enhancement · Television system details · Image warping · Foreground detection

A system is disclosed that can find an image of a foreground object in a still image or video image. Finding the image of the foreground object can be used to reduce errors and reduce the time needed when creating morphs of an image. One implementation uses the detection of the image of the foreground object to create virtual camera movement, which is the illusion that a camera is moving around a scene that is frozen in time.

Owner:SPORTSMEDIA TECH CORP

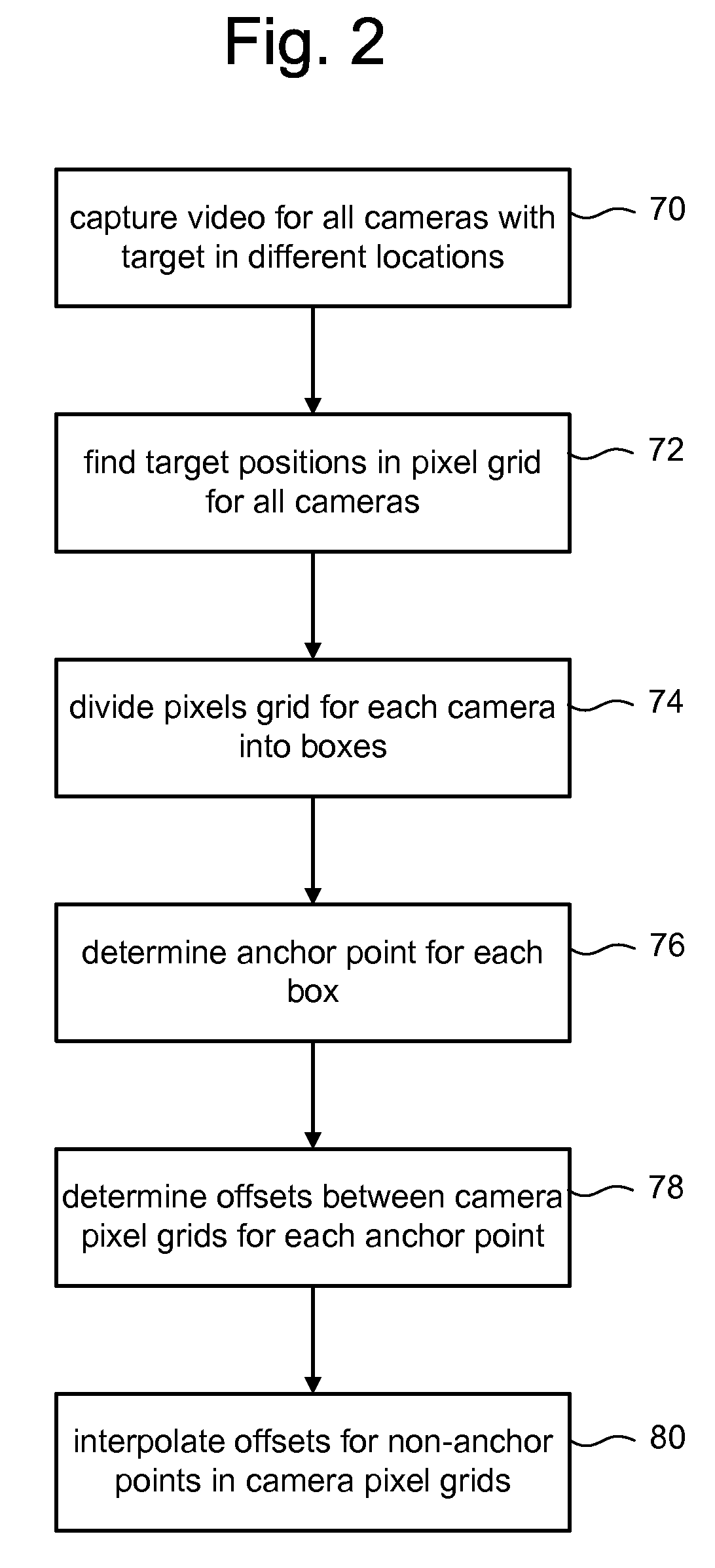

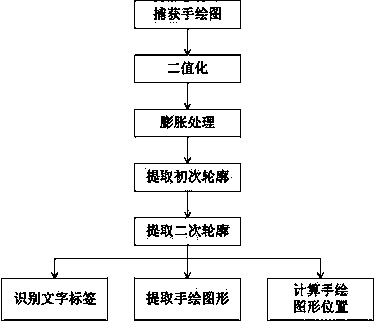

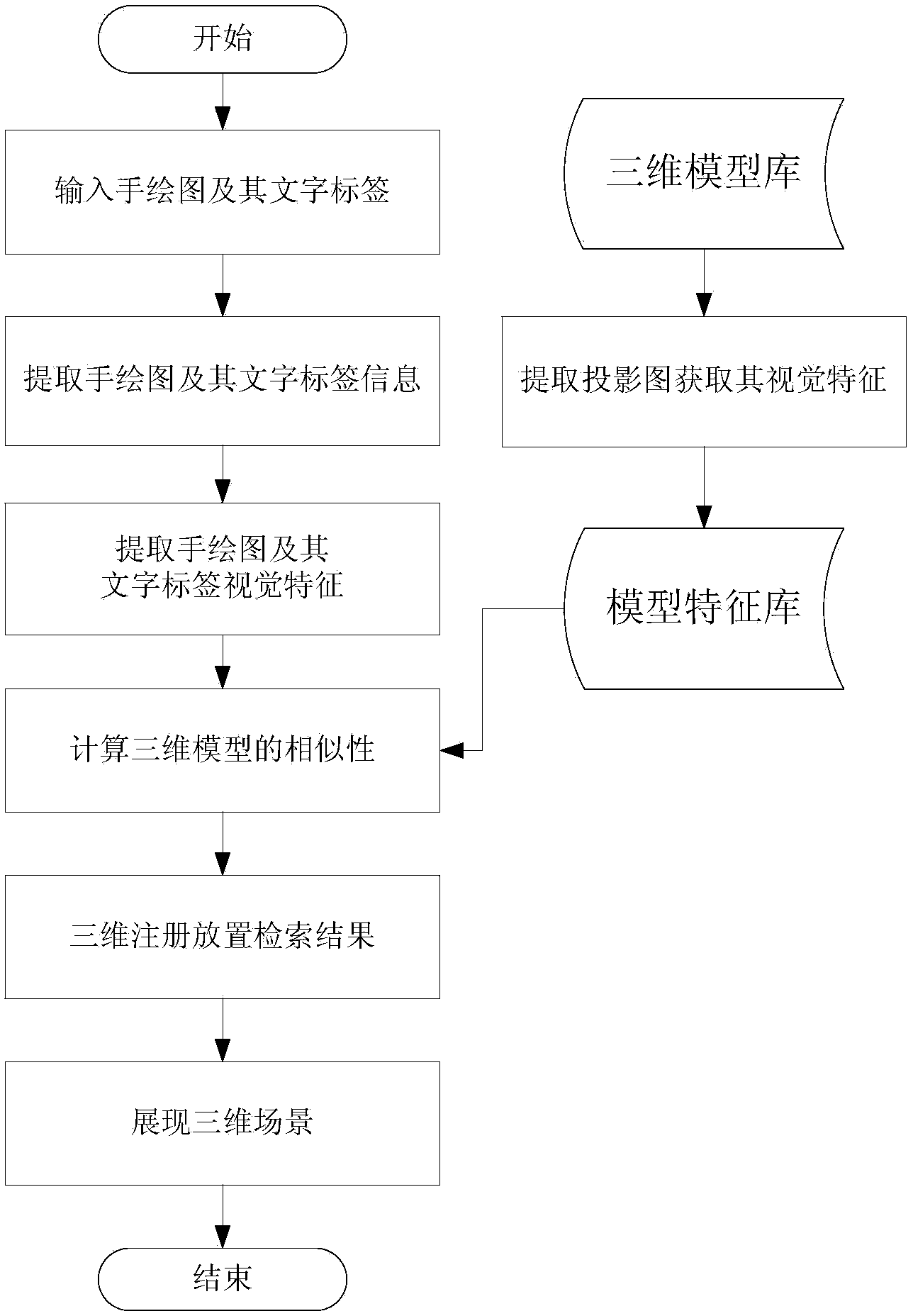

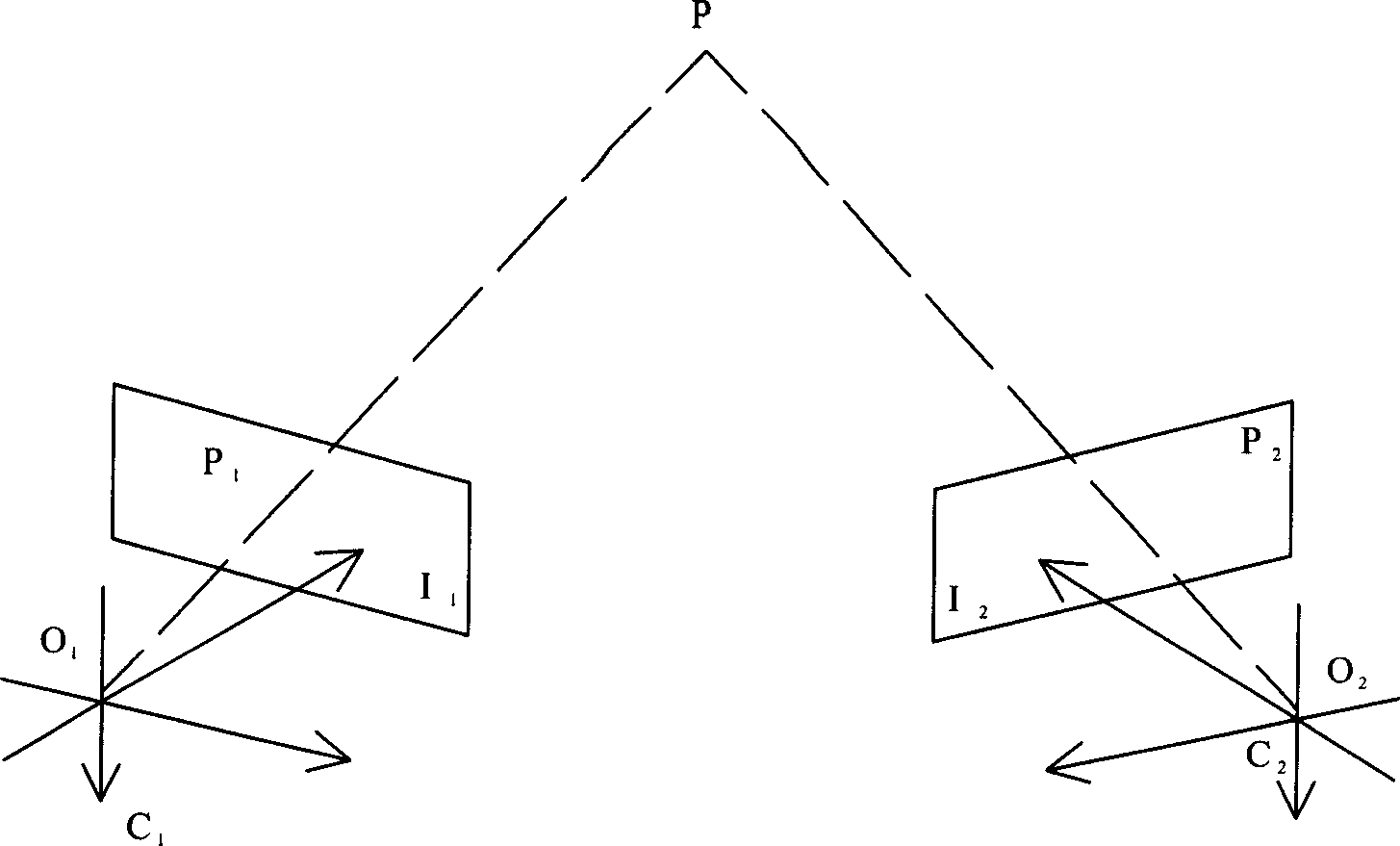

Hand-drawn scene three-dimensional modeling method combining multi-perspective projection with three-dimensional registration

Inactive · CN103729885A · Achieve retrieval · High precision · Special data processing applications · 3D modelling · Dimensional modeling · Virtual camera

The invention provides a hand-drawn scene three-dimensional modeling method combining multi-perspective projection with three-dimensional registration. The method comprises the following steps. Standardized preprocessing is performed on all three-dimensional models in a three-dimensional model base; virtual cameras are arranged at the vertexes of a regular polyhedron; projection pictures of each three-dimensional model are shot from all angles to represent its visual shape; visual features of all the projection pictures of each model are extracted; and a three-dimensional model feature base is established from the visual features. Users draw by hand two-dimensional pictures of each three-dimensional model of the three-dimensional scene to be shown, together with character labels for the drawings; images are shot through cameras; the image regions are processed; visual features of the hand-drawn pictures are extracted; the processed character label regions serve as retrieval key words; similarity is calculated between the visual features of the hand-drawn pictures and the model features in the feature base; and retrieval yields the three-dimensional models of the scene. The models with the largest similarity are projected to the corresponding positions through a three-dimensional registration algorithm, thereby achieving three-dimensional modeling and display of the hand-drawn scene.

Owner:BEIJING UNIV OF POSTS & TELECOMM

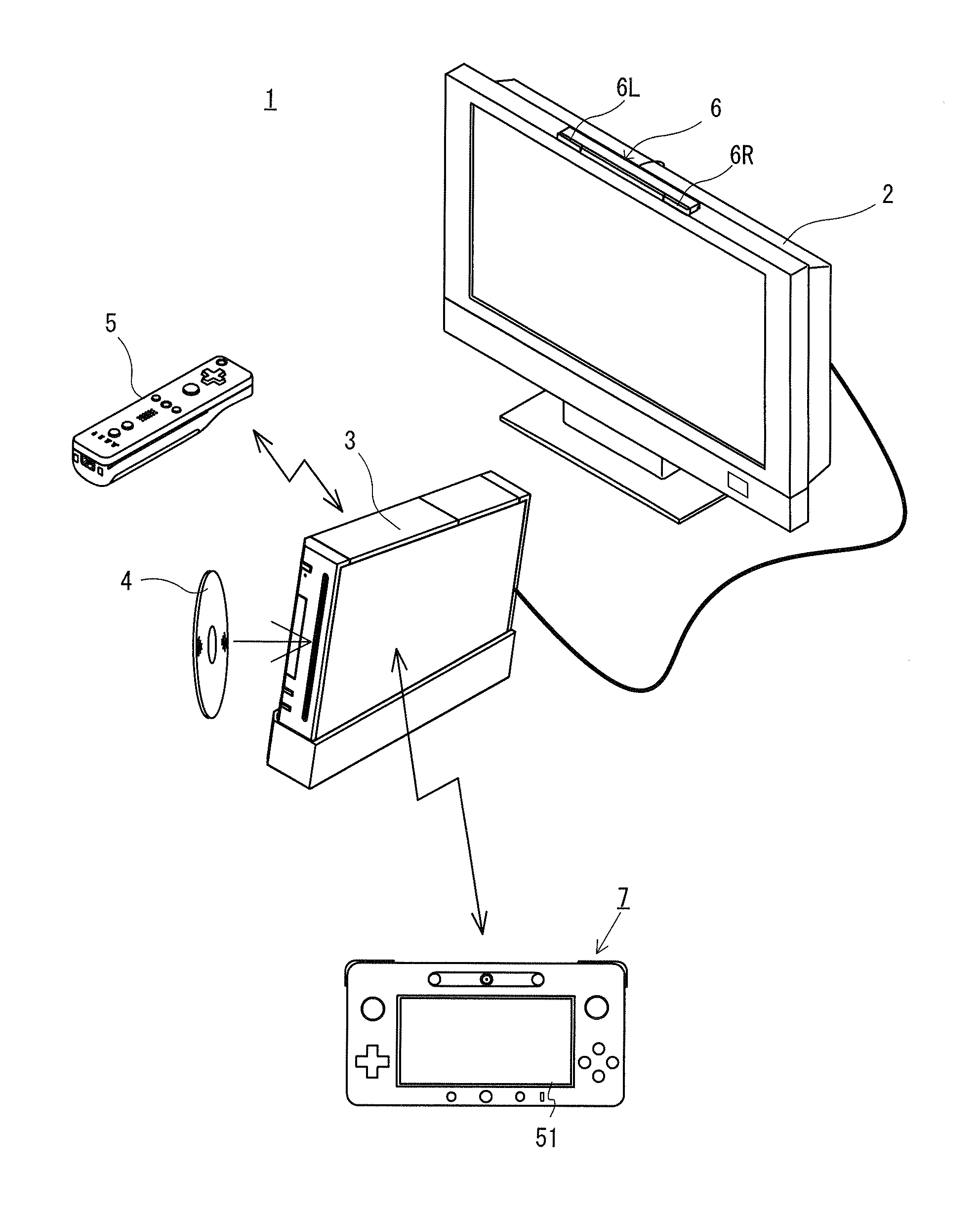

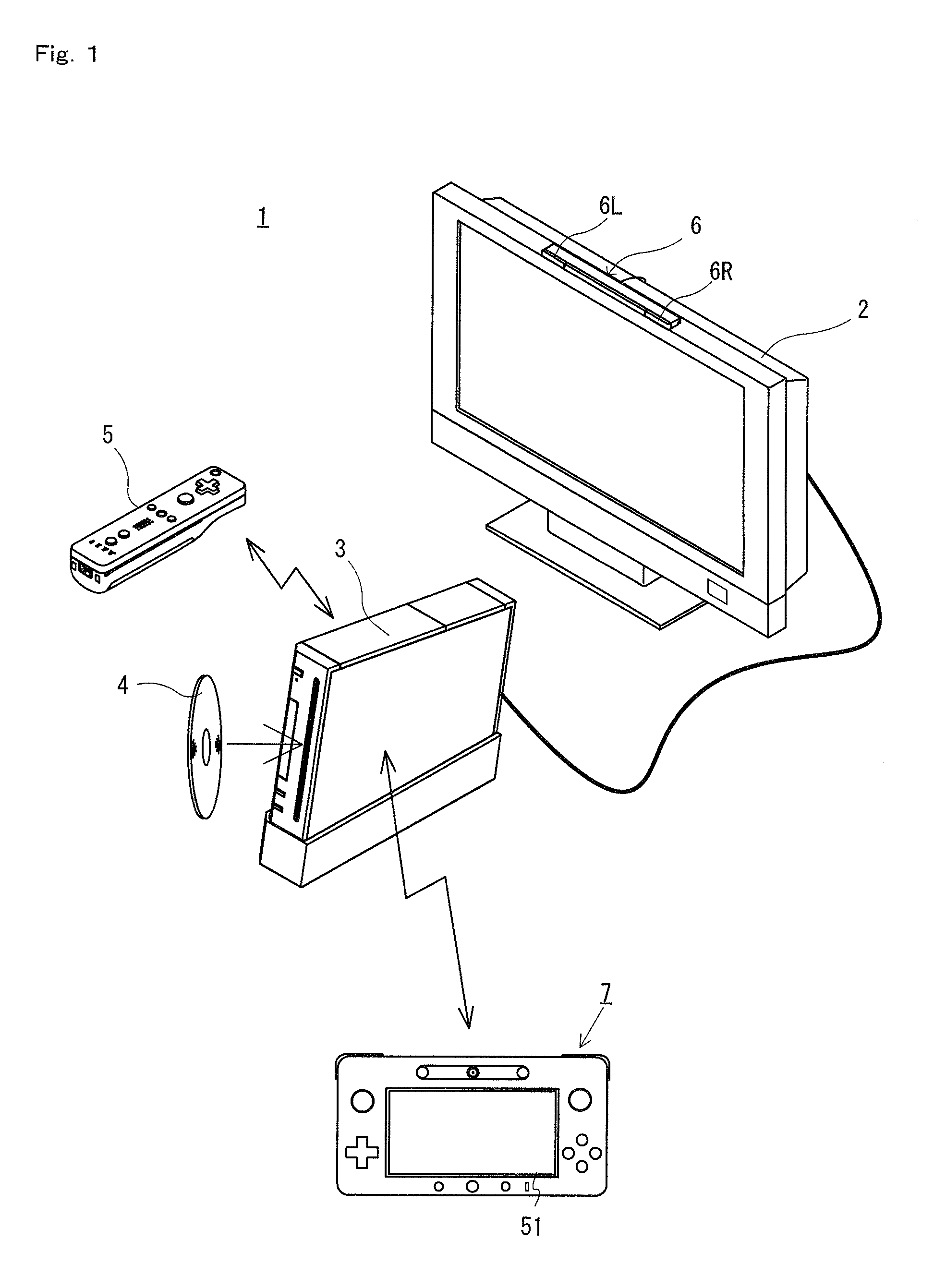

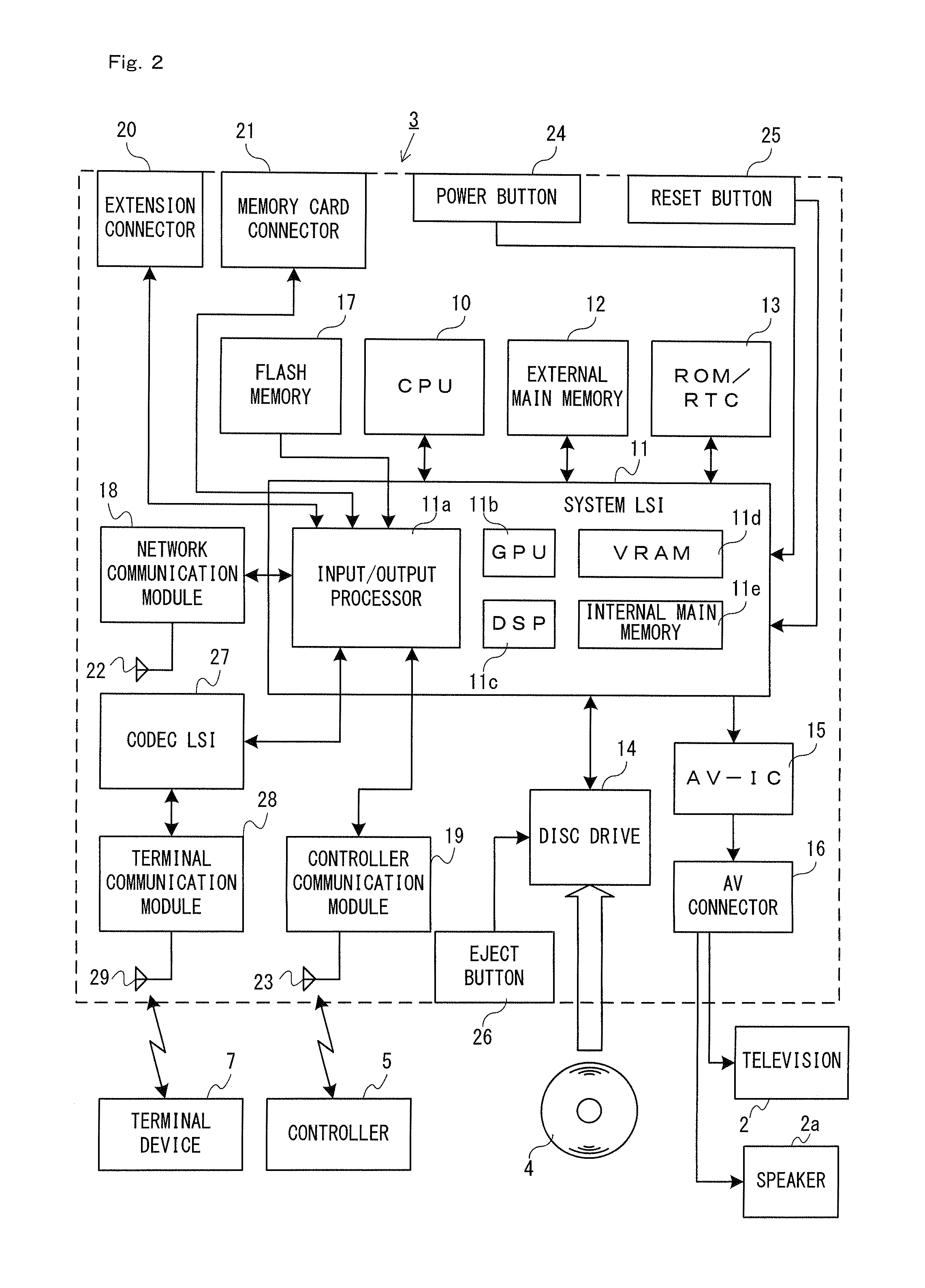

Game system, game device, storage medium storing game program, and game process method

Active · US20120165099A1 · Improve controllability · Simple and intuitive operation · Video games · Special data processing applications · Virtual space · Virtual camera

An example game system includes a controller device, and a game process section for performing a game process based on an operation on the controller device. The controller device includes a plurality of direction input sections, a sensor section for obtaining a physical quantity used for calculating an attitude of the controller device, and a display section for displaying a game image. The game process section first calculates the attitude of the controller device based on the physical quantity obtained by the sensor section. Then, the game process section controls an attitude of a virtual camera in a virtual space based on the attitude of the controller device, and controls a position of the virtual camera based on an input on the direction input section. A game image to be displayed on the display section is generated based on the position and the attitude of the virtual camera.

Owner:NINTENDO CO LTD

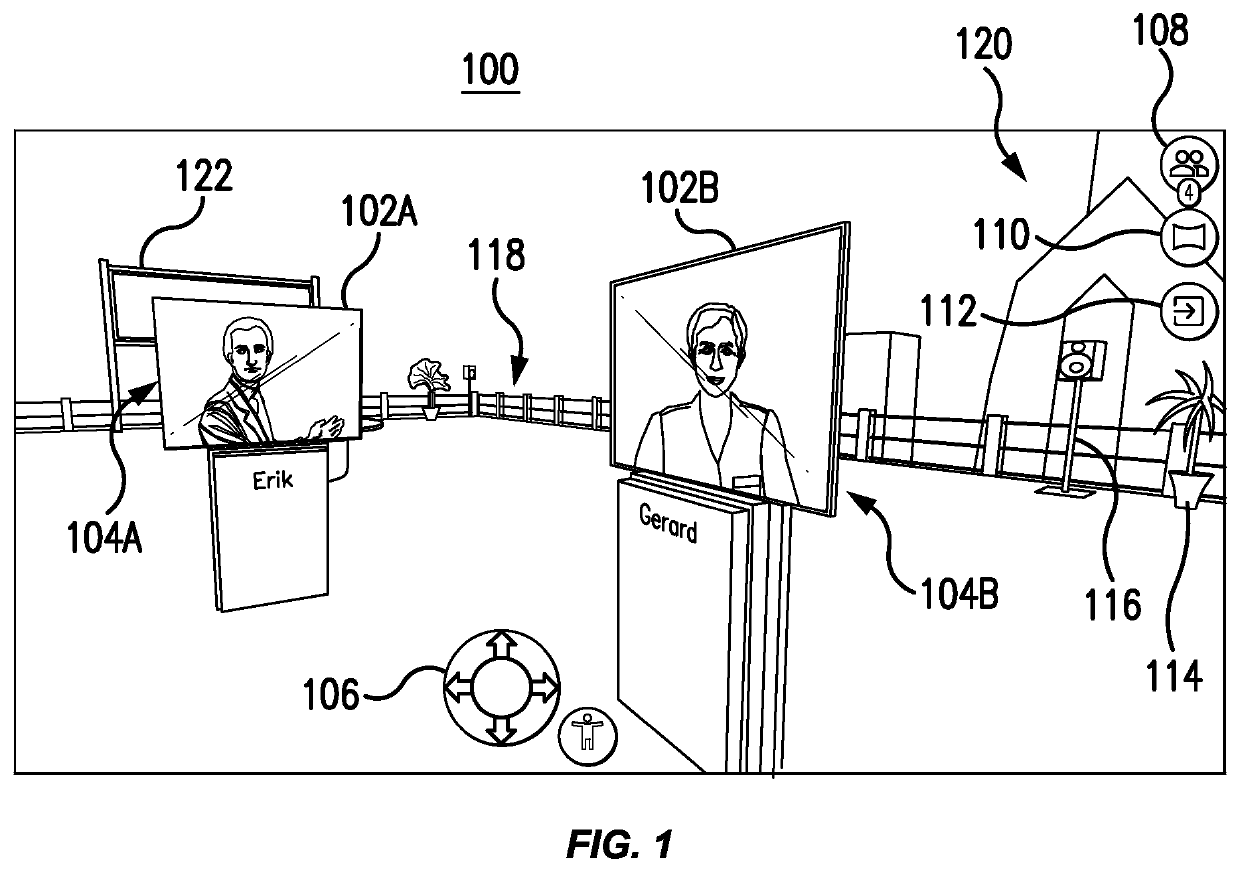

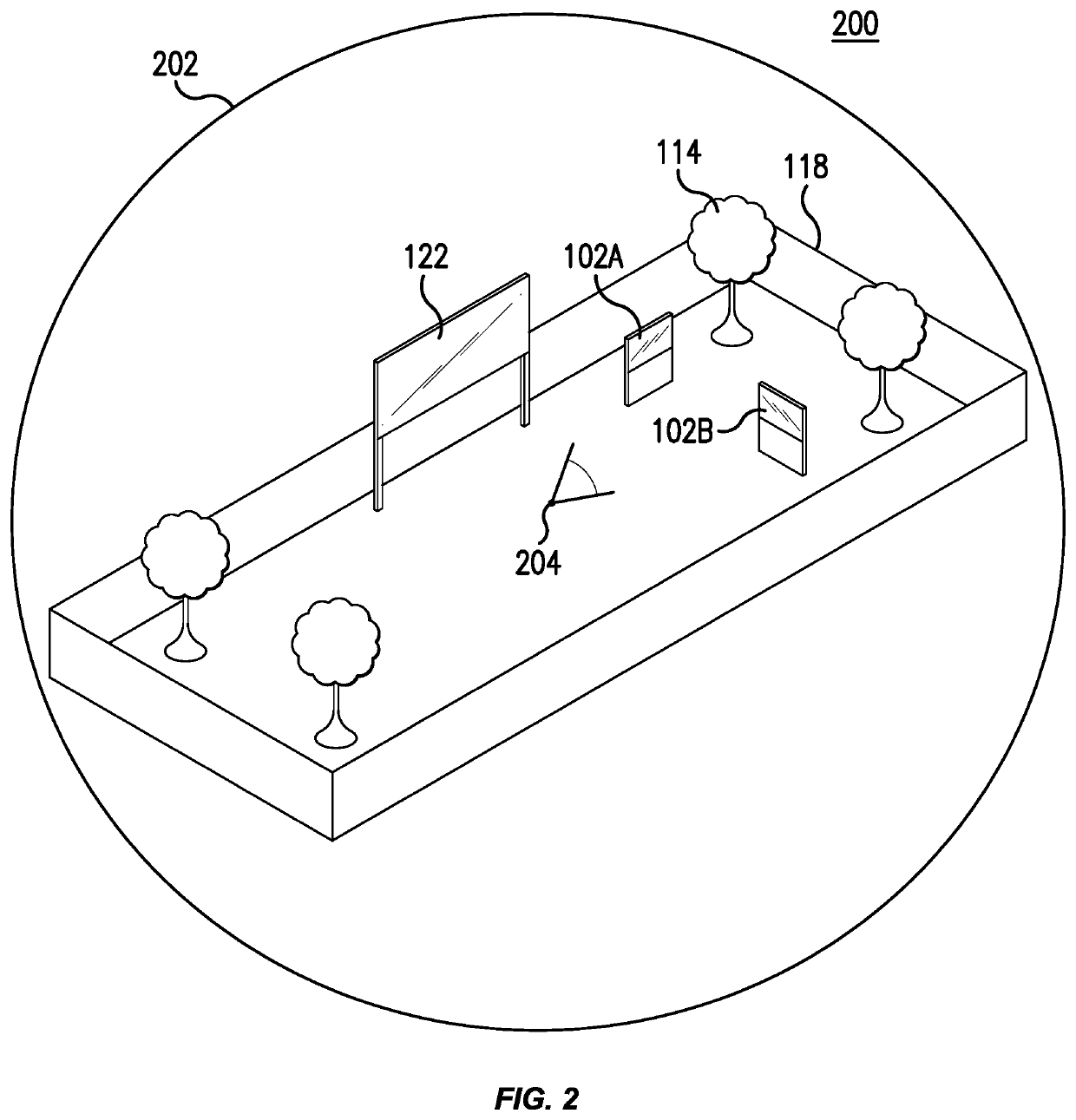

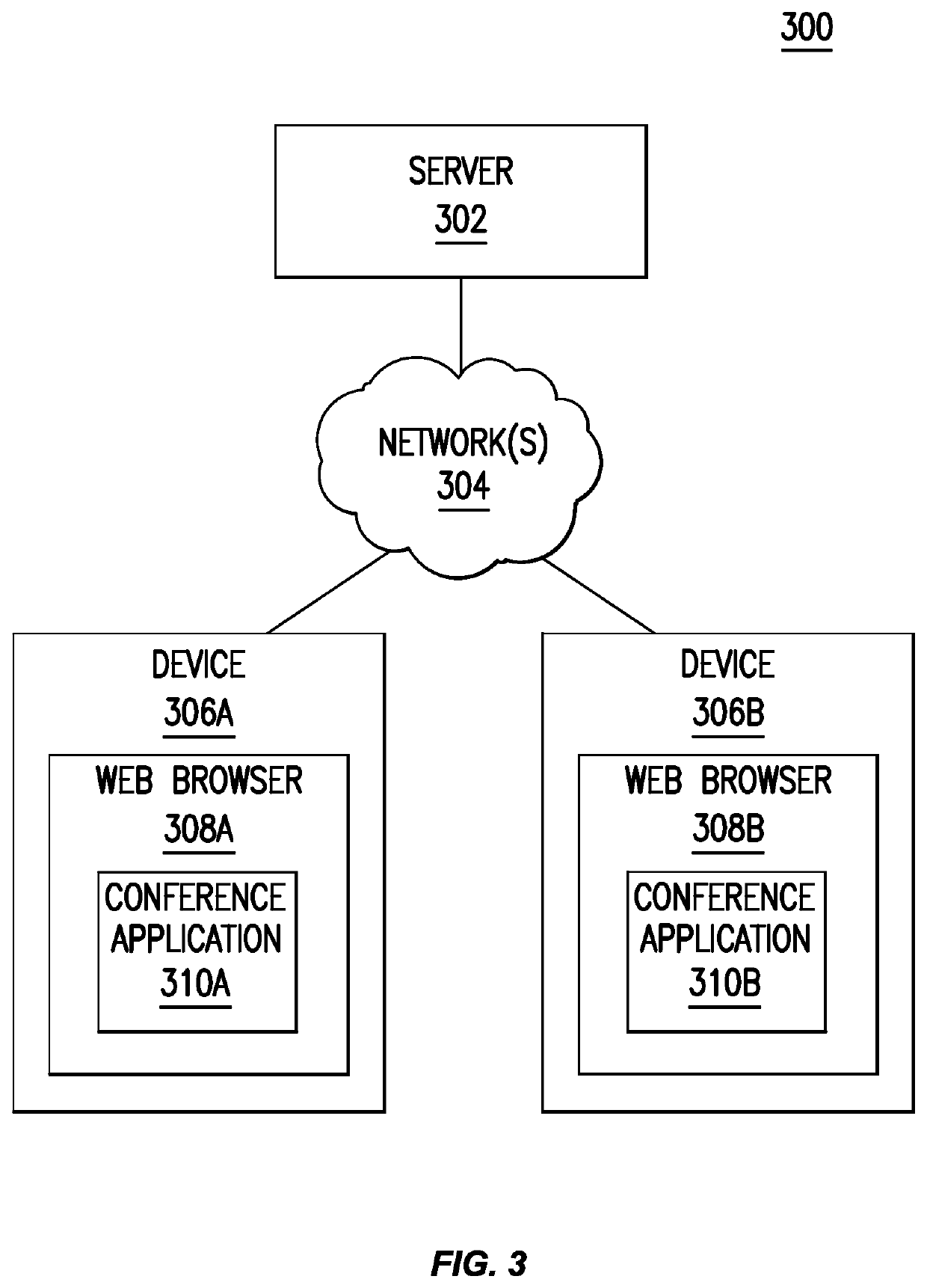

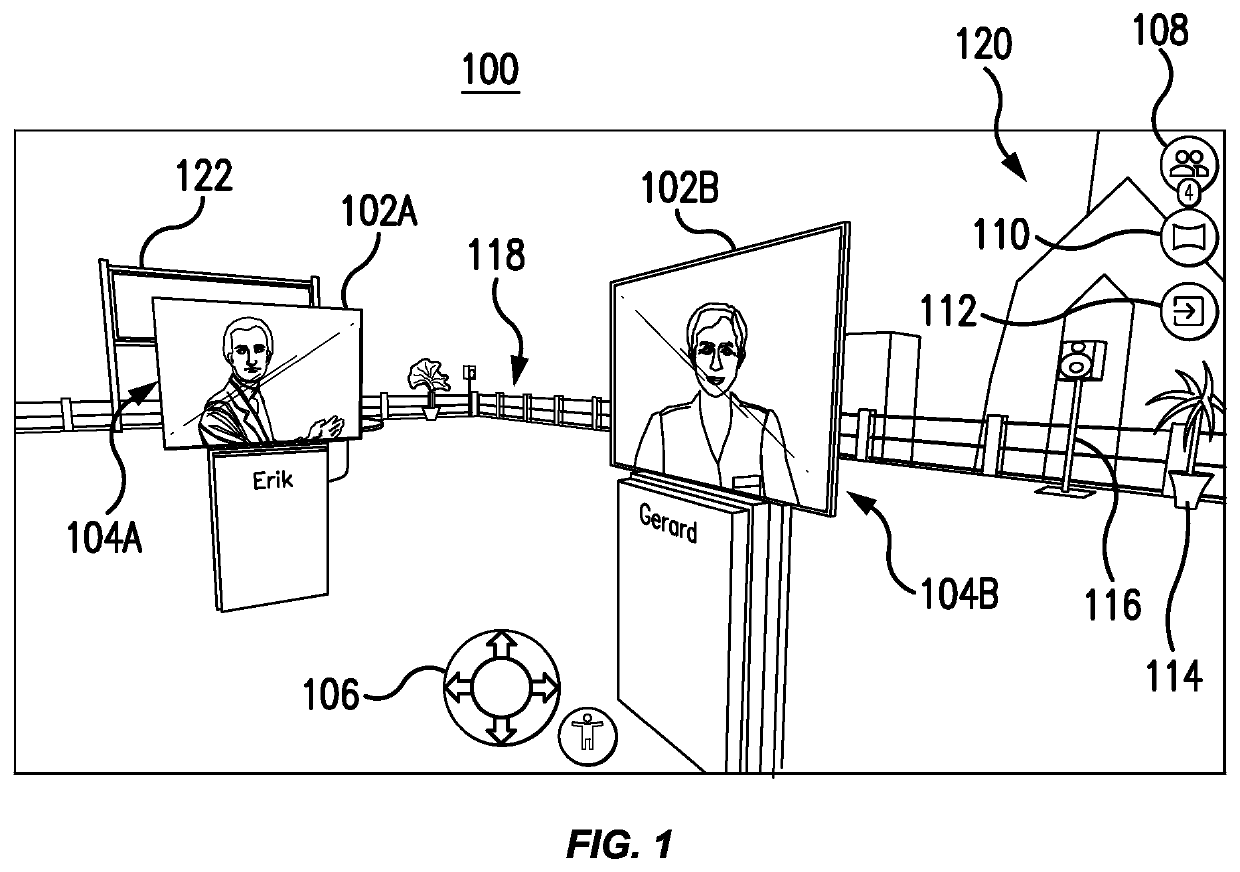

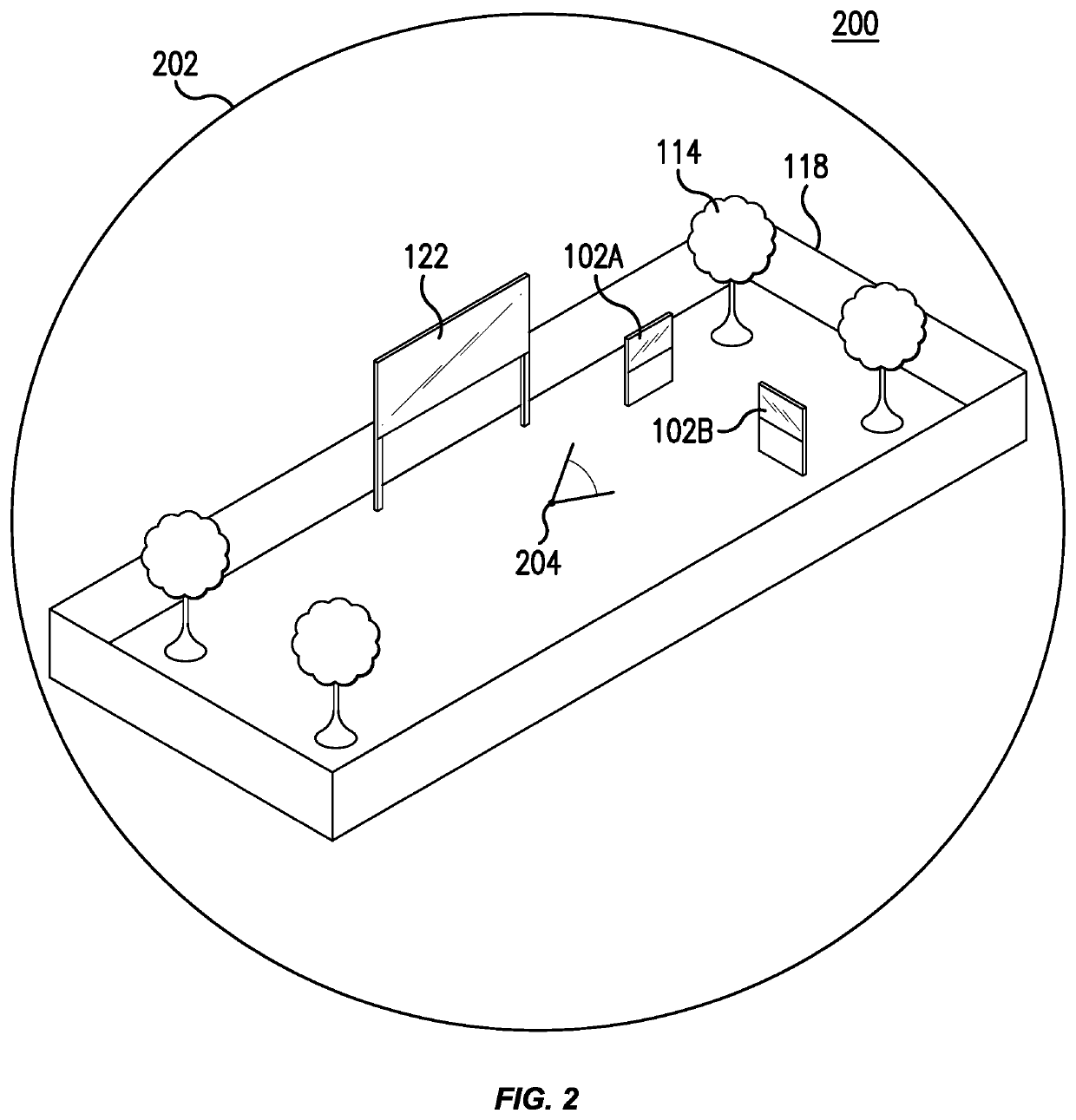

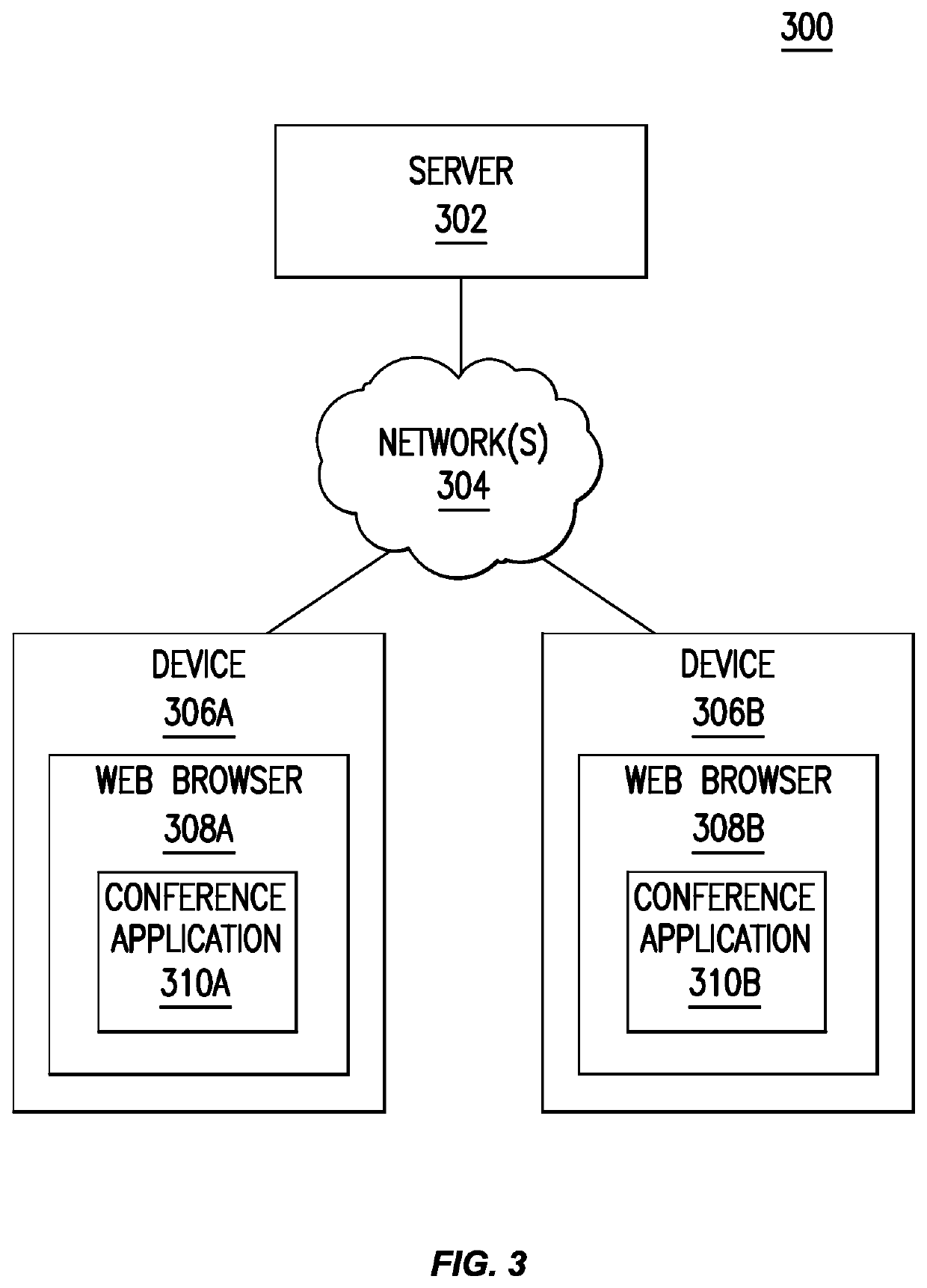

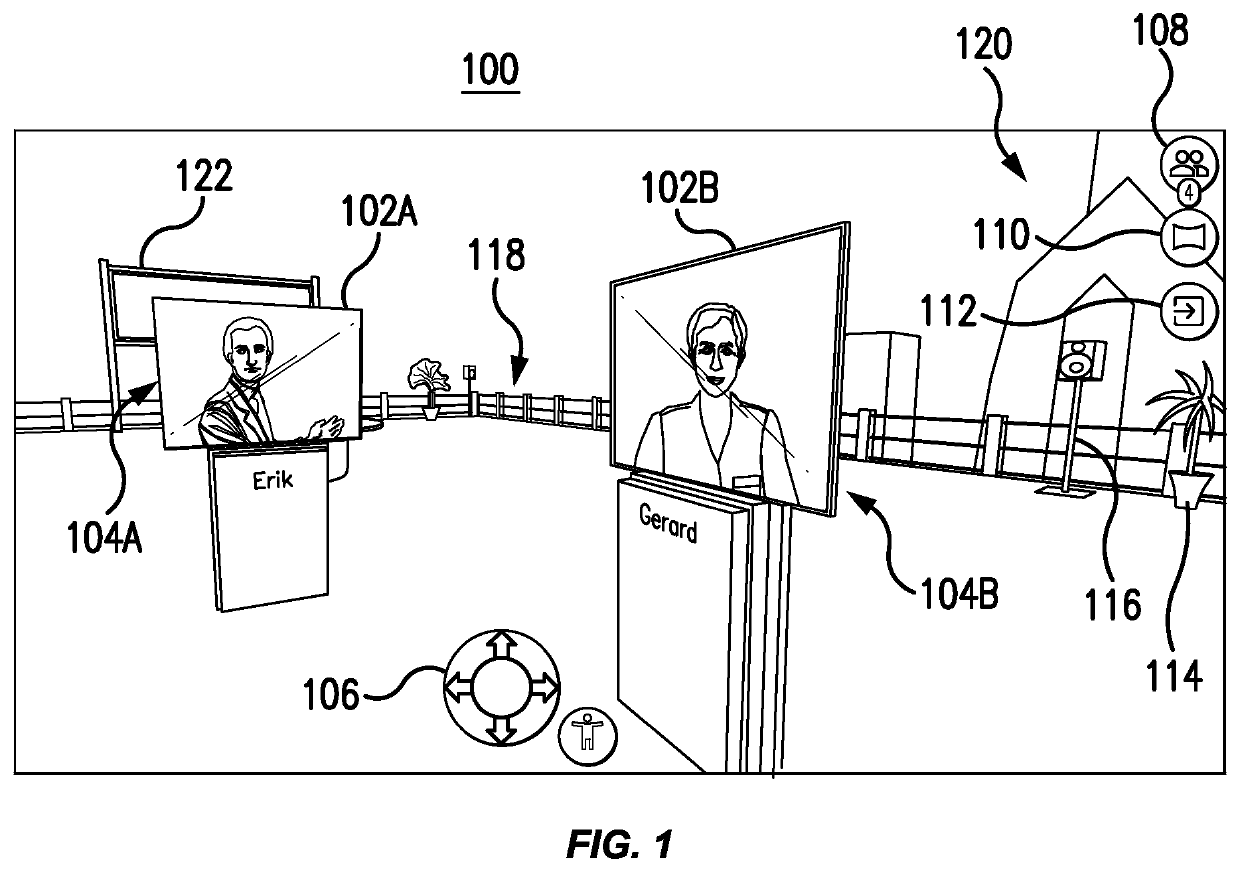

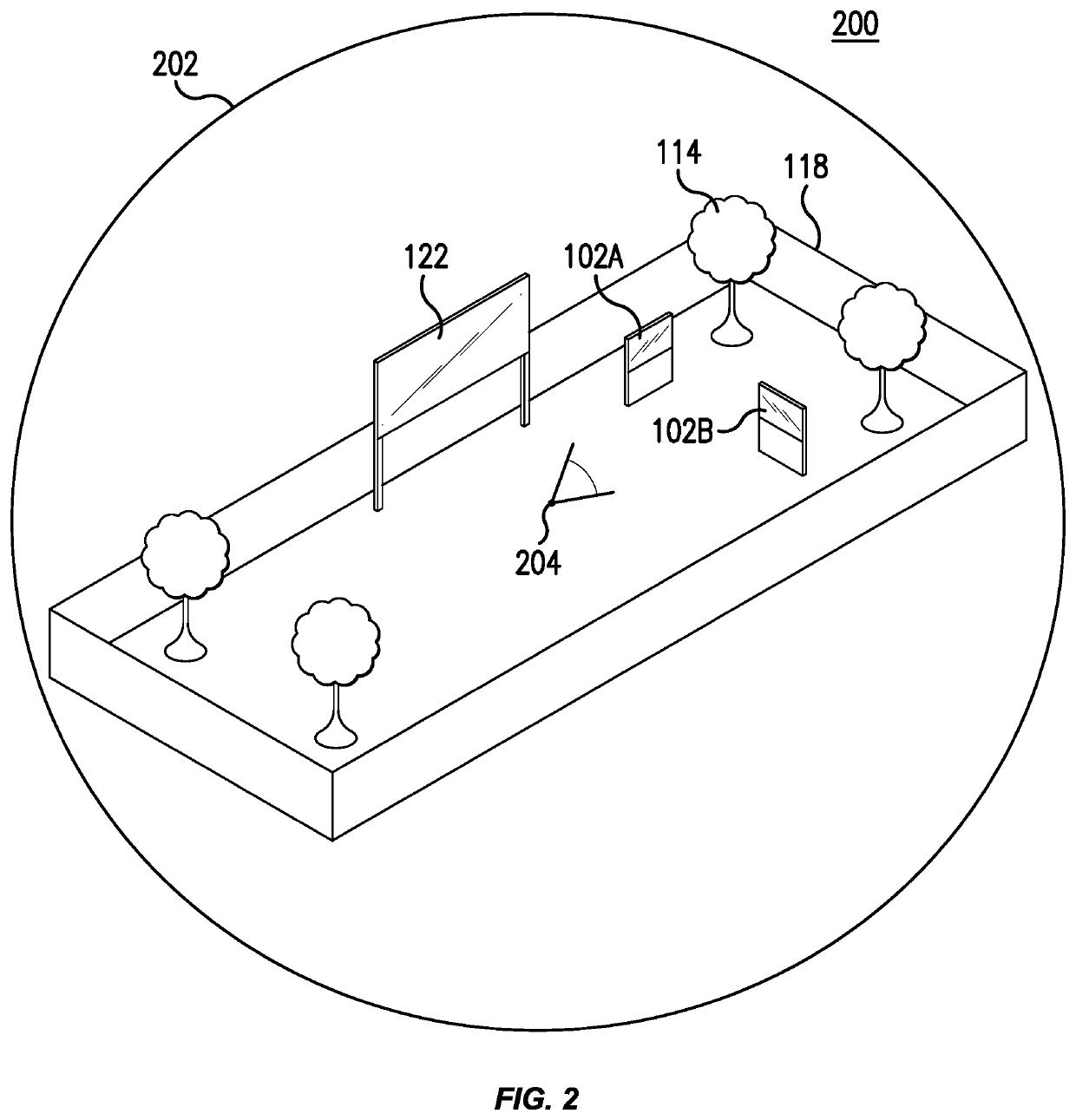

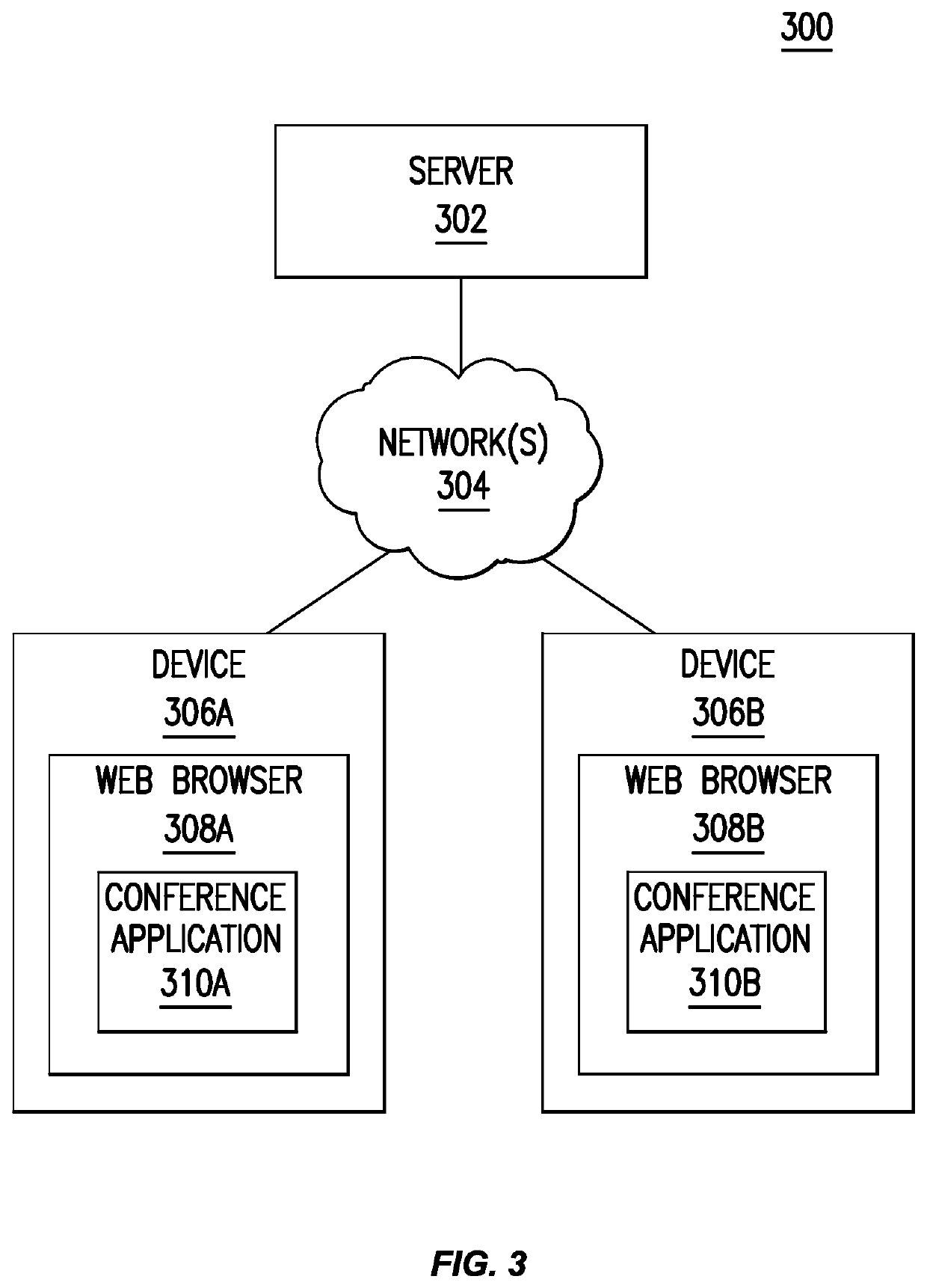

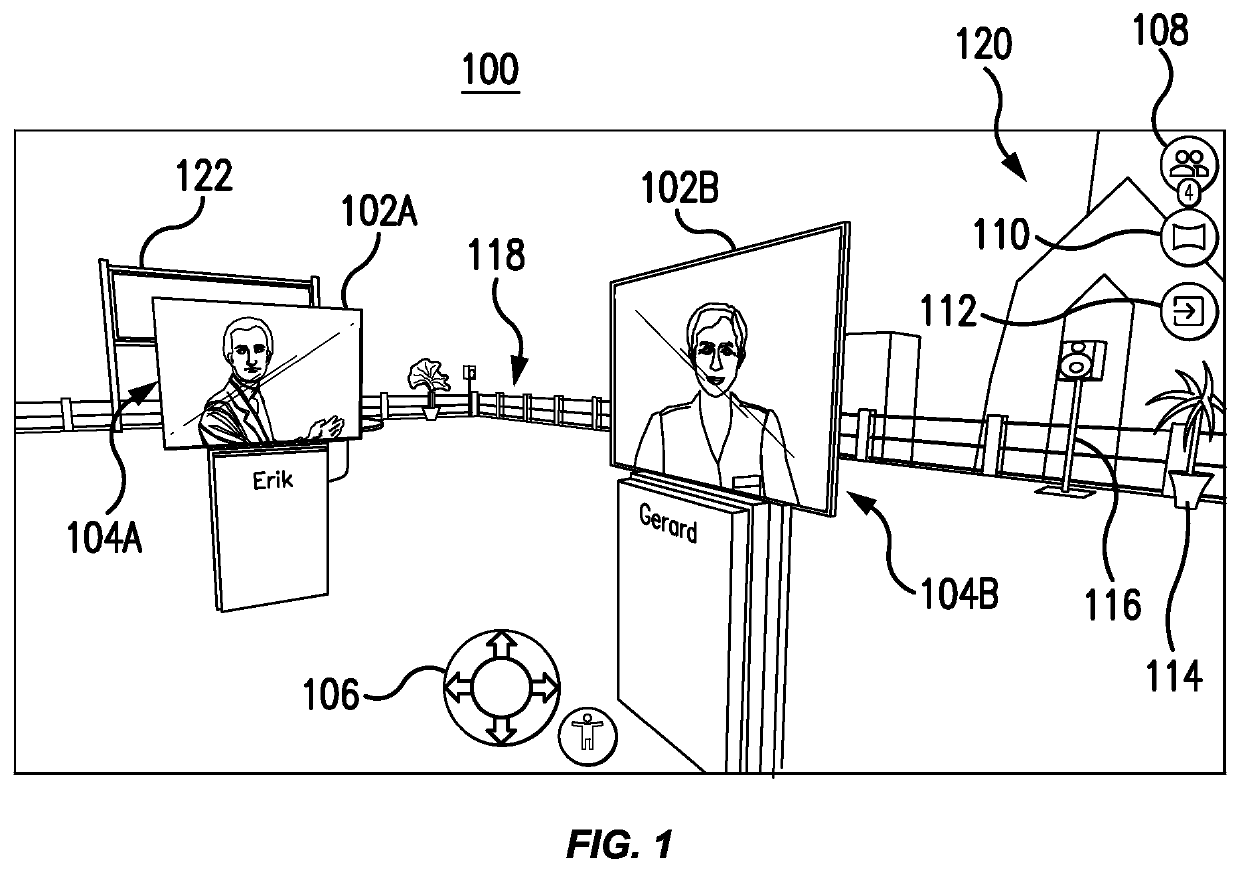

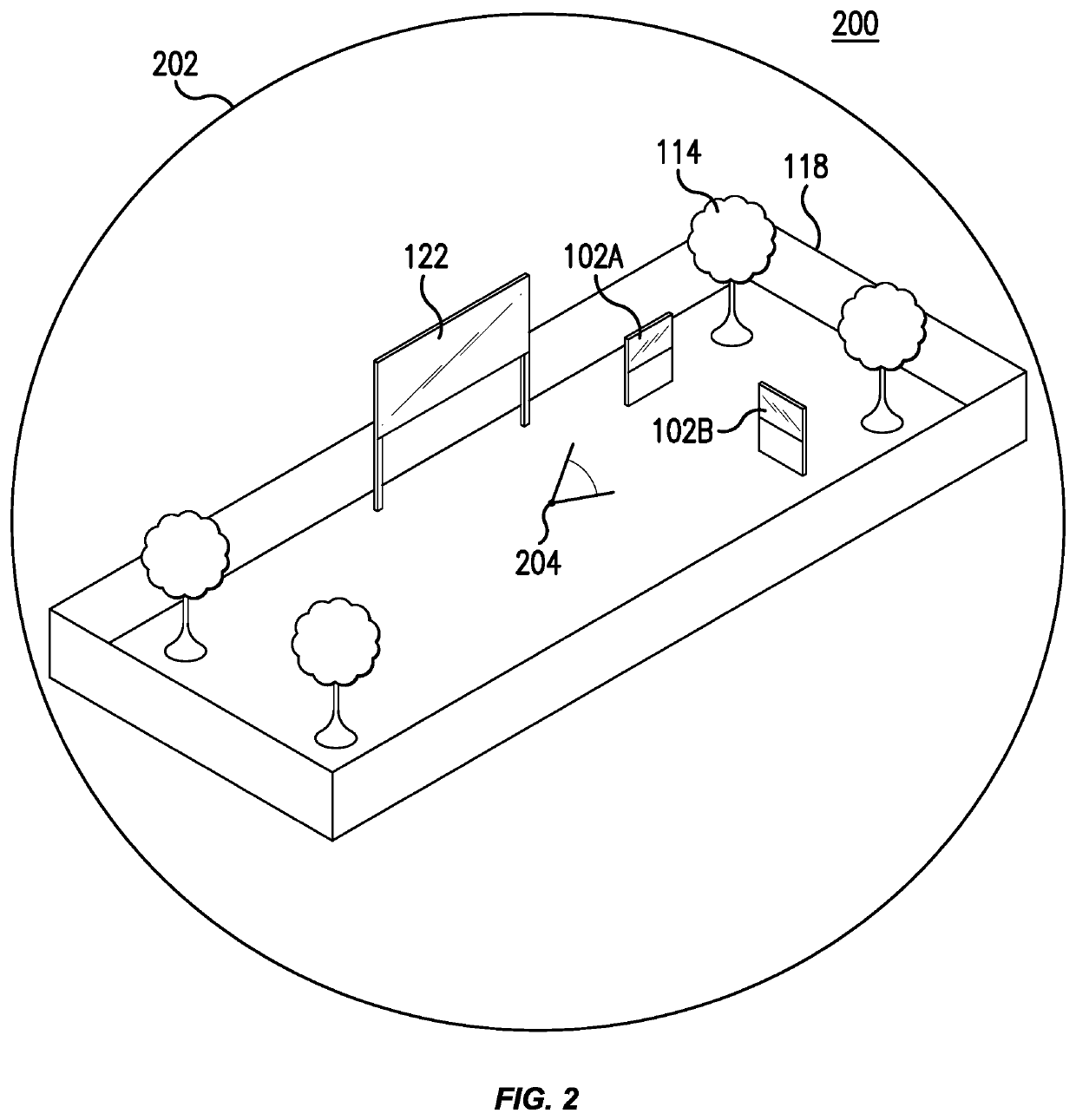

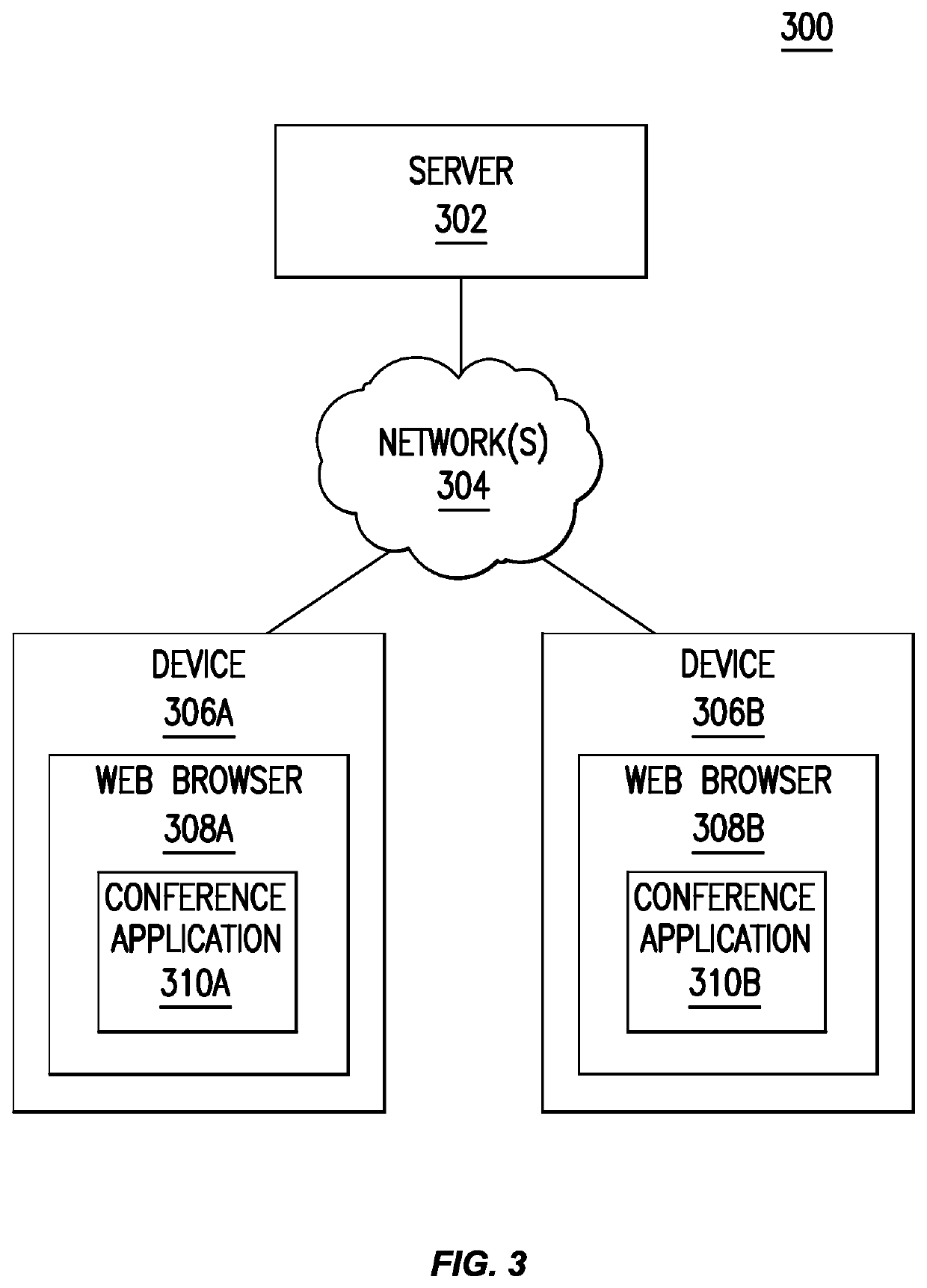

Web-based videoconference virtual environment with navigable avatars, and applications thereof

Active · US10979672B1 · Avoid the need · Special service provision for substation · Television conference systems · Virtual space · Classical mechanics

Disclosed herein is a web-based videoconference system that allows for video avatars to navigate within the virtual environment. The system has a presenter mode that allows for a presentation stream to be texture mapped to a presenter screen situated within the virtual environment. The relative left-right sound is adjusted to provide a sense of an avatar's position in a virtual space. The sound is further adjusted based on the area where the avatar is located and where the virtual camera is located. Video stream quality is adjusted based on relative position in a virtual space. Three-dimensional modeling is available inside the virtual video conferencing environment.

Owner:KATMAI TECH HLDG LLC
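
The left-right sound adjustment mentioned in the abstract above can be pictured with a standard equal-power panning sketch. This is a generic audio technique chosen for illustration, not the method claimed in the patent; the 2D ground-plane coordinates, the yaw convention (yaw 0 faces +z, with +x to the right), and the `stereo_gains` helper are assumptions.

```python
import math


def stereo_gains(camera_xz, camera_yaw_rad, source_xz):
    """Return (left_gain, right_gain) for a sound source relative to the camera."""
    dx = source_xz[0] - camera_xz[0]
    dz = source_xz[1] - camera_xz[1]
    dist = math.hypot(dx, dz)
    if dist == 0.0:
        pan = 0.0  # source at the camera position: keep it centered
    else:
        # Unit vector pointing to the camera's right on the ground plane.
        right_x, right_z = math.cos(camera_yaw_rad), -math.sin(camera_yaw_rad)
        pan = (dx * right_x + dz * right_z) / dist  # -1 = left, +1 = right
    theta = (pan + 1.0) * math.pi / 4.0  # map [-1, 1] onto [0, pi/2]
    return math.cos(theta), math.sin(theta)


ahead = stereo_gains((0.0, 0.0), 0.0, (0.0, 5.0))
to_right = stereo_gains((0.0, 0.0), 0.0, (5.0, 0.0))
to_left = stereo_gains((0.0, 0.0), 0.0, (-5.0, 0.0))
```

Equal-power panning keeps the perceived loudness roughly constant as an avatar moves from one side of the virtual camera to the other; distance-based attenuation would be a separate factor.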

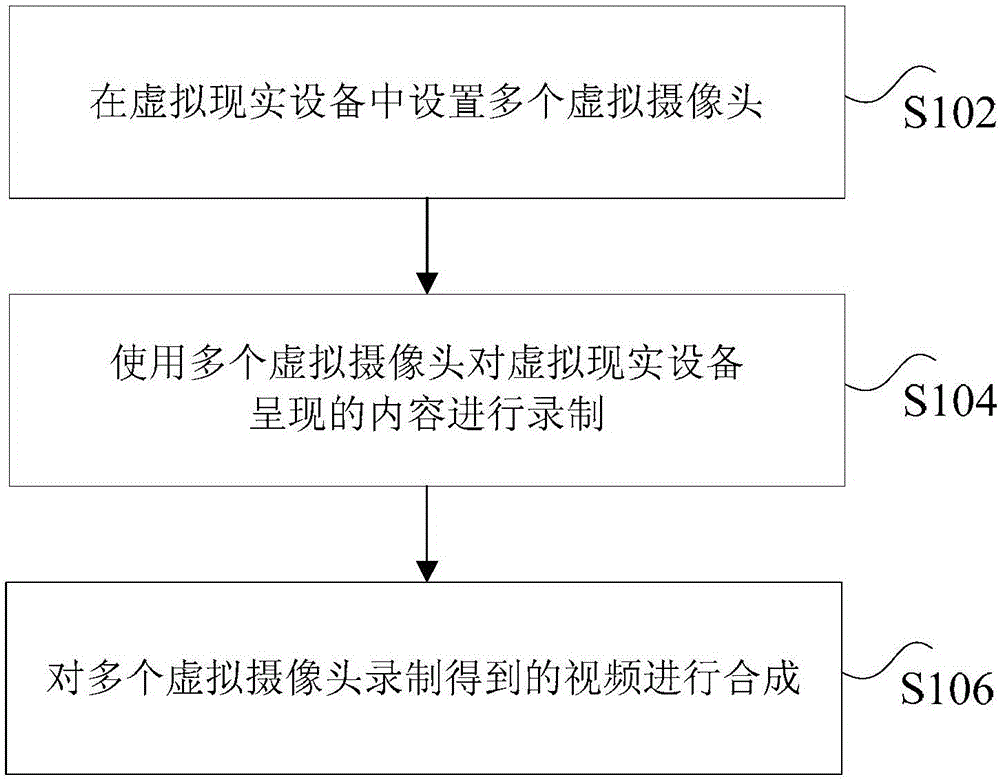

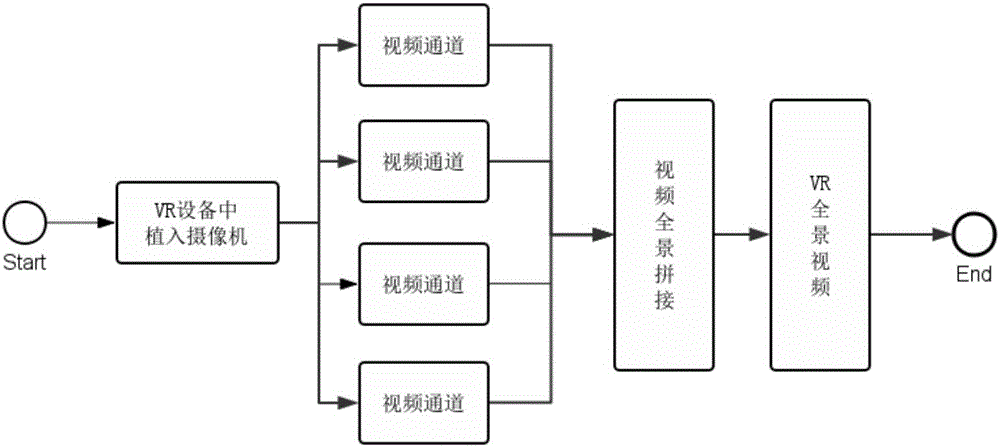

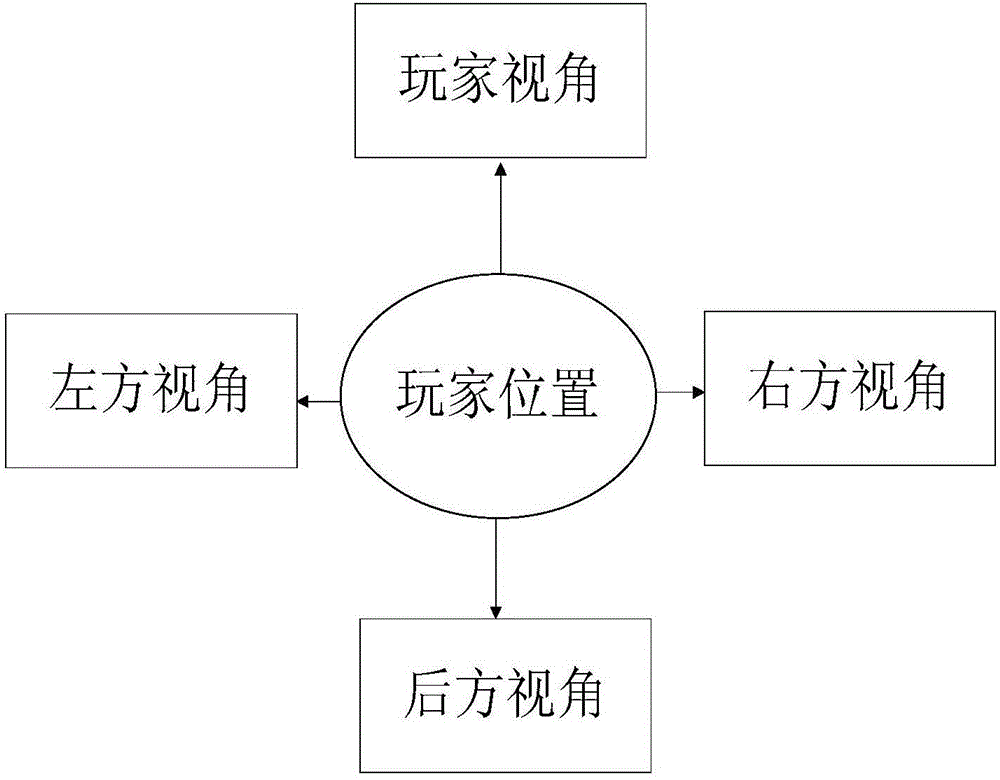

Game recording method and device, and virtual reality device

The invention discloses a game recording method and device, and a virtual reality device. The method comprises the steps of setting multiple virtual cameras in the virtual reality device. The multiple virtual cameras at least comprise the virtual camera for recording a first angle of view of a player who employs the virtual reality device, and one or more virtual cameras for recording other angles of view following the first angle of view except the first angle of view; recording content displayed by the virtual reality device by employing the multiple virtual cameras; and synthesizing videos recorded by the multiple virtual cameras, thereby forming a panoramic video. Through application of the embodiment, the technical problem that the game experience of the game player cannot be restored due to the fact that only the content displayed at a PC can be recorded when the VR game is recorded is solved, and the possibility of restoring what the game player sees when the VR game is recorded can be provided.

Owner:NETEASE (HANGZHOU) NETWORK CO LTD

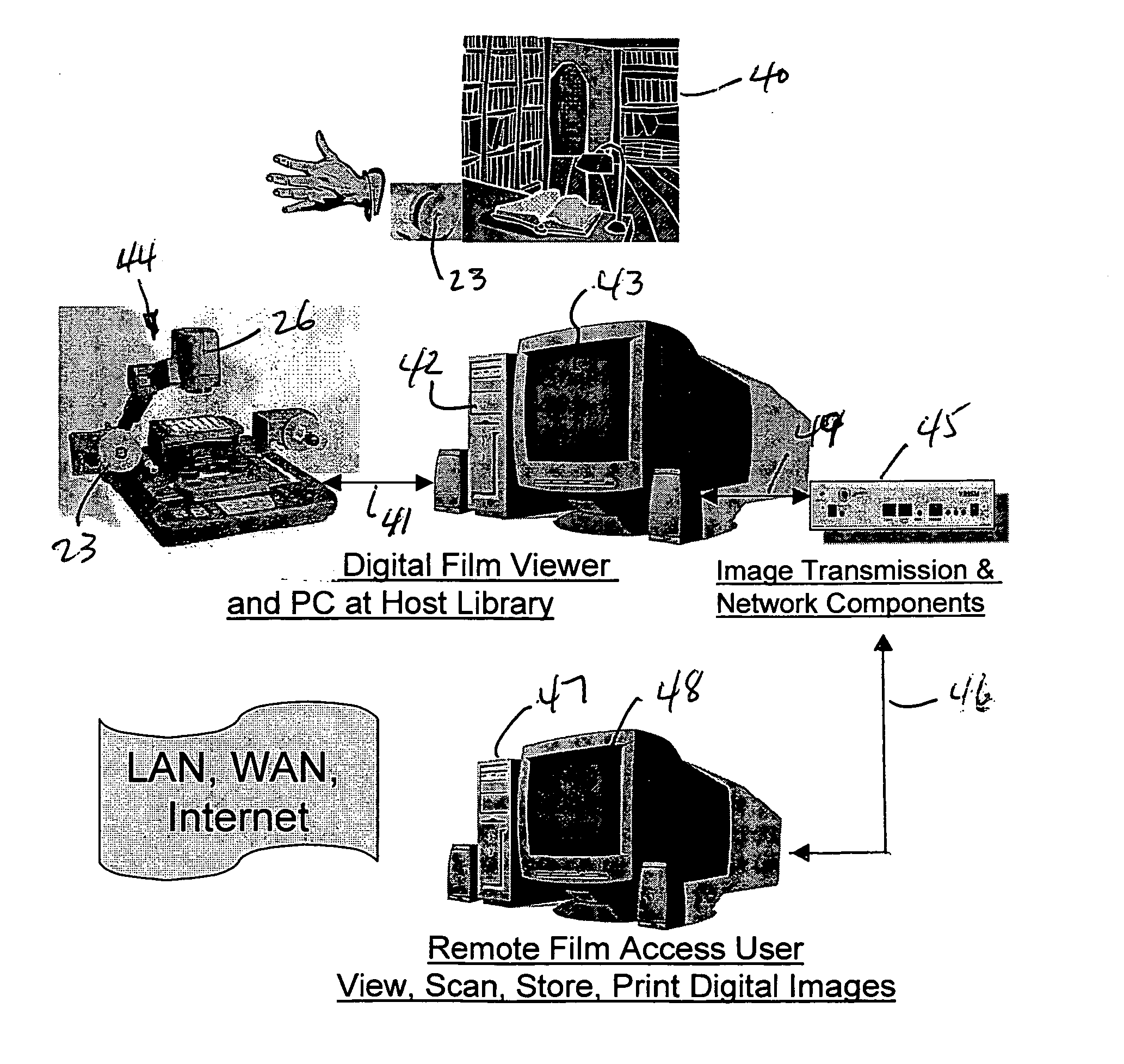

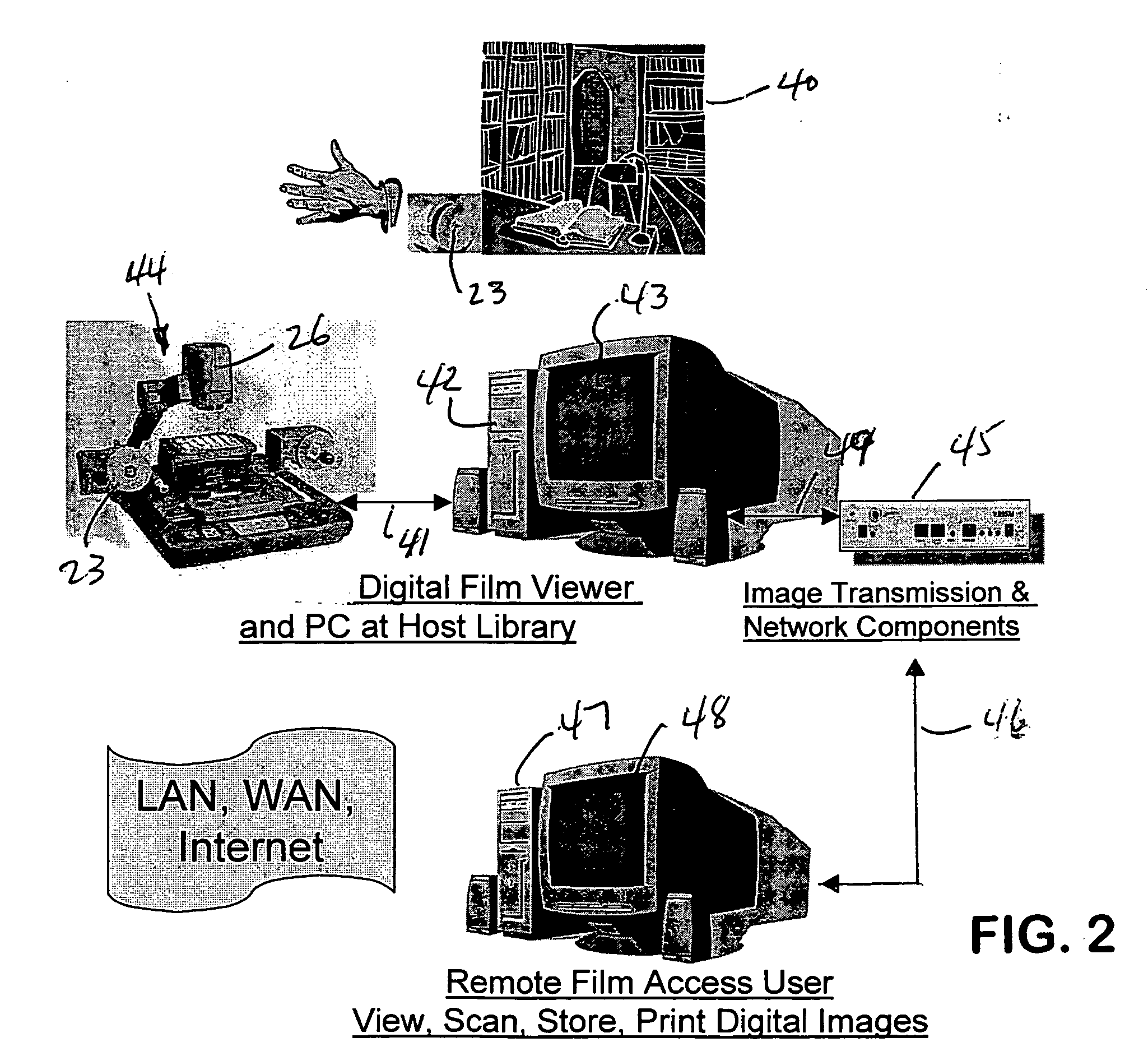

Apparatus and methods for remote viewing and scanning of microfilm

Apparatus for remotely viewing and scanning microfilm at a first location, while controlling the viewing and scanning from a remote location, including a viewing and scanning device for generating a video feed and subsequently scanned images of the microfilm, a host computer at the first location in communication with the viewing and scanning device to receive the video feed and the scanned images, a user's computer at a remote location in communication with the host computer to receive the video feed and the scanned images from the host computer, and a virtual film movement control at the user's computer to control the movement of the microfilm at the first location. A virtual camera control at the remote location controls the camera of the viewing and scanning device.

Owner:DIGITAL CHECK CORP

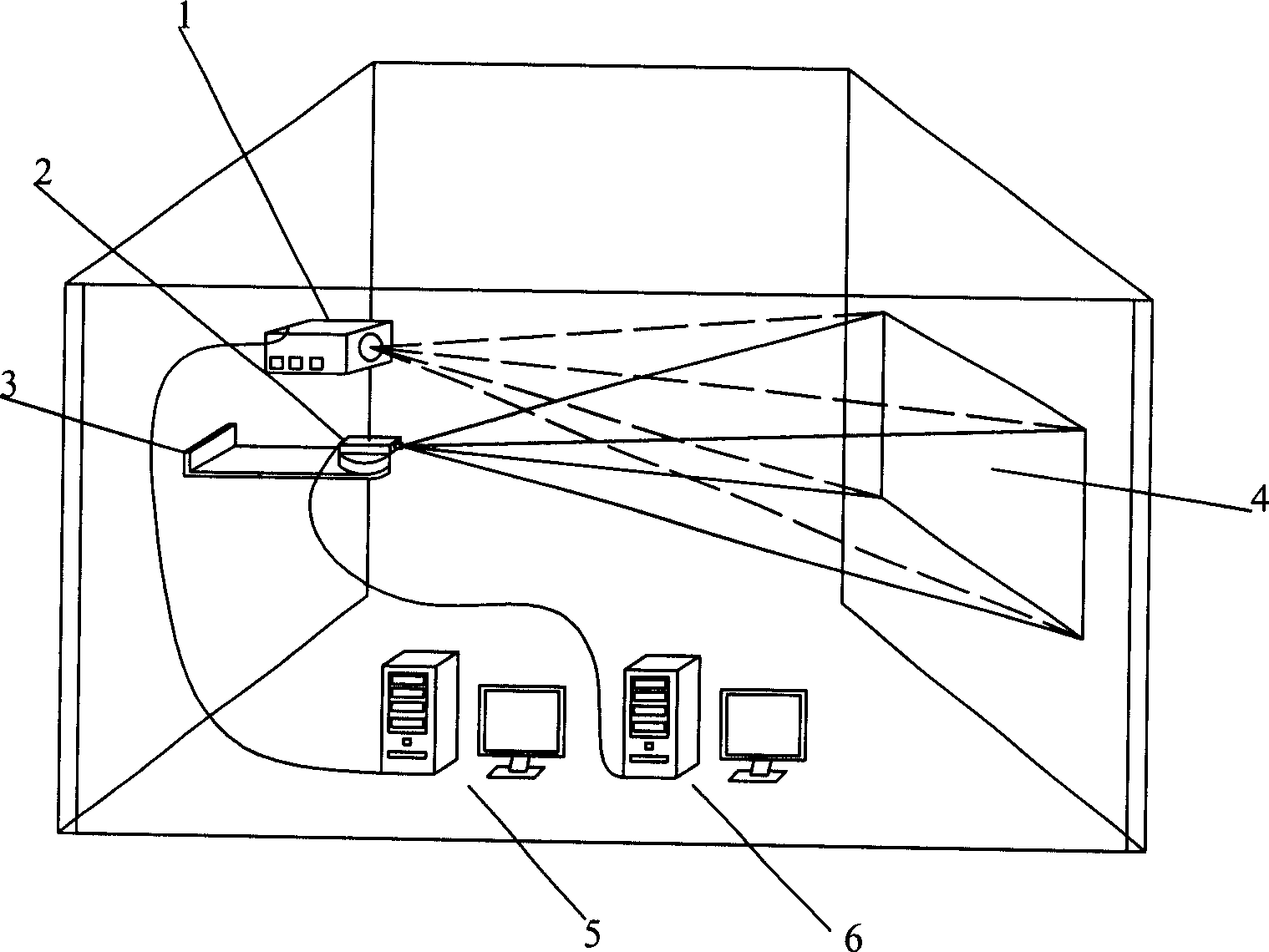

Three-dimensional vision semi-matter simulating system and method

Inactive · CN1897715A · Easy to operate · Close to the real test environment to a high degree · Steroscopic systems · Viewpoints · 3d image

The system comprises a projector, a video camera, a pan-tilt head for the video camera, a projection screen and a computer. To run a simulation, the virtual view software in the computer first generates a virtual 3D image and obtains multiple virtual stereovision 3D images from more than two viewpoints at the same time. The projector projects each 3D image onto the screen, and the real video camera captures the 3D images from the different viewpoints on the screen to obtain the output images of the simulation system at the measurement time. The parameters of the virtual video camera, the parameters of the imaging model of the real video camera and the parameters of the imaging model of the projector are then calculated. Finally, based on the basic principle of stereovision, the virtual 3D scene and the 3D space coordinates in the image are obtained.

Owner:BEIHANG UNIV

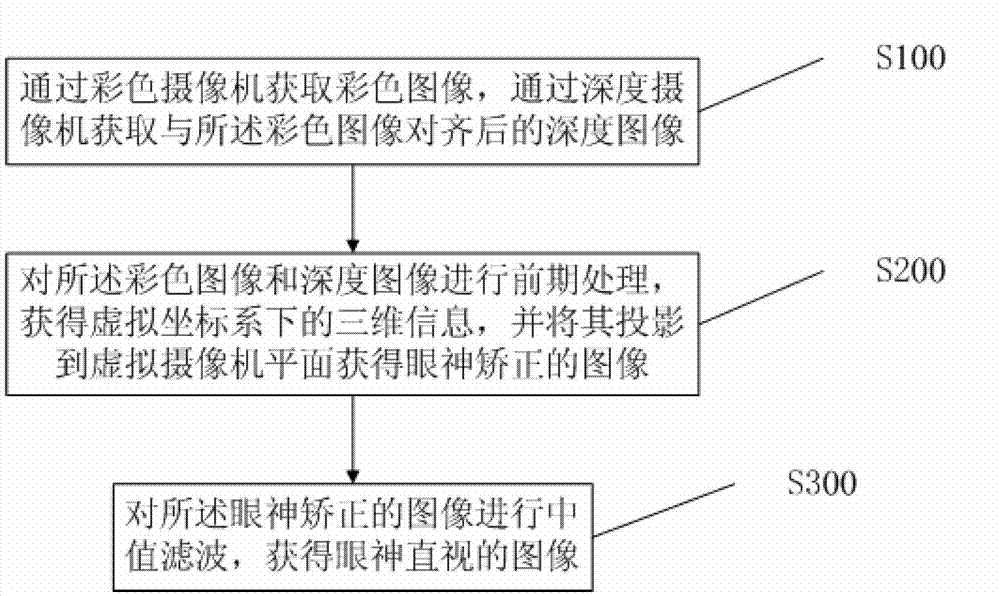

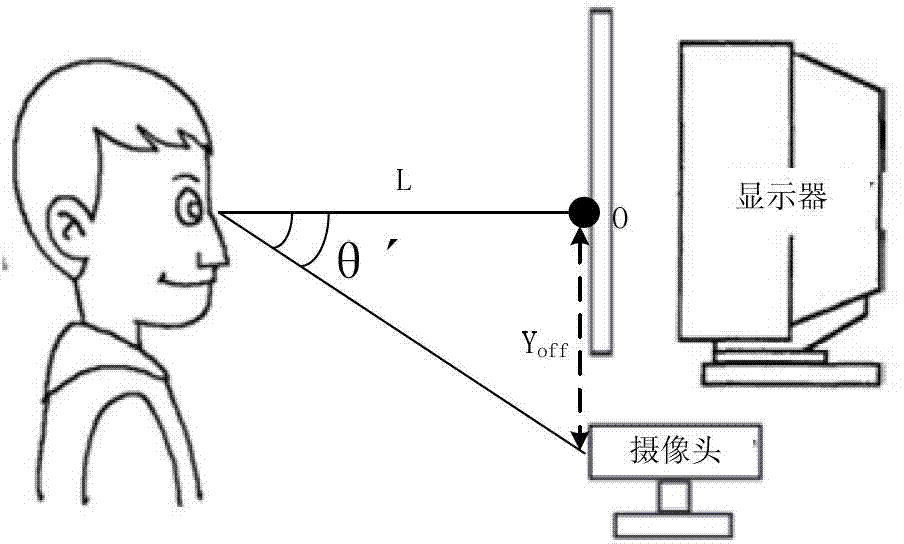

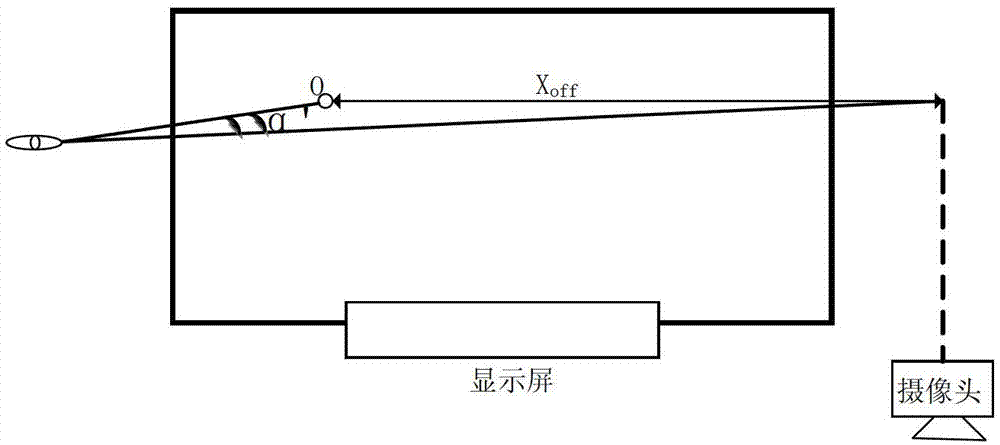

Eye interaction method and system for video conference

Active · CN103034330A · Easy to operate · No need to fix · Input/output for user-computer interaction · Image analysis · Interaction systems · Virtual coordinate systems

The invention provides an eye interaction method and an eye interaction system for a video conference. The method comprises the following steps: (100) a color image is acquired by a color camera, and a depth image aligned with the color image is acquired by a depth camera; (200) the color image and the depth image are preprocessed, three-dimensional information under a virtual coordinate system is acquired, and the three-dimensional information is projected onto the plane of a virtual camera to acquire an eye correction image; and (300) median filtering is applied to the eye correction image to acquire a direct eye-contact image. The method and system are convenient to operate, run in real time, adapt automatically to the different positions of video conference attendees, are simple to implement, and can enhance attention during the video conference.

Owner:INST OF COMPUTING TECH CHINESE ACAD OF SCI
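
Steps (200) and (300) in the abstract above can be illustrated with two small building blocks: a pinhole projection onto a virtual camera's image plane and a 3x3 median filter. This is a minimal sketch under assumed conventions (points already expressed in virtual-camera coordinates, a single focal length, images as nested lists); the function names and parameters are hypothetical.

```python
from statistics import median


def project_point(point, focal, cx, cy):
    """Pinhole projection of a 3D point (X, Y, Z) given in virtual-camera coordinates."""
    X, Y, Z = point
    if Z <= 0:
        raise ValueError("point must lie in front of the camera")
    return (focal * X / Z + cx, focal * Y / Z + cy)


def median_filter(image):
    """3x3 median filter over a 2D list; border pixels use the neighbours that exist."""
    h, w = len(image), len(image[0])
    out = [[0] * w for _ in range(h)]
    for r in range(h):
        for c in range(w):
            window = [image[rr][cc]
                      for rr in range(max(0, r - 1), min(h, r + 2))
                      for cc in range(max(0, c - 1), min(w, c + 2))]
            out[r][c] = median(window)
    return out


pixel = project_point((1.0, 2.0, 4.0), focal=100.0, cx=50.0, cy=50.0)
noisy = [[1, 1, 1],
         [1, 9, 1],
         [1, 1, 1]]
cleaned = median_filter(noisy)
```

Re-projecting depth data to a new viewpoint tends to leave isolated holes and speckles, which is the kind of artifact a median filter removes, as the single outlier in `noisy` shows.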

Volume areas in a three-dimensional virtual conference space, and applications thereof

Active · US11070768B1 · Audio stream is attenuated · Television conference systems · Automatic exchanges · Virtual space · Classical mechanics

Disclosed herein is a web-based videoconference system that allows for video avatars to navigate within the virtual environment. The system has a presenter mode that allows for a presentation stream to be texture mapped to a presenter screen situated within the virtual environment. The relative left-right sound is adjusted to provide a sense of an avatar's position in a virtual space. The sound is further adjusted based on the area where the avatar is located and where the virtual camera is located. Video stream quality is adjusted based on relative position in a virtual space. Three-dimensional modeling is available inside the virtual video conferencing environment.

Owner:KATMAI TECH HLDG LLC

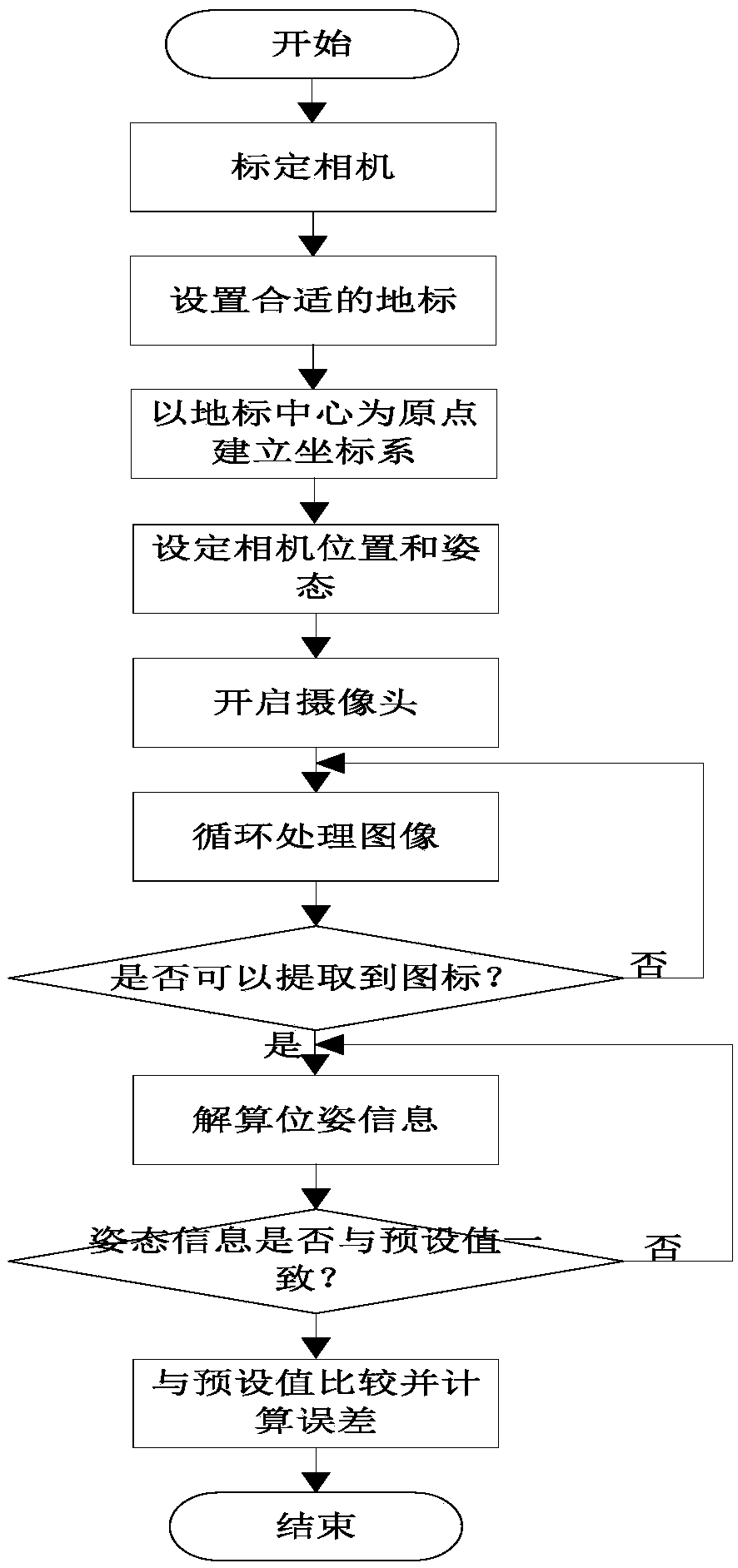

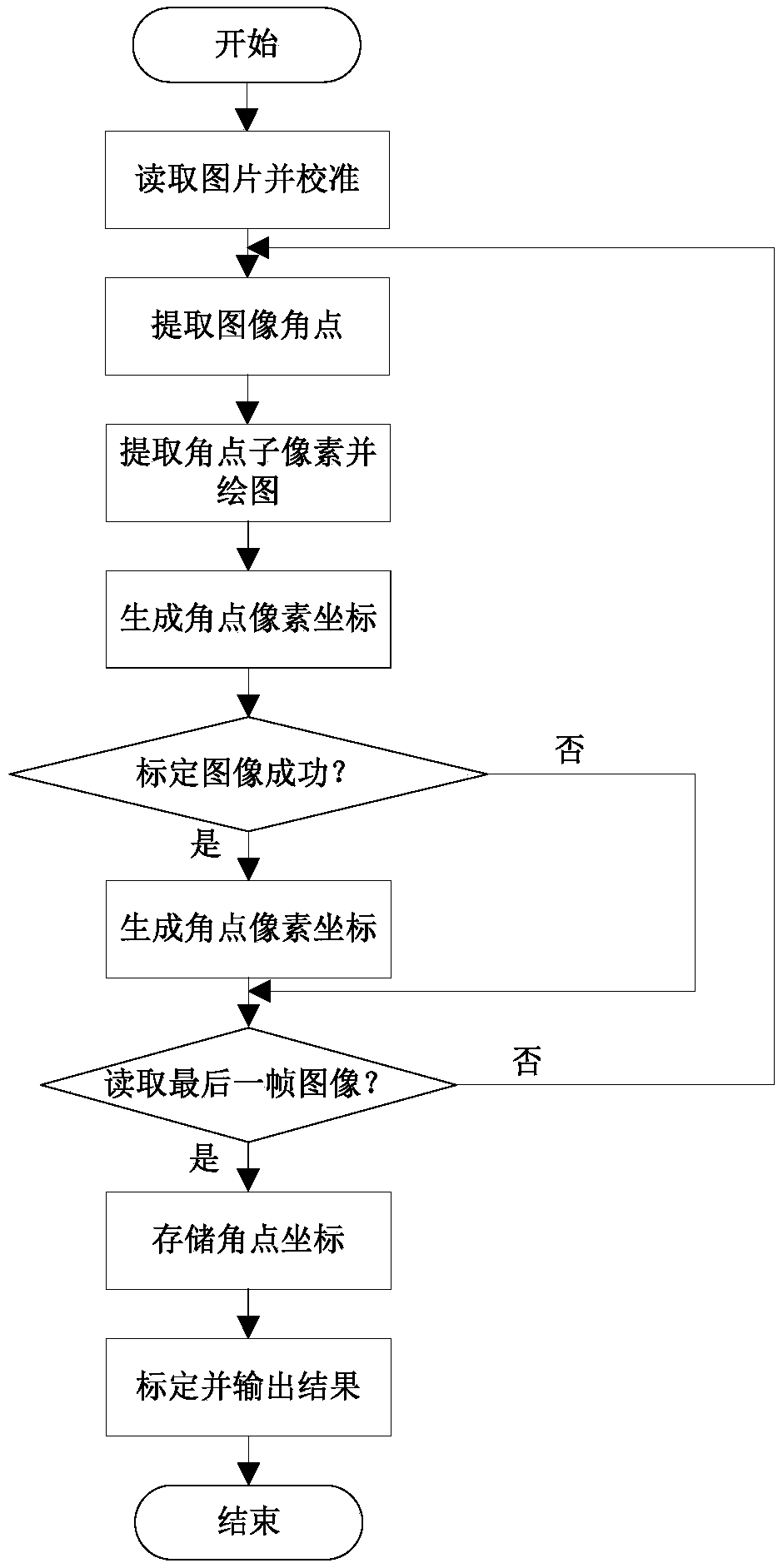

Unmanned aerial vehicle positioning method based on a cooperative two-dimensional code of a virtual simulation environment

Active · CN109658461A · Solving Fast, Robust Localization Problems · Unable to solve the problem · Image enhancement · Image analysis · Uncrewed vehicle · Engineering

The invention provides an unmanned aerial vehicle positioning method based on a cooperative two-dimensional code in a virtual simulation environment. The method comprises the steps of: placing a checkerboard in a virtual scene, calibrating the camera, and obtaining the parameters of the virtual camera; and identifying the AprilTag two-dimensional code in the scene, accurately positioning the unmanned aerial vehicle through the AprilTag two-dimensional code, and verifying the calibration accuracy of the camera and the feasibility of the AprilTag-based positioning and attitude determination algorithm in the virtual scene. By placing a checkerboard in the virtual scene and using a coordinate system conversion relationship to obtain the virtual camera parameters, the invention calibrates the camera and provides the camera intrinsic parameters for the drone visual navigation verification algorithm in the virtual scene. This solves the problem that the virtual camera's internal parameters cannot otherwise be acquired. The calibrated camera parameters and the AprilTag two-dimensional code positioning algorithm are used to solve for the position parameters of the camera, which addresses rapid and robust positioning of the unmanned aerial vehicle in a complex environment.

Owner:NO 20 RES INST OF CHINA ELECTRONICS TECH GRP

Determining video stream quality based on relative position in a virtual space, and applications thereof

Active | US11076128B1 | Reduce rate | Reduce resolution | Television conference systems | Speech analysis | Virtual space | Classical mechanics

Disclosed herein is a web-based videoconference system that allows for video avatars to navigate within the virtual environment. The system has a presenter mode that allows for a presentation stream to be texture mapped to a presenter screen situated within the virtual environment. The relative left-right sound is adjusted to provide a sense of an avatar's position in a virtual space. The sound is further adjusted based on the area where the avatar is located and where the virtual camera is located. Video stream quality is adjusted based on relative position in a virtual space. Three-dimensional modeling is available inside the virtual video conferencing environment.

Owner:KATMAI TECH HLDG LLC
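
Adjusting video stream quality by relative position can be sketched as a distance-to-tier lookup. The tier boundaries, resolutions, and frame rates below are invented for illustration, as is the function name `stream_quality`; the patent abstract only states that quality depends on relative position.

```python
import math

# Hypothetical quality tiers: (max_distance, width, height, frames_per_second).
TIERS = [
    (5.0, 1280, 720, 30),
    (15.0, 640, 360, 24),
    (40.0, 320, 180, 15),
]

def stream_quality(viewer_pos, avatar_pos):
    """Pick a resolution and frame rate from viewer-to-avatar distance.

    Positions are (x, y, z) tuples in virtual-space units. Returns a dict,
    or None when the avatar is beyond the last tier (no video requested).
    """
    distance = math.dist(viewer_pos, avatar_pos)
    for max_distance, width, height, fps in TIERS:
        if distance <= max_distance:
            return {"width": width, "height": height, "fps": fps}
    return None
```

Nearby avatars receive the highest tier, and distant ones fall back to a lower resolution and frame rate, which reduces bandwidth for streams that occupy only a few pixels on screen.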

Presenter mode in a three-dimensional virtual conference space, and applications thereof

Active | US11095857B1 | Television conference systems | Cathode-ray tube indicators | Virtual space | Classical mechanics

Disclosed herein is a web-based videoconference system that allows for video avatars to navigate within the virtual environment. The system has a presenter mode that allows for a presentation stream to be texture mapped to a presenter screen situated within the virtual environment. The relative left-right sound is adjusted to provide a sense of an avatar's position in a virtual space. The sound is further adjusted based on the area where the avatar is located and where the virtual camera is located. Video stream quality is adjusted based on relative position in a virtual space. Three-dimensional modeling is available inside the virtual video conferencing environment.

Owner:KATMAI TECH HLDG LLC

Merging of a video and still pictures of the same event, based on global motion vectors of this video

Active | US20110229111A1 | Improve visual appearance | Mitigate, alleviate or eliminate one | Television system details | Electronic editing digitised analogue information signals | Motion vector | Virtual camera

It is quite common for users to have both video and photo material that refers to the same event. Adding photos to home videos enriches the content. However, just adding still photos to a video sequence has a disturbing effect. The invention relates to a method to seamlessly integrate photos into the video by creating a virtual camera motion in the photo that is aligned with the estimated camera motion in the video. A synthesized video sequence is created by estimating the video camera motion at the insertion position at which the still photo is to be included, and creating a virtual video sequence of sub-frames of the still photo whose virtual camera motion is correlated with the video camera motion at the insertion position.

Owner:SHENZHEN TCL CREATIVE CLOUD TECH CO LTD
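
The idea of a virtual camera motion over a still photo can be sketched as a sequence of crop windows (sub-frames) that drift across the photo at the same per-frame velocity as the estimated global motion of the video. This is a simplified illustration assuming pure translation; the function name `virtual_camera_windows`, the centering choice, and the sign convention for the motion vector are assumptions rather than details from the patent.

```python
def virtual_camera_windows(photo_size, window_size, motion_per_frame, n_frames):
    """Return crop boxes (left, top, right, bottom) that pan across a photo.

    photo_size, window_size: (width, height) in pixels.
    motion_per_frame: (dx, dy), the virtual camera displacement per frame,
        assumed to equal the video's estimated global motion at the
        insertion position (translation only).
    """
    photo_w, photo_h = photo_size
    win_w, win_h = window_size
    if win_w > photo_w or win_h > photo_h:
        raise ValueError("window must fit inside the photo")
    dx, dy = motion_per_frame
    # Start so that the middle of the path is centered on the photo.
    start_x = (photo_w - win_w) / 2.0 - dx * (n_frames - 1) / 2.0
    start_y = (photo_h - win_h) / 2.0 - dy * (n_frames - 1) / 2.0
    boxes = []
    for i in range(n_frames):
        # Clamp so the window never leaves the photo.
        left = round(min(max(start_x + dx * i, 0), photo_w - win_w))
        top = round(min(max(start_y + dy * i, 0), photo_h - win_h))
        boxes.append((left, top, left + win_w, top + win_h))
    return boxes
```

Each box can be cropped from the photo and scaled to the video resolution to form one frame of the virtual sequence. Handling zoom or rotation would require a fuller motion model than the translation-only one assumed here.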

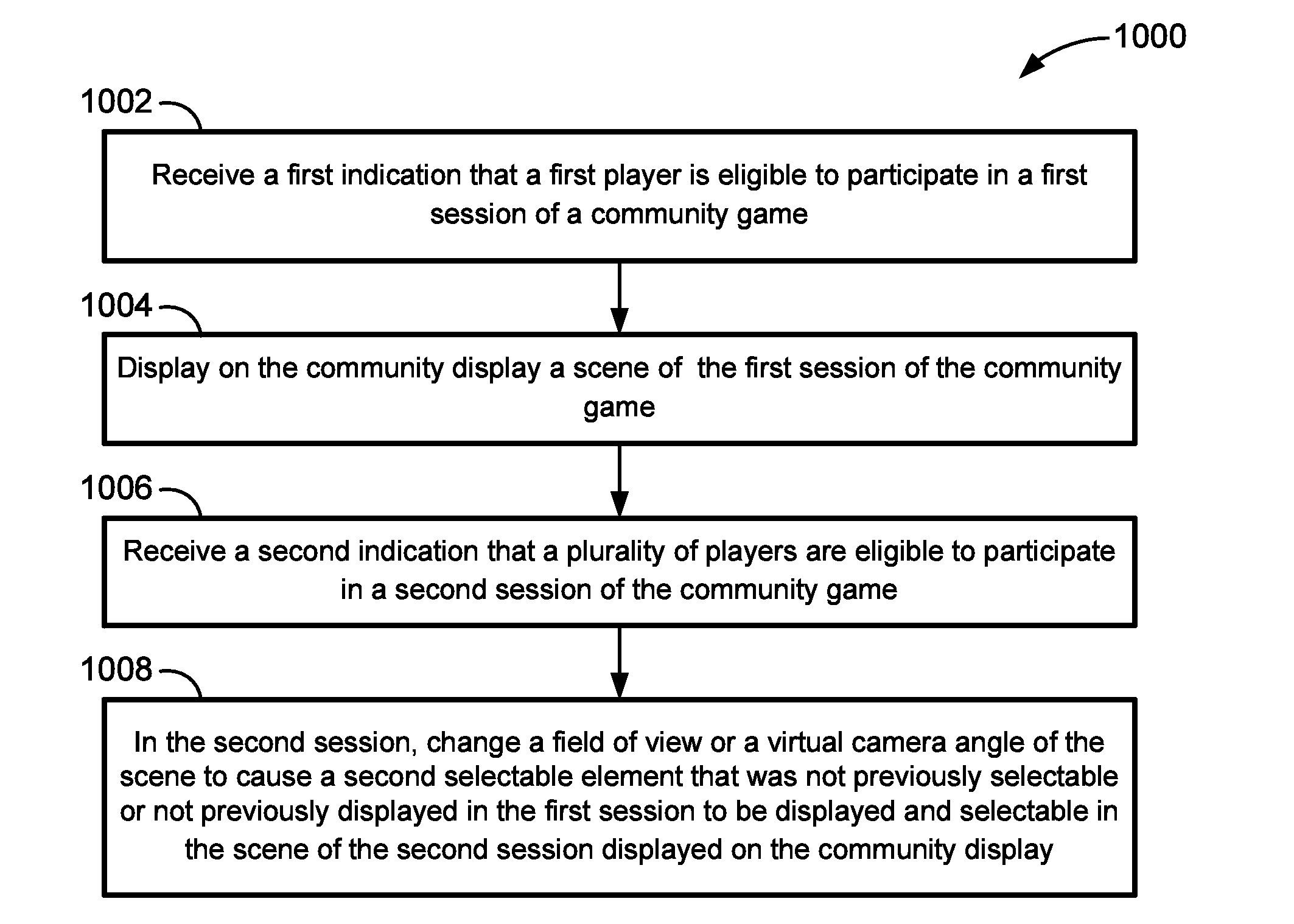

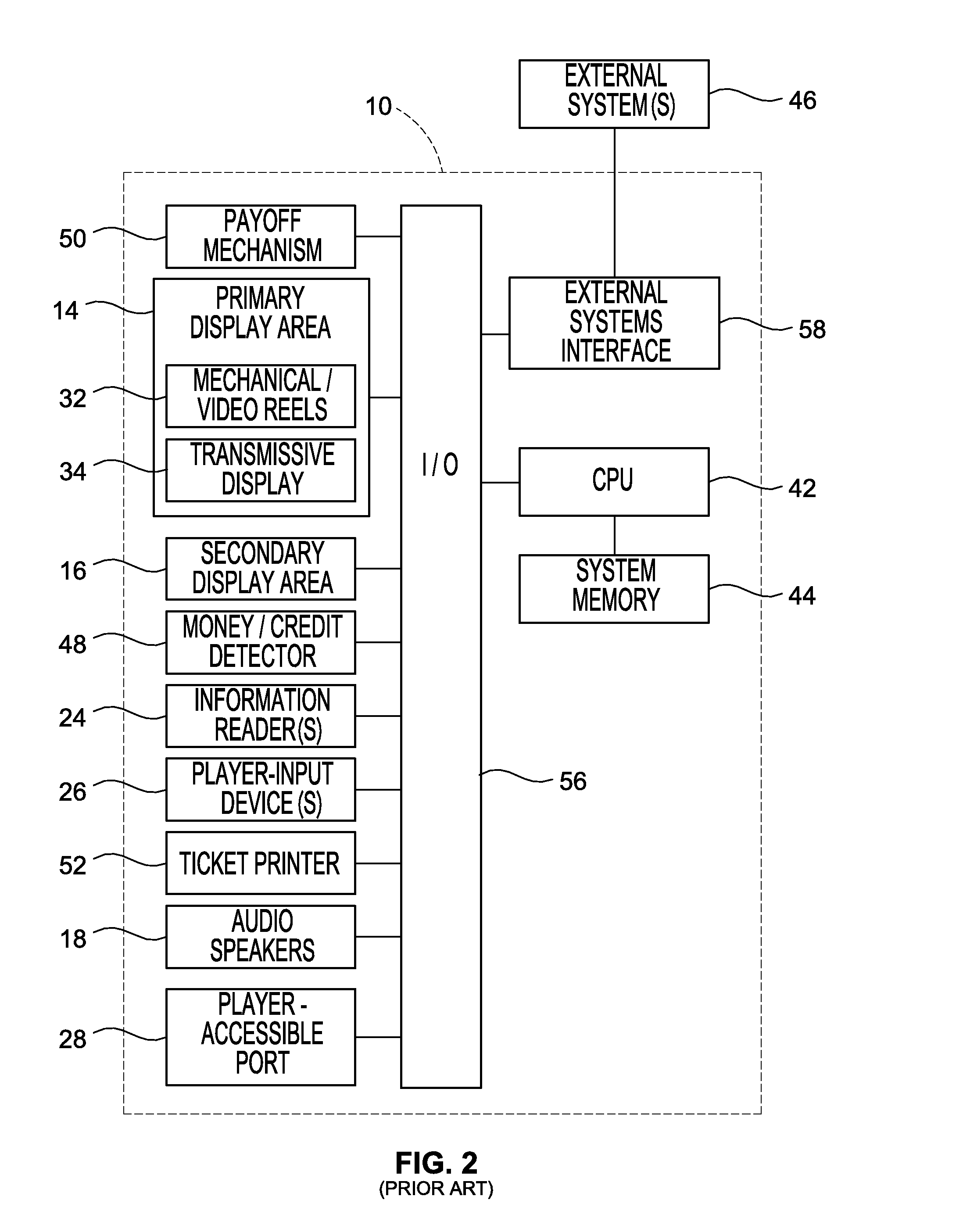

Community game that adapts communal game appearance

Active | US20130084965A1 | Increase value | Increase in size | Apparatus for meter-controlled dispensing | Video games | Community setting | Display device

A community game adapts a scene on a community display based on the number of participating players and/or player locations, either from one session of the community game to another or within any given session, and/or based on the number of gaming terminals. Selectable elements are displayed on the community display for selection by the participating players. When additional players are eligible to participate in the community game, a field of view or a virtual camera angle of a scene is changed to reveal additional selectable elements or a greater variety of selectable elements than were available for selection with fewer participating players. Any of the selectable elements can be cooperatively selectable elements which multiple players can select to reveal an enhanced award. Based on the players' locations, the scene can be adapted to portray selectable elements or previously hidden or obscured areas of the scene closest to the newly participating player(s).

Owner:LNW GAMING INC
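
Widening the field of view as players join can be sketched as follows. The linear growth rule, the default angles, and both function names are hypothetical; the abstract states only that the field of view or camera angle changes to reveal more selectable elements.

```python
def field_of_view(num_players, base_fov=40.0, step=10.0, max_fov=90.0):
    """Return the virtual camera's horizontal field of view in degrees.

    Hypothetical rule: start at base_fov for one player and widen by
    `step` degrees per additional player, capped at max_fov.
    """
    if num_players < 1:
        raise ValueError("at least one player is required")
    return min(base_fov + step * (num_players - 1), max_fov)

def visible_elements(element_angles, fov_deg):
    """Keep the elements whose bearing (degrees from the camera's forward
    axis, negative = left) falls inside the field of view."""
    half = fov_deg / 2.0
    return [angle for angle in element_angles if abs(angle) <= half]
```

With elements at bearings `[-40, -25, -10, 0, 15, 30]`, a single player sees only the three central elements, while a third player widens the view enough to reveal two more.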

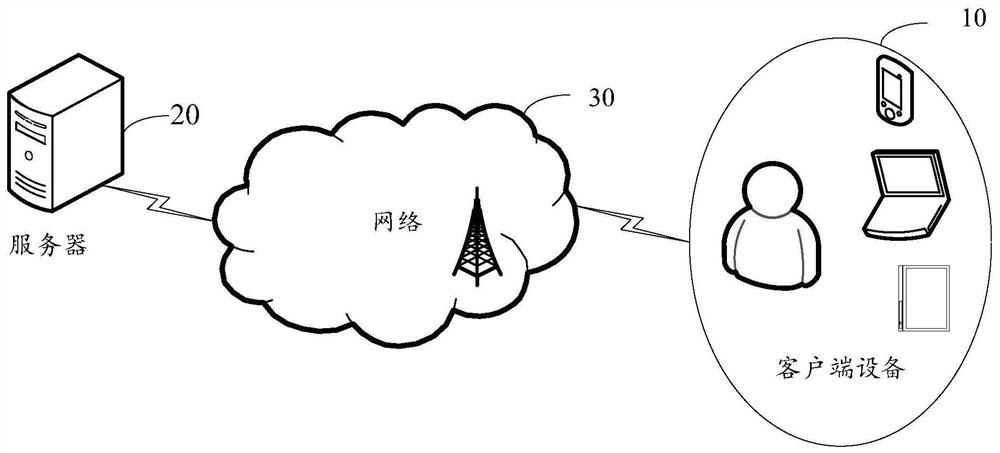

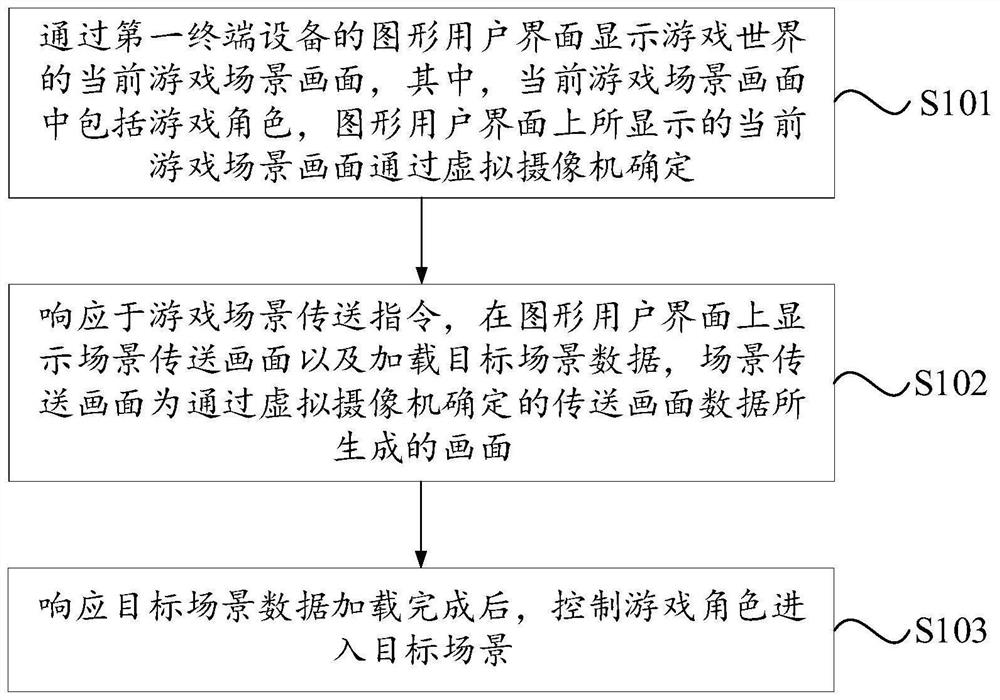

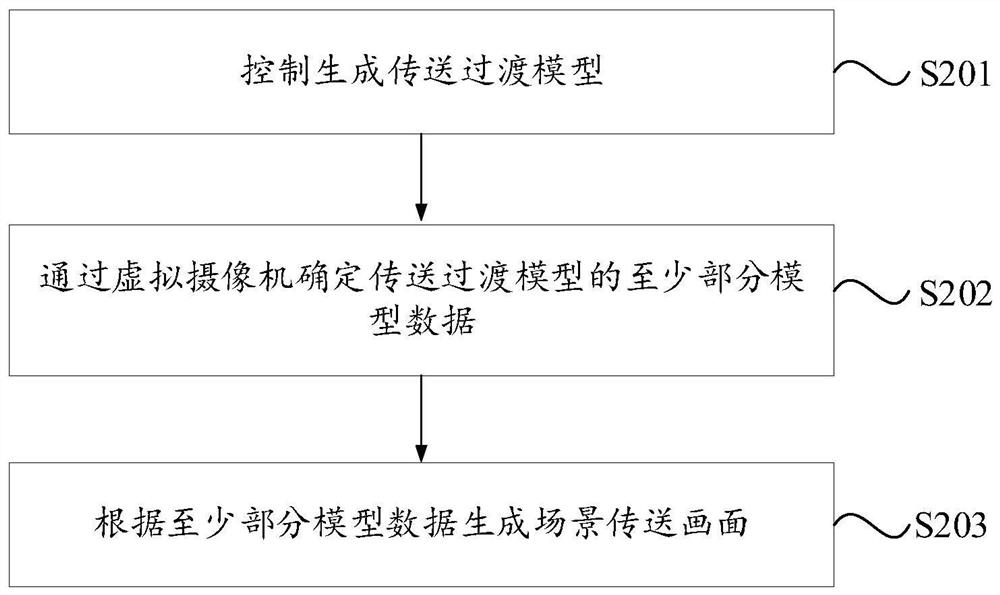

Game role transmission method and device, electronic equipment and storage medium

Pending | CN111729306A | Does not increase performance consumption | Increase workload | Video games | Graphical user interface | Terminal equipment

The invention provides a game role transmission method and device, electronic equipment and a storage medium, and relates to the technical field of games. The current game scene picture of the game world is displayed through a graphical user interface of a first terminal device; the current game scene picture comprises a game role, and the current game scene picture displayed on the graphical user interface is determined through a virtual camera. In response to a game scene transmission instruction, a scene transmission picture is displayed on the graphical user interface and target scene data is loaded, where the scene transmission picture is generated from the transmission picture data determined by the virtual camera. After the target scene data is loaded, the game role is controlled to enter the target scene. Because no other game scenes are introduced while the target scene data is loaded, the performance consumption of the first terminal device is hardly increased during the transmission of the game role, and no extra workload is added for a game designer.

Owner:NETEASE (HANGZHOU) NETWORK CO LTD

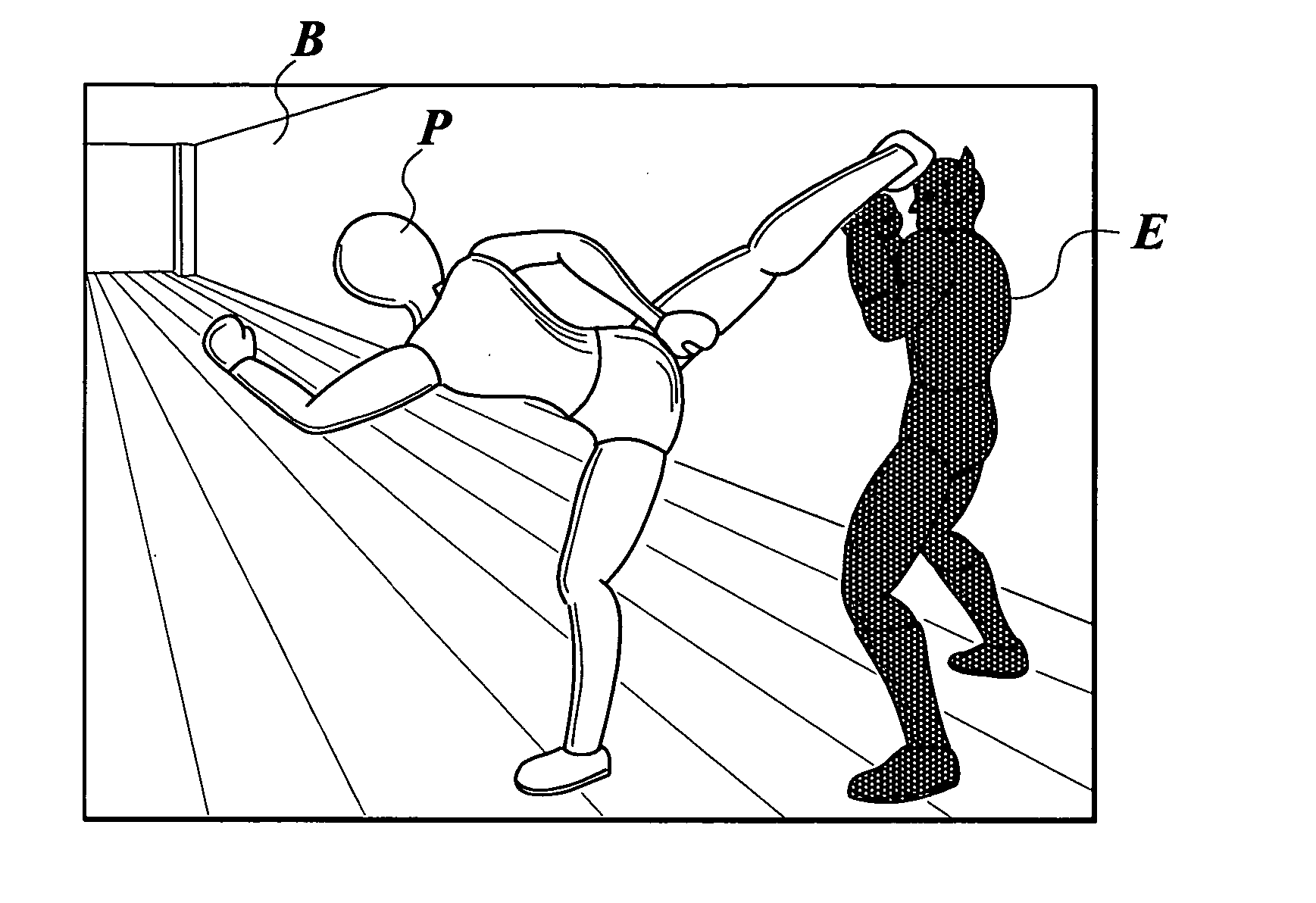

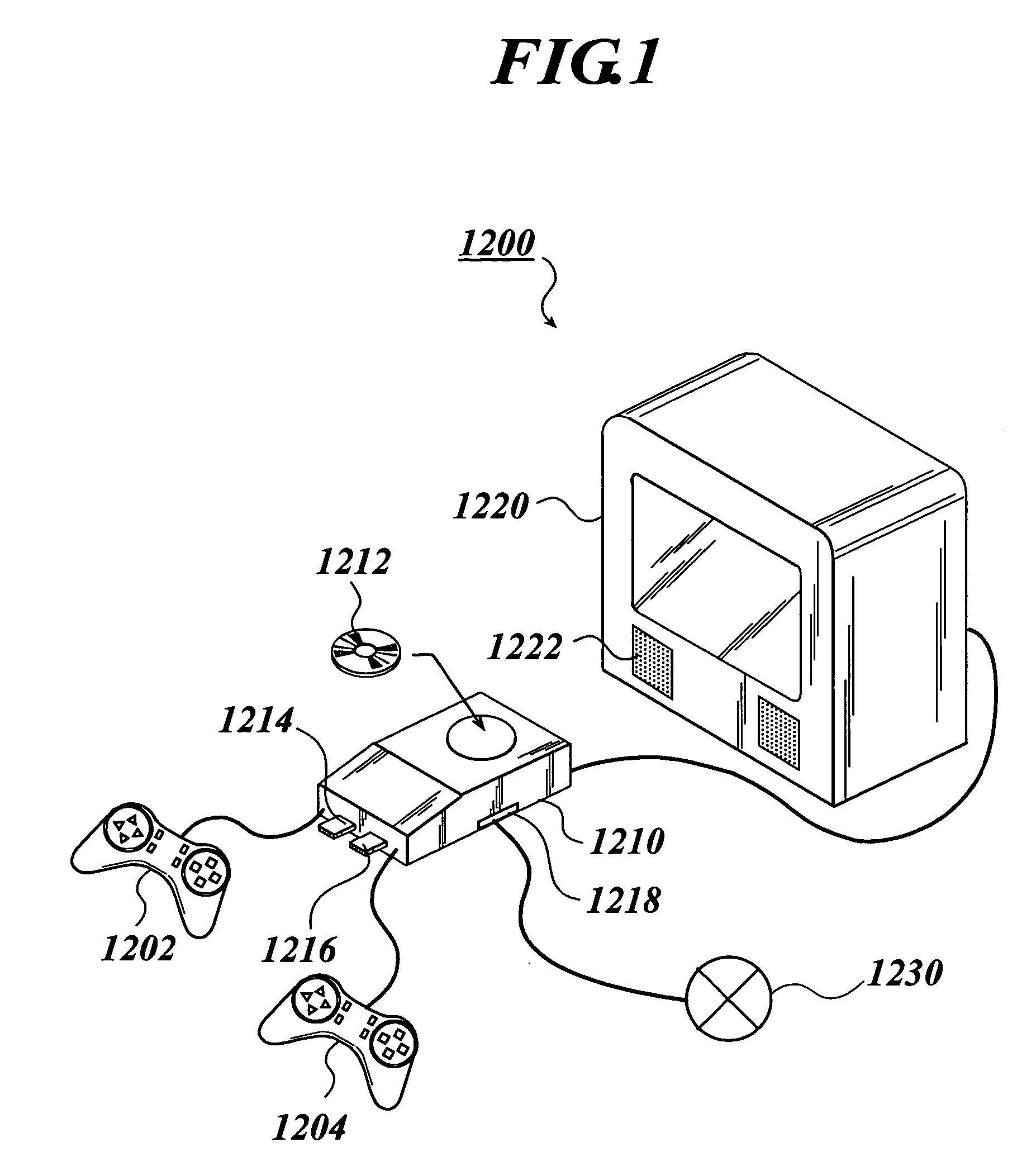

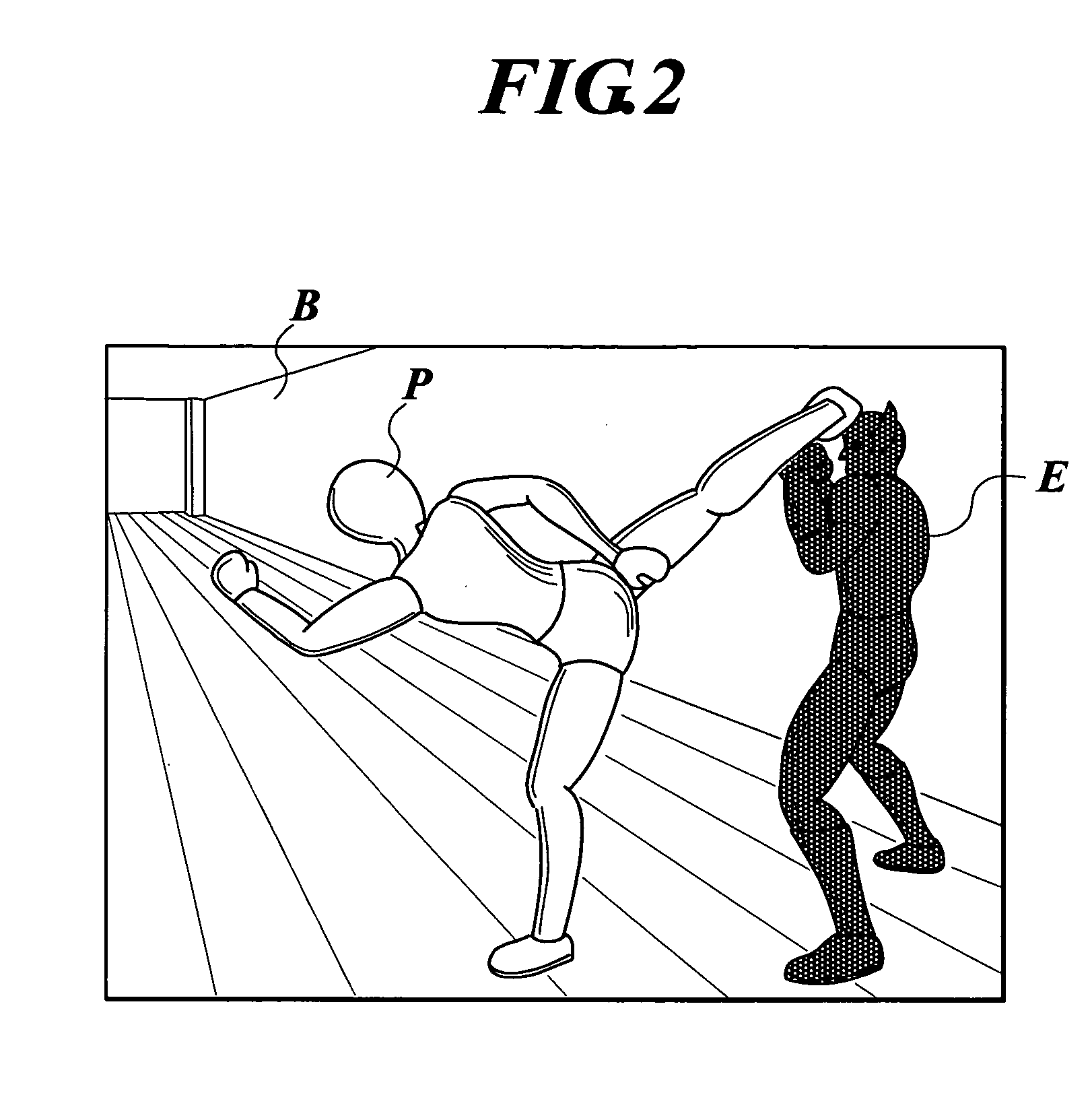

Method for performing game, information storage medium, game device, data signal and program

A game performing method for making a computer device execute a predetermined game by generating a first object and a second object as seen from a virtual camera, has: judging whether there is a hit between the first object and the second object; judging whether a predetermined event occurrence condition is satisfied if it is judged that there is the hit between the first object and the second object; generating a first image which is an internal structure object of the second object if it is judged that the predetermined event occurrence condition is satisfied; and generating a second image which is the internal structure object with a predetermined part thereof changed after the first image is generated.

Owner:NAMCO
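
The two-stage judgment in the abstract (hit test first, then an event occurrence condition, then the internal-structure images) can be sketched as below. Bounding spheres as the hit test, impact speed as the event condition, and all names and image labels are hypothetical stand-ins; the patent abstract does not specify them.

```python
import math
from dataclasses import dataclass

@dataclass
class Sphere:
    """Hypothetical bounding volume for a game object."""
    center: tuple
    radius: float

def is_hit(first, second):
    """Judge a hit: two bounding spheres touch or overlap."""
    return math.dist(first.center, second.center) <= first.radius + second.radius

def images_to_generate(first, second, impact_speed, speed_threshold=5.0):
    """Return the ordered list of images to generate for this frame.

    The event occurrence condition here (impact speed at or above a
    threshold) is an assumed example of a "predetermined" condition.
    """
    if not is_hit(first, second):
        return ["normal_view"]
    if impact_speed < speed_threshold:
        return ["normal_view"]
    # First the internal structure of the second object, then the same
    # structure with a predetermined part changed.
    return ["internal_structure", "internal_structure_changed"]
```

The event condition is evaluated only after a hit has been confirmed, which mirrors the order of the judging steps in the abstract.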
