Three-dimensional non-realistic expression generation method based on facial motion unit

A technology involving facial motion units and non-realistic expression generation, applied in neural learning methods, animation production, biological neural network models, etc. It addresses problems such as inaccurate expression templates and inaccurate feature-point positioning, with the effects of overcoming dependence on geometric position, extracting features accurately, and enriching spatial information.


Embodiment Construction

[0048] Specific embodiments of the present invention will be described below in conjunction with the accompanying drawings, so that those skilled in the art can better understand the present invention.

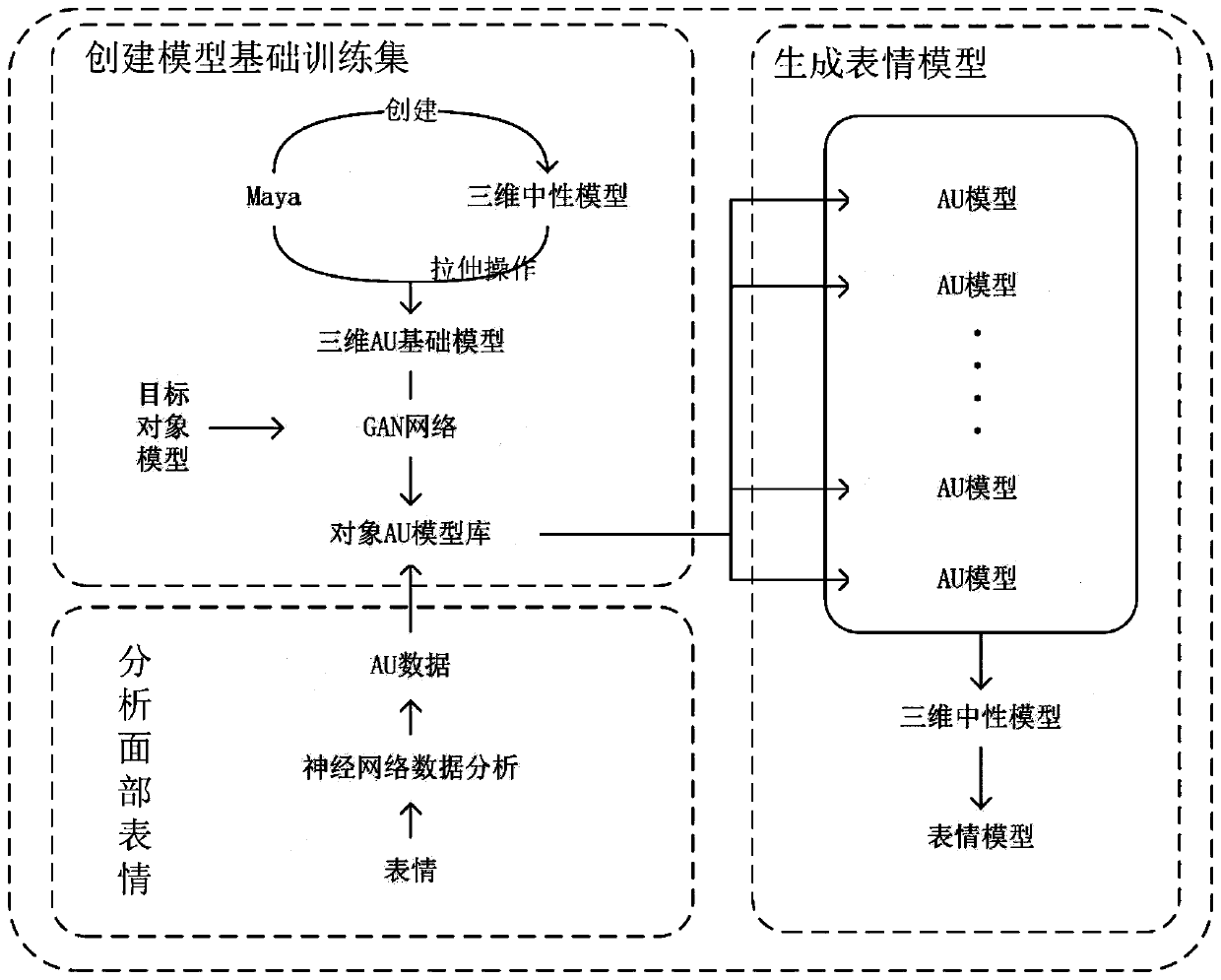

[0049] The invention proposes a three-dimensional non-realistic expression generation method based on facial motion units. The method includes the following steps:

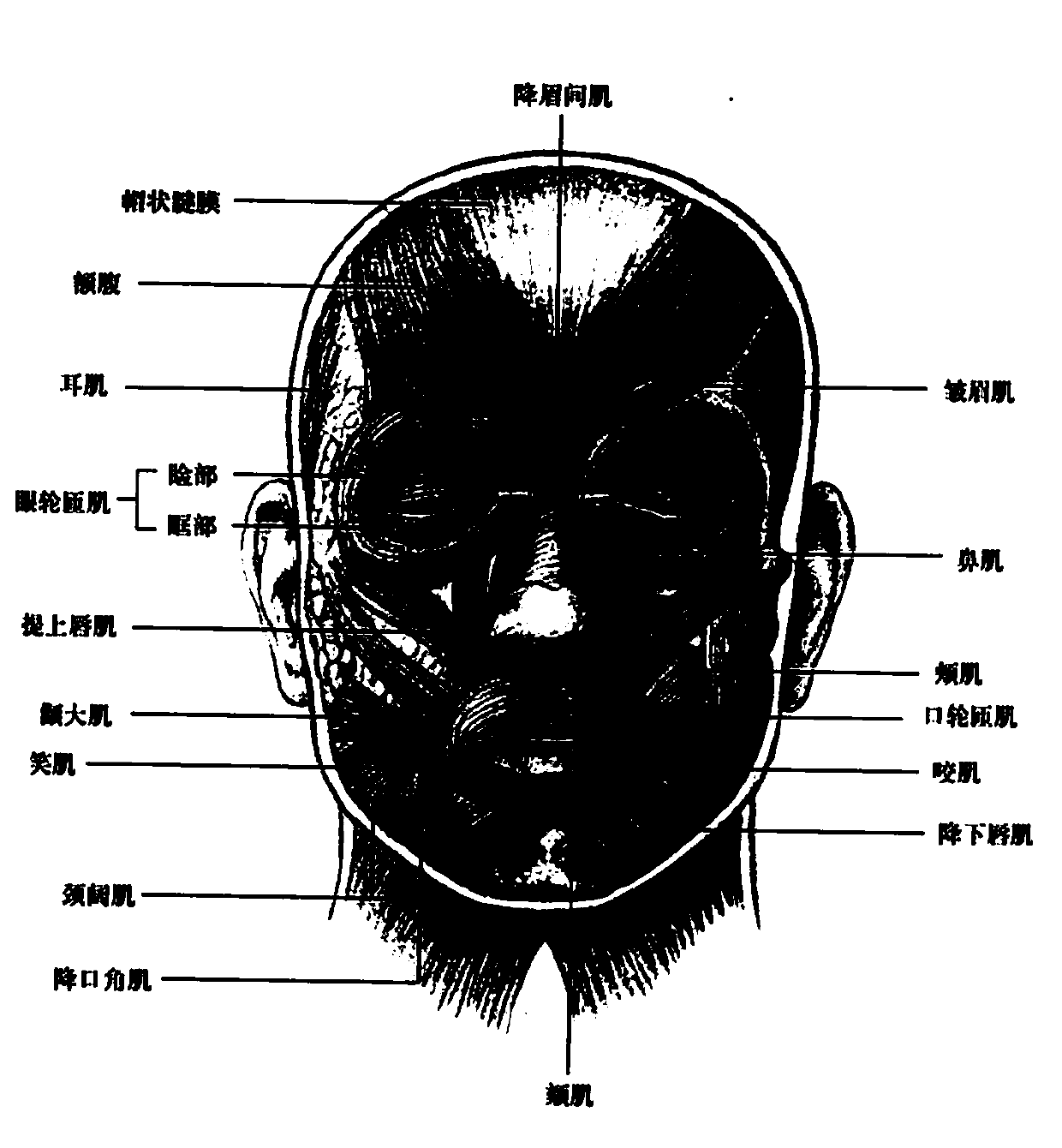

[0050] Step 1: Establish a standard neutral 3D face model and, based on this neutral model, build a basic training set of 3D models corresponding to the facial action units (AUs).
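The text does not say how the AU models are represented. The following is a minimal sketch, assuming each AU is stored as a per-vertex displacement of the neutral model (a blendshape-style representation, which is our assumption), of how a basic training set keyed by AU could be assembled from the neutral model. The function names, the toy meshes, and the offset values are hypothetical; AU 1 and AU 12 follow FACS numbering (inner brow raiser, lip corner puller).

```python
from typing import Dict, List, Tuple

Vertex = Tuple[float, float, float]
Mesh = List[Vertex]


def apply_offsets(neutral: Mesh, offsets: Mesh, weight: float = 1.0) -> Mesh:
    """Return neutral + weight * offsets, vertex by vertex (assumed representation)."""
    if len(neutral) != len(offsets):
        raise ValueError("offset count must match neutral vertex count")
    return [
        (x + weight * dx, y + weight * dy, z + weight * dz)
        for (x, y, z), (dx, dy, dz) in zip(neutral, offsets)
    ]


def build_au_training_set(neutral: Mesh, au_offsets: Dict[int, Mesh]) -> Dict[int, Mesh]:
    """Hypothetical helper: map each AU id to the neutral model with that AU fully activated."""
    return {au: apply_offsets(neutral, off) for au, off in sorted(au_offsets.items())}


if __name__ == "__main__":
    neutral = [(0.0, 0.0, 0.0), (1.0, 0.0, 0.0), (0.0, 1.0, 0.0)]
    au_offsets = {
        12: [(0.0, 0.0, 0.0), (0.1, 0.2, 0.0), (0.0, 0.0, 0.0)],  # made-up lip corner puller offsets
        1: [(0.0, 0.0, 0.0), (0.0, 0.0, 0.0), (0.0, 0.3, 0.0)],   # made-up inner brow raiser offsets
    }
    for au, mesh in build_au_training_set(neutral, au_offsets).items():
        print(au, mesh)
```

Storing displacements rather than full meshes keeps every AU model tied to the same neutral topology, which is what makes the AU models comparable as training samples.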

[0051] A generative adversarial network (GAN) is used to generate an augmented training set of AUs and their corresponding 3D models. The AU models in the established basic training set, together with the model of the target object, serve as the input of the GAN. The GAN generates the AU models of the target object; the correspondence between these AU models and the target object's 3D face model is then established, which expands the training set.
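The GAN architecture and loss are not given in this excerpt, so the sketch below shows only the data flow of the augmentation step under stated assumptions. The generator is passed in as a callable taking (AU model, target object model), matching the two inputs named above. The default stand-in built by `make_delta_transfer` is plain deformation transfer, not a GAN; it is a hypothetical placeholder for a trained GAN generator. All names and toy meshes are hypothetical, and all meshes are assumed to share vertex count and ordering.

```python
from typing import Callable, Dict, List, Tuple

Vertex = Tuple[float, float, float]
Mesh = List[Vertex]
# Assumed generator signature: (AU model from the base set, target object model) -> target AU model.
Generator = Callable[[Mesh, Mesh], Mesh]
# One training sample: (AU id, AU model, 3D face model it corresponds to).
Sample = Tuple[int, Mesh, Mesh]


def make_delta_transfer(source_neutral: Mesh) -> Generator:
    """Hypothetical stand-in for a trained GAN generator (NOT a GAN).

    Copies the per-vertex displacement of a source AU model, relative to the
    source neutral model, onto the target model.
    """
    def generate(au_model: Mesh, target_model: Mesh) -> Mesh:
        if not (len(au_model) == len(source_neutral) == len(target_model)):
            raise ValueError("meshes must share vertex count and ordering")
        return [
            (tx + ax - sx, ty + ay - sy, tz + az - sz)
            for (sx, sy, sz), (ax, ay, az), (tx, ty, tz)
            in zip(source_neutral, au_model, target_model)
        ]
    return generate


def expand_training_set(
    base_set: Dict[int, Mesh],
    source_neutral: Mesh,
    target_model: Mesh,
    generator: Generator,
) -> List[Sample]:
    """Keep the base samples and append one generated sample per AU for the target."""
    expanded: List[Sample] = [(au, mesh, source_neutral) for au, mesh in sorted(base_set.items())]
    for au, au_model in sorted(base_set.items()):
        target_au_model = generator(au_model, target_model)
        # Record the correspondence between the target's AU model and its 3D face model.
        expanded.append((au, target_au_model, target_model))
    return expanded


if __name__ == "__main__":
    source_neutral = [(0.0, 0.0, 0.0), (1.0, 0.0, 0.0)]
    base_set = {12: [(0.0, 0.0, 0.0), (1.1, 0.2, 0.0)]}
    target_model = [(0.0, 0.0, 1.0), (2.0, 0.0, 1.0)]
    gen = make_delta_transfer(source_neutral)
    for sample in expand_training_set(base_set, source_neutral, target_model, gen):
        print(sample)
```

Keeping the base samples and appending the generated ones mirrors "expanding the training set"; swapping in a learned GAN generator would change only the `generator` argument.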

[0052] Step 2: Analyze the facial expression of the target object to...
