Dynamically adjusted cache data management and elimination method

A dynamically adjusted cache data management technology, applied in the computer field, that addresses problems such as cache pollution and hot data being squeezed out of the cache, and achieves the effects of improving the cache hit rate, avoiding cache pollution, and optimizing performance.



Embodiment Construction

[0016] To make the purpose, technical solutions, and beneficial effects of the present invention clearer, the invention is described in further detail below with reference to the accompanying drawings and embodiments.

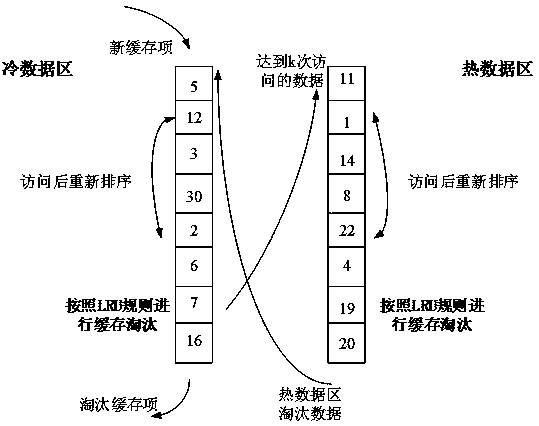

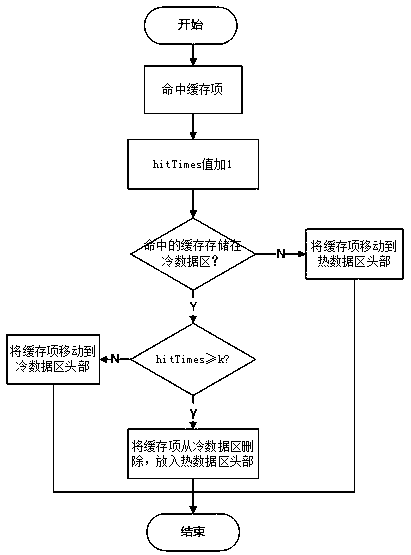

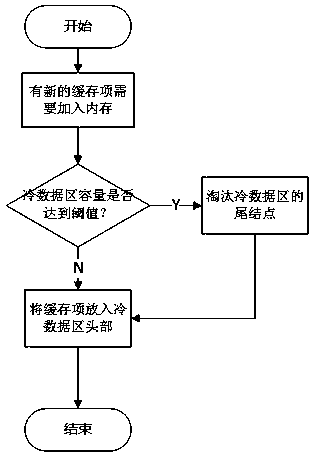

[0017] As shown in Figure 1, the overall mechanism of the dynamically adjusted cache data management and elimination method is as follows. The memory space is divided into a hot data area and a cold data area, each organized and managed as a doubly linked list, referred to as linked list H and linked list C respectively. When a new cache item arrives, it is placed at the head of linked list C and its hitTimes attribute is set to 1. Linked list C evicts items according to the LRU rule. Each time a cache item is hit, its hitTimes is incremented by 1; when the hitTimes of a cache item reaches the set value K, the item is deleted from linked list C and put into the hea...
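The mechanism in paragraph [0017] can be illustrated with a minimal Python sketch. It is not the patented implementation. The class name `TwoAreaCache`, the capacity parameters, and the use of `OrderedDict` in place of hand-written doubly linked lists are all assumptions made for illustration. Because the source text is truncated, two behaviors are also assumed: a promoted item goes to the head of linked list H, and linked list H evicts by plain LRU when full.

```python
from collections import OrderedDict


class TwoAreaCache:
    """Illustrative hot/cold cache; all names and capacities are hypothetical."""

    def __init__(self, cold_capacity, hot_capacity, k):
        self.cold_capacity = cold_capacity
        self.hot_capacity = hot_capacity
        self.k = k  # promotion threshold K from the description
        # OrderedDict stands in for a doubly linked list plus lookup index.
        # The last entry plays the role of the list head (most recently used).
        self.cold = OrderedDict()  # linked list C: key -> [value, hit_times]
        self.hot = OrderedDict()   # linked list H: key -> value

    def put(self, key, value):
        if key in self.hot:
            self.hot[key] = value
            self.hot.move_to_end(key)
            return
        if key in self.cold:
            self.cold[key][0] = value  # update the value, keep the hit count
            self.cold.move_to_end(key)
            return
        # New cache item: head of linked list C with hitTimes = 1.
        self.cold[key] = [value, 1]
        if len(self.cold) > self.cold_capacity:
            self.cold.popitem(last=False)  # LRU eviction from the tail of C

    def get(self, key):
        if key in self.hot:
            self.hot.move_to_end(key)
            return self.hot[key]
        entry = self.cold.get(key)
        if entry is None:
            return None  # cache miss
        entry[1] += 1  # each hit adds 1 to hitTimes
        if entry[1] >= self.k:
            # hitTimes reached K: remove from C and promote.
            del self.cold[key]
            self.hot[key] = entry[0]  # ASSUMPTION: placed at the head of H
            if len(self.hot) > self.hot_capacity:
                self.hot.popitem(last=False)  # ASSUMPTION: plain LRU for H
        else:
            self.cold.move_to_end(key)  # LRU refresh within C
        return entry[0]
```

With `k=3`, an item inserted once and then read twice is promoted to the hot area, while items that are written once and never read again only ever compete for space in the cold area, which is how the scheme keeps one-off accesses from displacing hot data.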
