Multi-focus image fusion method based on directional filter and deconvolutional neural network

A multi-focus image fusion technique based on directional filters, applied in the field of image processing. It addresses the problems that existing methods cannot accurately extract the detail information of multi-focus images, cannot form a semantic representation of multi-focus images, and cannot accurately describe image detail information. It achieves the effects of saving training time, reducing computational complexity, and keeping the design simple.

Embodiment Construction

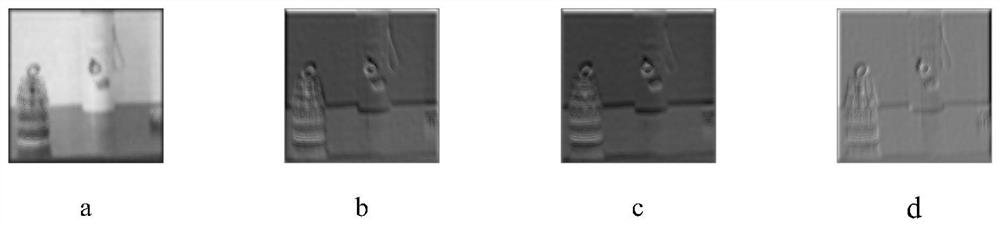

[0036] The embodiments and effects of the present invention will be described in detail below in conjunction with the accompanying drawings.

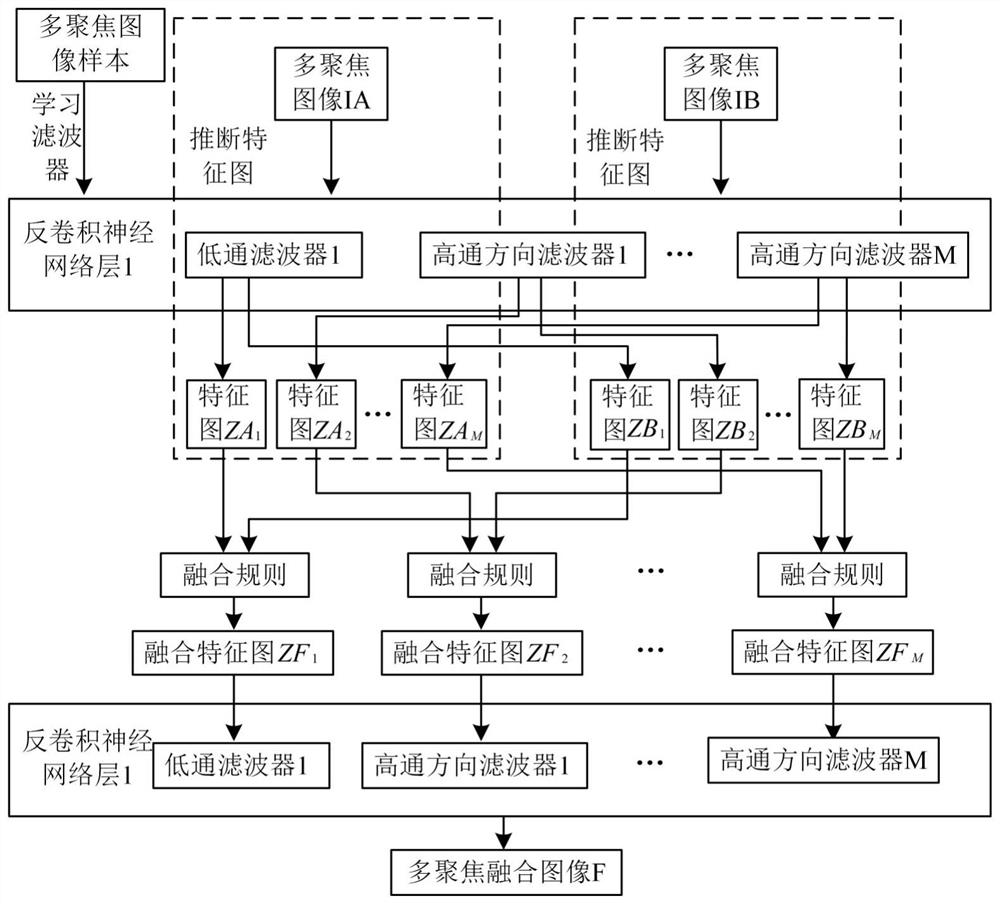

[0037] Referring to Figure 1, the multi-focus image fusion method of the present invention, based on directional filters and a deconvolutional neural network, is implemented in the following steps:

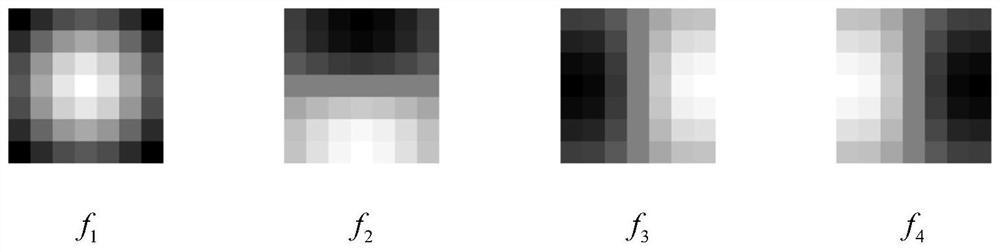

[0038] Step 1: Design an initial high-pass directional filter and an initial Gaussian low-pass filter.

[0039] The high-pass directional filter accurately extracts the detail information of the image in its corresponding direction, while the Gaussian low-pass filter smooths the image and suppresses noise. They are designed as follows:

[0040] (1.1) Design an initial Gaussian low-pass filter of size N with cutoff frequency δ₁:

[0041] From the two-dimensional Gaussian function, the Gaussian low-pass filter with cutoff frequency δ₁ is obtained; its calculation formula is as follows:

[0042...
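As an illustration of step (1.1), the following is a minimal sketch of a size-N Gaussian low-pass filter. The patent's own formula is not available in this text, so the sketch assumes the textbook frequency-domain form H(u, v) = exp(-D(u, v)² / (2·δ₁²)), where D is the distance from the centre of the filter. The function name `gaussian_lowpass` and its parameters `n` and `delta1` are hypothetical and chosen only for this example.

```python
import math


def gaussian_lowpass(n, delta1):
    """Return an n x n Gaussian low-pass filter as a list of rows.

    Assumption (not taken from the patent text): the filter follows
    H(u, v) = exp(-D(u, v)^2 / (2 * delta1^2)), with D measured from
    the centre element at index (n // 2, n // 2).
    """
    c = n // 2  # index of the centre (zero-frequency) element
    return [
        [
            math.exp(-((u - c) ** 2 + (v - c) ** 2) / (2.0 * delta1 ** 2))
            for v in range(n)
        ]
        for u in range(n)
    ]


if __name__ == "__main__":
    # Print a small 5 x 5 filter with delta1 = 1.0, rounded for readability.
    for row in gaussian_lowpass(5, 1.0):
        print(["%.3f" % value for value in row])
```

Under this assumption, the centre element equals 1 and the response falls off with distance from the centre; a larger `delta1` gives a wider pass band and therefore less smoothing.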
