Robot object grabbing system, method and device

A robot object grabbing technology, applied in the field of robotics, that addresses the problems of low object grasping efficiency, high hardware cost, and restrictive requirements on object appearance.

Examples

Example 1

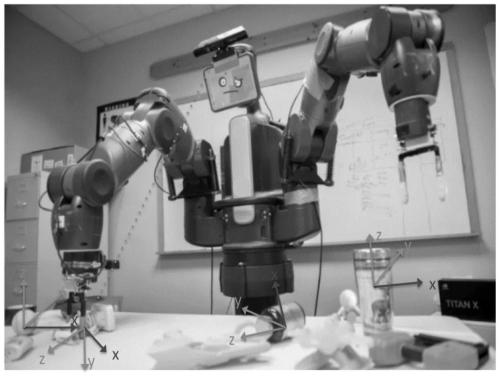

[0092] Please refer to Figure 1, which is a schematic structural diagram of an embodiment of the robot object grabbing system provided by the present application. In this embodiment, the system includes a server 1 and a robot 2.

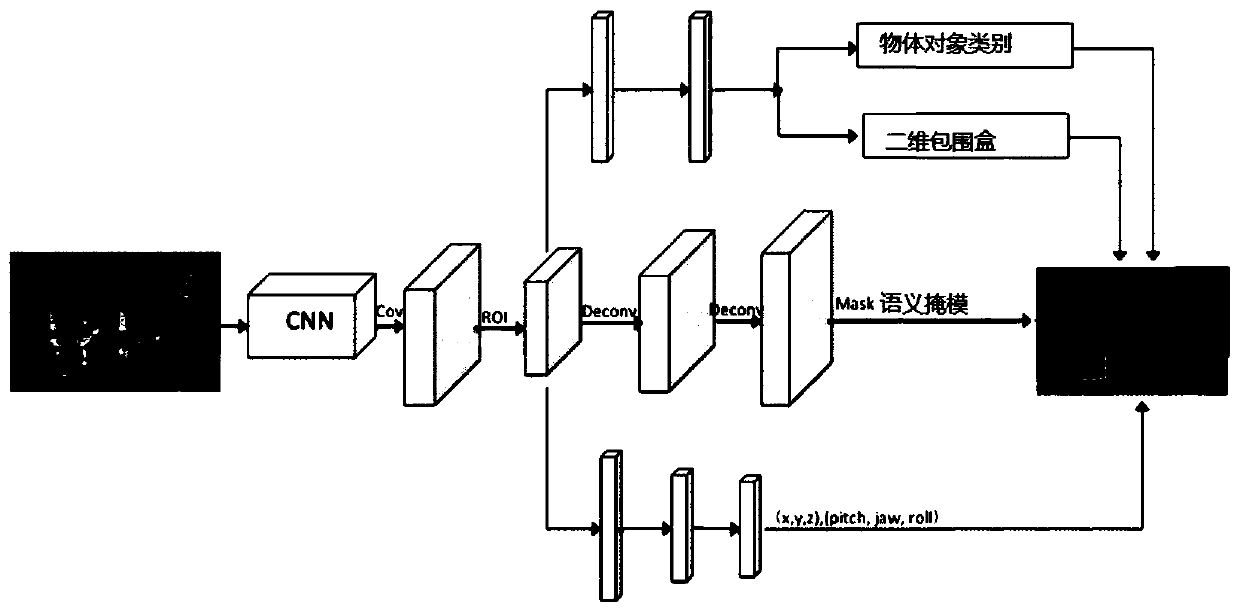

[0093] The server 1 is used to: obtain a training data set; learn an object grasping information recognition model from the training data set, where the model includes an object grasping feature extraction subnetwork, an object category recognition subnetwork, and a pose parameter recognition subnetwork; and send the model to the robot 2.
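The three-part structure described above (one shared feature extraction subnetwork feeding a category head and a pose head) can be sketched roughly as follows. This is only a structural illustration: the class name `GraspInfoModel`, the tiny linear layers, the weight values, and the three-element pose vector are all assumptions made for the sketch, and the source does not specify the actual layers or output dimensions.

```python
from dataclasses import dataclass
from typing import List, Sequence, Tuple


def _linear(weights: Sequence[Sequence[float]], x: Sequence[float]) -> List[float]:
    """Multiply a weight matrix (given as a list of rows) by a vector."""
    return [sum(w * v for w, v in zip(row, x)) for row in weights]


@dataclass
class GraspInfoModel:
    """Toy stand-in (hypothetical): a shared extractor feeding two heads."""
    feature_weights: List[List[float]]   # object grasping feature extraction subnetwork
    category_weights: List[List[float]]  # object category recognition subnetwork
    pose_weights: List[List[float]]      # pose parameter recognition subnetwork

    def extract_features(self, image: Sequence[float]) -> List[float]:
        # Linear map followed by ReLU; a real model would use a deep network.
        return [max(0.0, v) for v in _linear(self.feature_weights, image)]

    def predict(self, image: Sequence[float]) -> Tuple[int, List[float]]:
        # Both heads consume the same features, computed once.
        features = self.extract_features(image)
        scores = _linear(self.category_weights, features)
        category = max(range(len(scores)), key=scores.__getitem__)
        pose = _linear(self.pose_weights, features)
        return category, pose


# Illustrative weights only; in the described system the server learns them.
model = GraspInfoModel(
    feature_weights=[[1.0, 0.0, 0.0, 0.0], [0.0, 1.0, 0.0, 0.0]],
    category_weights=[[1.0, 0.0], [0.0, 1.0], [-1.0, -1.0]],
    pose_weights=[[0.5, 0.0], [0.0, 0.5], [1.0, 1.0]],
)
category, pose = model.predict([0.2, 0.9, 0.0, 0.0])
```

Sharing the feature extractor means the image is processed once and both outputs are derived from the same intermediate representation, which is the main point the sketch is meant to convey.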

[0094] The training data set includes a plurality of training data items. Each item records the correspondence among a training environment image, the category of at least one object in that image, and the pose parameters of the at least one object relative to the camera coordinate system.
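One way to picture a single training data item is as a record that ties an image to per-object labels. The sketch below is illustrative only: the names `ObjectLabel` and `TrainingSample`, the file name, and the six-value pose layout (position plus roll, pitch, yaw) are assumptions, since the source does not state how the pose parameters are encoded.

```python
from dataclasses import dataclass, field
from typing import List, Tuple


@dataclass(frozen=True)
class ObjectLabel:
    category: str
    # Assumed layout: (x, y, z, roll, pitch, yaw) in the camera coordinate system.
    pose_in_camera: Tuple[float, float, float, float, float, float]


@dataclass
class TrainingSample:
    image_path: str
    objects: List[ObjectLabel] = field(default_factory=list)

    def __post_init__(self) -> None:
        # The text requires at least one labelled object per training image.
        if not self.objects:
            raise ValueError("each training sample needs at least one labelled object")


# Hypothetical example values.
sample = TrainingSample(
    image_path="scene_0001.png",
    objects=[ObjectLabel("cup", (0.10, -0.05, 0.60, 0.0, 0.0, 1.57))],
)
training_set = [sample]
```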

[0095] In the embodiment of the present application, in order to distinguish ...

Example 2

[0147] Please refer to Figure 5, which is a flow chart of an embodiment of a method for a robot to grab an object provided in this application. The executing subject of the method includes a robot. Because the method embodiment is largely similar to the system embodiment, it is described only briefly; for the relevant details, refer to the corresponding description of the system embodiment. The method embodiment described below is illustrative only.

[0148] The method for grabbing an object by a robot provided in the present application includes:

[0149] Step S501: Collect the current environment image through the image acquisition device.

[0150] Step S503: Extract object grasping features from the current environment image through the object grasping feature extraction subnetwork included in the object grasping information recognition model.

[0151] Step S505: Obtain the category of at least one object in the current environment image according to ...
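The order of steps S501 to S505 can be sketched as a small pipeline of injected callables. The function name `run_grasp_recognition` and the stub camera, extractor, and category recognizer are hypothetical placeholders; the sketch stops at step S505 because the remainder of the method is not shown here.

```python
from typing import Callable, List, Sequence

Image = Sequence[float]
Features = Sequence[float]


def run_grasp_recognition(
    capture_image: Callable[[], Image],
    extract_features: Callable[[Image], Features],
    recognize_categories: Callable[[Features], List[str]],
) -> List[str]:
    """Run the first three steps in order and return the object categories."""
    image = capture_image()                # Step S501: collect the environment image
    features = extract_features(image)     # Step S503: extract grasping features
    return recognize_categories(features)  # Step S505: obtain object categories


# Stub components standing in for the camera and the learned subnetworks.
def fake_camera() -> Image:
    return [0.2, 0.9, 0.0, 0.0]


def fake_extractor(image: Image) -> Features:
    return [max(0.0, v) for v in image[:2]]


def fake_category_head(features: Features) -> List[str]:
    labels = ["box", "cup"]
    best = max(range(len(features)), key=lambda i: features[i])
    return [labels[best]]


categories = run_grasp_recognition(fake_camera, fake_extractor, fake_category_head)
```

Passing the components in as callables keeps the step ordering separate from any particular camera driver or model implementation.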

Example 3

[0171] Please refer to Figure 7, which is a schematic diagram of an embodiment of the robot object grabbing device of the present application. Because the device embodiment is largely similar to the method embodiment, it is described only briefly; for the relevant details, refer to the corresponding description of the method embodiment. The device embodiment described below is illustrative only.

[0172] The present application further provides a robot object grabbing device, including:

[0173] An image acquisition unit 701, configured to acquire an image of the current environment through an image acquisition device;

[0174] A feature extraction unit 702, configured to extract object grasping features from the current environment image through the object grasping feature extraction subnetwork included in the object grasping information recognition model;

[0175] The object category identification unit 703 is configured to obtain the category of at lea...
