Multi-focus image fusion method based on multi-scale transformation and convolution sparse representation

A multi-focus image fusion method in the field of multi-scale transformation models and convolution sparse representation models. It addresses the low brightness and contrast loss of existing convolution sparse representation fusion algorithms, and achieves a clear fusion effect with good merging results.


Embodiment 1

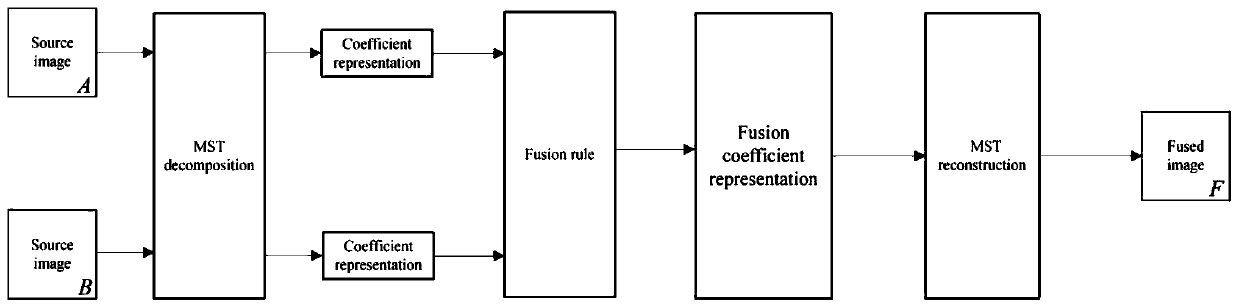

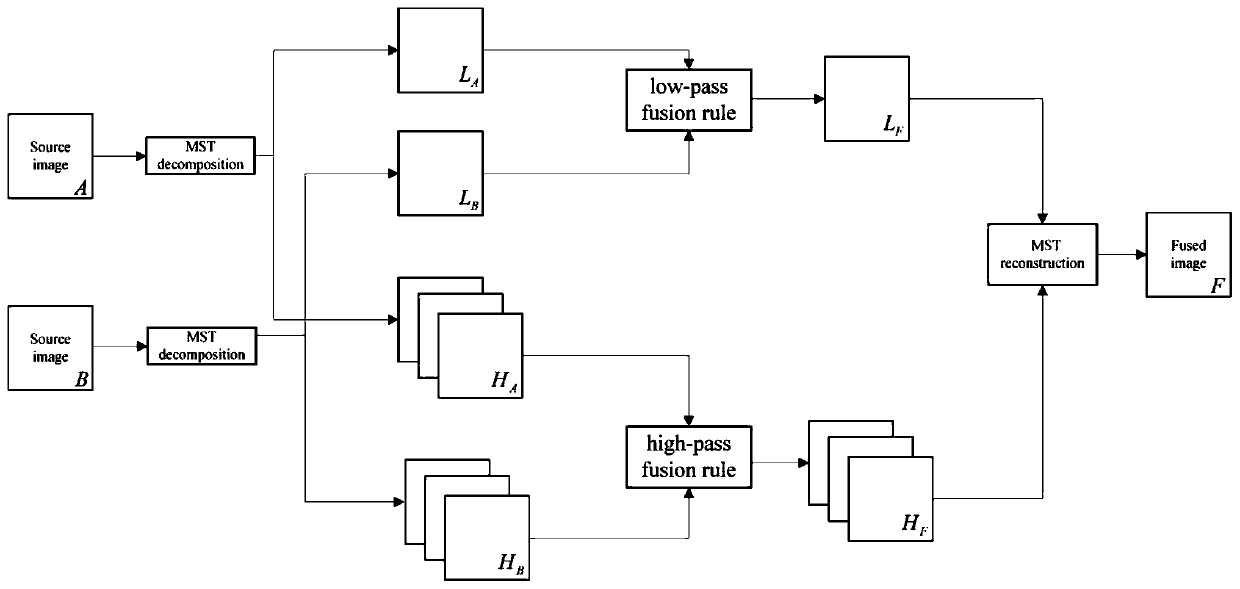

[0041] Multi-scale transformation and convolution sparse representation fusion model:

[0042] Convolutional sparse representation (CSR) can be regarded as a convolutional form of the sparse representation model, which aims to obtain a sparse representation of the entire image rather than of local image patches. The basic idea of CSR is to model the entire image $s \in \mathbb{R}^N$ as the sum of a set of convolutions between coefficient maps $x_m \in \mathbb{R}^N$ and their corresponding dictionary filters $d_m \in \mathbb{R}^{n \times n \times m}$ (n …

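[0043] $$\arg\min_{\{x_m\}} \frac{1}{2}\left\| \sum_{m} d_m * x_m - s \right\|_2^2 + \lambda \sum_{m} \left\| x_m \right\|_1 \qquad (1)$$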

[0044] where $*$ denotes the convolution operator. Problem (1) is solved with the Convolutional Basis Pursuit Denoising (CBPDN) algorithm based on the Alternating Direction Method of Multipliers (ADMM). The framework of the multi-scale transformation and convolution sparse representation fusion model is shown in Figure 1. For convenience of description, two geometrically registered...
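As a rough illustration of paragraphs [0042] to [0044], the NumPy sketch below (not the patent's implementation) builds an image as $\sum_m d_m * x_m$ and recovers sparse coefficient maps by solving the CBPDN problem $\min_{\{x_m\}} \tfrac{1}{2}\|\sum_m d_m * x_m - s\|_2^2 + \lambda \sum_m \|x_m\|_1$ with ADMM. It assumes a single-channel image, a filter bank stored as an `(n, n, M)` array, and circular boundary conditions so that every convolution becomes a pointwise product in the DFT domain. The function names `csr_synthesize`, `soft_threshold` and `cbpdn_admm` and the parameters `lam` ($\lambda$), `rho` (ADMM penalty) and `n_iter` are hypothetical choices for this example.

```python
import numpy as np


def csr_synthesize(D, X):
    """Return sum_m d_m * x_m using circular 2-D convolution.

    D: filter bank, shape (n, n, M); X: coefficient maps, shape (H, W, M).
    """
    H, W, M = X.shape
    assert D.shape[2] == M, "expected one coefficient map per filter"
    Df = np.fft.fft2(D, s=(H, W), axes=(0, 1))  # zero-pad filters to image size
    Xf = np.fft.fft2(X, axes=(0, 1))
    # Convolution is a pointwise product in the DFT domain; sum over filters.
    return np.real(np.fft.ifft2(np.sum(Df * Xf, axis=2)))


def soft_threshold(v, t):
    """Proximal operator of t * ||.||_1 (element-wise shrinkage)."""
    return np.sign(v) * np.maximum(np.abs(v) - t, 0.0)


def cbpdn_admm(D, s, lam=0.05, rho=1.0, n_iter=200):
    """Minimal ADMM solver for CBPDN, assuming circular boundary conditions.

    Solves min_x 0.5 * ||sum_m d_m * x_m - s||^2 + lam * sum_m ||x_m||_1
    with the splitting x = y. Returns the sparse maps y, shape (H, W, M).
    """
    H, W = s.shape
    M = D.shape[2]
    Df = np.fft.fft2(D, s=(H, W), axes=(0, 1))          # (H, W, M)
    Sf = np.fft.fft2(s)                                 # (H, W)
    DhS = np.conj(Df) * Sf[:, :, None]                  # D^H s per frequency
    denom = rho + np.sum(np.abs(Df) ** 2, axis=2, keepdims=True)
    Y = np.zeros((H, W, M))
    U = np.zeros((H, W, M))                             # scaled dual variable
    for _ in range(n_iter):
        # x-update: solve (D^H D + rho I) x = D^H s + rho (y - u). At each
        # frequency D^H D is rank one, so Sherman-Morrison solves it exactly.
        Bf = DhS + rho * np.fft.fft2(Y - U, axes=(0, 1))
        DBf = np.sum(Df * Bf, axis=2, keepdims=True)
        Xf = (Bf - np.conj(Df) * DBf / denom) / rho
        X = np.real(np.fft.ifft2(Xf, axes=(0, 1)))
        # y-update: shrinkage enforces the l1 penalty.
        Y = soft_threshold(X + U, lam / rho)
        # Dual update.
        U = U + X - Y
    return Y
```

Because $D^H D + \rho I$ is a rank-one update of $\rho I$ at every frequency, the x-update has a closed form and needs no general linear solve; this frequency-domain strategy is a common choice for CBPDN solvers. A typical call is `X = cbpdn_admm(D, s, lam=0.05)`, after which `csr_synthesize(D, X)` approximates `s`. Boundary handling, stopping criteria and the value of `rho` would need tuning in practice.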
