Dynamic region division method, region channel identification method and cleaning robot

A dynamic region division and robot technology, applied in the field of cleaning robots. It addresses the problem that robots cannot make good use of sub-area cleaning, and achieves the effects of reducing the probability of shuttling back and forth during work, improving cleaning efficiency, and providing high environmental adaptability.


AI Technical Summary

Problems solved by technology

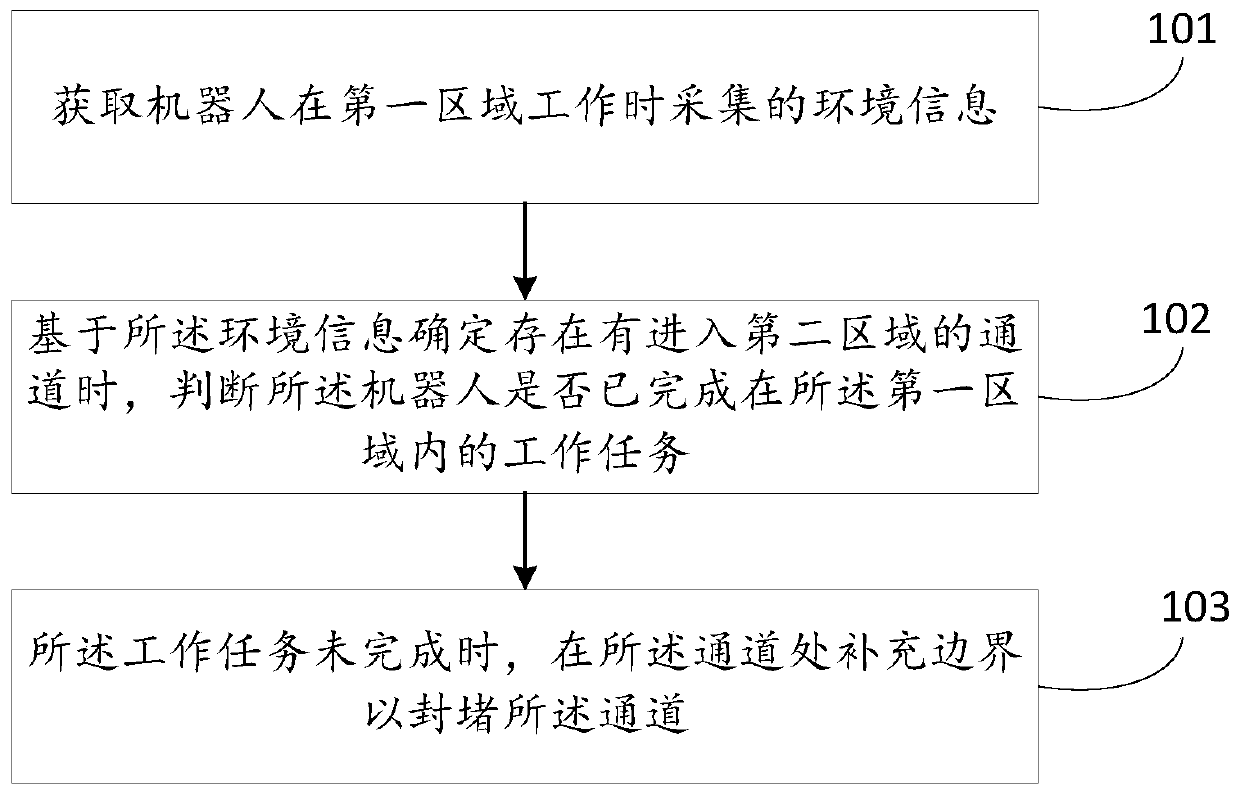

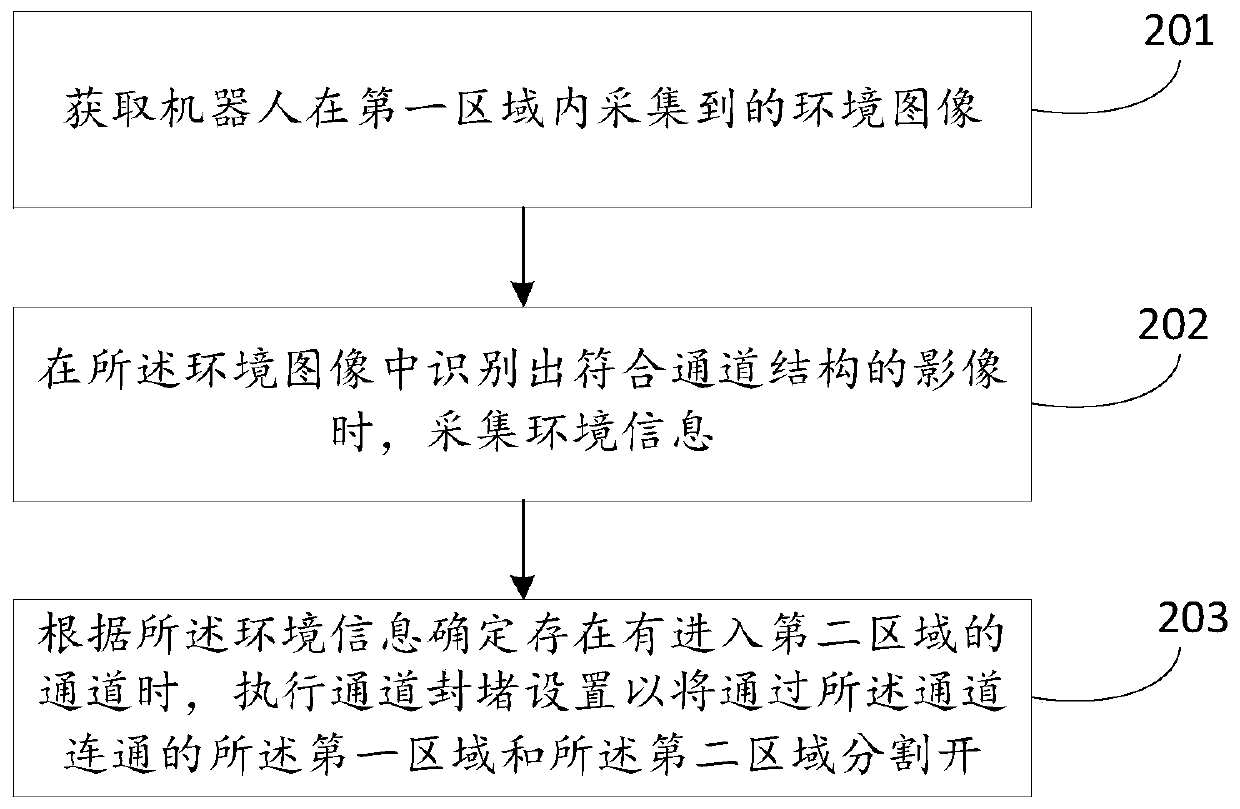


Embodiment Construction

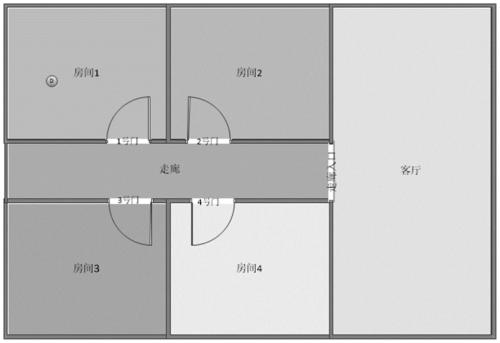

[0051] Cleaning robots, such as sweeping robots, clean a home by traversing the entire floor area. If the robot cannot distinguish different rooms and clean them separately, it will repeatedly enter and exit the same room, or alternate between different rooms, so that it needs several entries and exits to finish cleaning a single room. This directly leads to low cleaning efficiency and indirectly leads to repeated sweeping, missed sweeping, and in some cases failure to clean the entire home. To solve these problems, the robot needs to identify rooms and follow the principle of finishing one room before entering the next.
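To make the efficiency argument concrete, the following minimal sketch counts how often a robot crosses from one room into another for two traversal orders. It is an illustration only, not the method of this patent: the function name `count_room_transitions`, the room labels, and both traversal logs are hypothetical and chosen purely for the example.

```python
from itertools import groupby


def count_room_transitions(visited_rooms):
    """Count how many times the robot moves from one room into a different one.

    `visited_rooms` is a hypothetical log with one room label per cleaned cell.
    """
    # Collapse consecutive cells in the same room into a single visit.
    visits = [room for room, _ in groupby(visited_rooms)]
    return max(len(visits) - 1, 0)


# Hypothetical logs (assumed data): the same eight cells cleaned in two orders.
interleaved = ["A", "B", "A", "B", "C", "A", "C", "B"]
room_by_room = ["A", "A", "A", "B", "B", "B", "C", "C"]

print(count_room_transitions(interleaved))   # 7 room changes
print(count_room_transitions(room_by_room))  # 2 room changes
```

Under these assumed logs, finishing each room before moving on needs only two room changes, while the interleaved order needs seven, which is the kind of repeated entering and exiting the paragraph above describes.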

[0052] The prior art includes a static partitioning scheme. In a static partitioning scheme, after the cleaning robot completes at least one cleaning run, it can outline the floor plan of the entire house; the floor plan is then partitioned to divide it into different rooms, a...
