An edge caching method, device and electronic device based on reinforcement learning

A technology combining reinforcement learning and caching, applied in machine learning, electrical components, instruments, and related fields. It addresses problems such as large impact and wasted cache space, and has the effects of small coverage, reduced delay, an improved cache hit rate, and improved cache space utilization.



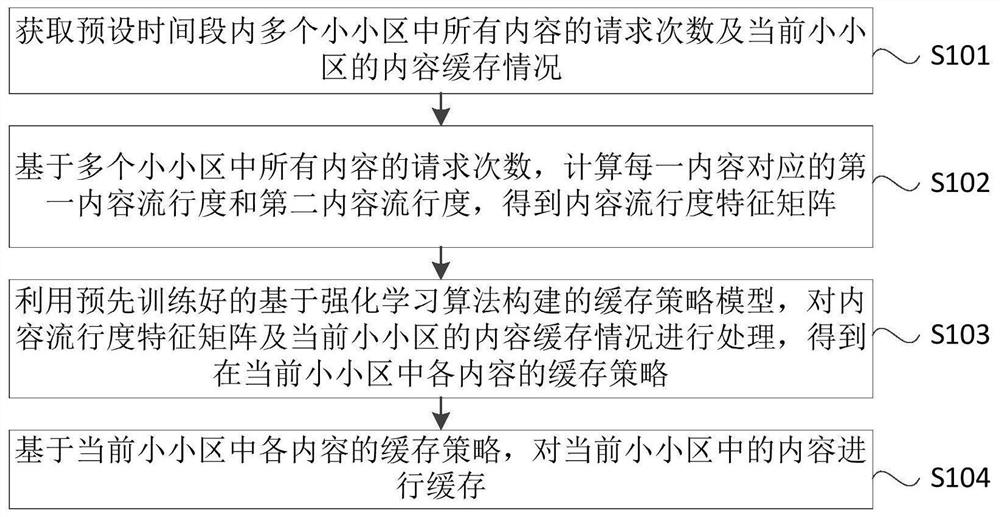

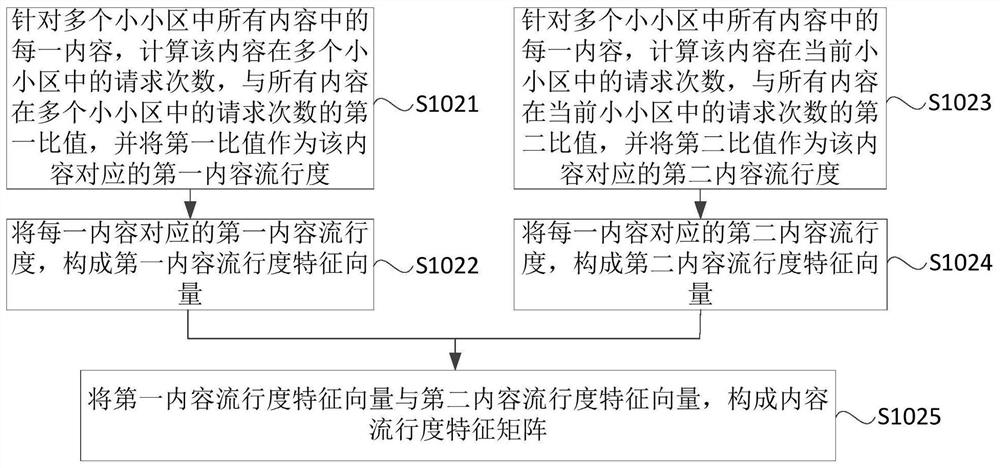


Embodiment approach

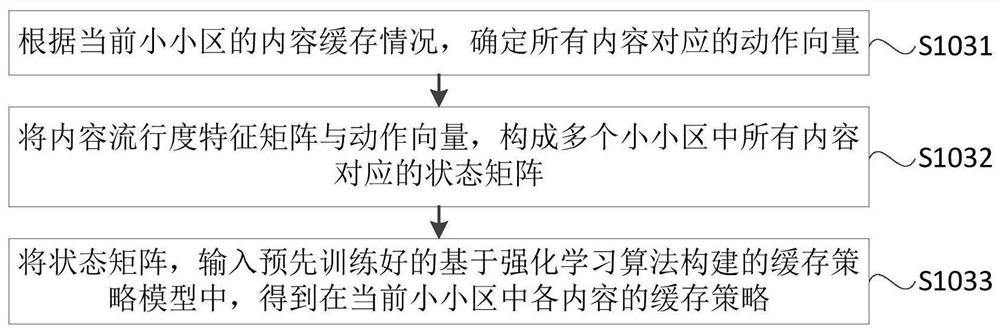

[0107] As an optional implementation of the embodiment of the present invention, as shown in image 3, the step of using the pre-trained caching strategy model based on the reinforcement learning algorithm to process the content popularity feature matrix and the content caching status of the current small cell, so as to obtain the caching strategy for each content item in the current small cell, may include:

[0108] S1031. Determine the action vectors corresponding to all content items according to the content caching status of the current small cell.

[0109] In the embodiment of the present invention, the pre-trained caching strategy model based on the reinforcement learning algorithm is obtained by training on the sample content popularity feature matrix, the content caching status corresponding to the sample content, and the action vectors corresponding to the sample content within a period of time. And after training the caching strategy model for the request and cache status...
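Step S1031 and the model inputs described above can be illustrated with a minimal sketch. The excerpt does not state how an action vector is encoded or how the trained model scores it, so everything below is an assumption made for illustration: the cache status is taken to be a binary vector over content items, an action vector is taken to be the placement that results from keeping the cache unchanged or swapping one cached item for one uncached item, the popularity feature matrix is taken to hold one row of recent request counts per content item, and `toy_score` is a hypothetical stand-in for the trained caching strategy model.

```python
from typing import Callable, List

CacheStatus = List[int]          # assumed encoding: 1 = cached, 0 = not cached
Popularity = List[List[float]]   # assumed: one row of request counts per content item


def candidate_action_vectors(cache_status: CacheStatus) -> List[CacheStatus]:
    """Enumerate candidate action vectors from the current cache status.

    Assumption (not from the source): each action is either a no-op or a
    swap of exactly one cached item for one uncached item.
    """
    cached = [i for i, c in enumerate(cache_status) if c == 1]
    uncached = [i for i, c in enumerate(cache_status) if c == 0]
    actions = [list(cache_status)]  # no-op: keep the current placement
    for out_idx in cached:
        for in_idx in uncached:
            nxt = list(cache_status)
            nxt[out_idx] = 0  # evict one cached item
            nxt[in_idx] = 1   # admit one uncached item
            actions.append(nxt)
    return actions


def select_action(
    popularity: Popularity,
    cache_status: CacheStatus,
    score_fn: Callable[[Popularity, CacheStatus, CacheStatus], float],
) -> CacheStatus:
    """Return the candidate action with the highest score from score_fn."""
    candidates = candidate_action_vectors(cache_status)
    return max(candidates, key=lambda a: score_fn(popularity, cache_status, a))


def toy_score(popularity: Popularity, cache_status: CacheStatus,
              action: CacheStatus) -> float:
    """Hypothetical stand-in for the trained model: sum the most recent
    request count of every item the action would keep in the cache."""
    return sum(row[-1] for row, keep in zip(popularity, action) if keep)


if __name__ == "__main__":
    popularity = [[5, 1], [2, 9], [3, 4], [1, 0]]  # made-up request counts
    cache_status = [1, 0, 1, 0]                    # items 0 and 2 are cached
    print(select_action(popularity, cache_status, toy_score))
```

With these made-up inputs, the sketch swaps item 0 out for item 1, because item 1 has the highest recent request count among the uncached items. In a trained system the scoring function would be the learned model rather than this heuristic, and the swap-based action set keeps the number of cached items constant, which is one simple way to respect a fixed cache capacity.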
