Near-duplicate video detection method based on label features from convolutional neural network semantic classification

This technology combines convolutional neural networks with classification labeling and is applied in the field of multimedia information processing. It addresses the lack of semantic features and low detection efficiency, and achieves efficient video similarity matching, guaranteed feature quality, and a small storage footprint.


Embodiment Construction

[0039] The implementation of the method of the present invention will be described in detail below in conjunction with the drawings and examples.

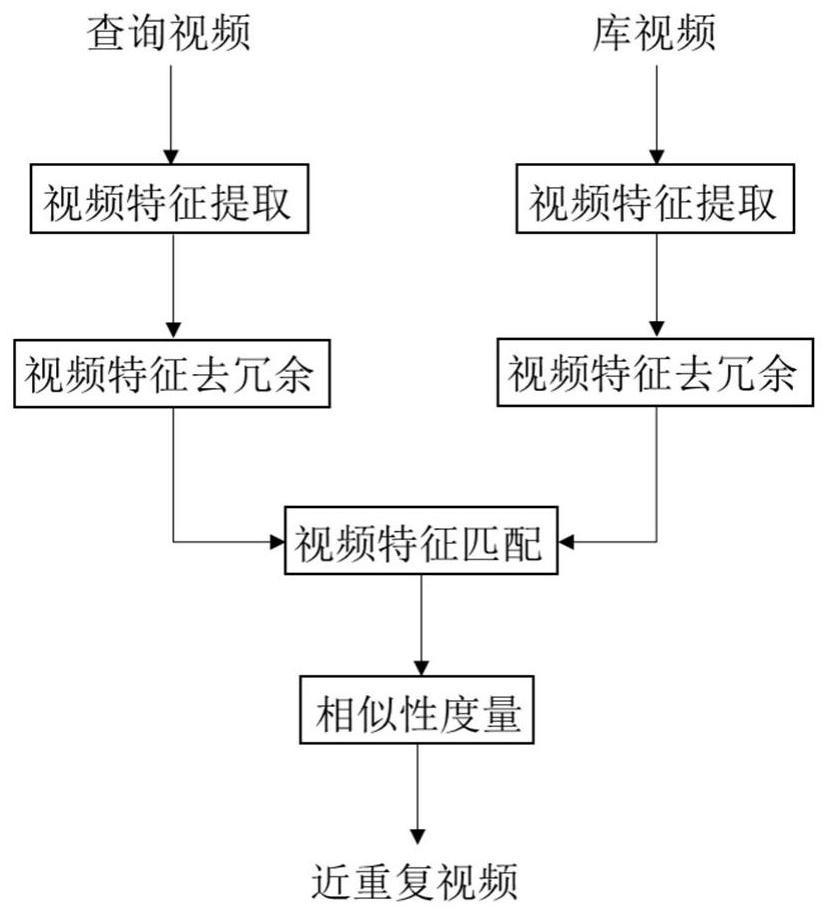

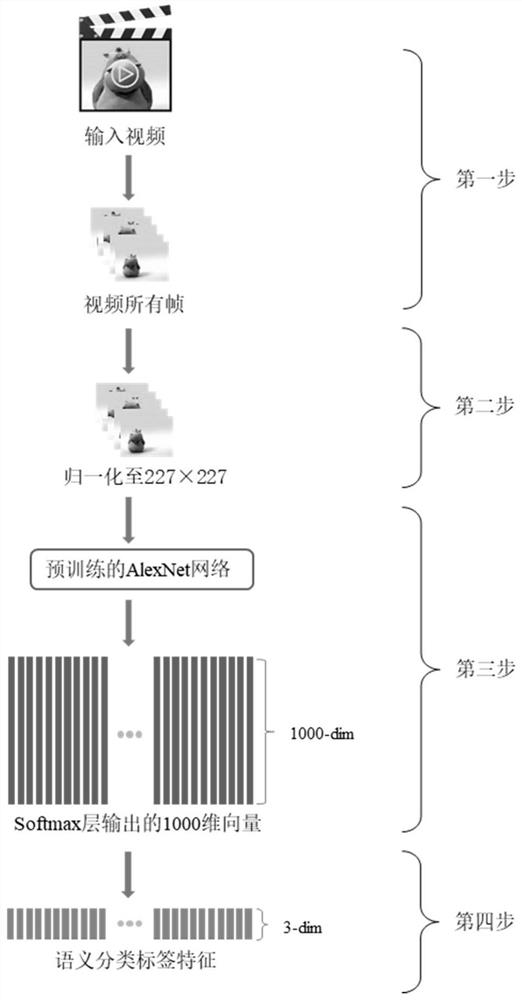

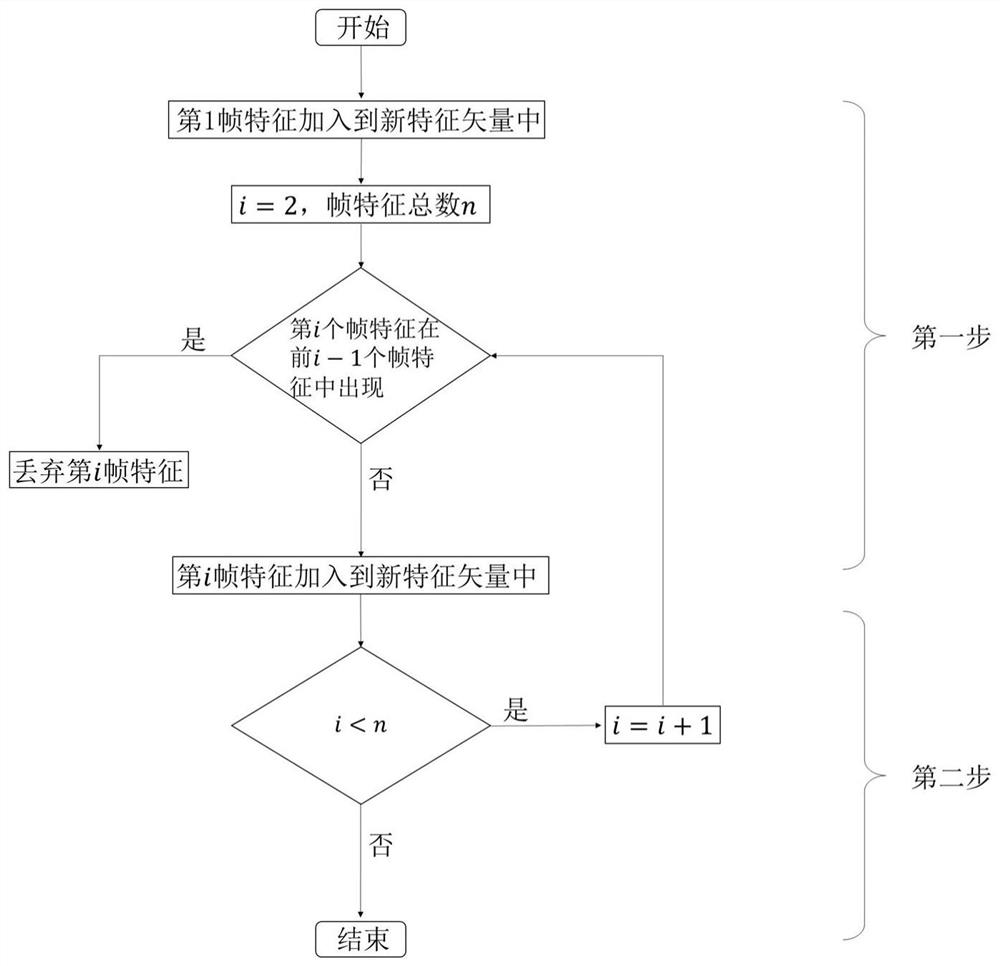

[0040] Figure 1 is the overall flowchart of the implementation process of the present invention. The present invention provides a near-duplicate video detection method based on label features from convolutional neural network semantic classification. The method first extracts dense semantic classification label features from the video. Second, it removes feature redundancy by exploiting the repetition among the frame-level label features of the same video, which yields the semantic classification label features of the video. It then performs similarity matching between the feature vectors of the query video and those of the library videos. Finally, it measures the similarity between two videos by computing the Jaccard coefficient, which allows near-duplicate videos to be detected. Among them, ...
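The label-set matching described above can be illustrated with a minimal Python sketch. It rests on several assumptions not stated in the patent: each sampled frame is represented by the indices of its top-k CNN classes (the CNN itself is not modelled, only its class scores), redundancy removal is taken to mean keeping each distinct label once per video, and a fixed similarity threshold decides whether two videos are near-duplicates. The functions `frame_labels`, `video_label_set`, `jaccard` and `find_near_duplicates`, and the values of `k` and `threshold`, are hypothetical. The Jaccard coefficient itself is the standard J(A, B) = |A ∩ B| / |A ∪ B|.

```python
from typing import Dict, List, Sequence, Set, Tuple


def frame_labels(class_scores: Sequence[float], k: int = 3) -> Set[int]:
    """Return the indices of the k highest-scoring classes for one frame.

    `class_scores` stands in for the output of a CNN classifier
    (hypothetical input; the network itself is not modelled here).
    """
    ranked = sorted(range(len(class_scores)),
                    key=lambda i: class_scores[i], reverse=True)
    return set(ranked[:k])


def video_label_set(per_frame_scores: List[Sequence[float]], k: int = 3) -> Set[int]:
    """Merge frame-level label sets into one video-level set.

    Labels repeated across frames are kept only once, which is one
    possible reading of the redundancy-removal step (assumption).
    """
    labels: Set[int] = set()
    for scores in per_frame_scores:
        labels |= frame_labels(scores, k)
    return labels


def jaccard(a: Set[int], b: Set[int]) -> float:
    """Jaccard coefficient |A & B| / |A | B|; 0.0 when both sets are empty."""
    union = a | b
    if not union:
        return 0.0
    return len(a & b) / len(union)


def find_near_duplicates(query: Set[int],
                         library: Dict[str, Set[int]],
                         threshold: float = 0.5) -> List[Tuple[str, float]]:
    """Return (video_id, similarity) pairs at or above a hypothetical
    threshold, sorted from most to least similar."""
    scored = [(vid, jaccard(query, labels)) for vid, labels in library.items()]
    hits = [(vid, s) for vid, s in scored if s >= threshold]
    return sorted(hits, key=lambda pair: pair[1], reverse=True)


if __name__ == "__main__":
    query = {1, 2, 3, 4}
    library = {"a": {1, 2, 3, 5}, "b": {7, 8}}
    print(find_near_duplicates(query, library))
```

For example, a query with labels {1, 2, 3, 4} and a library video with labels {1, 2, 3, 5} share 3 of 5 distinct labels, so J = 0.6, which passes the illustrative 0.5 threshold, while a video with labels {7, 8} scores 0. Representing each video as a small set of class indices, rather than as per-frame feature vectors, is consistent with the small storage footprint claimed for the method.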

