Construction method and device of visual point cloud map

A map construction method and device, applied in the field of navigation and positioning

Examples

Embodiment 1

[0036] For ease of understanding, this embodiment uses image data collected by a monocular camera, with ground texture images as the example. It should be understood that this embodiment is not limited to ground texture images; other images are also applicable.

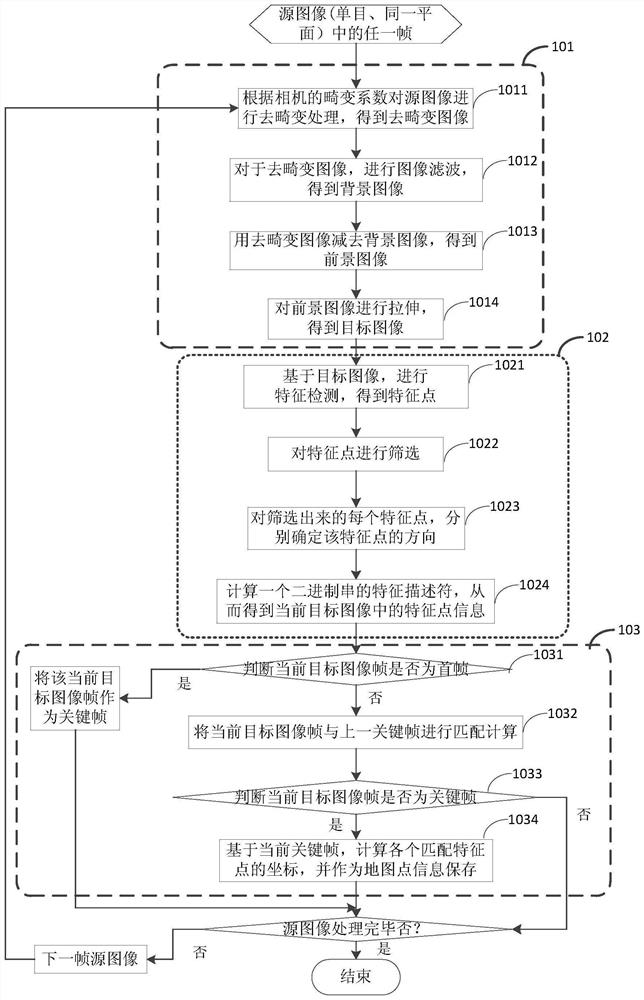

[0037] Referring to Figure 1, which is a schematic diagram of a process for building a map based on image data collected by a monocular camera, the process mainly includes image preprocessing, feature extraction, and inter-frame tracking. Specifically, the following steps are performed for each source image frame:

[0038] Step 101: take the collected image as the source image and preprocess it to obtain the target image, so that the feature points in the image can be extracted. For example, for a ground texture image, the purpose of the preprocessing is to obtain an image dominated by texture information.

[0039] Step 1011: perform de-distortion processing on the source image according to the d...

Embodiment 2

[0091] This embodiment uses, as its example, image data collected by a monocular camera where the collected images do not lie on the same plane. For instance, the camera is mounted facing forward, so the mobile robot collects images through a forward-looking camera.

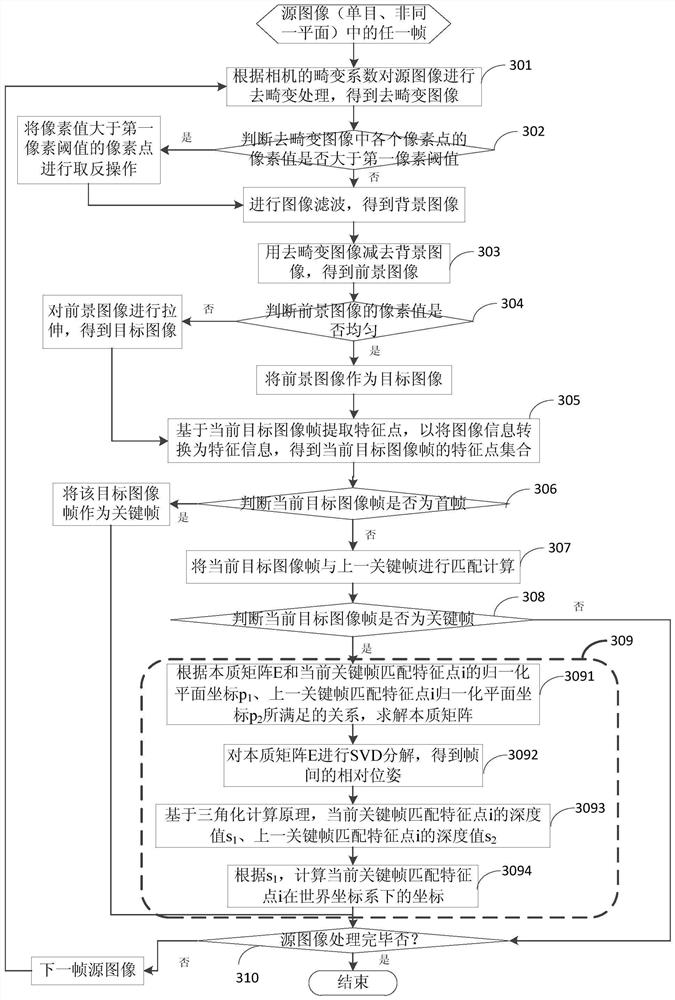

[0092] Referring to Figure 3, which is a schematic diagram of a process for building a map based on forward-looking image data collected by a monocular camera, the following steps are performed for each image frame:

[0093] Step 301: de-distort the source image according to the distortion coefficient of the camera to obtain a de-distorted image I(u, v), where u and v are pixel coordinates.
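As a rough illustration of de-distortion, the sketch below maps a single distorted pixel (u, v) back to its de-distorted position. The source only mentions a "distortion coefficient", so the two-term radial model (`k1`, `k2`), the pinhole intrinsics (`fx`, `fy`, `cx`, `cy`), and the function names are all assumptions made for illustration, not the method described in the patent.

```python
def distort_normalized(x, y, k1, k2):
    """Apply an assumed two-term radial model to ideal normalized coordinates."""
    r2 = x * x + y * y
    scale = 1.0 + k1 * r2 + k2 * r2 * r2
    return x * scale, y * scale


def undistort_pixel(u, v, fx, fy, cx, cy, k1, k2, iterations=20):
    """Map a distorted pixel (u, v) to its de-distorted pixel position.

    The radial model has no closed-form inverse, so the ideal normalized
    coordinates are recovered by fixed-point iteration.
    """
    # Pixel coordinates -> distorted normalized camera coordinates.
    xd = (u - cx) / fx
    yd = (v - cy) / fy

    # Start from the distorted point and repeatedly divide out the
    # radial scale evaluated at the current estimate.
    x, y = xd, yd
    for _ in range(iterations):
        r2 = x * x + y * y
        scale = 1.0 + k1 * r2 + k2 * r2 * r2
        x, y = xd / scale, yd / scale

    # Ideal normalized coordinates -> de-distorted pixel coordinates.
    return x * fx + cx, y * fy + cy
```

Applying `undistort_pixel` to every pixel position (and resampling the source image accordingly) would yield a full de-distorted image I(u, v). The fixed-point iteration converges quickly only when the distortion is moderate.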

[0094] Step 302: determine whether the pixel value of each pixel in the de-distorted image is greater than a set first pixel threshold; if so, perform an inversion operation on the pixels whose values are greater than the first pixel threshold, and then filter the de-dist...
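The thresholded inversion in step 302 can be sketched as follows. The source does not define the inversion operation, so this sketch assumes an 8-bit grayscale image and takes "inversion" to mean `255 - value`. The function name `invert_above_threshold` is hypothetical, and plain nested lists stand in for an image array so that no third-party library is needed.

```python
def invert_above_threshold(image, first_pixel_threshold, max_value=255):
    """Invert only the pixels whose value exceeds the threshold.

    `image` is a list of rows of integer gray levels in [0, max_value].
    Assumption: inversion means max_value - value; the source does not say.
    """
    result = []
    for row in image:
        new_row = []
        for value in row:
            if value > first_pixel_threshold:
                # Pixel is brighter than the threshold: invert it.
                new_row.append(max_value - value)
            else:
                # Pixel is at or below the threshold: keep it unchanged.
                new_row.append(value)
        result.append(new_row)
    return result


# Example: with a threshold of 180, only the values 200 and 250 are inverted.
sample = [[10, 200], [250, 100]]
inverted = invert_above_threshold(sample, 180)
```

Under these assumptions, very bright pixels are mapped to dark values while the rest of the image is left untouched.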

Embodiment 3

[0133] This embodiment uses, as its example, image data collected by a binocular camera where the collected images do not lie on the same plane.

[0134] Referring to Figure 4, which is a schematic diagram of a process for building a map based on image data collected by a binocular camera, the following steps are performed for each binocular image frame, that is, for the first source image frame from the first camera and the second source image frame from the second camera captured at the same time:

[0135] Step 401: perform image preprocessing on the first source image frame and the second source image frame to obtain the current binocular target image frame, which includes the first target image frame and the second target image frame.

[0136] In this step, image preprocessing may be performed on the first image frame and the second image frame in parallel, or image preprocessing may be performed serially on the first image frame and the second image frame r...
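The choice between parallel and serial preprocessing of the two frames might look like the sketch below. The `preprocess` function is a hypothetical placeholder (it only clamps values into the 8-bit range) and does not reproduce the preprocessing steps of the patent. The use of a thread pool is likewise an assumption about how the parallelism could be realized.

```python
from concurrent.futures import ThreadPoolExecutor


def preprocess(frame):
    """Hypothetical placeholder: clamp each pixel into the range [0, 255]."""
    return [[min(255, max(0, pixel)) for pixel in row] for row in frame]


def preprocess_serial(first_frame, second_frame):
    """Preprocess the first frame, then the second, one after the other."""
    return preprocess(first_frame), preprocess(second_frame)


def preprocess_parallel(first_frame, second_frame):
    """Preprocess both frames concurrently with one worker per frame."""
    with ThreadPoolExecutor(max_workers=2) as pool:
        first_future = pool.submit(preprocess, first_frame)
        second_future = pool.submit(preprocess, second_frame)
        # result() blocks until each frame has been processed.
        return first_future.result(), second_future.result()
```

Both variants return the same pair of target frames; only the scheduling differs. For CPU-bound preprocessing in Python, a process pool or a native image library would likely be needed to see a real speedup.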
