Human body posture recognition method based on time and channel double attention

A human body posture recognition method, applied in character and pattern recognition, neural learning methods, instruments, etc. It addresses problems such as few convolution layers, insufficient feature map information, and the inability to accurately locate the time of the target action. The stated effect relates to complexity.

Embodiment Construction

[0039] The technical solutions and effects of the present invention are described in detail below with reference to the accompanying drawings and specific embodiments.

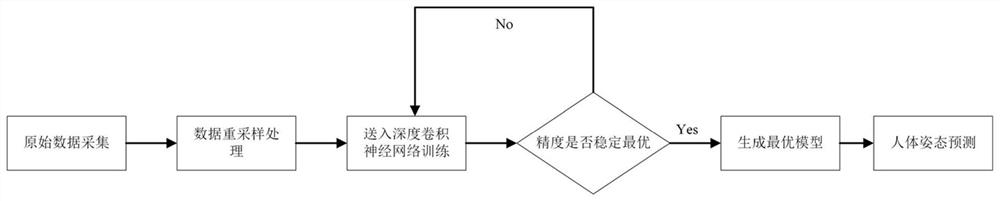

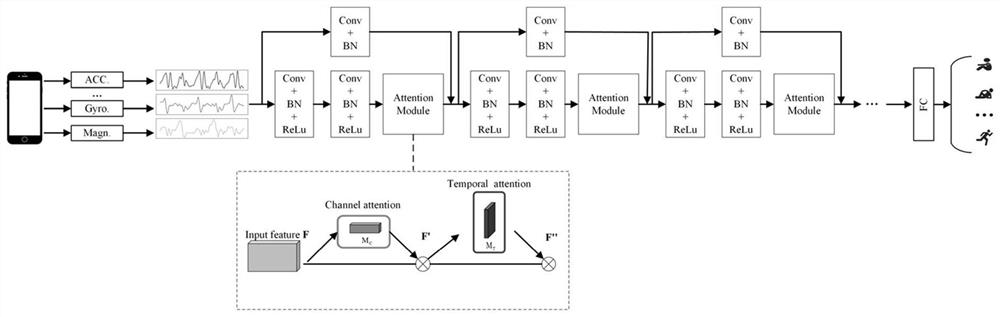

[0040] The present invention proposes a human body posture recognition method based on time and channel double attention, comprising the following steps:

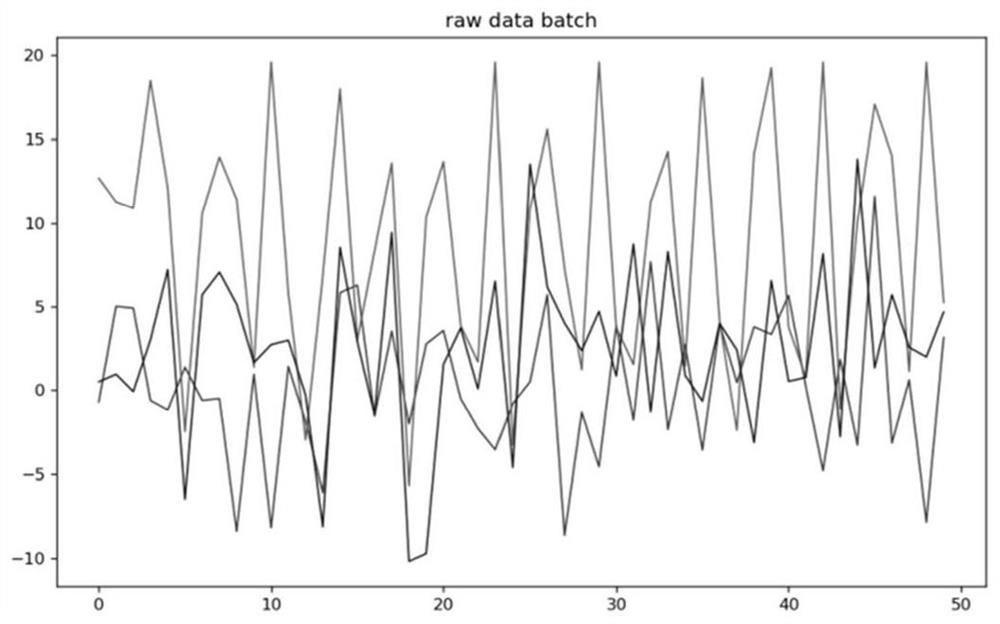

[0041] Step 1: recruit volunteers to wear motion sensors, record three-axis acceleration data from different body parts of the volunteers (such as the wrists, chest, and legs), and attach the corresponding action category labels to the recorded action signal data;

[0042] Step 2: perform data cleaning and noise removal on the collected three-axis acceleration data, apply frequency resampling to the cleaned data, normalize it, and then divide the data into a training set and a test set. The frequency resampling and normalization are as follows: the data is subjected to time series signal frequency downsampling ...
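The preprocessing order described in Step 2 (clean, remove noise, downsample, normalize, then split) can be illustrated with a minimal sketch. The source text is truncated before it gives the actual parameters, so everything specific here is an assumption made for illustration: the moving-average filter, block-average downsampling with a factor of 2, per-axis zero-mean/unit-variance normalization, the 70/30 split, and all function names are hypothetical and are not taken from the patent.

```python
import numpy as np

def clean(signal: np.ndarray) -> np.ndarray:
    """Drop samples (rows) that contain NaN or infinite readings."""
    return signal[np.isfinite(signal).all(axis=1)]

def smooth(signal: np.ndarray, width: int = 3) -> np.ndarray:
    """Moving-average noise reduction per axis (assumed filter; edges are zero-padded)."""
    kernel = np.ones(width) / width
    return np.column_stack(
        [np.convolve(signal[:, a], kernel, mode="same") for a in range(signal.shape[1])]
    )

def downsample(signal: np.ndarray, factor: int) -> np.ndarray:
    """Lower the sampling rate by averaging each block of `factor` samples."""
    usable = (signal.shape[0] // factor) * factor  # drop the incomplete tail block
    return signal[:usable].reshape(-1, factor, signal.shape[1]).mean(axis=1)

def normalize(signal: np.ndarray) -> np.ndarray:
    """Scale each axis to zero mean and unit variance (assumed normalization)."""
    return (signal - signal.mean(axis=0)) / (signal.std(axis=0) + 1e-8)

def preprocess(signal: np.ndarray, factor: int = 2) -> np.ndarray:
    """Apply the Step 2 operations in the order the text lists them."""
    return normalize(downsample(smooth(clean(signal)), factor))

def split(recordings, labels, test_fraction=0.3, seed=0):
    """Shuffle labeled recordings and divide them into training and test sets."""
    order = np.random.default_rng(seed).permutation(len(recordings))
    n_test = int(round(len(recordings) * test_fraction))
    test_idx, train_idx = order[:n_test], order[n_test:]
    train = [(recordings[i], labels[i]) for i in train_idx]
    test = [(recordings[i], labels[i]) for i in test_idx]
    return train, test

# Synthetic stand-in for labeled three-axis recordings from Step 1.
rng = np.random.default_rng(0)
raw = [rng.normal(size=(100, 3)) for _ in range(10)]
raw[0][5, 1] = np.nan  # simulate one corrupted reading
labels = ["walk", "sit"] * 5

processed = [preprocess(r) for r in raw]
train_set, test_set = split(processed, labels)
```

Each recording is an array of shape `(n_samples, 3)`, one column per acceleration axis. Normalization is applied before the split, following the order given in the text.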
