Action-based living body recognition method based on pose estimation and motion detection

A pose estimation and motion detection technique, applied in the field of biometrics, that addresses three problems: performed actions that do not conform to the requested ones, action recognition that fails easily, and thresholds that are difficult to adapt to both large and small eyes.


Embodiment

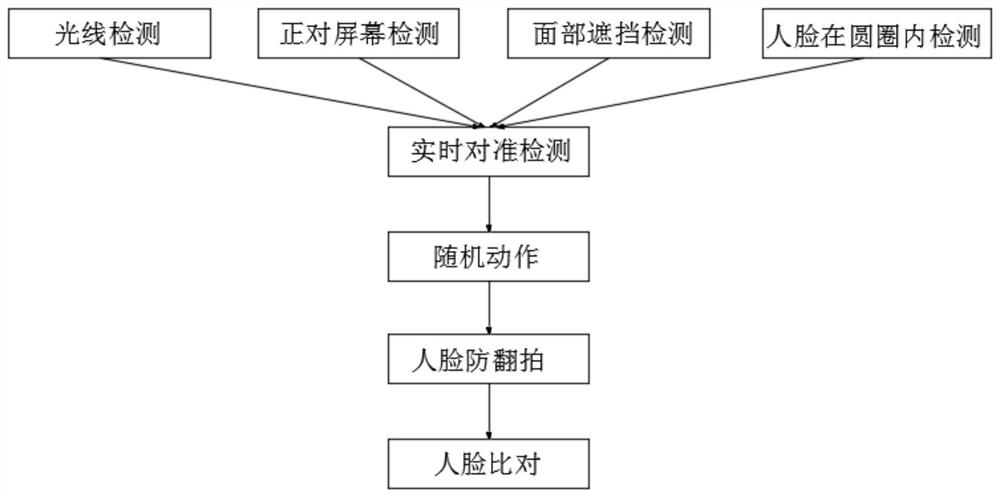

[0056] Referring to Figures 1 to 7, this embodiment discloses a living body recognition method based on pose estimation and motion detection, comprising real-time screen alignment recognition, random motion recognition, face recapture recognition, and face comparison.

[0057] 1. Real-time screen alignment recognition

[0058] The recognition process is as follows: a camera captures a photo of the face, and four checks are performed on it: light recognition, recognition of whether the face is inside the circle, recognition of whether the face is facing the screen, and recognition of whether the face is occluded. When the face is inside the circle, is facing the screen, and is not occluded, real-time screen alignment recognition passes; otherwise it fails.
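The pass/fail rule in [0058] can be sketched as a simple conjunction of the per-check results. This is a minimal illustration, not the patent's implementation: the names `AlignmentChecks` and `alignment_passes` are hypothetical, each check is assumed to have already been reduced to a boolean, and the light check is left out because its handling is described separately.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class AlignmentChecks:
    """Hypothetical container for the results of the checks named in [0058]."""
    face_in_circle: bool    # face lies inside the on-screen circle
    facing_screen: bool     # face is oriented toward the screen
    face_unoccluded: bool   # no part of the face is blocked


def alignment_passes(checks: AlignmentChecks) -> bool:
    """Pass only when every check succeeds; any single failure rejects."""
    return (
        checks.face_in_circle
        and checks.facing_screen
        and checks.face_unoccluded
    )
```

For example, `alignment_passes(AlignmentChecks(True, False, True))` evaluates to `False`, since the face is not facing the screen.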

[0059] 1) Light recognition

[0060] In this step, the light is classified into three levels of decreasing intensity: strong light, normal light, and weak light. If the light in the face photo is recognized as normal ...
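The three-level light classification can be illustrated with a rough sketch. The text available here does not say how light intensity is measured, so everything specific below is an assumption: the function `classify_light`, the use of mean grayscale brightness (0 to 255) as the intensity measure, and the threshold values `weak_below` and `strong_above` are all hypothetical.

```python
from enum import Enum
from typing import Sequence


class Light(Enum):
    STRONG = "strong"
    NORMAL = "normal"
    WEAK = "weak"


def classify_light(
    gray_pixels: Sequence[int],
    weak_below: float = 60.0,     # assumed threshold, not from the source
    strong_above: float = 200.0,  # assumed threshold, not from the source
) -> Light:
    """Classify lighting from the mean grayscale value of the face photo."""
    if not gray_pixels:
        raise ValueError("gray_pixels must not be empty")
    mean_brightness = sum(gray_pixels) / len(gray_pixels)
    if mean_brightness > strong_above:
        return Light.STRONG
    if mean_brightness < weak_below:
        return Light.WEAK
    return Light.NORMAL
```

In practice the thresholds would need tuning for the camera and environment; the sketch only shows how three ordered intensity bands could map to the three labels.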

