Inscription label detection and recognition system based on deep neural network

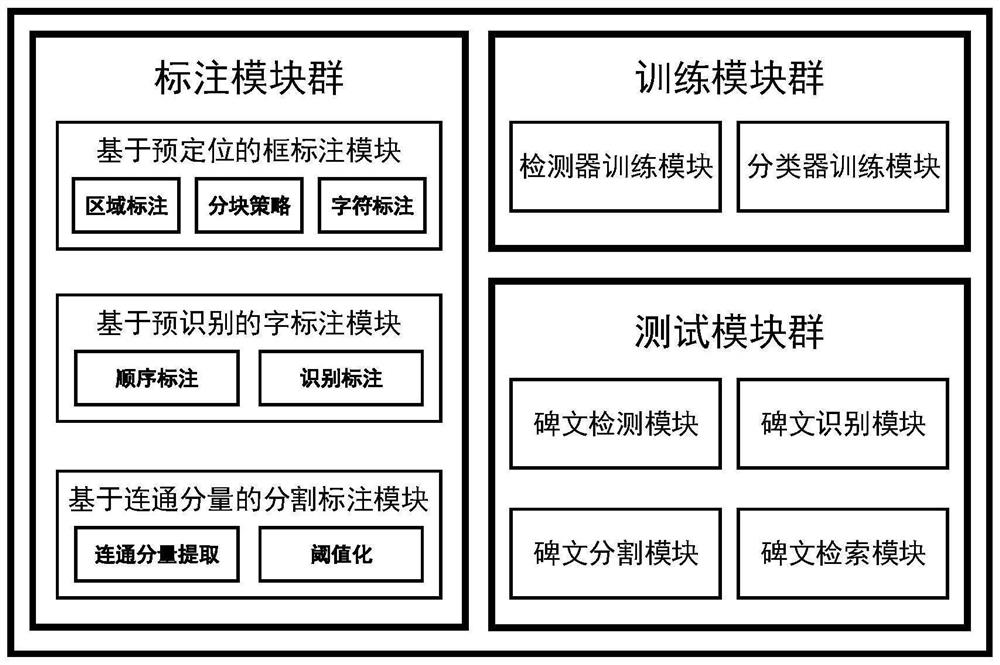

A deep-neural-network-based inscription detection and recognition system, applied in the fields of biological neural network models, neural architectures, and neural learning methods. It addresses the problem that no mature system for inscription detection, recognition and segmentation has yet appeared, with the effects of reducing time consumption, improving efficiency, and increasing labeling accuracy.

Examples

Embodiment 1

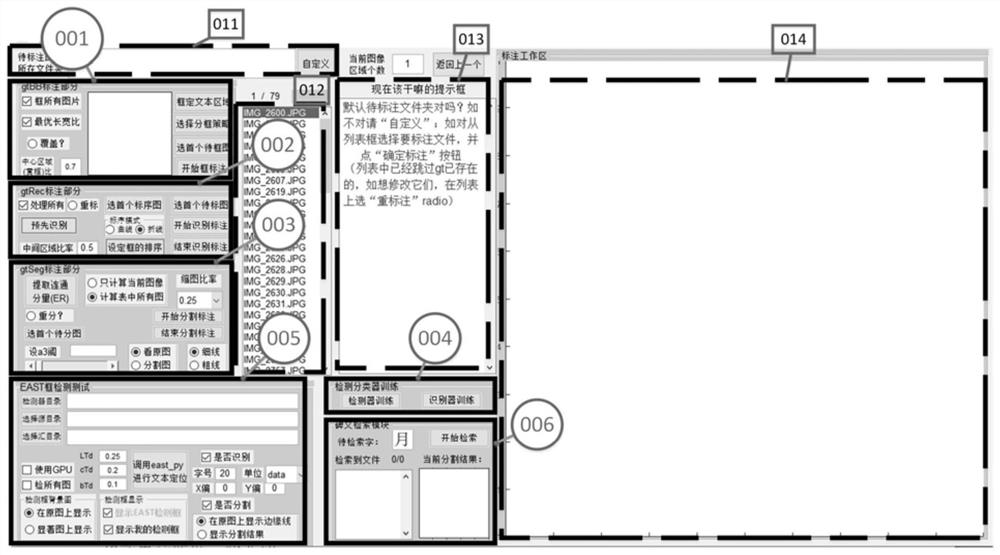

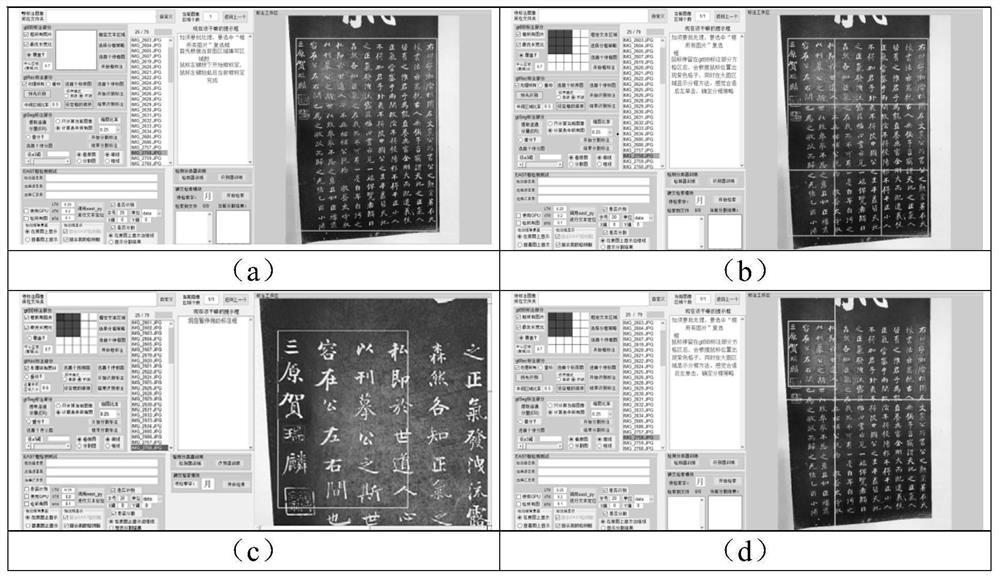

[0056] This example shows the process of detecting and labeling, recognizing and labeling, and segmenting and labeling an inscription image.

[0057] 1. Detection and labeling. The interface is shown as module 001 in Figure 2. Because the text in an inscription image is generally dense and each character is small relative to the whole image, marking positions accurately requires minimizing the display of irrelevant areas and focusing on the text currently being labeled. We therefore propose a two-step process for "focusing" on the current annotation text. The first step locates the text area in the picture, so that unnecessary areas do not clutter the field of view; the second step divides each text area into blocks, so that only the text in the current block to be labeled is displayed at a time.
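The second "focusing" step can be illustrated with a minimal sketch. It assumes the text area has already been framed as an axis-aligned rectangle and that blocks are fixed-size tiles shown in row-major order; the function name `split_into_blocks`, the block sizes, and the coordinates are hypothetical and are not taken from the patent.

```python
from typing import Iterator, Tuple

# (left, top, right, bottom) in pixels; right and bottom are exclusive.
Box = Tuple[int, int, int, int]


def split_into_blocks(region: Box, block_w: int, block_h: int) -> Iterator[Box]:
    """Yield blocks that tile `region` in row-major order.

    Blocks on the right and bottom edges are clipped to the region,
    so every pixel of the region belongs to exactly one block.
    """
    left, top, right, bottom = region
    for y in range(top, bottom, block_h):
        for x in range(left, right, block_w):
            yield (x, y, min(x + block_w, right), min(y + block_h, bottom))


# Step 1 (assumed already done by the annotator): the framed text area.
text_area = (100, 50, 700, 450)

# Step 2: divide the text area into blocks and show one block at a time.
blocks = list(split_into_blocks(text_area, block_w=300, block_h=200))
for block in blocks:
    print("display and label block:", block)
```

Fixed-size tiling is only one possible choice; a real tool might instead size the blocks from a user-set parameter or overlap them so that characters on a block boundary are not cut.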

[0058] Specifically: (1) After setting the parameters on the left side, we click the "frame text area" button to start to frame...

Embodiment 2

[0068] Example 2: Detector and Recognizer Training

[0069] After labeling, we can train the detector and recognizer.

[0070] Figure 6 shows the interface of the detector, which mainly includes three areas: the data acquisition area (for setting the storage paths of the training images and labeling results); the training image list and current image display area; and the detector parameter configuration and training start area. After the detection frames in Example 1 are labeled, the labeling result is saved as a text file with the same name as the image. During training, the system obtains each image and its labeling result from the source image folder and the labeling result folder. To check the labeling result of a certain image, select a file in the training image list and press the right mouse button; the image is then displayed in the large image area on the right, together with the labeling results of all character text boxes (as shown in Figure 5 by the blue frame on the r...
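The pairing of each training image with a text file of the same name can be sketched as follows. This is a minimal illustration only: the `.txt` extension, the set of image extensions, and the function name `pair_images_with_labels` are assumptions, and the internal format of the label file is not described in the text above, so it is not parsed here.

```python
from pathlib import Path
from typing import List, Tuple

# Assumed image formats; the source does not say which ones are supported.
IMAGE_SUFFIXES = {".jpg", ".jpeg", ".png", ".bmp"}


def pair_images_with_labels(image_dir: Path, label_dir: Path) -> List[Tuple[Path, Path]]:
    """Return (image, label) pairs where the label file shares the image's base name.

    Images without a matching label file are skipped, since they cannot
    be used for supervised training.
    """
    pairs = []
    for image_path in sorted(image_dir.iterdir()):
        if image_path.suffix.lower() not in IMAGE_SUFFIXES:
            continue
        label_path = label_dir / (image_path.stem + ".txt")  # assumed extension
        if label_path.is_file():
            pairs.append((image_path, label_path))
    return pairs
```

Calling `pair_images_with_labels(Path("images"), Path("labels"))` with two hypothetical folders would return only those images that have already been labeled.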

Embodiment 3

[0076] Example 3: Detection, Recognition, Segmentation and Retrieval Test Module

[0077] 1. Detection function test:

[0078] First, we click the "call east_py for text positioning" button in the test module. The system uses the trained detector to perform text detection and displays the original image with the detection frames in the large image area on the right. If the "Show EAST detection frame" check box is selected, the frames obtained by the EAST algorithm (based on a non-maximum suppression strategy) are displayed in green. If the "Show my detection frame" check box is selected, the detection frames obtained by the algorithm of this patent (based on connected component analysis and an average position strategy) are displayed in red. In the example in Figure 9, the red boxes are closer to the true bounding boxes of the text and better avoid "truncated" text areas, which will lead to recognition...
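The difference between the two post-processing strategies can be sketched in a few lines. This is a hedged illustration, not the patent's implementation: the first function is plain greedy non-maximum suppression (EAST itself uses a locality-aware variant), and the second is one possible reading of "connected component analysis and average position", in which candidate boxes that overlap are grouped into connected components and each group is replaced by its coordinate-wise average. The patent may instead compute connected components on a pixel-level score map. All names and the 0.5 threshold are assumptions.

```python
from typing import Dict, List, Sequence, Tuple

Box = Tuple[float, float, float, float]  # (x1, y1, x2, y2)


def iou(a: Box, b: Box) -> float:
    """Intersection over union of two axis-aligned boxes."""
    iw = max(0.0, min(a[2], b[2]) - max(a[0], b[0]))
    ih = max(0.0, min(a[3], b[3]) - max(a[1], b[1]))
    inter = iw * ih
    union = (a[2] - a[0]) * (a[3] - a[1]) + (b[2] - b[0]) * (b[3] - b[1]) - inter
    return inter / union if union > 0 else 0.0


def greedy_nms(boxes: Sequence[Box], scores: Sequence[float], thresh: float = 0.5) -> List[Box]:
    """Keep the highest-scoring box and drop any box overlapping a kept one."""
    order = sorted(range(len(boxes)), key=lambda i: scores[i], reverse=True)
    kept: List[Box] = []
    for i in order:
        if all(iou(boxes[i], k) <= thresh for k in kept):
            kept.append(boxes[i])
    return kept


def merge_by_components(boxes: Sequence[Box], thresh: float = 0.5) -> List[Box]:
    """Group overlapping boxes (union-find) and average each group's coordinates."""
    parent = list(range(len(boxes)))

    def find(i: int) -> int:
        while parent[i] != i:
            parent[i] = parent[parent[i]]  # path halving
            i = parent[i]
        return i

    for i in range(len(boxes)):
        for j in range(i + 1, len(boxes)):
            if iou(boxes[i], boxes[j]) > thresh:
                parent[find(i)] = find(j)

    groups: Dict[int, List[Box]] = {}
    for i, box in enumerate(boxes):
        groups.setdefault(find(i), []).append(box)
    return [tuple(sum(c) / len(g) for c in zip(*g)) for g in groups.values()]


# Two overlapping candidates for one character plus one separate character.
candidates = [(0, 0, 10, 10), (2, 0, 12, 10), (50, 50, 60, 60)]
confidences = [0.9, 0.8, 0.7]
nms_boxes = greedy_nms(candidates, confidences)
merged_boxes = merge_by_components(candidates)
```

In this toy input, suppression keeps only the first candidate for the left character, whereas averaging yields a box positioned between the two candidates. That is the intuition for why an averaged box may sit closer to the true bounding box when individual candidates each cut off part of a character.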
