Monocular scene depth prediction method based on deep learning

A deep-learning technique for scene depth estimation, applied in the fields of computer vision and image processing. It addresses three problems: the image generation model lacks scalability, the depth model is not fully differentiable, and gradient computability is lost. Its effects are to alleviate the vanishing-gradient problem, improve the transmission of information and gradients, and enhance feature propagation.
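The summary links the method to keeping the depth model fully differentiable, but the source does not say how this is done. One standard approach in stereo-supervised depth networks (for example Monodepth) is to reconstruct one view from the other with linear interpolation along each row, so that the reconstruction loss has a gradient with respect to the predicted disparity. The NumPy sketch below illustrates that idea only. It assumes rectified, single-channel images and disparity in pixels, and `warp_horizontal` is a hypothetical name, not part of the patent.

```python
import numpy as np

def warp_horizontal(src, disp):
    """Hypothetical helper: reconstruct the left view by sampling `src` at x - disp.

    src:  (H, W) image, assumed to be the right image of a rectified stereo pair.
    disp: (H, W) disparity in pixels predicted for the left view.
    Linear interpolation between the two neighbouring pixels makes the output a
    piecewise-linear (differentiable almost everywhere) function of disp;
    rounding to the nearest pixel would give a zero gradient almost everywhere.
    """
    h, w = src.shape
    xs = np.arange(w, dtype=np.float64)[None, :] - disp  # sampling coordinates
    xs = np.clip(xs, 0.0, w - 1.0)                        # clamp to the image border
    x0 = np.floor(xs).astype(int)                         # left neighbour
    x1 = np.minimum(x0 + 1, w - 1)                        # right neighbour
    frac = xs - x0                                        # weight of the right neighbour
    rows = np.arange(h)[:, None]
    return (1.0 - frac) * src[rows, x0] + frac * src[rows, x1]
```

Because the output varies linearly in `disp` between integer sample positions, a finite-difference slope is well defined. A framework such as PyTorch or TensorFlow would differentiate through the same arithmetic automatically.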



Examples

Embodiment 1

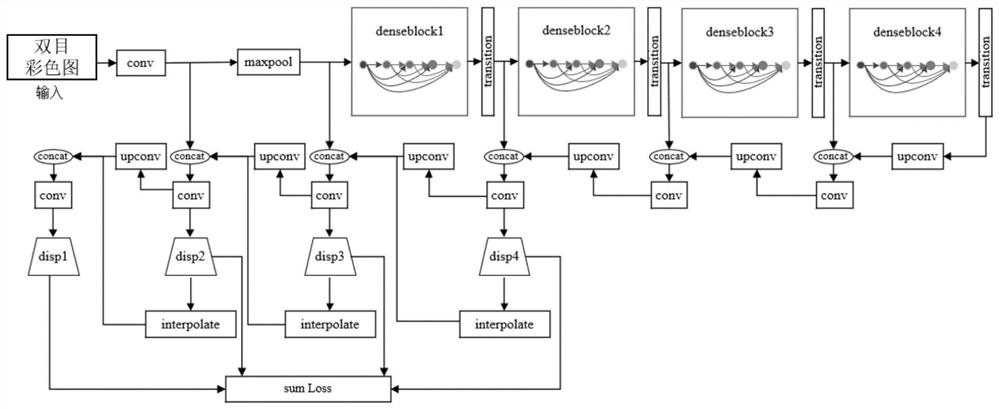

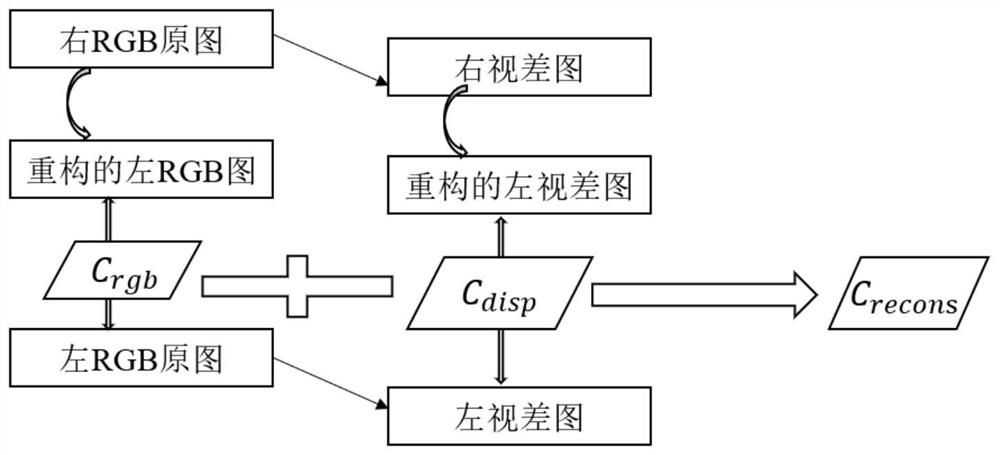

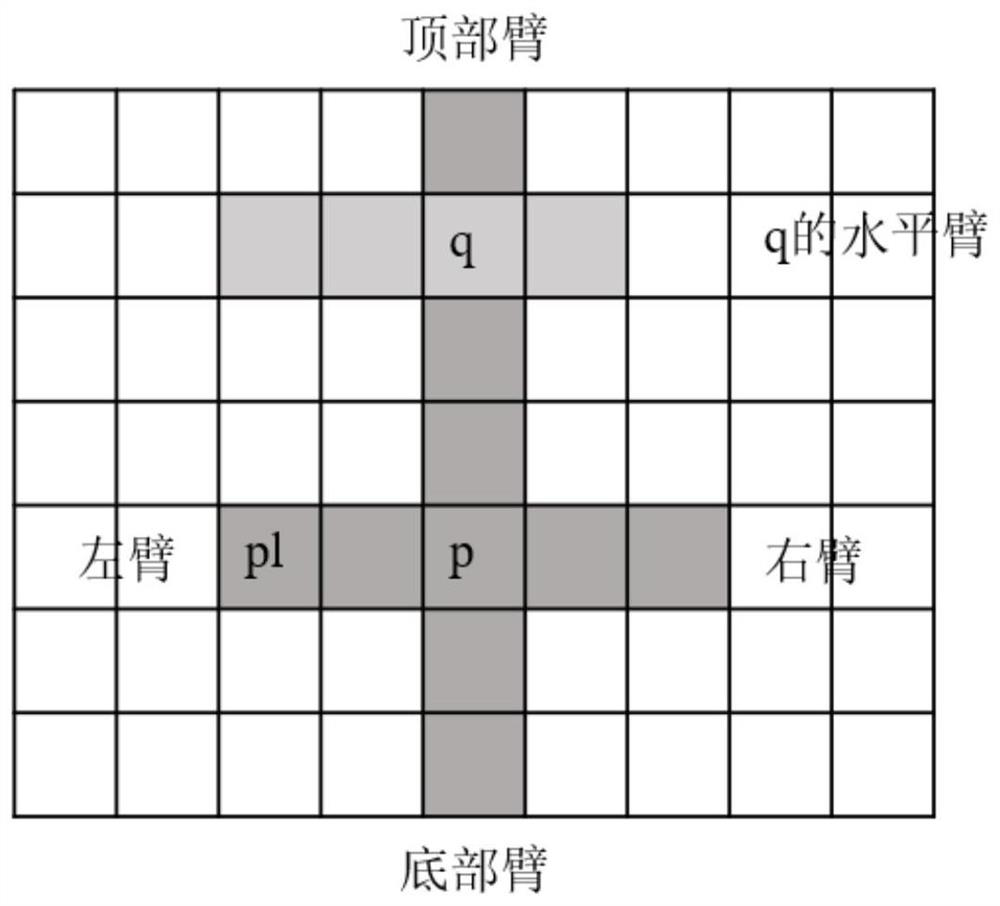

[0040] Embodiment 1: As shown in Figure 1, a deep-learning-based monocular scene depth prediction method uses calibrated color image pairs as training input and improves the encoder network architecture with DenseNet convolution modules. The binocular stereo matching loss and the image smoothness loss are applied at multiple scales, strengthening the constraint at each level; post-processing mitigates the occlusion problem; and the overall depth prediction quality for monocular images is improved. The method includes the following steps:
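The paragraph above names two losses applied at every scale and a post-processing step for occlusions, but gives no formulas. The sketch below is an assumption modelled on common practice, notably Godard et al.'s Monodepth. It uses an L1 photometric term (the SSIM component such methods usually add is omitted), an edge-aware disparity smoothness term divided by 2**s at scale s, and Monodepth's flip-and-blend post-processing. The weight `alpha_smooth=0.1`, the 5% to 10% border ramp, and all function names are hypothetical choices, not values taken from the patent.

```python
import numpy as np

def appearance_loss(img, recon):
    """L1 photometric error between an image and its stereo reconstruction.
    (Assumption: SSIM term omitted for brevity.)"""
    return float(np.mean(np.abs(img - recon)))

def smoothness_loss(disp, img):
    """Edge-aware smoothness: penalise disparity gradients, down-weighted where
    the image itself has strong gradients (likely object edges)."""
    dx_d = np.abs(np.diff(disp, axis=1))
    dy_d = np.abs(np.diff(disp, axis=0))
    wx = np.exp(-np.abs(np.diff(img, axis=1)))
    wy = np.exp(-np.abs(np.diff(img, axis=0)))
    return float(np.mean(dx_d * wx) + np.mean(dy_d * wy))

def multiscale_loss(imgs, recons, disps, alpha_smooth=0.1):
    """Sum per-scale losses over a pyramid (finest scale first).
    Hypothetical weighting: smoothness at scale s is divided by 2**s."""
    total = 0.0
    for s, (img, recon, disp) in enumerate(zip(imgs, recons, disps)):
        total += appearance_loss(img, recon)
        total += alpha_smooth * smoothness_loss(disp, img) / (2 ** s)
    return total

def post_process_disparity(disp, disp_flipped):
    """Flip-and-blend post-processing in the style of Monodepth (assumption).
    disp:         disparity predicted for the input image, shape (H, W)
    disp_flipped: disparity predicted for the horizontally flipped input
    Near the left border, where disocclusion artifacts appear, the flipped
    prediction is used; near the right border the original is kept;
    elsewhere the two predictions are averaged."""
    h, w = disp.shape
    d_flip = np.fliplr(disp_flipped)                      # back to original orientation
    mean = 0.5 * (disp + d_flip)
    xs = np.tile(np.linspace(0.0, 1.0, w), (h, 1))
    l_mask = 1.0 - np.clip(20.0 * (xs - 0.05), 0.0, 1.0)  # 1 up to 5%, 0 from 10%
    r_mask = np.fliplr(l_mask)
    return r_mask * disp + l_mask * d_flip + (1.0 - l_mask - r_mask) * mean
```

When the flipped prediction agrees with the original, the blend returns the original disparity unchanged; it only alters the result where the two predictions disagree.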

[0041] Step 1: Preprocessing. Resize the high-resolution binocular color image pair to 256x512, then apply random flips and contrast transformations to the resized pair to augment the input data. The augmented pair is fed into the encoder of the convolutional network.
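Step 1 can be sketched as follows. This is an illustrative NumPy version, not the patent's code. It reads 256x512 as height by width, uses nearest-neighbour resizing to stay dependency-free, draws a contrast factor from [0.8, 1.2], and, when flipping, swaps the two views as Monodepth does so that the left/right geometry stays consistent. The source only says "random flip", so the swap, the contrast range, and the names `resize_nearest` and `augment_pair` are assumptions. Images are assumed to be float arrays in [0, 1] with shape (H, W, C).

```python
import numpy as np

TARGET_H, TARGET_W = 256, 512  # assumption: "256x512" read as height x width

def resize_nearest(img, h, w):
    """Nearest-neighbour resize of an (H, W, C) array (hypothetical helper;
    a real pipeline would more likely use bilinear or area interpolation)."""
    rows = (np.arange(h) * img.shape[0] / h).astype(int)
    cols = (np.arange(w) * img.shape[1] / w).astype(int)
    return img[rows][:, cols]

def augment_pair(left, right, rng):
    """Resize a stereo pair, then apply the same random flip and contrast change to both."""
    left = resize_nearest(left, TARGET_H, TARGET_W)
    right = resize_nearest(right, TARGET_H, TARGET_W)
    if rng.random() < 0.5:
        # A horizontal flip mirrors the scene, so the flipped right view becomes the
        # new left view (swap as in Monodepth; assumption, not stated in the source).
        left, right = np.fliplr(right), np.fliplr(left)
    # Contrast: scale deviations from each image's mean by one shared random factor
    # (the [0.8, 1.2] range is hypothetical).
    c = rng.uniform(0.8, 1.2)
    left = np.clip((left - left.mean()) * c + left.mean(), 0.0, 1.0)
    right = np.clip((right - right.mean()) * c + right.mean(), 0.0, 1.0)
    return left, right
```

Applying the same flip decision and contrast factor to both views keeps the pair consistent, which a stereo reconstruction loss relies on. Usage: `left, right = augment_pair(left_raw, right_raw, np.random.default_rng(0))`.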

[0042] Step 2: In the network encoder, DenseNet convolution modules are used to extract visual features, improving the transmission of information and gradien...
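Step 2's claim about information and gradient flow rests on DenseNet's dense connectivity (Huang et al.). Inside a block, every layer receives the concatenation of all earlier feature maps, so each layer has a short path to the block input and to the loss. The forward-pass sketch below shows only that wiring. Batch normalization, bottleneck 1x1 convolutions and transition layers are omitted, and the growth rate, layer count and names (`conv3x3`, `dense_block`, `make_weights`) are hypothetical. It is not the encoder specified in the patent.

```python
import numpy as np

def conv3x3(x, w):
    """'Same'-padded 3x3 convolution (cross-correlation, as in DL frameworks).
    x: (C_in, H, W), w: (C_out, C_in, 3, 3) -> (C_out, H, W)."""
    _, h, wd = x.shape
    xp = np.pad(x, ((0, 0), (1, 1), (1, 1)))
    out = np.zeros((w.shape[0], h, wd))
    for dy in range(3):
        for dx in range(3):
            patch = xp[:, dy:dy + h, dx:dx + wd]  # shifted view, (C_in, H, W)
            out += np.einsum("oc,chw->ohw", w[:, :, dy, dx], patch)
    return out

def dense_block(x, weights):
    """DenseNet-style block: each layer sees the concatenation of the block input
    and every earlier layer's output, and its own output is appended in turn.
    Simplification (assumption): ReLU then 3x3 conv, no batch normalization."""
    features = [x]
    for w in weights:
        inp = np.concatenate(features, axis=0)  # dense connectivity
        features.append(conv3x3(np.maximum(inp, 0.0), w))
    return np.concatenate(features, axis=0)

def make_weights(c_in, growth, n_layers, rng):
    """Random weights; layer i takes c_in + i * growth input channels."""
    return [rng.normal(0.0, 0.1, (growth, c_in + i * growth, 3, 3))
            for i in range(n_layers)]
```

With `c_in` input channels, growth rate `k` and `n` layers, the block outputs `c_in + n*k` channels, and the original input passes through untouched. Real DenseNet encoders place transition layers (1x1 convolution plus pooling) between blocks to keep that channel growth in check.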
