Sparse convolutional neural network acceleration method and system based on data flow architecture

A sparse convolutional neural network acceleration technology, applied in the field of computer system architecture. It addresses problems such as performance degradation and achieves the effect of reducing continuous access.



Embodiment Construction

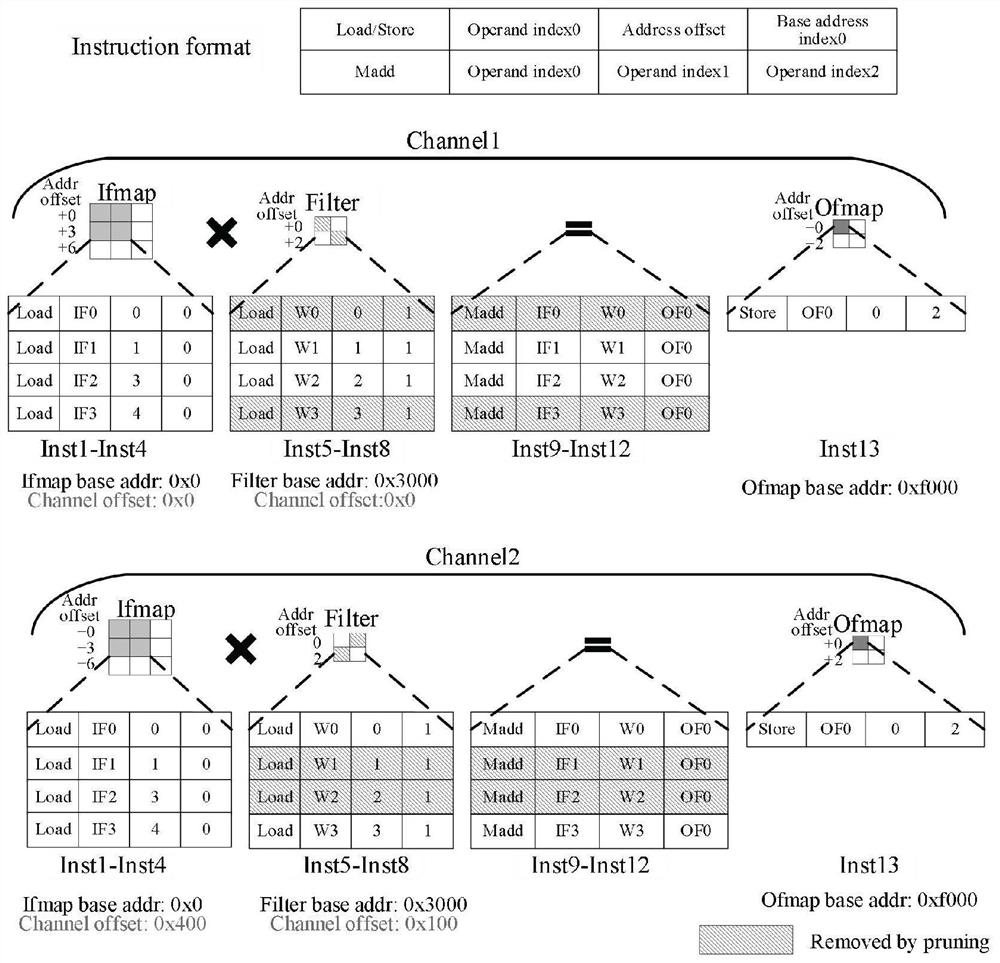

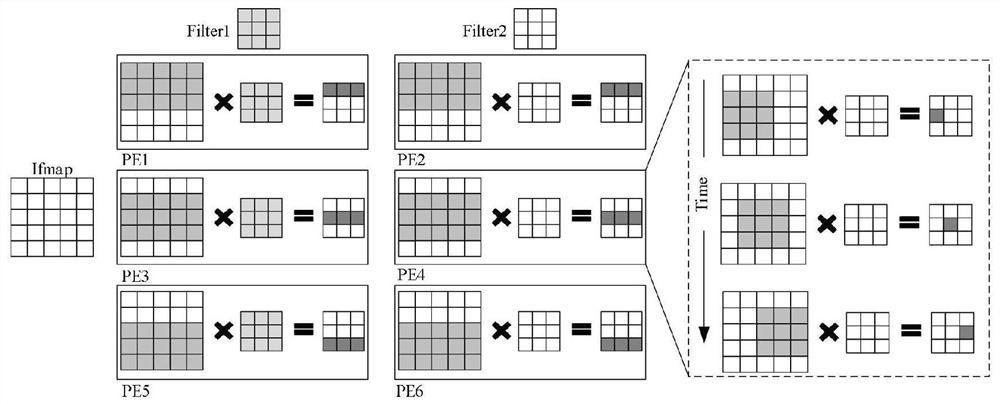

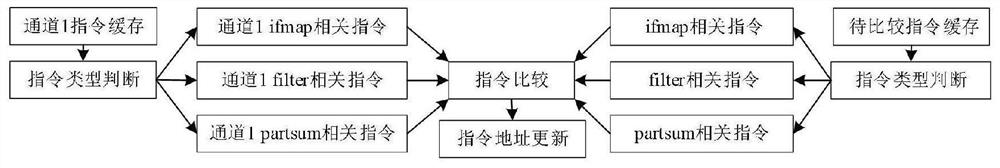

[0037] Regarding the problem of instructions being loaded multiple times: dense convolution performs multiply-add operations. For the convolution operations of different channels, it fetches the data at the same position in each channel and performs multiply-add operations on it. Because of this regularity, when the compiler maps the operations of the different channels of a convolution layer onto the computing array (PE array), the instructions for the different channels are identical. The PE array therefore needs to load the instructions from memory only once to execute the operations of all channels, which ensures full utilization of the computing resources.
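The instruction reuse described in [0037] can be illustrated with a minimal Python sketch. It is a toy model only, not the patent's compiler or hardware: the names `dense_mac_instructions` and `run_dense_channels`, the `("MAC", row, col)` instruction tuple, and the `loads` counter are all hypothetical and introduced here for illustration.

```python
def dense_mac_instructions(kernel_h, kernel_w):
    """Build one multiply-add (MAC) instruction per kernel position.

    The list depends only on the kernel shape, not on the channel,
    which is the regularity that dense convolution relies on.
    """
    return [("MAC", r, c) for r in range(kernel_h) for c in range(kernel_w)]


def run_dense_channels(windows, weights):
    """Accumulate the multiply-add results over all channels.

    windows, weights: one kernel-sized 2-D list per channel.
    Returns (accumulated sum, number of instruction loads).
    """
    kernel_h, kernel_w = len(weights[0]), len(weights[0][0])
    program = dense_mac_instructions(kernel_h, kernel_w)
    loads = 1  # hypothetical counter: the program is fetched a single time
    acc = 0
    for window, weight in zip(windows, weights):
        # Every channel replays the same instruction list.
        for _, r, c in program:
            acc += window[r][c] * weight[r][c]
    return acc, loads


windows = [[[1, 2], [3, 4]], [[5, 6], [7, 8]]]
weights = [[[1, 0], [0, 1]], [[1, 1], [1, 1]]]
result, loads = run_dense_channels(windows, weights)
print(result, loads)
```

In this sketch the instruction list is built once outside the channel loop, which mirrors the single load from memory that the paragraph describes.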

[0038] For sparse convolution, however, the pruning operation sets some weights to 0. Because any number multiplied by 0 is 0, the instructions corresponding to the zero-valued weights are removed after pruning in order to eliminate those operations, so that the co...
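The pruning step in [0038] can be sketched in the same toy style. This is an assumption-laden illustration, not the patent's method: `sparse_mac_instructions`, `execute`, and the `("MAC", row, col)` tuple are hypothetical names, and the sketch covers only the removal of instructions for zero weights.

```python
def sparse_mac_instructions(weight):
    """Emit a multiply-add (MAC) instruction only for nonzero weights.

    weight: one channel's kernel as a 2-D list after pruning.
    """
    return [
        ("MAC", r, c)
        for r, row in enumerate(weight)
        for c, w in enumerate(row)
        if w != 0
    ]


def execute(program, window, weight):
    """Run a channel's instruction list on one kernel-sized input window."""
    return sum(window[r][c] * weight[r][c] for _, r, c in program)


window = [[1, 2], [3, 4]]
pruned_a = [[1, 0], [0, 1]]  # hypothetical pruned kernel for one channel
pruned_b = [[0, 2], [0, 0]]  # hypothetical pruned kernel for another channel

program_a = sparse_mac_instructions(pruned_a)
program_b = sparse_mac_instructions(pruned_b)
print(program_a, execute(program_a, window, pruned_a))
print(program_b, execute(program_b, window, pruned_b))
```

Skipping the zero weights leaves each result unchanged, since the removed terms contribute 0. In this toy model, two channels with different zero patterns end up with different instruction lists.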
