Indoor positioning and mapping method based on depth camera and thermal imager

A technology combining a depth camera with indoor positioning, applied in 2D image generation, instruments, image enhancement and related fields. It addresses the large computational load, high cost and low applicability of existing approaches, and achieves higher accuracy, precise positioning and stable imaging.

Embodiment Construction

[0036] The present invention will be further described below in conjunction with the accompanying drawings and specific embodiments.

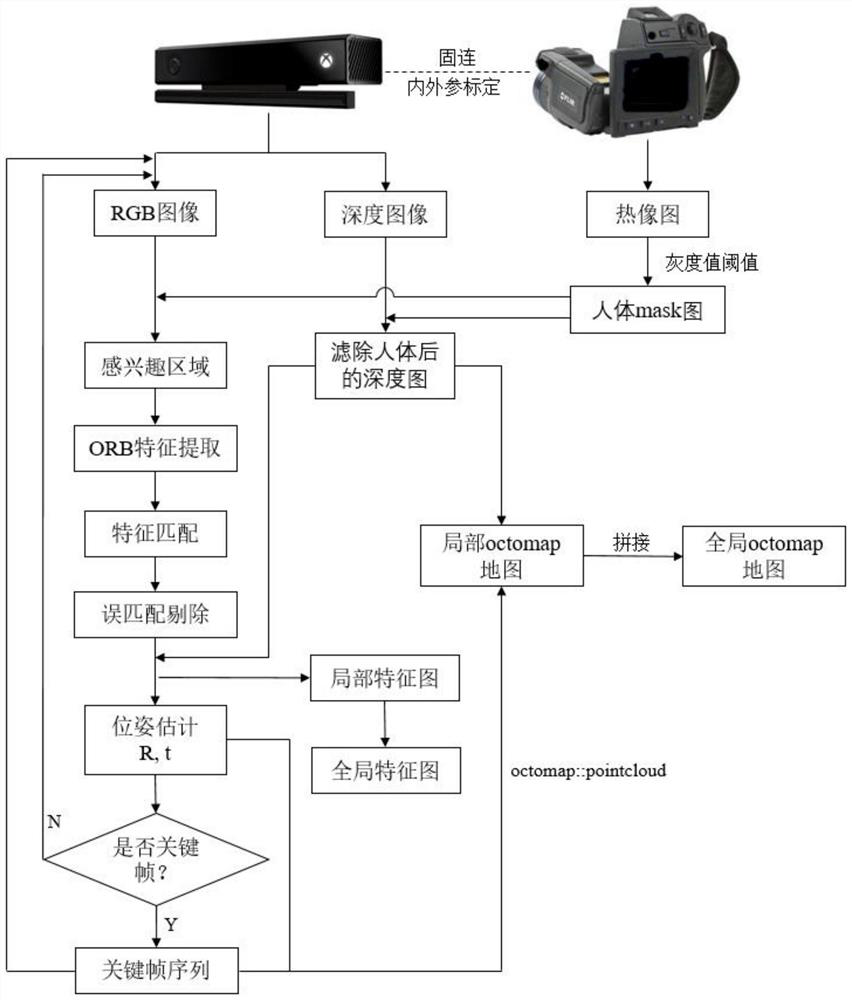

[0037] The present invention provides an indoor positioning and mapping method based on a depth camera and a thermal imager (hereinafter "the method"; see Figures 1-4), comprising the following steps:

[0038] Step 1. First, mount the depth camera and the thermal imager relative to each other so that their fields of view largely overlap, then fix the two together on the robot. Next, calibrate the intrinsic and extrinsic parameters of the depth camera and the thermal imager, so that the RGB image, depth image and thermal image of the same field of view can be registered pixel by pixel;
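
Pixel-by-pixel registration follows from the calibration: given the intrinsic matrices of both sensors and the extrinsic rotation and translation between them, each valid depth pixel can be back-projected to a 3D point, transformed into the thermal imager's frame, and projected onto the thermal image. The following is an illustrative sketch only, not the patent's procedure. It assumes an ideal pinhole model with no lens distortion, depth in metres, and nearest-neighbour sampling; the function name `register_thermal_to_depth` and its parameters are hypothetical.

```python
import numpy as np

def register_thermal_to_depth(depth_m, K_d, K_t, R, t, thermal):
    """Resample a thermal image onto the depth camera's pixel grid (hypothetical helper).

    depth_m : (H, W) depth in metres, 0 where there is no reading.
    K_d, K_t: 3x3 pinhole intrinsics of the depth camera and thermal imager (assumed distortion-free).
    R, t    : 3x3 rotation and (3,) translation mapping depth-frame points into the thermal frame.
    thermal : (Ht, Wt) thermal image.
    Returns an (H, W) float array aligned with depth_m, NaN where no thermal value is available.
    """
    H, W = depth_m.shape
    v, u = np.mgrid[0:H, 0:W]  # row and column index of every depth pixel
    z = depth_m.astype(np.float64)

    # Back-project each depth pixel to a 3D point in the depth camera frame.
    x = (u - K_d[0, 2]) * z / K_d[0, 0]
    y = (v - K_d[1, 2]) * z / K_d[1, 1]
    pts = np.stack([x, y, z], axis=-1).reshape(-1, 3)

    # Rigid transform into the thermal imager's frame using the extrinsics.
    pts_t = pts @ R.T + t
    zt = pts_t[:, 2]

    out = np.full(H * W, np.nan)
    valid = (z.reshape(-1) > 0) & (zt > 0)  # need a depth reading and a point in front of the thermal imager

    # Project with the thermal intrinsics and round to the nearest thermal pixel.
    ut = np.round(K_t[0, 0] * pts_t[valid, 0] / zt[valid] + K_t[0, 2]).astype(int)
    vt = np.round(K_t[1, 1] * pts_t[valid, 1] / zt[valid] + K_t[1, 2]).astype(int)
    inside = (ut >= 0) & (ut < thermal.shape[1]) & (vt >= 0) & (vt < thermal.shape[0])

    # Copy thermal values back to the depth pixels that landed inside the thermal image.
    idx = np.flatnonzero(valid)[inside]
    out[idx] = thermal[vt[inside], ut[inside]]
    return out.reshape(H, W)
```

With identical intrinsics, R equal to the identity and t equal to zero, the result reproduces the thermal image wherever depth is non-zero. Sampling in this direction (thermal values pulled into the depth grid) gives each depth pixel exactly one lookup; occlusion between the two viewpoints is not handled in this sketch.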

[0039] The depth camera (model: Kinect v2) is equipped with a color lens and a depth lens, which capture the color image and the depth image of the scene respectively; the color image is used to obtain the color information of the scene and ...
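
The depth image is what supplies scene geometry. As a minimal sketch, assuming the raw depth frame stores distances as unsigned 16-bit millimetres with 0 marking pixels that have no reading (as Kinect v2 depth frames do), and that the pinhole intrinsics `fx`, `fy`, `cx`, `cy` come from the Step 1 calibration (the source gives no values), each valid pixel can be back-projected into a 3D point. The function name `depth_to_points` is hypothetical.

```python
import numpy as np

def depth_to_points(depth_mm, fx, fy, cx, cy):
    """Back-project a raw depth frame into an (N, 3) point cloud in metres (hypothetical helper).

    depth_mm: (H, W) uint16 depth in millimetres; 0 means no reading and is skipped.
    fx, fy, cx, cy: pinhole intrinsics of the depth lens (assumed to come from calibration).
    """
    v, u = np.nonzero(depth_mm)                    # row/column indices of valid pixels, row-major order
    z = depth_mm[v, u].astype(np.float64) / 1000.0  # millimetres -> metres
    x = (u - cx) * z / fx
    y = (v - cy) * z / fy
    return np.column_stack([x, y, z])
```

Dropping zero-depth pixels up front keeps invalid readings out of later positioning and mapping steps instead of placing them at the camera origin.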
