Environmental sound recognition method based on ensemble learning and convolutional neural network

A technique combining convolutional neural networks with environmental sound recognition, applicable to biological neural network models, neural architectures, speech analysis, and related fields. It addresses the problems of easy overfitting and weak model generalization, thereby enhancing generalization ability and alleviating overfitting.

Embodiment Construction

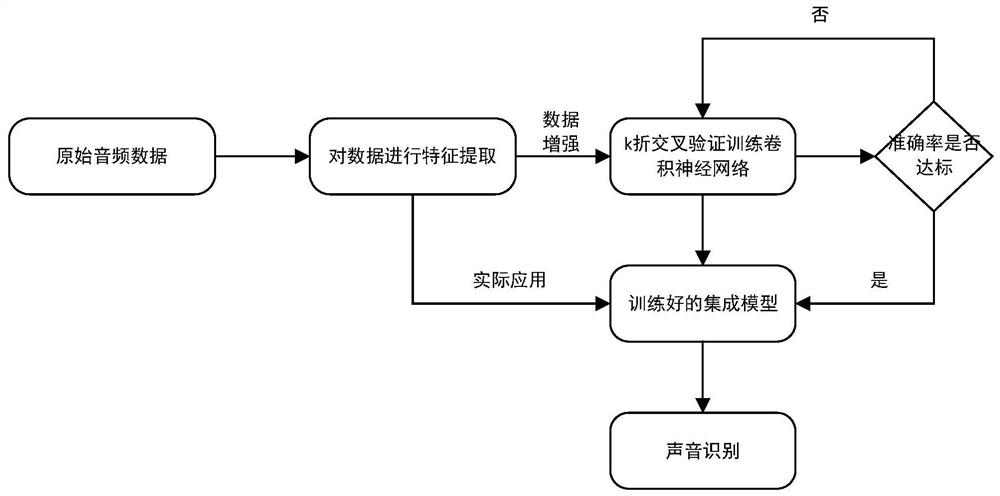

[0023] Figure 1 shows the flow chart of the environmental sound recognition method based on ensemble learning and a convolutional neural network. The method includes the following steps:

[0024] S1. Feature extraction. To facilitate speech analysis, N sampling points are first grouped into one observation unit, called a frame. To avoid excessive change between two adjacent frames, adjacent frames share an overlapping region. Each frame is multiplied by a window function, which removes possible signal discontinuities at both ends of the frame. For each short-time analysis window, the amplitude spectrum is obtained by FFT and squared to give the energy spectrum of the sound. The Mel energy spectrum is then obtained by applying a Mel filter bank, and a logarithmic nonlinear transformation of the Mel energy spectrum yields the final Mel energy spectrum feature;

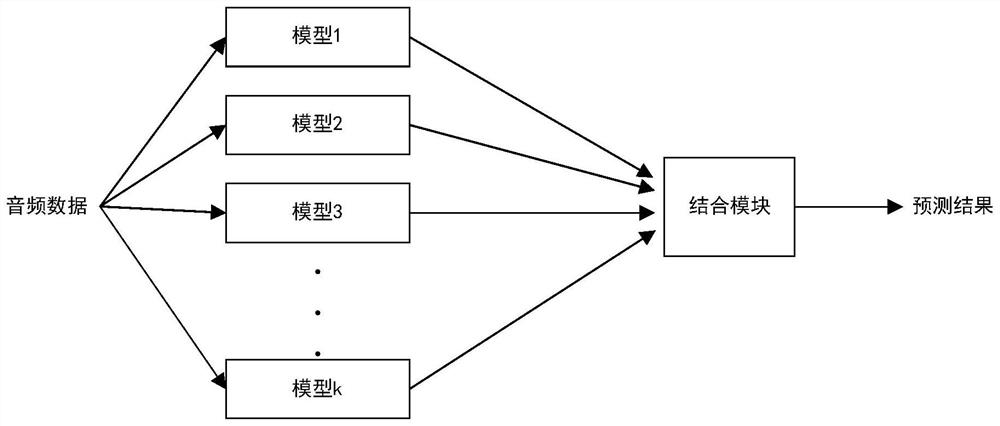

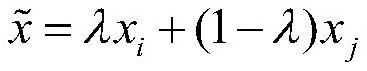
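
The feature extraction in step S1 can be sketched in NumPy as follows. This is a minimal illustration under stated assumptions, not the patent's implementation: the frame length (1024), hop length (512), Hamming window, 40 Mel bands, the common `2595 * log10(1 + f / 700)` Mel formula, the natural logarithm, and the small `eps` offset are all choices made here for the example, and the function names are hypothetical.

```python
import numpy as np


def hz_to_mel(f):
    # Common Mel-scale formula (assumed; the source does not give one).
    return 2595.0 * np.log10(1.0 + f / 700.0)


def mel_to_hz(m):
    return 700.0 * (10.0 ** (m / 2595.0) - 1.0)


def mel_filterbank(n_mels, n_fft, sample_rate):
    """Triangular filters spaced evenly on the Mel scale, shape (n_mels, n_fft // 2 + 1)."""
    mel_points = np.linspace(hz_to_mel(0.0), hz_to_mel(sample_rate / 2.0), n_mels + 2)
    hz_points = mel_to_hz(mel_points)
    fft_freqs = np.linspace(0.0, sample_rate / 2.0, n_fft // 2 + 1)
    fb = np.zeros((n_mels, n_fft // 2 + 1))
    for i in range(n_mels):
        left, center, right = hz_points[i:i + 3]
        rising = (fft_freqs - left) / (center - left)
        falling = (right - fft_freqs) / (right - center)
        fb[i] = np.maximum(0.0, np.minimum(rising, falling))
    return fb


def log_mel_energy(signal, sample_rate, frame_len=1024, hop_len=512, n_mels=40, eps=1e-10):
    """Return log-Mel energy features of shape (n_frames, n_mels)."""
    signal = np.asarray(signal, dtype=float)
    if len(signal) < frame_len:
        signal = np.pad(signal, (0, frame_len - len(signal)))

    # Framing: N = frame_len samples per frame, adjacent frames overlap by frame_len - hop_len.
    n_frames = 1 + (len(signal) - frame_len) // hop_len
    idx = np.arange(frame_len)[None, :] + hop_len * np.arange(n_frames)[:, None]
    frames = signal[idx]

    # Windowing: taper both ends of each frame to reduce edge discontinuities.
    frames = frames * np.hamming(frame_len)

    # FFT -> amplitude spectrum -> squared to give the energy spectrum.
    magnitude = np.abs(np.fft.rfft(frames, n=frame_len, axis=1))
    energy = magnitude ** 2

    # Mel filter bank, then log compression (eps avoids log(0)).
    mel_energy = energy @ mel_filterbank(n_mels, frame_len, sample_rate).T
    return np.log(mel_energy + eps)


if __name__ == "__main__":
    sr = 16000
    t = np.arange(sr) / sr
    tone = np.sin(2 * np.pi * 440.0 * t)  # one second of a 440 Hz test tone
    features = log_mel_energy(tone, sr)
    print(features.shape)
```

The resulting two-dimensional array (frames by Mel bands) is the kind of time-frequency representation that is typically passed to a convolutional network as a single-channel image.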

[0025] S2. Mode...
