Stereoscopic video quality evaluation method based on binocular fusion network and two-step training framework

A technology combining a binocular fusion network with stereoscopic video quality evaluation, applicable to stereoscopic systems, neural network learning methods, biological neural network models, and related fields. It addresses problems such as the difficulty of accurately evaluating asymmetric distortion and the unreasonableness of existing evaluation approaches, and achieves excellent accuracy, reliability, and improved performance.


Embodiment Construction

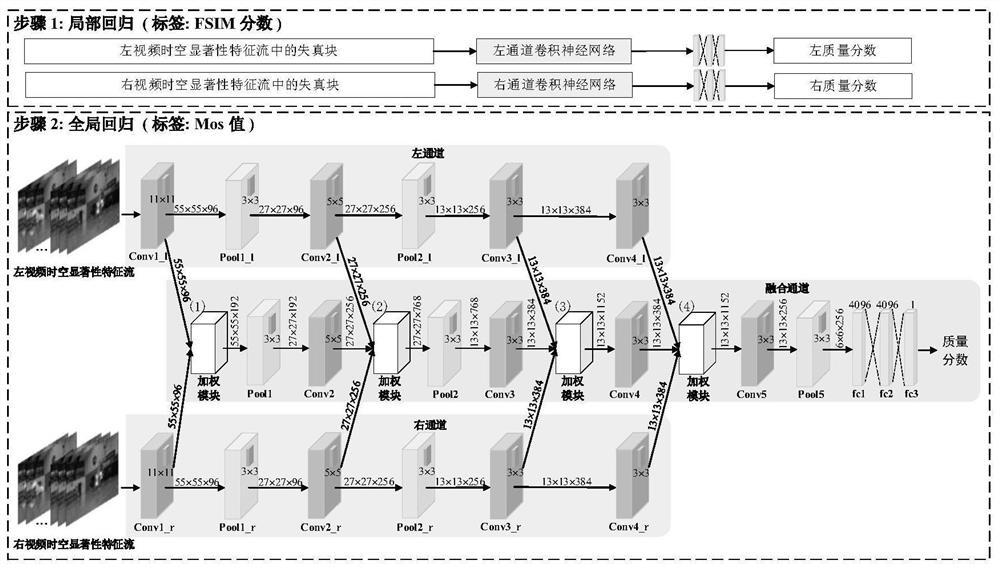

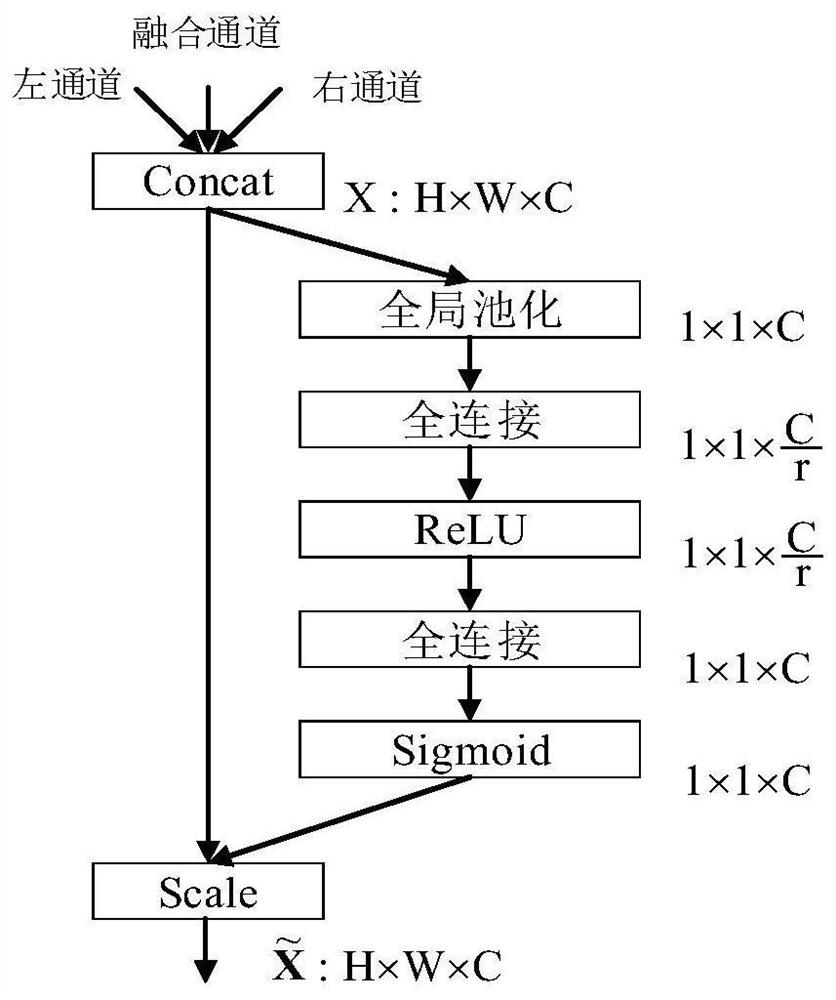

[0022] To solve the problems in the prior art, this patent embeds a "weighting module" into the fusion network to imitate binocular competition (binocular rivalry) as closely as possible, and adopts a two-step training framework. In the first step, quality scores for the image patches (blocks) are generated by the FSIM algorithm [17] and used as labels to train the local network by regression. In the second step, starting from the weights learned in the first step, the network is trained by global regression against the MOS (mean opinion score) values.
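The two-step framework can be illustrated with a deliberately tiny sketch. Everything concrete here is an assumption, not the patent's network: the "weighting module" is modeled as energy-based weighting of hypothetical left/right feature vectors (one common way to model binocular competition), the local network is replaced by a linear head trained with SGD, the patch labels are numbers supplied by the caller in place of FSIM scores, and video-level pooling is a plain average. What it shows is the structure: step 1 fits patch-level scores, and step 2 starts from those weights and regresses pooled predictions onto MOS.

```python
def energy(v):
    # Sum of squared feature activations, used as a proxy for each view's strength.
    return sum(x * x for x in v)

def fuse(left, right, eps=1e-8):
    # Hypothetical weighting module: each view is weighted by its share of the
    # total energy, so the stronger view dominates (simple binocular competition).
    e_l, e_r = energy(left), energy(right)
    w_l = (e_l + eps) / (e_l + e_r + 2 * eps)
    w_r = 1.0 - w_l
    return [w_l * a + w_r * b for a, b in zip(left, right)]

def predict(params, feat):
    # Linear head standing in for the local network (an assumption).
    w, b = params
    return sum(wi * xi for wi, xi in zip(w, feat)) + b

def sgd_step(params, feat, target, lr):
    # One gradient step on the squared error 0.5 * (pred - target)^2.
    w, b = params
    err = predict(params, feat) - target
    return [wi - lr * err * xi for wi, xi in zip(w, feat)], b - lr * err

def train_step1(patches, labels, epochs=1000, lr=0.1):
    # Step 1: regress fused patch features onto patch-level labels
    # (FSIM scores in the patent; here plain numbers from the caller).
    dim = len(patches[0][0])
    params = ([0.0] * dim, 0.0)
    for _ in range(epochs):
        for (left, right), y in zip(patches, labels):
            params = sgd_step(params, fuse(left, right), y, lr)
    return params

def video_feature(video_patches):
    # Average of fused patch features; for an affine head this gives the same
    # result as averaging the per-patch predictions.
    fused = [fuse(l, r) for l, r in video_patches]
    n = len(fused)
    return [sum(col) / n for col in zip(*fused)]

def train_step2(params, videos, mos, epochs=1000, lr=0.1):
    # Step 2: start from the step-1 weights and regress pooled predictions onto MOS.
    for _ in range(epochs):
        for vid, y in zip(videos, mos):
            params = sgd_step(params, video_feature(vid), y, lr)
    return params
```

In this sketch, step 2 reuses the step-1 parameters rather than starting from zero, so the dense patch-level supervision serves as an initialization that the scarcer MOS labels only need to adjust.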

[0023] To reflect the spatiotemporal correlation of the video, the present invention selects the spatiotemporal saliency feature stream as the input of the binocular fusion network. This is consistent with the theory that the temporal and spatial domains are not independent of each other: changes in spatial pixels provide motion information and attention cues to the temporal domain, and, in turn, the temporal stream reflects the spatial saliency in the video.
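To make "spatiotemporal saliency" concrete, here is a minimal sketch on grayscale frames stored as nested lists. The excerpt does not specify the saliency model, so every cue below is an assumption chosen for illustration: spatial saliency as forward-difference gradient magnitude, temporal saliency as the absolute difference between consecutive frames, and a convex blend with a hypothetical weight `alpha` as the combination rule.

```python
def abs_frame_diff(prev, curr):
    # Temporal cue: per-pixel absolute change between consecutive frames.
    return [[abs(c - p) for p, c in zip(rp, rc)] for rp, rc in zip(prev, curr)]

def gradient_magnitude(frame):
    # Spatial cue: L1 magnitude of forward differences (0 past the last row/column).
    h, w = len(frame), len(frame[0])
    out = [[0.0] * w for _ in range(h)]
    for i in range(h):
        for j in range(w):
            gx = frame[i][j + 1] - frame[i][j] if j + 1 < w else 0.0
            gy = frame[i + 1][j] - frame[i][j] if i + 1 < h else 0.0
            out[i][j] = abs(gx) + abs(gy)
    return out

def normalize(m):
    # Scale a map to [0, 1] by its peak; an all-zero map stays zero.
    peak = max(max(row) for row in m)
    return [[v / peak if peak > 0 else 0.0 for v in row] for row in m]

def spatiotemporal_saliency(prev, curr, alpha=0.5):
    # Hypothetical combination rule: convex blend of normalized spatial and
    # temporal saliency maps for the current frame.
    s = normalize(gradient_magnitude(curr))
    t = normalize(abs_frame_diff(prev, curr))
    return [[alpha * sv + (1 - alpha) * tv for sv, tv in zip(rs, rt)]
            for rs, rt in zip(s, t)]
```

A pixel that both changes over time and sits on a spatial edge scores highest, which loosely mirrors the coupling between the two domains described above; a multiplicative rule would be an equally plausible choice.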

[0024] Therefore, the main contributions of the present i...

