Model generation method, music synthesis method, system, equipment and medium

A technology for model generation methods and systems, applied in neural learning methods, biological neural network models, electroacoustic musical instruments, and related fields. It addresses problems such as poor-quality generated music fragments, complex model structures, and unsmooth transitions between notes, with the effects of enhancing fluency, increasing training speed, and reducing training time.

Examples

Embodiment 1

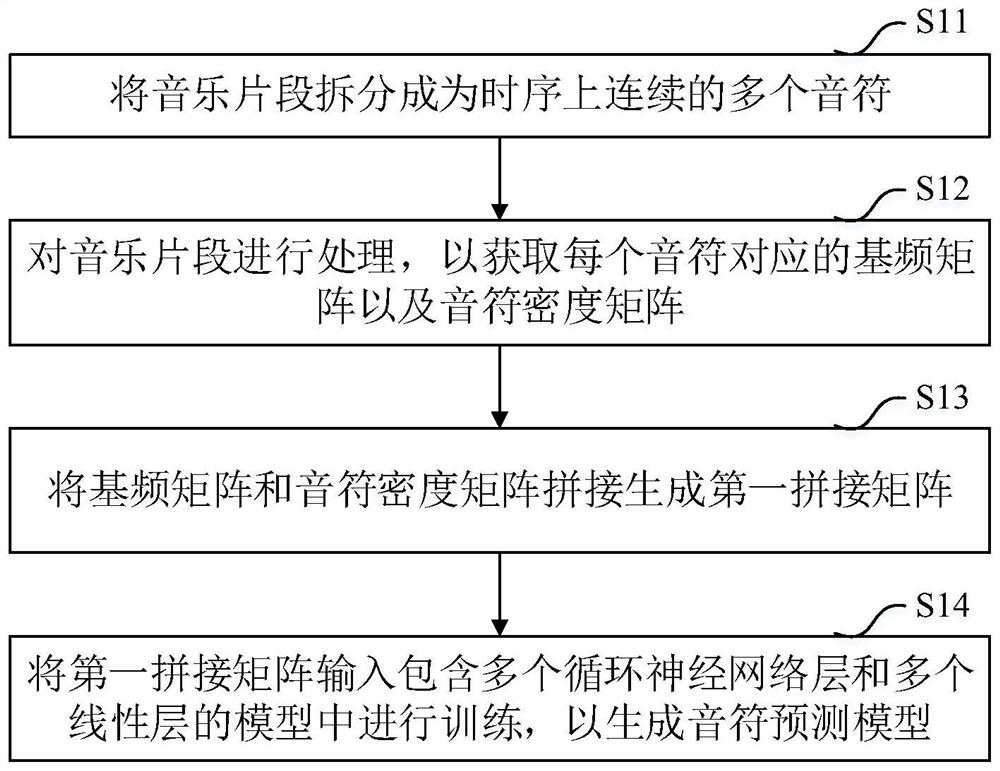

[0057] This embodiment provides a method for generating a model. As shown in Figure 1, the generation method includes:

[0058] Step S11: split the music segment into a plurality of time-sequential notes.
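Step S11 can be sketched as follows. The patent does not say how a music segment is represented, so this assumes a hypothetical list of `(pitch, onset, offset)` events with MIDI pitch numbers and times in seconds; the `Note` class and `split_segment` function are illustrative names, not part of the source.

```python
from dataclasses import dataclass
from typing import List, Tuple


@dataclass(frozen=True)
class Note:
    """One note of a music segment (hypothetical representation)."""
    pitch: int      # MIDI note number, e.g. 69 = A4
    onset: float    # trigger time in seconds
    offset: float   # end time in seconds


def split_segment(events: List[Tuple[int, float, float]]) -> List[Note]:
    """Split a segment given as (pitch, onset, offset) events into notes
    ordered in time (by onset, ties broken by pitch).

    Zero- or negative-length events are dropped; this filtering is an
    assumption, not something the patent specifies.
    """
    notes = [Note(p, on, off) for p, on, off in events if off > on]
    return sorted(notes, key=lambda n: (n.onset, n.pitch))
```

For example, `split_segment([(64, 0.5, 1.0), (60, 0.0, 0.5)])` returns the C4 note first and the E4 note second, regardless of the input order.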

[0059] Step S12: process the music segment to obtain a fundamental frequency matrix and a note density matrix corresponding to each note. The note density matrix represents the trigger (onset) and end times of the corresponding note. Specifically, the processing includes embedding.
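A minimal sketch of the per-note matrices in Step S12, under several assumptions not stated in the patent: a hypothetical frame rate of 100 frames per second, a fundamental frequency matrix holding the equal-temperament frequency `440 * 2**((pitch - 69) / 12)` Hz in every frame, and a two-column note density matrix whose first column flags the trigger frame and whose second column flags the ending frame. The embedding step the patent mentions would map these raw features to learned vectors; that step is left out here.

```python
from typing import List, Tuple

Matrix = List[List[float]]
FRAME_RATE = 100  # hypothetical: frames per second


def note_matrices(pitch: int, onset: float, offset: float,
                  frame_rate: int = FRAME_RATE) -> Tuple[Matrix, Matrix]:
    """Return (f0_matrix, density_matrix) for one note.

    f0_matrix:      T x 1, fundamental frequency in Hz for every frame
    density_matrix: T x 2, column 0 marks the trigger (onset) frame,
                    column 1 marks the ending frame
    """
    n_frames = max(1, round((offset - onset) * frame_rate))
    f0_hz = 440.0 * 2.0 ** ((pitch - 69) / 12)  # equal temperament, A4 = 440 Hz
    f0_matrix = [[f0_hz] for _ in range(n_frames)]
    density_matrix = [[0.0, 0.0] for _ in range(n_frames)]
    density_matrix[0][0] = 1.0   # note is triggered in the first frame
    density_matrix[-1][1] = 1.0  # note ends in the last frame
    return f0_matrix, density_matrix
```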

[0060] Step S13: concatenate the fundamental frequency matrix and the note density matrix to generate a first concatenated matrix.
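Step S13 can be illustrated as a frame-by-frame concatenation along the feature axis, so a T x d_f matrix and a T x d_n matrix become one T x (d_f + d_n) matrix. The patent does not state the concatenation axis; concatenating along features is an assumption, and `concat_features` is a hypothetical helper.

```python
from typing import List

Matrix = List[List[float]]


def concat_features(f0_matrix: Matrix, density_matrix: Matrix) -> Matrix:
    """Concatenate two matrices with the same number of frames, row by row."""
    if len(f0_matrix) != len(density_matrix):
        raise ValueError("both matrices must have the same number of frames")
    return [f_row + d_row for f_row, d_row in zip(f0_matrix, density_matrix)]
```

Each output row then carries both the pitch information and the onset/end flags for the same frame, which is what a frame-wise sequence model would consume.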

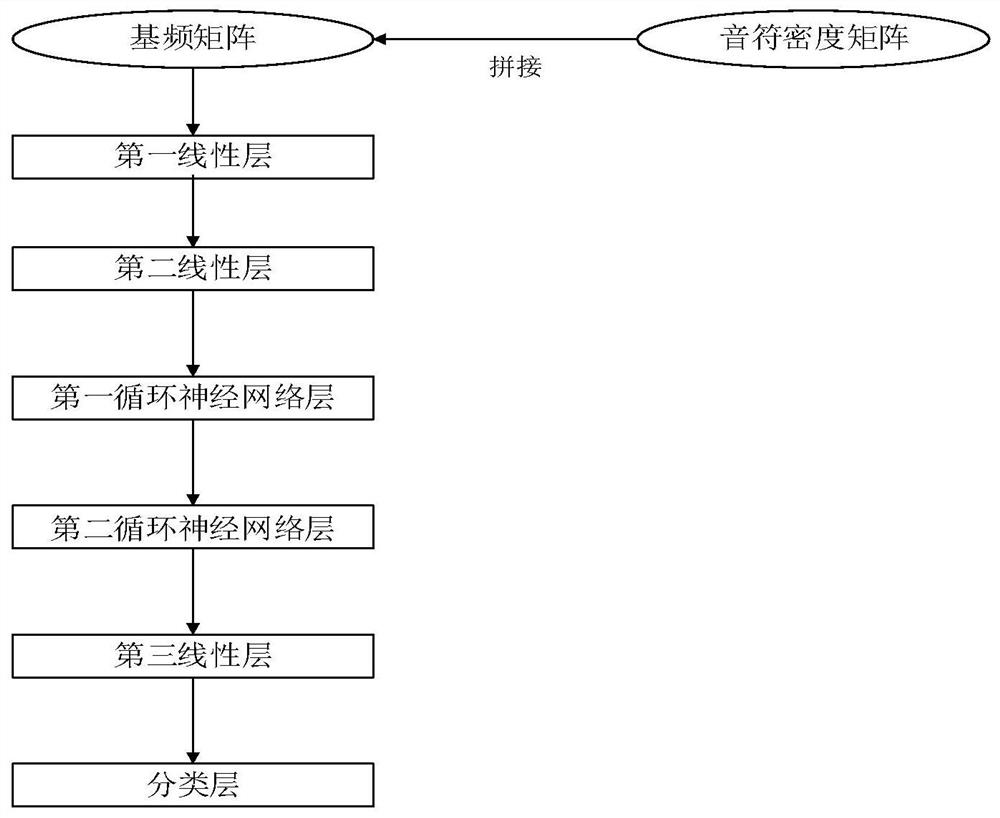

[0061] Step S14: input the first concatenated matrix into a model comprising a plurality of recurrent neural network (RNN) layers and a plurality of linear layers, and train it to generate a musical note prediction model. The linear layers are used to extract the musical features corresponding to the notes, and the recurrent neu...
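The description of the layers is truncated above, so the following is only a forward-pass sketch of one plausible arrangement: a linear feature layer (with an assumed ReLU), stacked Elman recurrent layers using `tanh`, and a linear output layer. Layer order, activations, dimensions, and the random initialization are all assumptions, and `NotePredictor` is a hypothetical name. Training (loss and backpropagation) is not shown; in practice a deep learning framework would handle that.

```python
import math
import random
from typing import List, Tuple

Vector = List[float]
Matrix = List[List[float]]


def linear(x: Vector, w: Matrix, b: Vector) -> Vector:
    """Affine map y = W x + b; W holds one row of input weights per output unit."""
    return [sum(wi * xi for wi, xi in zip(row, x)) + bi for row, bi in zip(w, b)]


def rnn_layer(seq: List[Vector], w_in: Matrix, w_rec: Matrix, b: Vector) -> List[Vector]:
    """Elman recurrent layer: h_t = tanh(W_in x_t + W_rec h_{t-1} + b), with h_0 = 0."""
    zeros = [0.0] * len(b)
    h = zeros
    outputs = []
    for x in seq:
        pre_in = linear(x, w_in, b)
        pre_rec = linear(h, w_rec, zeros)
        h = [math.tanh(a + r) for a, r in zip(pre_in, pre_rec)]
        outputs.append(h)
    return outputs


def rand_matrix(rng: random.Random, rows: int, cols: int, scale: float = 0.1) -> Matrix:
    return [[rng.uniform(-scale, scale) for _ in range(cols)] for _ in range(rows)]


class NotePredictor:
    """Hypothetical: linear feature layer -> stacked RNN layers -> linear output layer."""

    def __init__(self, in_dim: int, hidden_dim: int, out_dim: int,
                 num_rnn_layers: int = 2, seed: int = 0) -> None:
        rng = random.Random(seed)
        self.feat_w = rand_matrix(rng, hidden_dim, in_dim)
        self.feat_b = [0.0] * hidden_dim
        self.rnn: List[Tuple[Matrix, Matrix, Vector]] = [
            (rand_matrix(rng, hidden_dim, hidden_dim),
             rand_matrix(rng, hidden_dim, hidden_dim),
             [0.0] * hidden_dim)
            for _ in range(num_rnn_layers)
        ]
        self.out_w = rand_matrix(rng, out_dim, hidden_dim)
        self.out_b = [0.0] * out_dim

    def forward(self, seq: List[Vector]) -> List[Vector]:
        """Map a T x in_dim concatenated matrix to T x out_dim outputs."""
        # Linear layer extracts per-frame features (ReLU is an assumption).
        h = [[max(0.0, v) for v in linear(x, self.feat_w, self.feat_b)] for x in seq]
        # Recurrent layers carry information across frames.
        for w_in, w_rec, b in self.rnn:
            h = rnn_layer(h, w_in, w_rec, b)
        # Final linear layer produces the per-frame prediction.
        return [linear(v, self.out_w, self.out_b) for v in h]
```

Keeping the recurrent layers between two linear layers lets the first linear layer project the raw concatenated features into the hidden size the RNN expects, while the last one maps hidden states to whatever note representation the predictor outputs.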

Embodiment 2

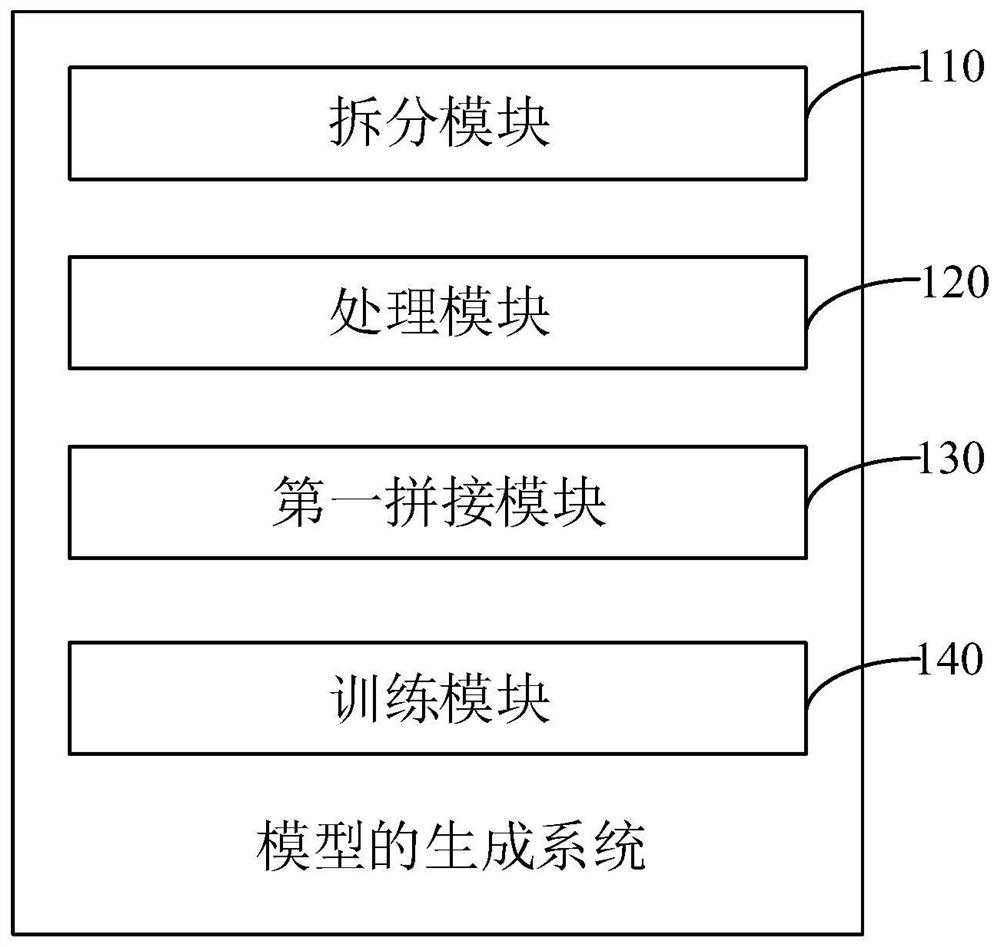

[0080] This embodiment provides a system for generating a model. As shown in Figure 3, the system includes a splitting module 110, a processing module 120, a first stitching module 130, and a training module 140.

[0081] The splitting module 110 is configured to split the music segment into a plurality of consecutive, time-sequential notes.

[0082] The processing module 120 is configured to process the music segment to obtain a fundamental frequency matrix and a note density matrix corresponding to each note. The note density matrix represents the trigger (onset) and end times of the corresponding note. Specifically, the processing includes embedding.

[0083] The first stitching module 130 is configured to concatenate the fundamental frequency matrix and the note density matrix to generate a first concatenated matrix.

[0084] The training module 140 is configured to input the first concatenated matrix into a model compri...
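The four modules of this system map onto Steps S11 to S14 of Embodiment 1. A minimal sketch of that wiring, assuming each module is a plain callable injected into a hypothetical `ModelGenerationSystem` class (the class, field names, and call signatures are illustrative, not from the patent):

```python
from dataclasses import dataclass
from typing import Any, Callable, List, Tuple


@dataclass
class ModelGenerationSystem:
    """Hypothetical wiring of modules 110-140 as injected callables."""
    split: Callable[[Any], List[Any]]                 # splitting module 110
    process: Callable[[Any, Any], Tuple[Any, Any]]    # processing module 120 -> (f0, density)
    stitch: Callable[[Any, Any], Any]                 # first stitching module 130
    train: Callable[[List[Any]], Any]                 # training module 140

    def run(self, segment: Any) -> Any:
        """Split the segment, build one concatenated matrix per note, then train."""
        notes = self.split(segment)
        stitched = []
        for note in notes:
            f0, density = self.process(segment, note)
            stitched.append(self.stitch(f0, density))
        return self.train(stitched)
```

Injecting the modules keeps each one replaceable and testable on its own, which mirrors how the patent describes them as separate components.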

Embodiment 3

[0102] This embodiment provides a music synthesis method. As shown in Figure 4, the method includes:

[0103] Step S21: train and generate a musical note prediction model using the model generation method of Embodiment 1.

[0104] Step S22: obtain the number of preset notes contained in the music to be synthesized, together with the target fundamental frequency matrix and target note density matrix corresponding to each preset note, where each preset note carries a note position label.

[0105] Step S23: concatenate the target fundamental frequency matrix and the target note density matrix to generate a target concatenated matrix.

[0106] Step S24: input the target concatenated matrices of all the preset notes into the note prediction model one by one, following the preset time-sequential order of the notes, to obtain the target note corresponding to each preset note.
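Step S24 can be sketched as sorting the preset notes by their note position label and feeding each target concatenated matrix to the model in that order. This assumes the position label alone defines the time-sequential order and treats the model as a hypothetical stateless callable; the patent does not say whether state is carried from one note to the next. `PresetNote` and `predict_target_notes` are illustrative names.

```python
from dataclasses import dataclass
from typing import Any, Callable, List

Matrix = List[List[float]]


@dataclass
class PresetNote:
    position: int          # note position label
    target_matrix: Matrix  # target concatenated matrix for this note


def predict_target_notes(presets: List[PresetNote],
                         model: Callable[[Matrix], Any]) -> List[Any]:
    """Run the model on each preset note's matrix in position order."""
    ordered = sorted(presets, key=lambda p: p.position)
    return [model(p.target_matrix) for p in ordered]
```

The returned list is already in position order, so the target notes can be joined in sequence afterwards.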

[0107] Step S25: concatenate all the target notes according to ...
