Tensor cache and access structure and method thereof

This cache and access technology, applied in memory systems, register devices, instruments, and similar fields, addresses problems such as inconsistent data access latency, complex memory access scheduling, and memory bank access conflicts.


Embodiment Construction

[0039] The present invention will be described in further detail below in conjunction with the accompanying drawings and specific embodiments.

[0040] This embodiment discloses a Tensor cache and access structure and method thereof, which mainly provides a data caching, access, and parallel processing scheme for regular two-dimensional/three-dimensional data access and processing.

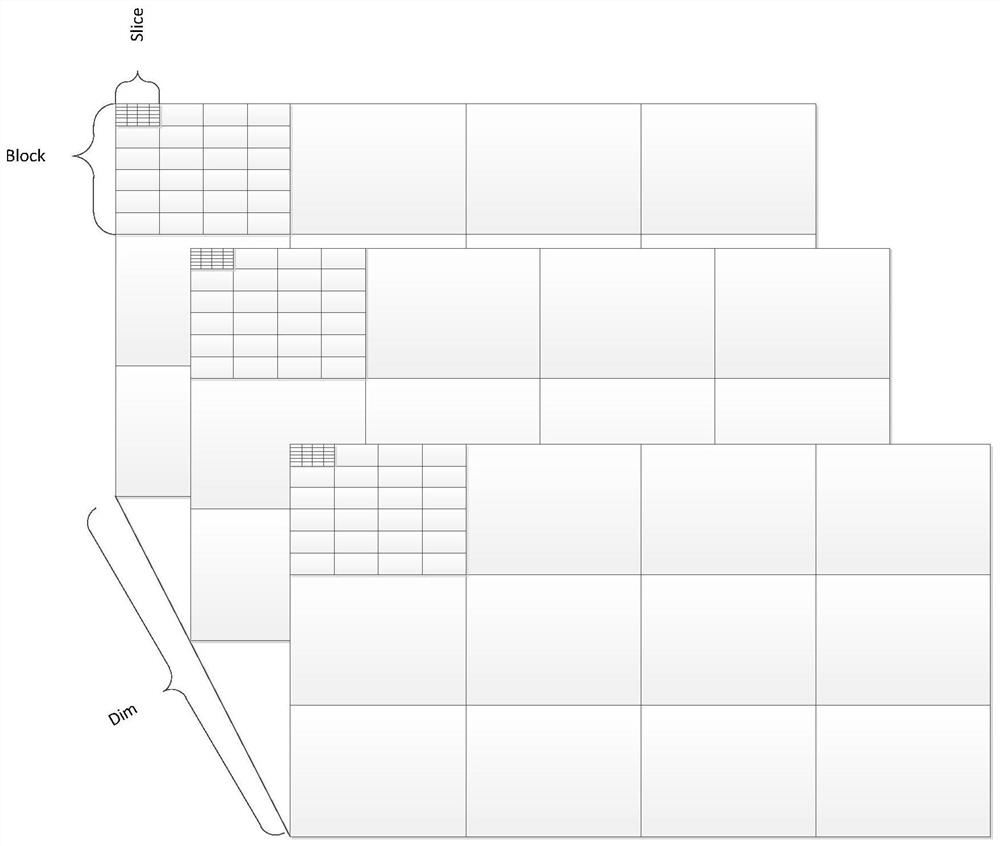

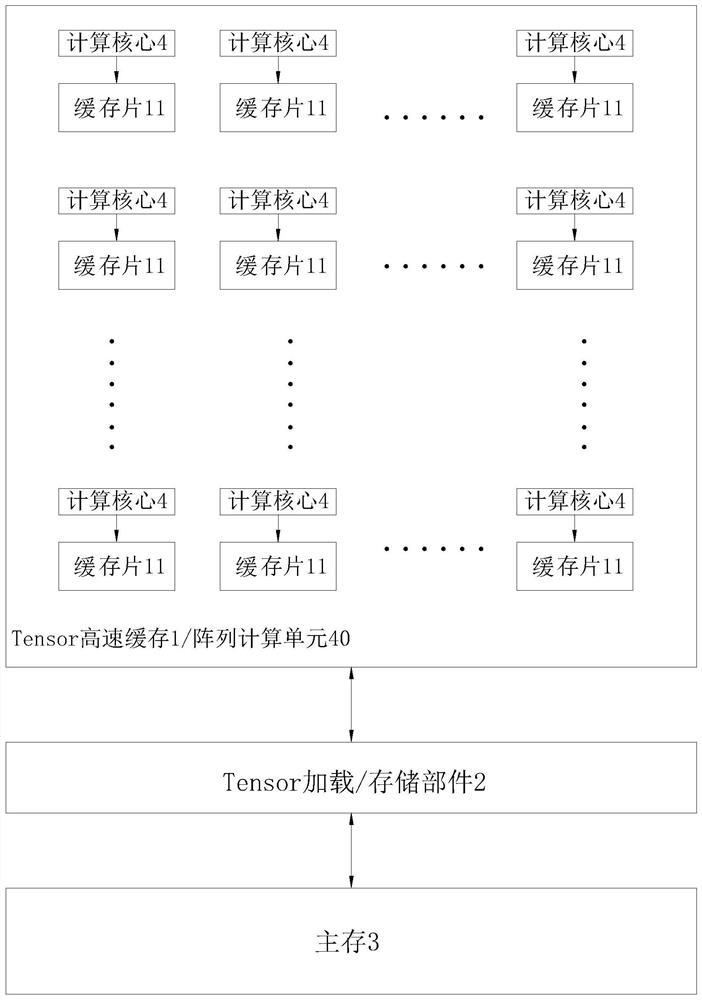

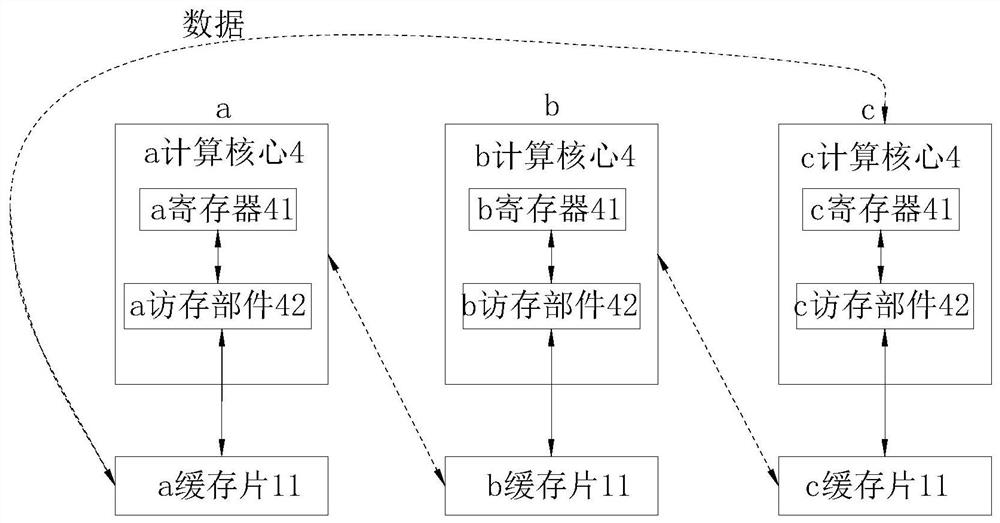

[0041] As shown in Figure 1, this solution includes a Tensor cache 1 in which data is distributed across multiple dimensions. Specifically, the Tensor cache 1 is divided into multiple Block cache blocks in three dimensions; each cache block contains multiple Slice caches arranged in a two-dimensional array, and each cache slice contains multiple memory banks arranged in a two-dimensional array.
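The block/slice/bank hierarchy described above can be illustrated with a short Python sketch that maps an element coordinate to a (block, slice, bank) triple. The source does not specify the address mapping: the low-order interleaving policy, the `CacheGeometry` class, the `locate` function, and all sizes below are hypothetical, chosen only to show how spreading adjacent elements across banks can avoid bank access conflicts.

```python
from dataclasses import dataclass
from typing import Tuple

Index2 = Tuple[int, int]
Index3 = Tuple[int, int, int]


@dataclass(frozen=True)
class CacheGeometry:
    """Hypothetical geometry of the hierarchical Tensor cache (sizes are assumptions)."""
    blocks: Index3  # number of Block cache blocks along the three dimensions
    slices: Index2  # Slice caches per block, as a 2-D array (rows, cols)
    banks: Index2   # memory banks per slice, as a 2-D array (rows, cols)


def locate(g: CacheGeometry, z: int, y: int, x: int) -> Tuple[Index3, Index2, Index2]:
    """Map element coordinate (z, y, x) to (block, slice, bank) indices.

    Illustrative low-order interleaving, NOT the mapping from the source:
    the lowest-order part of (y, x) picks the bank, the next part picks
    the slice, and what remains (plus z) picks the block, wrapping modulo
    the number of blocks in each dimension.
    """
    bank_rows, bank_cols = g.banks
    slice_rows, slice_cols = g.slices
    blk_z, blk_y, blk_x = g.blocks

    # Lowest-order part: bank within a slice.
    bank = (y % bank_rows, x % bank_cols)
    y, x = y // bank_rows, x // bank_cols

    # Next part: slice within a block.
    slc = (y % slice_rows, x % slice_cols)
    y, x = y // slice_rows, x // slice_cols

    # Remaining part (and z): block, wrapped to the block array size.
    block = (z % blk_z, y % blk_y, x % blk_x)
    return block, slc, bank


if __name__ == "__main__":
    g = CacheGeometry(blocks=(2, 2, 2), slices=(2, 2), banks=(4, 4))
    # Four horizontally adjacent elements fall in four different banks,
    # so a row-wise access of width 4 would not conflict under this policy.
    for x in range(4):
        print(x, locate(g, 0, 0, x))
```

Under this assumed policy, a row of `banks[1]` consecutive elements always lands in distinct banks of the same slice, which is one way a multi-bank layout can serve a regular 2-D access in a single parallel step; a real design would pick the interleaving to match its dominant access patterns.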

[0042] The Tensor structure variable tensor has the following parameters:

[0043] Length along the third dimension: DIM;

[0044] Block cache block array contained in two dimensions: BLOCK={BLOCK_ROW,BLOCK_COLUM...
