Target detection and visual positioning method based on YOLO series

A technology for visual positioning and target detection, applied in neural learning methods, character and pattern recognition, image data processing, etc. It addresses the problems of high missed-detection rates, low applicability, and high error rates.

Embodiment Construction

[0035] The present invention will be further described below in conjunction with the accompanying drawings.

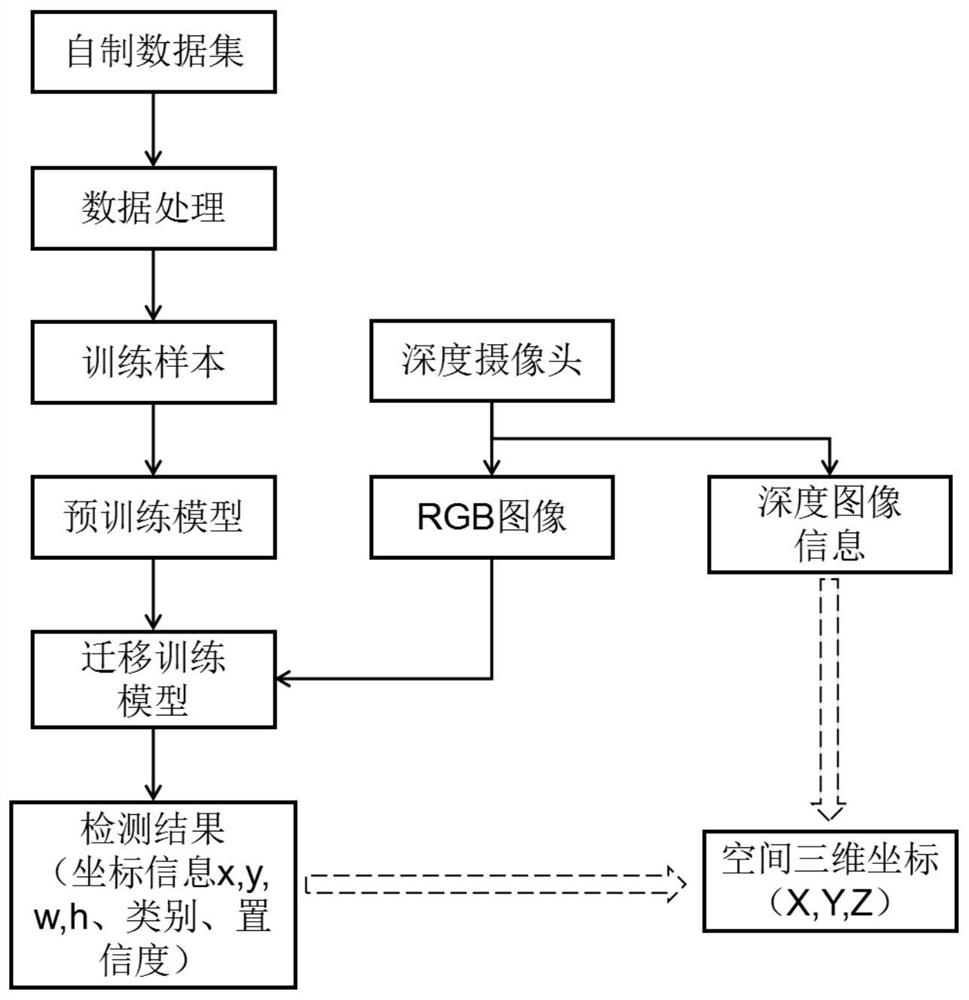

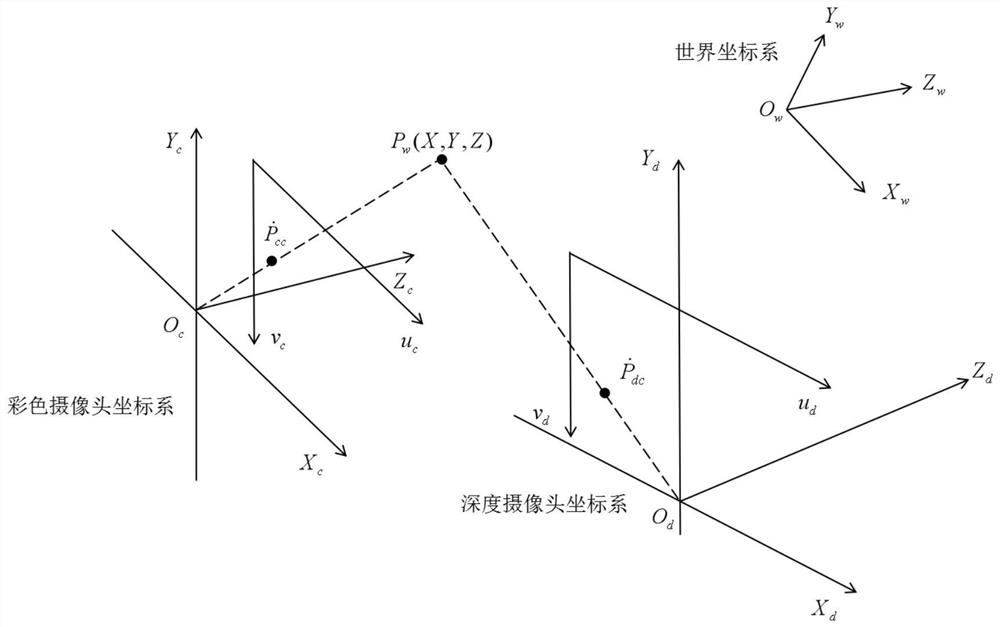

[0036] As shown in Figure 1, a target detection and visual positioning method based on the YOLO series includes the following steps:

[0037] (1) Collect RGB color images of the target to be detected, and build a custom image set of the target to be detected;

[0038] Specifically, the RGB color images in step (1) are captured by a D435i depth camera fixed directly above the target to be detected; the D435i depth camera includes an IMU, a stereo (binocular) camera, and an infrared emitter module, and is operated through a configured ROS environment.

[0039] (2) Annotate the image set, perform data processing, and define the training, test, and validation samples;
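The sample definition in step (2) can be illustrated with a minimal sketch that shuffles image names and cuts them into training, validation, and test lists. The function name `split_samples`, the 70/15/15 ratios, and the fixed seed are assumptions for illustration only; the visible text does not state the proportions actually used.

```python
import random


def split_samples(names, train=0.7, val=0.15, seed=0):
    """Shuffle image names reproducibly and cut them into three lists.

    The ratios and seed are illustrative assumptions, not values
    taken from the patent text.
    """
    if not 0 < train < 1 or not 0 <= val < 1 or train + val >= 1:
        raise ValueError("train and val must leave room for a test split")
    # Sort first so the result does not depend on the input order.
    shuffled = sorted(names)
    random.Random(seed).shuffle(shuffled)
    n_train = int(len(shuffled) * train)
    n_val = int(len(shuffled) * val)
    return {
        "train": shuffled[:n_train],
        "val": shuffled[n_train:n_train + n_val],
        "test": shuffled[n_train + n_val:],  # whatever remains
    }


if __name__ == "__main__":
    splits = split_samples([f"img_{i:04d}" for i in range(100)])
    print({name: len(items) for name, items in splits.items()})
```

Seeding a private `random.Random` instance keeps the split reproducible across runs without touching the global random state, which helps when the same image set is re-annotated or extended later.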

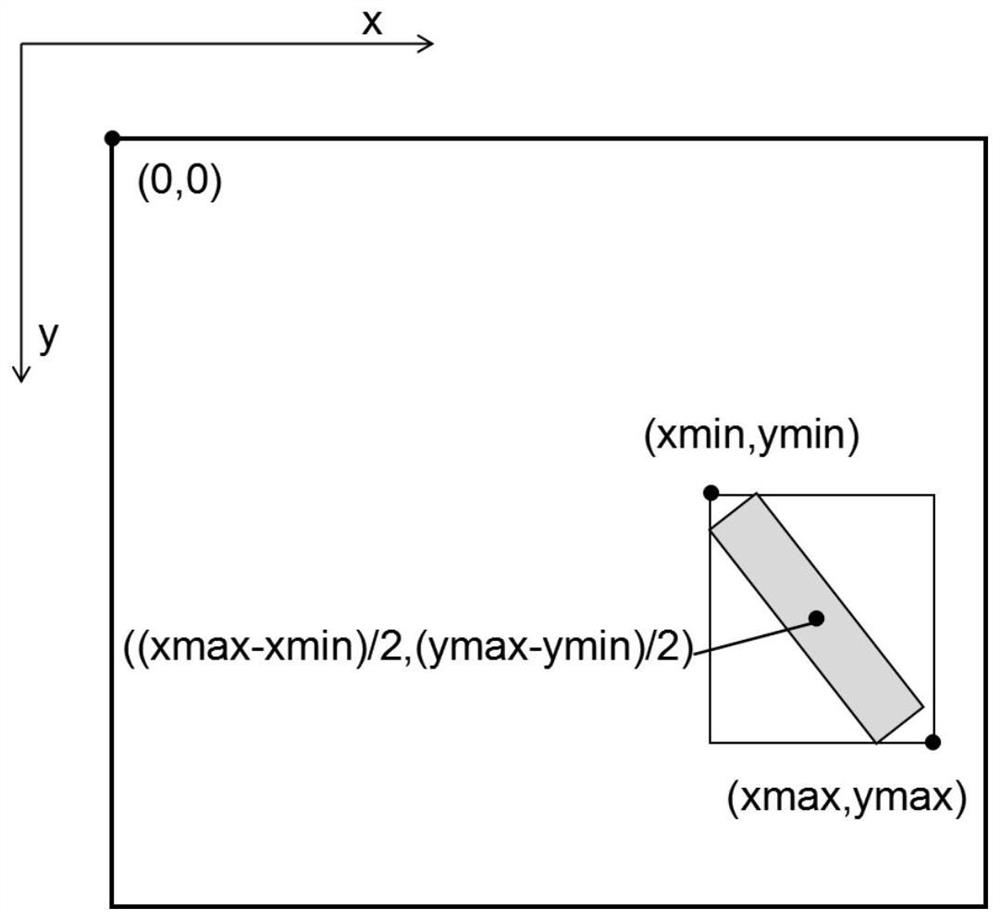

[0040] Specifically, the image annotation tool in step (2) is LabelImg, which marks the coordinates and category of each target to be detected with a rectangular bounding box and outputs the annotations in VOC format; each image to be d...
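As a rough illustration of working with VOC-format annotations, the sketch below reads one Pascal VOC XML document and converts each rectangular box into the normalized `(class, x_center, y_center, width, height)` row that YOLO trainers commonly expect. The conversion step, the function name `voc_to_yolo`, the class name `part`, and the sample XML are all assumptions for illustration; the visible text does not say whether or how the annotations are converted.

```python
import xml.etree.ElementTree as ET

# Hypothetical annotation, trimmed to the fields used below.
SAMPLE_XML = """<annotation>
  <filename>sample.jpg</filename>
  <size><width>640</width><height>480</height><depth>3</depth></size>
  <object>
    <name>part</name>
    <bndbox><xmin>100</xmin><ymin>120</ymin><xmax>300</xmax><ymax>360</ymax></bndbox>
  </object>
</annotation>"""


def voc_to_yolo(xml_text, class_names):
    """Return one (class_id, xc, yc, w, h) tuple per annotated object.

    Coordinates are normalized by the image width and height.
    """
    root = ET.fromstring(xml_text)
    width = float(root.findtext("size/width"))
    height = float(root.findtext("size/height"))
    rows = []
    for obj in root.iter("object"):
        class_id = class_names.index(obj.findtext("name"))
        box = obj.find("bndbox")
        xmin, ymin, xmax, ymax = (
            float(box.findtext(tag)) for tag in ("xmin", "ymin", "xmax", "ymax")
        )
        rows.append((
            class_id,
            (xmin + xmax) / 2 / width,   # box center, x
            (ymin + ymax) / 2 / height,  # box center, y
            (xmax - xmin) / width,       # box width
            (ymax - ymin) / height,      # box height
        ))
    return rows


if __name__ == "__main__":
    for row in voc_to_yolo(SAMPLE_XML, ["part"]):
        print(row)
```

An unknown category raises `ValueError` from `list.index`, which surfaces labeling typos early instead of silently dropping boxes.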
