High-performance-oriented intelligent cache replacement strategy adaptive to prefetching

Technical field: cache replacement and high-performance computing, applicable to memory systems, memory address allocation/relocation, instruments, etc. The technology addresses problems such as declining performance gains, and achieves reduced interference, avoidance of cache pollution, and a good performance advantage.


Detailed Description of the Embodiments

[0029] To make the purpose, technical solutions, and advantages of the present invention clearer, embodiments of the present invention are described in detail below with reference to the accompanying drawings.

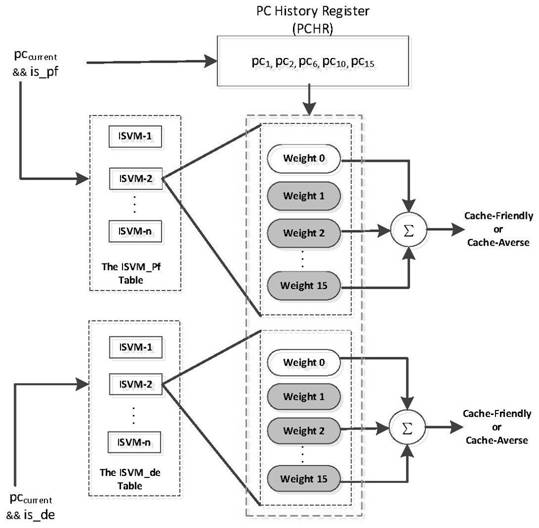

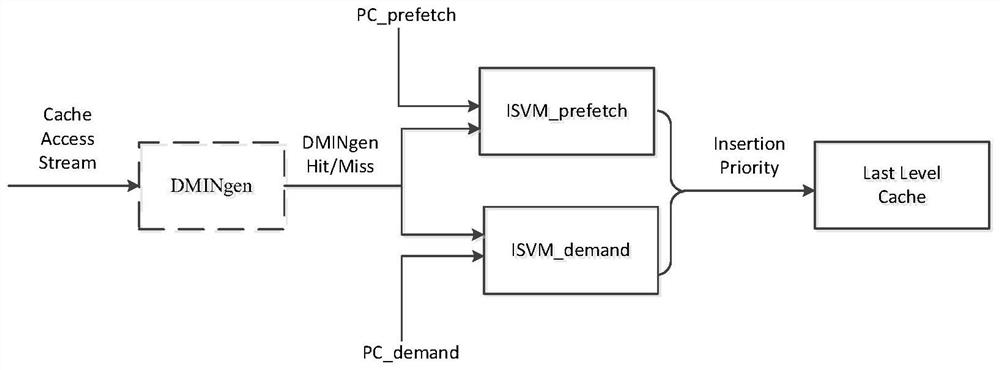

[0030] The present invention relates to an intelligent cache replacement strategy that adapts to prefetching. As shown in Figure 1, its main components are a Demand Request Predictor and a Prefetch Request Predictor, which make reuse-interval predictions, and DMINgen, which simulates the Demand-MIN algorithm to provide the input labels used to train the predictors. The design consists of two parts: training and prediction for the demand predictor and for the prefetch predictor. The input data of the demand predictor include the PC address of the load instruction that generates the demand access and the past PC addresses stored in the PCHR; the input data of the prefetch predictor include the PC address of the load instruction that tri...
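The demand-predictor inputs described in [0030] can be illustrated with a minimal Python sketch. Everything beyond the names PCHR, demand predictor, and DMINgen is an assumption: the PCHR is modeled as a register holding the last few distinct load PCs; an access is scored by summing small saturating integer weights selected by the current PC and each history PC (loosely in the style of integer-SVM predictors used in earlier learned replacement policies, not necessarily the model this invention uses); and the DMINgen label is treated as a boolean meaning "this access would hit under Demand-MIN". The classes `PCHR` and `ReusePredictor`, the history depth, the table size, and the thresholds are all hypothetical. The prefetch predictor's inputs are truncated in the text above, so only the demand path is shown.

```python
from collections import deque


class PCHR:
    """Hypothetical PC history register: the most recent `depth` distinct load PCs."""

    def __init__(self, depth=5):
        self.history = deque(maxlen=depth)

    def update(self, pc):
        # Keep PCs unique: move a repeated PC to the most-recent position.
        if pc in self.history:
            self.history.remove(pc)
        self.history.append(pc)  # maxlen drops the oldest PC when full

    def snapshot(self):
        return tuple(self.history)


class ReusePredictor:
    """Hypothetical reuse predictor: one weight row per current PC,
    indexed by a simple modulo hash of each PC in the history."""

    def __init__(self, table_size=16, threshold=0, weight_limit=8, train_margin=4):
        self.table_size = table_size
        self.threshold = threshold        # score >= threshold -> cache-friendly
        self.weight_limit = weight_limit  # weights saturate in [-limit, +limit]
        self.train_margin = train_margin  # skip updates when already confidently correct
        self.weights = {}                 # current PC -> list of integer weights

    def _row(self, pc):
        return self.weights.setdefault(pc, [0] * self.table_size)

    def _indices(self, history):
        return [h % self.table_size for h in history]

    def score(self, pc, history):
        row = self._row(pc)
        return sum(row[i] for i in self._indices(history))

    def predict(self, pc, history):
        # True: predicted short reuse interval (keep); False: predicted cache-averse.
        return self.score(pc, history) >= self.threshold

    def train(self, pc, history, label):
        # `label` stands in for the DMINgen outcome for this access (assumption).
        s = self.score(pc, history)
        if (label and s >= self.train_margin) or (not label and s < -self.train_margin):
            return
        step = 1 if label else -1
        row = self._row(pc)
        for i in self._indices(history):
            row[i] = max(-self.weight_limit, min(self.weight_limit, row[i] + step))
```

In this sketch, the PCHR snapshot is taken before the current PC is pushed, `predict` decides whether the accessed line should be kept or inserted at low priority, and `train` is called once DMINgen resolves the label for that access. A second `ReusePredictor` instance could serve as the prefetch predictor, with whatever inputs the full specification defines.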
