Distributed training method for large-scale deep neural network

A training method for deep neural networks, applied in the field of distributed training of large-scale deep neural networks, that achieves efficient scaling.

Embodiment Construction

[0046] To make the purpose, technical solution, and technical effect of the present invention clearer, the technical solutions in the embodiments of the present invention are described clearly and completely below in conjunction with the accompanying drawings. Obviously, the described embodiments are only some, but not all, of the embodiments of the present invention.

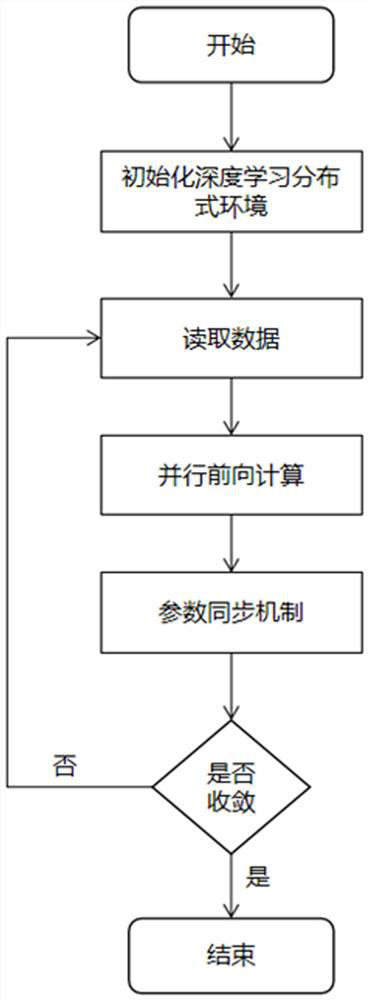

[0047] As shown in Figures 1-3, the distributed training method for large-scale deep neural networks provided by the present invention comprises the following steps:

[0048] S1: Determine the total number of servers and the number of GPUs available on each server, build and initialize a distributed deep learning environment, and determine the overall BatchSize and learning rate used during training, as well as the communication mechanism used by all computing nodes during the parameter update phase.

[0049] Specifically, the total number of servers and the number of GPUs available for each serv...
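The bookkeeping in step S1 can be illustrated with a minimal Python sketch. It is not the patent's implementation. The `TrainingPlan` class, its field names, and the `"allreduce"` label are hypothetical. The sketch also assumes that every server has the same number of GPUs and that the learning rate follows the linear scaling rule (learning rate proportional to the overall BatchSize); the text above does not state how the learning rate is chosen.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class TrainingPlan:
    """Hypothetical container for the quantities fixed in step S1."""

    num_servers: int         # total number of servers
    gpus_per_server: int     # assumed identical on every server
    per_gpu_batch_size: int  # samples processed by one GPU per step
    base_lr: float           # learning rate tuned for base_batch_size
    base_batch_size: int     # reference batch size for base_lr
    comm_mechanism: str = "allreduce"  # placeholder label, not from the patent

    def __post_init__(self) -> None:
        # Reject configurations that cannot describe a real cluster.
        for name in ("num_servers", "gpus_per_server",
                     "per_gpu_batch_size", "base_batch_size"):
            if getattr(self, name) <= 0:
                raise ValueError(f"{name} must be positive")

    @property
    def world_size(self) -> int:
        """Total number of computing nodes (one process per GPU)."""
        return self.num_servers * self.gpus_per_server

    @property
    def global_batch_size(self) -> int:
        """Overall BatchSize summed over all GPUs."""
        return self.world_size * self.per_gpu_batch_size

    @property
    def scaled_lr(self) -> float:
        """Learning rate under the linear scaling rule (an assumption)."""
        return self.base_lr * self.global_batch_size / self.base_batch_size

    def global_rank(self, server_index: int, local_gpu_index: int) -> int:
        """Map (server, local GPU) to a unique rank in [0, world_size)."""
        if not 0 <= server_index < self.num_servers:
            raise ValueError("server_index out of range")
        if not 0 <= local_gpu_index < self.gpus_per_server:
            raise ValueError("local_gpu_index out of range")
        return server_index * self.gpus_per_server + local_gpu_index


if __name__ == "__main__":
    plan = TrainingPlan(num_servers=4, gpus_per_server=8,
                        per_gpu_batch_size=32, base_lr=0.1,
                        base_batch_size=256)
    print(plan.world_size, plan.global_batch_size, plan.scaled_lr)
```

In a real deployment these values would typically be passed to the framework's own initialization routine; the sketch only shows how the overall BatchSize and a scaled learning rate follow from the server and GPU counts.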
