Multi-robot relative positioning method based on multi-sensor fusion

A multi-sensor fusion, multi-robot technology applied in the field of UAV perception and positioning research. It addresses distance-related problems, improves positioning accuracy and calculation speed, and increases the observation update frequency.

Embodiment Construction

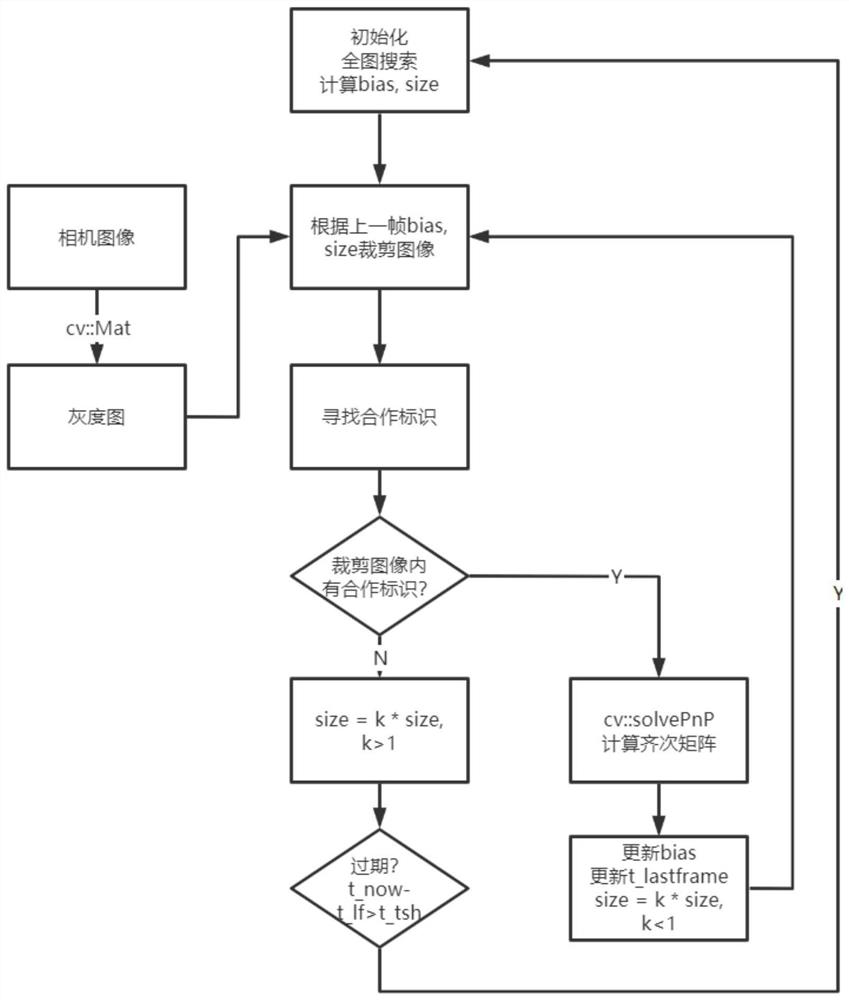

[0036] The present invention is now further described with reference to the drawings:

[0037] The object of the present invention is to design a mutual positioning method based on multi-sensor fusion. The method is intended to give the drones a consistent perception of the multi-drone configuration and to provide stamped information for the control module.

[0038] In order to achieve the above object, the technical solution used in the present invention includes the following steps:

[0039] 1) Each drone visually estimates the positions of the other drones within its field of view;

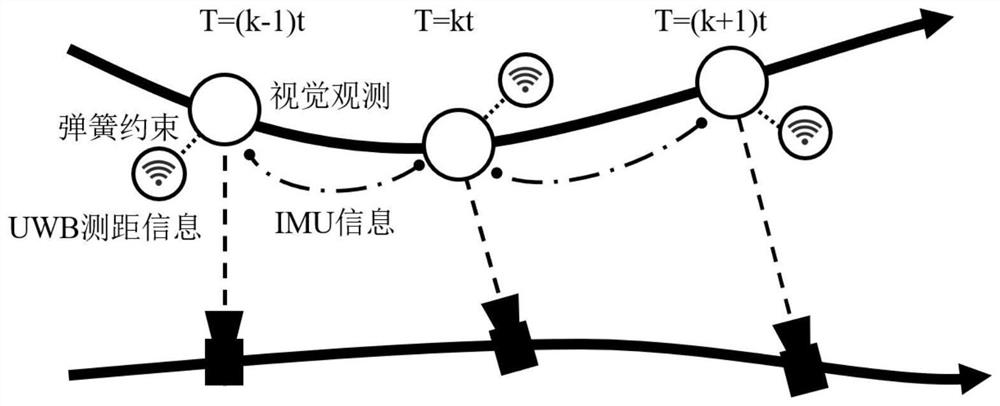

[0040] 2) The IMU information, the visual estimate, and the UWB ranging information are fused;

[0041] 3) The positioning results from multiple drones are fused.
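The text available here does not specify which fusion algorithm is used in steps 2) and 3). As a purely illustrative sketch, and not the method claimed by the invention, two independent range estimates (for example one from vision and one from UWB) can be combined by inverse-variance weighting. The `Estimate` type, the `fuse` function, and all numeric values below are assumptions introduced for this example.

```python
from dataclasses import dataclass


@dataclass
class Estimate:
    """A scalar range estimate with its uncertainty (hypothetical type)."""
    value: float     # estimated range in metres
    variance: float  # variance of the estimate in square metres


def fuse(a: Estimate, b: Estimate) -> Estimate:
    """Inverse-variance weighted fusion of two independent estimates."""
    if a.variance <= 0 or b.variance <= 0:
        raise ValueError("variances must be positive")
    w_a = 1.0 / a.variance
    w_b = 1.0 / b.variance
    fused_variance = 1.0 / (w_a + w_b)
    fused_value = fused_variance * (w_a * a.value + w_b * b.value)
    return Estimate(fused_value, fused_variance)


# Illustrative numbers only: a noisier visual range and a tighter UWB range.
visual = Estimate(value=5.2, variance=0.25)
uwb = Estimate(value=5.0, variance=0.01)
fused = fuse(visual, uwb)
print(fused)
```

The fused value is pulled toward the lower-variance measurement, and the fused variance is smaller than either input. A full system would more likely run a recursive filter over the IMU, vision, and UWB streams; this sketch only shows the weighting idea for a single pair of measurements.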

[0042] Step 1), the single-drone estimate of the other drones, proceeds as follows:

[0043] 1.1) Setting the cooperative marker: an AprilTag is used as the cooperative marker, printed in black and white, with an outer frame of 0.5 m.

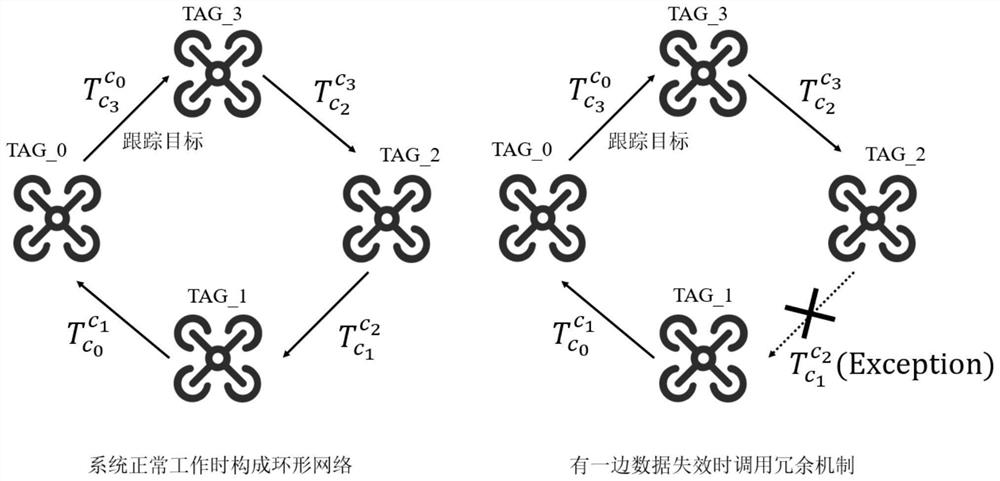

[0044] 1.2) Determine the system topology: The performance of the airb...
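To illustrate why the physical size of the marker in step 1.1) matters, the following minimal sketch estimates range from the apparent width of the tag using the pinhole camera model. It assumes the tag faces the camera roughly head-on and that 0.5 m is the edge length of the outer frame; the function name, the focal length, and the pixel width are hypothetical values chosen for the example. It is not the estimation procedure of the invention, and AprilTag detectors typically recover a full pose from the four detected corners instead.

```python
TAG_SIZE_M = 0.5  # outer frame size stated in step 1.1), assumed to be the edge length


def range_from_tag_width(focal_length_px: float,
                         tag_width_px: float,
                         tag_size_m: float = TAG_SIZE_M) -> float:
    """Pinhole-model range: distance = focal length * real size / apparent size."""
    if focal_length_px <= 0 or tag_width_px <= 0 or tag_size_m <= 0:
        raise ValueError("all arguments must be positive")
    return focal_length_px * tag_size_m / tag_width_px


# Hypothetical camera with a 600 px focal length; the tag appears 60 px wide.
distance_m = range_from_tag_width(focal_length_px=600.0, tag_width_px=60.0)
print(distance_m)
```

Because range is inversely proportional to apparent width, a larger printed tag remains measurable at a greater distance for the same camera.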
