Neural network generation method and device, and computer readable storage medium

A neural network generation method and device, and a computer-readable storage medium, in the field of neural network model technology. The technology addresses problems such as high computing-power consumption, and achieves the effect of reducing the number of training runs, reducing cost, and reducing computing-power requirements.

Examples

Embodiment 1

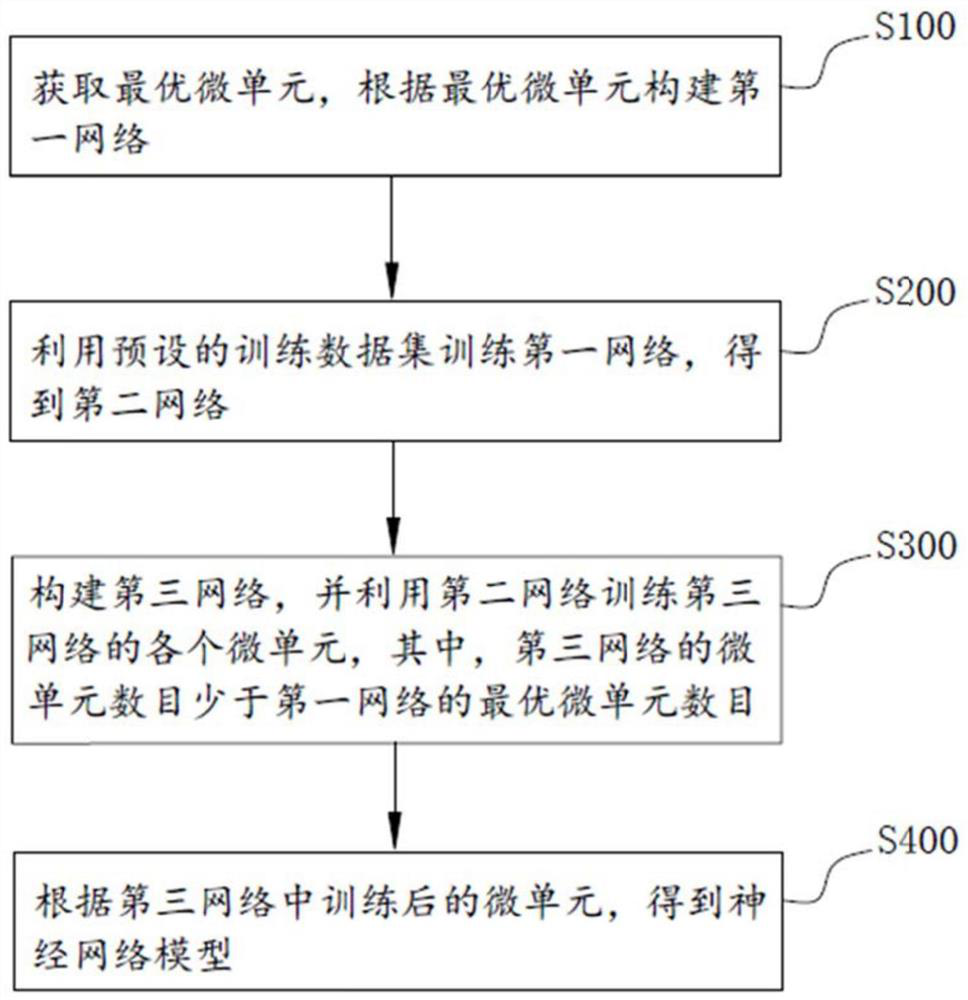

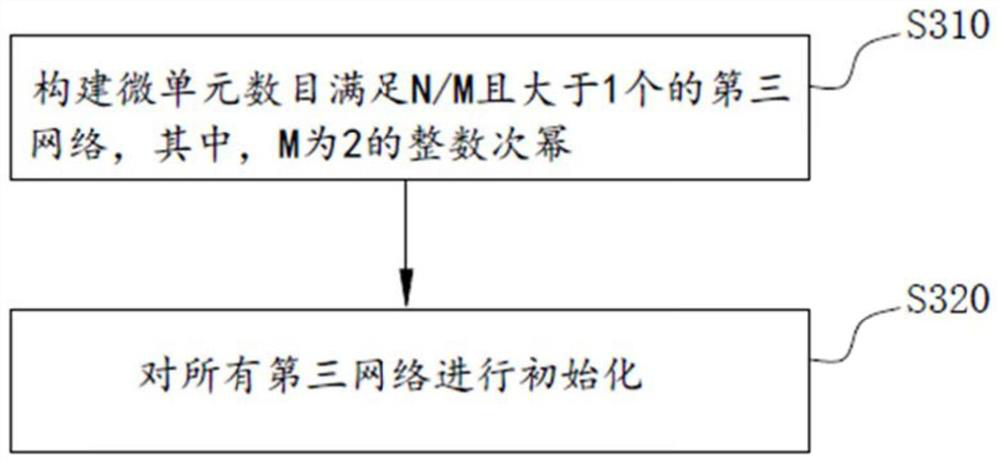

[0107] As shown in Figure 6, the search space of the NAS algorithm is defined as the micro-unit space; each micro-unit has a single-input, single-output structure, and the topology of the macro-unit is sequential. The network model composed of multiple micro-units is used to solve an image classification problem, taking multi-channel image data as input and producing the actual classification of the image content as output. The neural network generation method includes the following steps:

[0108] Step S610: Initialize the search space of the micro-unit network structure, apply the micro-search NAS algorithm, and obtain the optimal micro-unit;

[0109] Step S620: Set the number of micro-units in the sequential topology and form a first network a-a-a-a-a-a-a-a of a predefined size;

[0110] Step S630: Train the first network using the image data of the training dataset and the classification information corresponding to each image to obtain the teacher network a ...
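Step S620 can be illustrated with a minimal sketch in which one micro-unit is repeated in a sequential topology to form the first network a-a-a-a-a-a-a-a. The names `make_micro_unit` and `build_sequential_network` are hypothetical, and the micro-unit body (a scaled ReLU) is only a stand-in assumption for whatever structure the NAS search in step S610 actually returns; the text does not specify it.

```python
from typing import Callable, List, Tuple

# A single-input, single-output micro-unit maps one feature vector to another.
MicroUnit = Callable[[List[float]], List[float]]


def make_micro_unit(scale: float = 0.5) -> MicroUnit:
    """Hypothetical stand-in for the searched micro-unit 'a' (assumption:
    a scaled ReLU; the real unit would come from the NAS search)."""
    def unit(x: List[float]) -> List[float]:
        return [max(0.0, scale * v) for v in x]
    return unit


def build_sequential_network(
    unit_factory: Callable[[], MicroUnit], num_units: int
) -> Tuple[MicroUnit, List[MicroUnit]]:
    """Chain num_units copies of the micro-unit: a-a-...-a."""
    units = [unit_factory() for _ in range(num_units)]

    def network(x: List[float]) -> List[float]:
        # Sequential topology: the output of each unit feeds the next one.
        for u in units:
            x = u(x)
        return x

    return network, units


# First network of a predefined size (8 micro-units, as in a-a-a-a-a-a-a-a).
first_network, first_units = build_sequential_network(make_micro_unit, 8)
```

Each copy here has its own identity in `first_units`, which loosely mirrors the idea that the copies become distinct units once trained; the training of step S630 itself is not shown.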

Embodiment 2

[0115] As shown in Figure 7, the search space of the NAS algorithm is defined as the micro-unit space, and each micro-unit has a two-input, single-output structure. The network model composed of multiple micro-units is used to solve an image classification problem, taking multi-channel image data as input and producing the actual classification of the image content as output. The neural network generation method includes the following steps:

[0116] Step S710: Initialize the search space of the micro-unit network structure, and apply the micro-search NAS algorithm to obtain the optimal micro-unit structure;

[0117] a-a-a-a

[0118] Step S720: Set the number of micro-units in the complex topology network and form a first network a-a-a-a of a predefined size;

[0119] Step S730: Train the model using the image data in the training dataset and the classification information corresponding to each image to obtain

[0120] a_1-a_3-a_5-a_7

[0121] teacher ...

Embodiment 3

[0140] As shown in Figure 8, after the full set of neural network models has been obtained, the deployment constraint for the terminal is that online inference latency must not exceed 500 ms. The neural network model testing process includes the following steps:

[0141] Step S810: Evaluate the prediction accuracy of all neural network models with different numbers of micro-units on the test dataset;

[0142] Step S820: Run each of the neural network models with different numbers of micro-units on the target terminal to perform the same inference task, and record the latency of each model when the terminal performs the task;

[0143] Step S830: Based on the prediction accuracy and the latency, select the model with the highest inference accuracy that satisfies the preset inference latency limit, and deploy it on the terminal.
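The selection in step S830 can be sketched as a constrained maximum: filter the candidates by the latency limit, then take the most accurate one. This is a minimal sketch, not the patent's implementation; the `Candidate` type, the `select_model` function, and all numbers in the example list are hypothetical and chosen only for illustration.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class Candidate:
    num_units: int      # number of micro-units in this model
    accuracy: float     # prediction accuracy on the test dataset (S810)
    latency_ms: float   # latency measured on the target terminal (S820)


def select_model(
    candidates: List[Candidate], max_latency_ms: float = 500.0
) -> Optional[Candidate]:
    """Return the most accurate candidate within the latency limit (S830),
    or None if no candidate satisfies the limit."""
    feasible = [c for c in candidates if c.latency_ms <= max_latency_ms]
    if not feasible:
        return None
    return max(feasible, key=lambda c: c.accuracy)


# Illustrative, made-up measurements; not results from the patent.
example_candidates = [
    Candidate(num_units=4, accuracy=0.90, latency_ms=320.0),
    Candidate(num_units=5, accuracy=0.92, latency_ms=410.0),
    Candidate(num_units=6, accuracy=0.94, latency_ms=530.0),
]
chosen = select_model(example_candidates)
```

With these made-up figures the 6-unit model is the most accurate but exceeds the 500 ms limit, so the 5-unit model is chosen. Returning `None` when nothing fits leaves the fallback decision to the caller.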

[0144] In this embodiment, the terminal runs a classification network composed of more than 5 micro-units, and t...
