Multi-view depth acquisition method

A multi-view depth acquisition method, applied in the field of computer vision, that speeds up the generation of depth maps and achieves improved recognition ability, fast speed, and good robustness.

Embodiment Construction

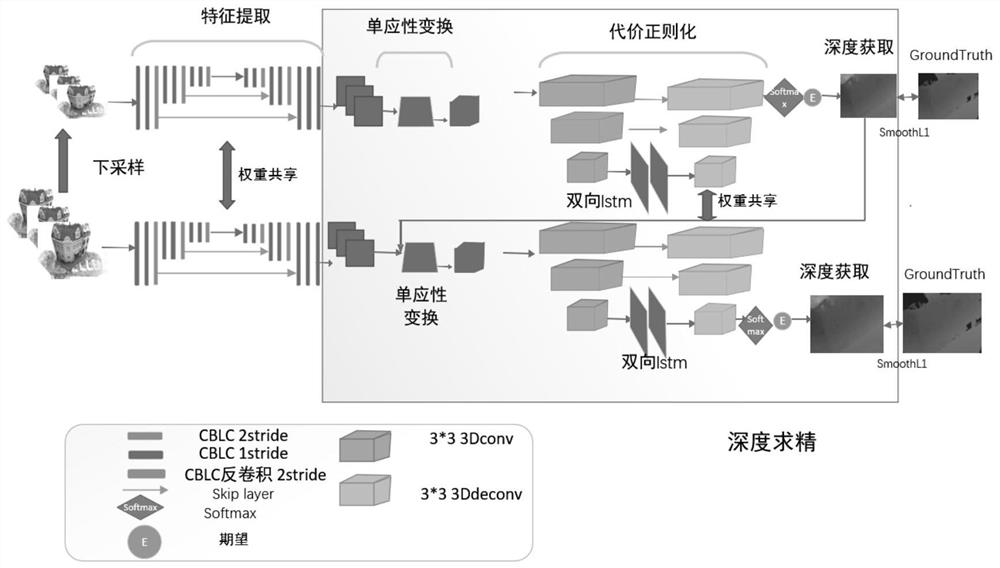

[0033] The present invention is further described below with reference to the accompanying drawing:

[0034] As shown in Figure 1, a multi-view depth acquisition method specifically includes the following steps:

[0035] Image input: Multiple images are input, all acquired by the same camera at multiple positions. These images are divided into one reference image and multiple target images, and each is an RGB three-channel image of 128×160 pixels. The position from which the reference image is acquired is called the reference view, and a position from which a target image is acquired is called a target view. In this method, an image sequence at another scale can be obtained by downsampling the image sequence; each downsampling halves both the length and the width. If downsampling is performed n times, the sequence numbers of the final image sequence are arranged in reverse order as L=n, n−1, . . ....
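
The repeated halving described in the image input step can be illustrated with a minimal sketch. This is not taken from the patent text: the names `downsample_half` and `build_pyramid` are hypothetical, 2×2 block averaging is an assumed downsampling filter (the source does not name one), and 128×160 is read here as height × width. The list index simply counts how many times an image has been downsampled; how that index maps onto the sequence number L is not settled by the text above.

```python
import numpy as np


def downsample_half(image: np.ndarray) -> np.ndarray:
    """Halve height and width by averaging each 2x2 block.

    Assumption: block averaging stands in for whatever filter the
    method actually uses; the source only states the 1/2 ratio.
    """
    h, w, c = image.shape
    if h % 2 or w % 2:
        raise ValueError("height and width must both be even")
    # Split each spatial axis into (blocks, 2) and average inside the blocks.
    blocks = image.reshape(h // 2, 2, w // 2, 2, c)
    return blocks.mean(axis=(1, 3))


def build_pyramid(image: np.ndarray, n: int) -> list:
    """Return [original, 1x downsampled, ..., n-times downsampled]."""
    levels = [image.astype(np.float32)]
    for _ in range(n):
        levels.append(downsample_half(levels[-1]))
    return levels


# Hypothetical reference image: 128 rows, 160 columns, 3 RGB channels.
reference = np.zeros((128, 160, 3), dtype=np.uint8)
pyramid = build_pyramid(reference, n=2)
shapes = [level.shape for level in pyramid]

# Walk the scales from coarsest to finest (reverse order of creation).
for times_downsampled in range(len(pyramid) - 1, -1, -1):
    print(times_downsampled, pyramid[times_downsampled].shape)
```

The same `build_pyramid` call would be applied to the reference image and to every target image so that all views share the same set of scales.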
