Multi-modal sarcasm object detection method based on multi-scale cross-modal neural network

A technology in the fields of object detection and neural networks, applied to biological neural network models, neural learning methods, neural architectures, etc. It addresses problems such as inadequacy and incompleteness and achieves high performance.


Examples

Embodiment 1

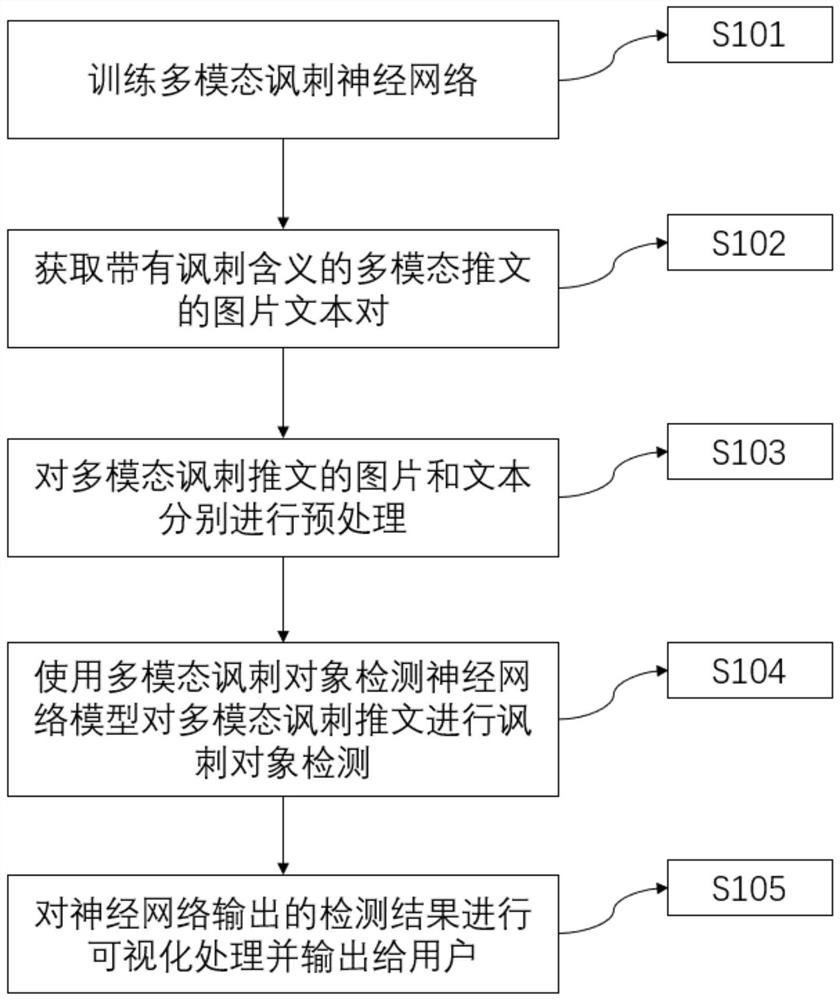

[0051] As shown in Figure 1, Embodiment 1 of this application provides a multi-modal sarcasm object detection method for social-media tweets, based on a multi-scale cross-modal encoding neural network, for real-time detection of multi-modal sarcasm objects in sarcastic tweets:

[0052] S101. Train a multi-modal sarcasm object detection neural network;

[0053] A freshly initialized neural network cannot be used directly, so the constructed network must first be trained on an existing dataset. After training on the training set, the trained network weights are evaluated on the test set to obtain evaluation results. This process is repeated, and the relevant hyperparameters are adjusted continuously according to the test-set evaluation results, measured by Exact Match (EM, the exact-match rate) and F1 score for the textual sarcasm object detection task, and by AP, AP50 and AP75 for the visual sarcasm object detection task. Finally, a result w...
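The evaluation metrics named above can be sketched as follows. This is a minimal Python illustration, not the application's evaluation code: it assumes textual sarcasm objects are compared as whitespace-tokenized strings (SQuAD-style EM and token-level F1) and visual sarcasm objects are axis-aligned boxes `(x1, y1, x2, y2)`. AP is computed with greedy matching and all-point (Pascal VOC-style) interpolation, which differs slightly from the 101-point interpolation of the COCO toolkit; "AP" is taken as the mean over IoU thresholds 0.50 to 0.95 in steps of 0.05, following the COCO convention. All function names are hypothetical.

```python
from collections import Counter


# --- Text sarcasm objects (assumption: compared as whitespace-tokenised strings) ---

def exact_match(prediction: str, gold: str) -> float:
    """1.0 if the predicted span equals the gold span after lower-casing, else 0.0."""
    return float(prediction.strip().lower() == gold.strip().lower())


def token_f1(prediction: str, gold: str) -> float:
    """SQuAD-style token-overlap F1 between a predicted span and a gold span."""
    pred_tokens = prediction.lower().split()
    gold_tokens = gold.lower().split()
    if not pred_tokens or not gold_tokens:
        # Two empty spans match; a single empty span scores zero.
        return float(pred_tokens == gold_tokens)
    overlap = sum((Counter(pred_tokens) & Counter(gold_tokens)).values())
    if overlap == 0:
        return 0.0
    precision = overlap / len(pred_tokens)
    recall = overlap / len(gold_tokens)
    return 2 * precision * recall / (precision + recall)


# --- Visual sarcasm objects (assumption: boxes are (x1, y1, x2, y2)) ---

def box_iou(a, b) -> float:
    """Intersection over union of two axis-aligned boxes."""
    inter_w = max(0.0, min(a[2], b[2]) - max(a[0], b[0]))
    inter_h = max(0.0, min(a[3], b[3]) - max(a[1], b[1]))
    inter = inter_w * inter_h
    union = (a[2] - a[0]) * (a[3] - a[1]) + (b[2] - b[0]) * (b[3] - b[1]) - inter
    return inter / union if union > 0 else 0.0


def average_precision(predictions, gold, iou_threshold=0.5) -> float:
    """AP at one IoU threshold with greedy matching and all-point interpolation.

    predictions: list of (image_id, score, box); gold: dict image_id -> list of boxes.
    """
    num_gold = sum(len(boxes) for boxes in gold.values())
    if num_gold == 0:
        return 0.0
    used = {img: [False] * len(boxes) for img, boxes in gold.items()}
    hits = []  # 1 = true positive, 0 = false positive, in descending score order
    for img, _, box in sorted(predictions, key=lambda p: p[1], reverse=True):
        best_iou, best_j = 0.0, -1
        for j, g in enumerate(gold.get(img, [])):
            iou = box_iou(box, g)
            if iou > best_iou:
                best_iou, best_j = iou, j
        # A gold box may be matched only once; duplicates count as false positives.
        if best_j >= 0 and best_iou >= iou_threshold and not used[img][best_j]:
            used[img][best_j] = True
            hits.append(1)
        else:
            hits.append(0)
    precisions, recalls, cum_tp = [], [], 0
    for k, hit in enumerate(hits, start=1):
        cum_tp += hit
        precisions.append(cum_tp / k)
        recalls.append(cum_tp / num_gold)
    # Make precision non-increasing from right to left (interpolated precision).
    for i in range(len(precisions) - 2, -1, -1):
        precisions[i] = max(precisions[i], precisions[i + 1])
    # Sum precision over each recall increment (area under the interpolated curve).
    ap, prev_recall = 0.0, 0.0
    for p, r in zip(precisions, recalls):
        ap += (r - prev_recall) * p
        prev_recall = r
    return ap


def detection_summary(predictions, gold) -> dict:
    """AP (mean over IoU 0.50:0.05:0.95), AP50 and AP75, following the COCO naming."""
    thresholds = [0.50 + 0.05 * i for i in range(10)]
    aps = [average_precision(predictions, gold, t) for t in thresholds]
    return {
        "AP": sum(aps) / len(aps),
        "AP50": average_precision(predictions, gold, 0.50),
        "AP75": average_precision(predictions, gold, 0.75),
    }
```

Ranking predictions by confidence before matching is what makes AP sensitive to ordering: a confident false positive lowers precision at every later recall level.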

Embodiment 2

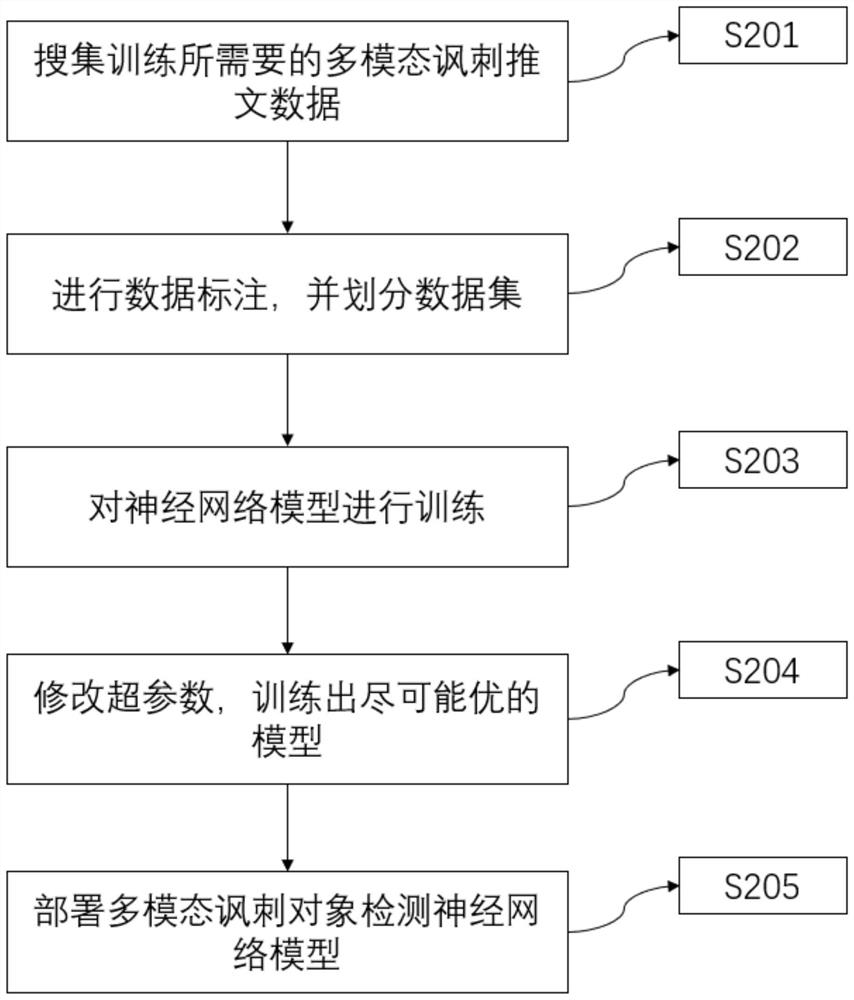

[0063] On the basis of Embodiment 1, Embodiment 2 of this application provides a specific implementation of step S101 in Embodiment 1, as shown in Figure 2:

[0064] S201. Collect the multi-modal sarcastic tweet data required for training;

[0065] As described in the summary of the invention above, positive samples (i.e., samples with sarcastic meaning) are selected as the base data from the multi-modal sarcasm detection dataset used in the paper "Multi-Modal Sarcasm Detection in Twitter with Hierarchical Fusion Model".
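Selecting positive samples amounts to a filter on the sarcasm label. The sketch below assumes a hypothetical record type in which `label == 1` marks a sarcastic tweet; it does not reproduce the actual file format of the cited dataset, and the names `TweetSample` and `select_positive_samples` are illustrative.

```python
from dataclasses import dataclass
from typing import Iterable, List


@dataclass
class TweetSample:
    tweet_id: str
    text: str
    image_path: str
    label: int  # hypothetical convention: 1 = sarcastic, 0 = not sarcastic


def select_positive_samples(samples: Iterable[TweetSample]) -> List[TweetSample]:
    """Keep only sarcastic (positive) image-text pairs as the base data."""
    return [s for s in samples if s.label == 1]
```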

[0066] S202. Annotate the data and split the dataset;

[0067] Starting from the existing sarcasm dataset, multi-modal sarcasm objects are annotated, covering both visual sarcasm objects and textual sarcasm objects, which yields a labeled dataset. The labeled dataset is then split into a training set, a validation set and a test set in an appropriate ratio.
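A minimal sketch of an annotated sample and the three-way split, assuming textual sarcasm objects are stored as character-offset spans and visual sarcasm objects as pixel bounding boxes. The 8:1:1 ratio and the fixed random seed are illustrative assumptions, since the application only specifies "an appropriate ratio"; the class and function names are hypothetical.

```python
import random
from dataclasses import dataclass, field
from typing import List, Sequence, Tuple


@dataclass
class AnnotatedSample:
    tweet_id: str
    text: str
    image_path: str
    # Hypothetical format: textual sarcasm objects as (start, end) character offsets.
    text_spans: List[Tuple[int, int]] = field(default_factory=list)
    # Hypothetical format: visual sarcasm objects as (x1, y1, x2, y2) pixel boxes.
    boxes: List[Tuple[float, float, float, float]] = field(default_factory=list)


def split_dataset(samples: Sequence, ratios=(0.8, 0.1, 0.1), seed=42):
    """Shuffle once with a fixed seed, then cut into train / validation / test."""
    if abs(sum(ratios) - 1.0) > 1e-9:
        raise ValueError("ratios must sum to 1")
    items = list(samples)
    random.Random(seed).shuffle(items)  # local RNG keeps the split reproducible
    n_train = int(len(items) * ratios[0])
    n_val = int(len(items) * ratios[1])
    train = items[:n_train]
    val = items[n_train:n_train + n_val]
    test = items[n_train + n_val:]  # remainder goes to the test set
    return train, val, test
```

Fixing the seed means every training run sees the same partition, so hyperparameter comparisons are not confounded by a different split.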

[0068] S203. Train the neural network model;

[0069] Use...

Embodiment 3

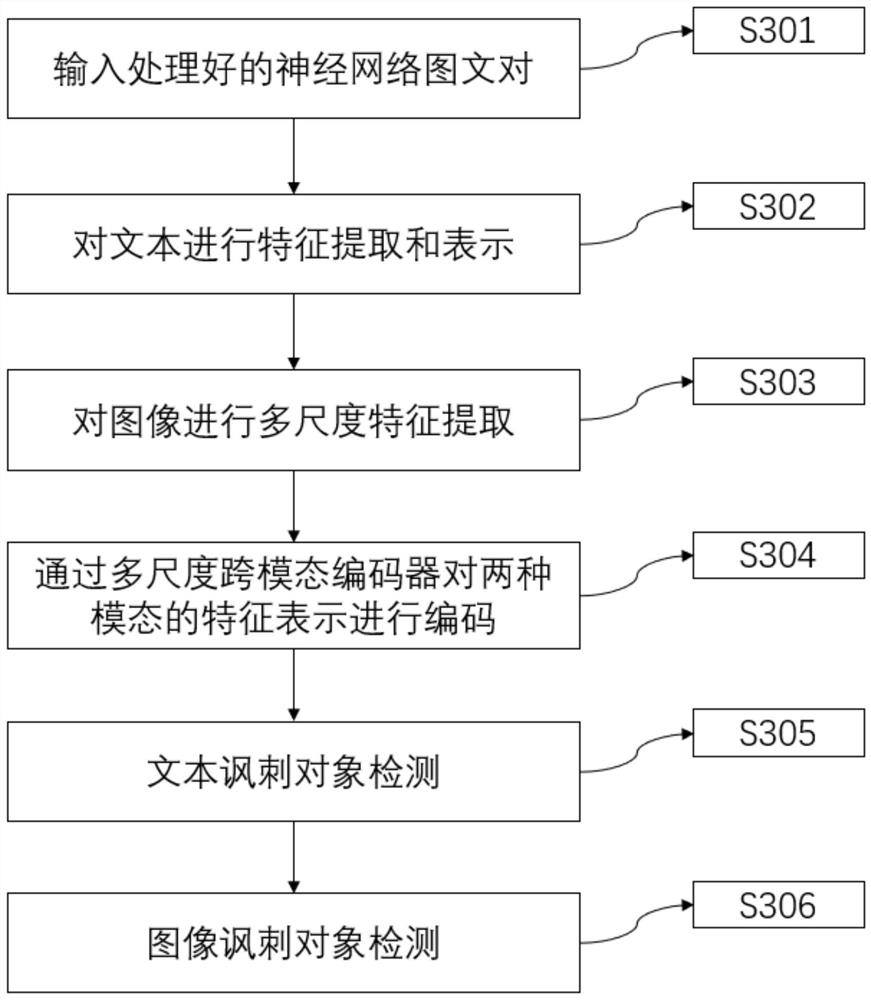

[0081] On the basis of Embodiments 1 and 2, Embodiment 3 of the present application provides a specific implementation of step S104 in Embodiment 1, as shown in Figures 3 and 4:

[0082] S301. Input the preprocessed image-text pair into the neural network;

[0083] The preprocessed images and texts of the multi-modal sarcasm object detection samples are input into the neural network, which processes the two modalities separately: the text data are one-hot encoded and the image data are normalized.
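The two preprocessing operations can be pictured with a short NumPy sketch under stated assumptions: the whitespace vocabulary and `[UNK]` fallback are hypothetical, and the per-channel mean and standard deviation are the widely used ImageNet statistics, which the application does not specify. Feeding integer token ids to an embedding layer, as pre-trained language models do, is equivalent to multiplying these one-hot vectors by the embedding matrix.

```python
import numpy as np


def build_vocab(texts):
    """Hypothetical whitespace vocabulary; id 0 is reserved for unknown tokens."""
    vocab = {"[UNK]": 0}
    for text in texts:
        for tok in text.lower().split():
            vocab.setdefault(tok, len(vocab))
    return vocab


def tokens_to_ids(text, vocab):
    """Map whitespace tokens to ids, falling back to [UNK] for unseen tokens."""
    return [vocab.get(tok, vocab["[UNK]"]) for tok in text.lower().split()]


def one_hot_encode(token_ids, vocab_size):
    """Turn integer token ids into a (seq_len, vocab_size) one-hot matrix."""
    ids = np.asarray(token_ids, dtype=np.int64)
    encoded = np.zeros((ids.size, vocab_size), dtype=np.float32)
    encoded[np.arange(ids.size), ids] = 1.0
    return encoded


# Assumption: ImageNet channel statistics, a common choice for pre-trained backbones.
IMAGENET_MEAN = np.array([0.485, 0.456, 0.406], dtype=np.float32)
IMAGENET_STD = np.array([0.229, 0.224, 0.225], dtype=np.float32)


def normalize_image(image_uint8):
    """Scale an (H, W, 3) uint8 RGB image to [0, 1], then standardise each channel."""
    image = image_uint8.astype(np.float32) / 255.0
    return (image - IMAGENET_MEAN) / IMAGENET_STD
```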

[0084] S302. Extract and represent text features;

[0085] The text of the multi-modal sarcastic tweet is input into a pre-trained language model (such as BERT, RoBERTa or BERTweet), which extracts and encodes the text features; the output of the model's last layer is taken as the final representation of the text.
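A minimal sketch of taking the last-layer output as the text representation, using the Hugging Face `transformers` library with PyTorch. To stay runnable offline it builds a small, randomly initialised `BertModel` from a `BertConfig` and feeds random token ids; in practice one would load a pre-trained checkpoint with `from_pretrained` (for example `bert-base-uncased`) and tokenise the tweet with the matching tokenizer. The configuration sizes and the helper name `encode_text` are assumptions.

```python
import torch
from transformers import BertConfig, BertModel

# Offline stand-in: a tiny, randomly initialised BERT. A real system would use
# pre-trained weights, e.g. BertModel.from_pretrained("bert-base-uncased").
config = BertConfig(
    vocab_size=1000,
    hidden_size=64,
    num_hidden_layers=2,
    num_attention_heads=4,
    intermediate_size=128,
)
model = BertModel(config)
model.eval()  # disable dropout so the features are deterministic


def encode_text(input_ids: torch.Tensor, attention_mask: torch.Tensor) -> torch.Tensor:
    """Return the last encoder layer's hidden states as the final text representation."""
    with torch.no_grad():
        outputs = model(input_ids=input_ids, attention_mask=attention_mask)
    return outputs.last_hidden_state  # shape: (batch, seq_len, hidden_size)


# Random ids stand in for a tokenised batch of two tweets, 12 tokens each.
input_ids = torch.randint(0, config.vocab_size, (2, 12))
attention_mask = torch.ones_like(input_ids)
text_features = encode_text(input_ids, attention_mask)
```

`last_hidden_state` is the same tensor as the final entry of `hidden_states` when the model is called with `output_hidden_states=True`, so either can serve as the last-layer output.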

[0086] S303. Perform multi-scale feature extraction on the image...
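The excerpt breaks off before describing how S303 extracts multi-scale image features, so the following is only a generic illustration of the idea, not the application's method. It assumes a backbone (not shown) has already produced a `(C, H, W)` feature map, builds coarser scales by non-overlapping average pooling with hypothetical strides 1, 2 and 4, and flattens every scale into region tokens of dimension `C`, one plausible input form for cross-modal attention. All function names are hypothetical.

```python
import numpy as np


def avg_pool2d(feature_map, stride):
    """Non-overlapping average pooling of a (C, H, W) map; H and W must divide by stride."""
    c, h, w = feature_map.shape
    if h % stride or w % stride:
        raise ValueError("H and W must be divisible by the stride")
    # Split each spatial axis into (blocks, stride) and average within each block.
    return feature_map.reshape(c, h // stride, stride, w // stride, stride).mean(axis=(2, 4))


def multi_scale_features(feature_map, strides=(1, 2, 4)):
    """Return one pooled copy of the feature map per scale, finest first."""
    return [avg_pool2d(feature_map, s) for s in strides]


def to_region_tokens(scales):
    """Flatten each (C, h, w) scale to (h*w, C) and stack all scales into one sequence."""
    return np.concatenate([s.reshape(s.shape[0], -1).T for s in scales], axis=0)
```

For a 4x4 map this yields 16 + 4 + 1 = 21 region tokens, so fine detail and global context sit side by side in one sequence.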
