Excitation data block processing method for hardware accelerator and hardware accelerator

A technology relating to hardware accelerators and excitation data, applied in physical realization, neural architectures, biological neural network models, etc. It addresses the problem that excitation data requires a large cache, which degrades system efficiency and increases power consumption, and it reduces the demand for storage resources while avoiding data-dependency effects.


Detailed Description of the Embodiments

[0023] The present invention is described in further detail below with reference to the embodiments and the accompanying drawings.

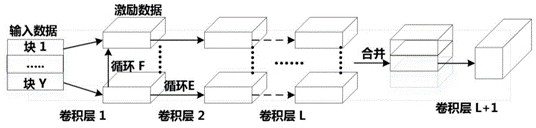

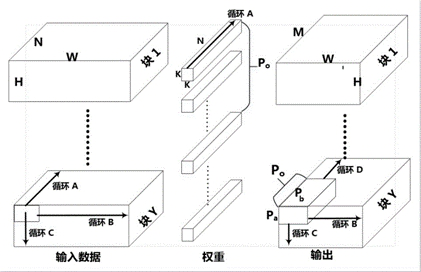

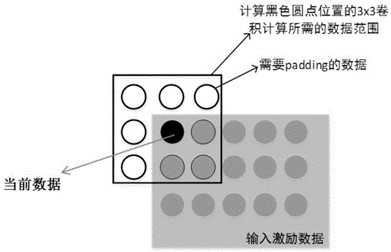

[0024] In the convolutional network block parallel processing method of the present invention, H denotes the size of the data in the height direction, W the size in the width direction, N the number of input channels, M the number of output channels, and K the size of the convolution kernel. Pa and Pb denote the per-cycle parallelism in the H and W directions respectively, and Po denotes the per-cycle parallelism over the output channels. Input and output data are collectively referred to as excitation data.
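To make the roles of these parameters concrete, the following is a minimal sketch, not the patent's method, of how excitation data could be partitioned into blocks of Pa × Pb positions × Po output channels. It assumes that N is the input-channel count and that one Pa × Pb × Po output block performs one multiply-accumulate per output element per cycle, iterating over all N input channels and K × K kernel positions with no stalls. The names `ConvShape`, `Parallelism`, `block_grid`, `output_block_of` and `estimated_cycles` are hypothetical and introduced only for illustration.

```python
import math
from dataclasses import dataclass


@dataclass(frozen=True)
class ConvShape:
    H: int  # height of the excitation data
    W: int  # width of the excitation data
    N: int  # number of input channels (assumption: N is the input-channel count)
    M: int  # number of output channels
    K: int  # convolution kernel size (K x K)


@dataclass(frozen=True)
class Parallelism:
    Pa: int  # per-cycle parallelism along H
    Pb: int  # per-cycle parallelism along W
    Po: int  # per-cycle parallelism along output channels


def block_grid(shape: ConvShape, par: Parallelism) -> tuple[int, int, int]:
    """Number of blocks along H, W and the output channels (ceiling division,
    so a partial block at an edge still counts as one block)."""
    return (
        math.ceil(shape.H / par.Pa),
        math.ceil(shape.W / par.Pb),
        math.ceil(shape.M / par.Po),
    )


def output_block_of(h: int, w: int, m: int, par: Parallelism):
    """Block coordinates and in-block offsets of output element (h, w, m)."""
    block = (h // par.Pa, w // par.Pb, m // par.Po)
    offset = (h % par.Pa, w % par.Pb, m % par.Po)
    return block, offset


def estimated_cycles(shape: ConvShape, par: Parallelism) -> int:
    """Hypothetical cycle count: each block is computed by sweeping all N input
    channels and K*K kernel positions, one step per cycle, with no stalls."""
    bh, bw, bm = block_grid(shape, par)
    return bh * bw * bm * shape.N * shape.K * shape.K


if __name__ == "__main__":
    shape = ConvShape(H=56, W=56, N=64, M=128, K=3)
    par = Parallelism(Pa=7, Pb=8, Po=16)
    print(block_grid(shape, par))             # (8, 7, 8)
    print(estimated_cycles(shape, par))       # 258048
    print(output_block_of(10, 20, 33, par))   # ((1, 2, 2), (3, 4, 1))
```

For the example layer, the 56 × 56 × 128 output splits into an 8 × 7 × 8 grid of blocks. Under the assumed cycle model this gives 258048 cycles, which equals the total MAC count divided by Pa × Pb × Po = 896 because every dimension divides evenly. When a dimension does not divide evenly, the ceiling division shows the cost of partially filled edge blocks.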

[0025] A method for block processing the excitation data of a hardware accelerator, in which the hardware accelerator performs block-parallel processing on the excitation data of a convolutional neural network and stores the block-parallel-processed excitation data in a ...
