Deep learning-based train broadcast speech enhancement method and system

A train broadcast speech enhancement technology, applied in voice analysis, instruments, and related fields. It addresses problems such as the inability to combine and expand the sound field, and achieves low implementation difficulty, a fuller sound, and low cost.

Embodiment 1

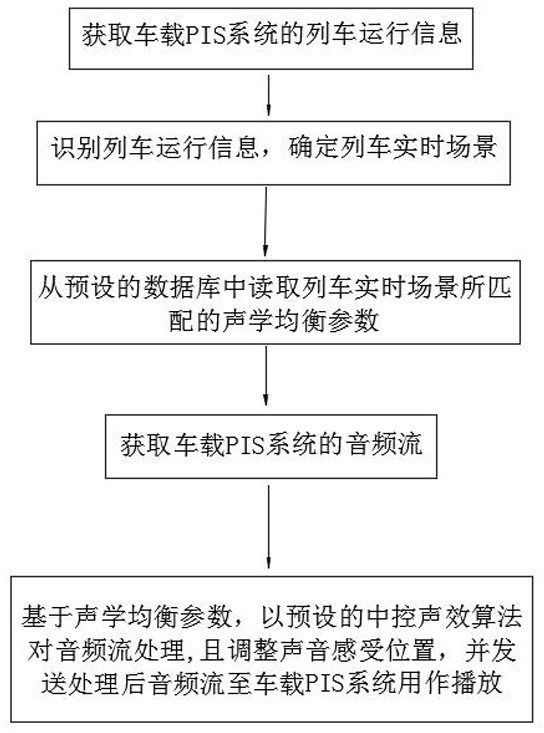

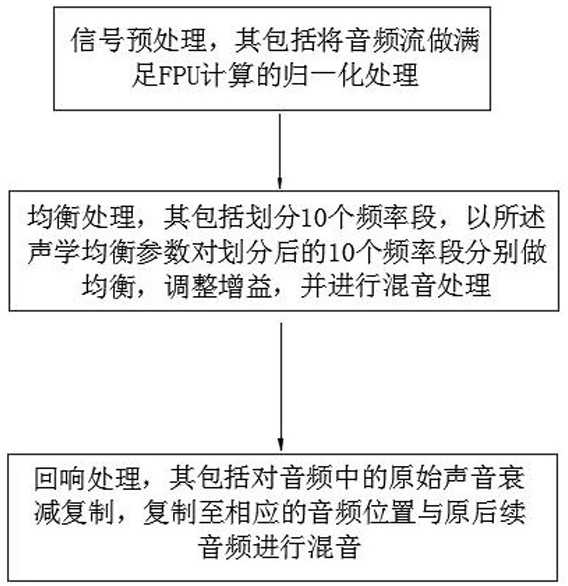

[0036] Referring to Figure 1, the deep learning-based train broadcast speech enhancement method includes:

[0037] S101. Obtain train operation information from the vehicle-mounted PIS system.

[0038] The vehicle-mounted PIS system is the passenger information system. In this application, the train operation information includes speed information (vehicle speed), video information, and designated identification information, all of which are used to determine the real-time scene of the train.

[0039] When the vehicle speed goes from 0 to 30 km/h, the scene is identified as the starting scene; when the vehicle speed goes from 30 to 0 km/h, it is identified as the parking scene; all other speeds are identified as the running scene. Note that the value 30 above is the low-speed threshold X, and the specific value can be selected according to the vehicle model and operating environment.
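The scene rule in [0039] can be illustrated with a minimal Python sketch. It is not the application's implementation: the names `Scene` and `classify_scene` are hypothetical, and the direction of travel (accelerating or decelerating) is assumed to be inferred from two consecutive speed samples. Treating a steady speed at or below the threshold as the running scene is also an assumption, since the text only says "other speeds".

```python
from enum import Enum


class Scene(Enum):
    STARTING = "starting"
    PARKING = "parking"
    RUNNING = "running"


def classify_scene(prev_speed_kmh: float,
                   speed_kmh: float,
                   low_speed_threshold: float = 30.0) -> Scene:
    """Classify the real-time scene from two consecutive speed samples.

    low_speed_threshold plays the role of the threshold X in [0039];
    30 km/h is only the example value given there.
    """
    # Above the low-speed threshold the train is simply running.
    if speed_kmh > low_speed_threshold:
        return Scene.RUNNING
    # Inside the low-speed band, the trend separates starting from parking.
    if speed_kmh > prev_speed_kmh:
        return Scene.STARTING
    if speed_kmh < prev_speed_kmh:
        return Scene.PARKING
    # Assumption: an unchanged low speed falls under "other speeds".
    return Scene.RUNNING


if __name__ == "__main__":
    print(classify_scene(10.0, 20.0))   # accelerating inside the band
    print(classify_scene(20.0, 10.0))   # decelerating inside the band
    print(classify_scene(60.0, 80.0))   # above the threshold
```

Passing a different `low_speed_threshold` corresponds to selecting X for a particular vehicle model or operating environment.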

[0040] The above-mentioned video information is the video stream collected by the camer...

Embodiment 2

[0059] The difference from Embodiment 1 is that this method further includes:

[0060] Record the process information, bind time parameters to it, and save it as audio transformation files;

[0061] Train a preset neural network model using the audio transformation files;

[0062] The trained neural network model is used to identify subsequent real-time audio streams. If a record exists and the train's current environment matches the recorded information, the processed audio stream in that record is retrieved and sent to the on-board PIS system for playback.
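The record-and-replay flow of [0060] to [0062] can be sketched in Python as follows. This is a simplified illustration under stated assumptions, not the method of the application: the neural network identification step is replaced by a plain dictionary lookup on `announcement_id`, a hypothetical label standing in for whatever the trained model would output, and the names `ProcessRecord` and `AudioArchive` are likewise hypothetical. The record fields follow the process information listed in [0063].

```python
from dataclasses import dataclass
from datetime import datetime
from typing import Dict, Optional, Tuple


@dataclass(frozen=True)
class ProcessRecord:
    timestamp: datetime            # bound time parameter
    scene: str                     # real-time scene of the train
    original_audio: bytes          # original audio stream
    processed_audio: bytes         # processed audio stream
    eq_params: Tuple[float, ...]   # acoustic equalization parameters


class AudioArchive:
    """In-memory stand-in for the saved audio transformation files."""

    def __init__(self) -> None:
        self._records: Dict[Tuple[str, str], ProcessRecord] = {}

    def save(self, announcement_id: str, record: ProcessRecord) -> None:
        # announcement_id is a hypothetical key; the source identifies
        # audio with a trained neural network instead.
        self._records[(announcement_id, record.scene)] = record

    def lookup(self, announcement_id: str,
               current_scene: str) -> Optional[ProcessRecord]:
        # A record is reused only when the current scene matches it.
        return self._records.get((announcement_id, current_scene))


if __name__ == "__main__":
    archive = AudioArchive()
    archive.save("next-stop", ProcessRecord(
        timestamp=datetime(2025, 1, 1, 8, 0),
        scene="running",
        original_audio=b"raw",
        processed_audio=b"enhanced",
        eq_params=(1.0, 0.8, 1.2),
    ))
    hit = archive.lookup("next-stop", "running")
    # On a hit, the stored processed audio would be sent for playback.
    print(hit.processed_audio if hit else "no record; process normally")
```

When `lookup` returns `None`, the audio would go through the normal enhancement path of Embodiment 1 rather than being replayed from a record.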

[0063] It can be understood that the above process information is the key information during the execution of the method described in the embodiment, for example: the original audio stream, the corresponding real-time scene of the train, the processed audio stream, and the acoustic equalization parameters. By binding time parameters, a one-to-one correspondence can be established to know when, where, what scene, what kind of original...
