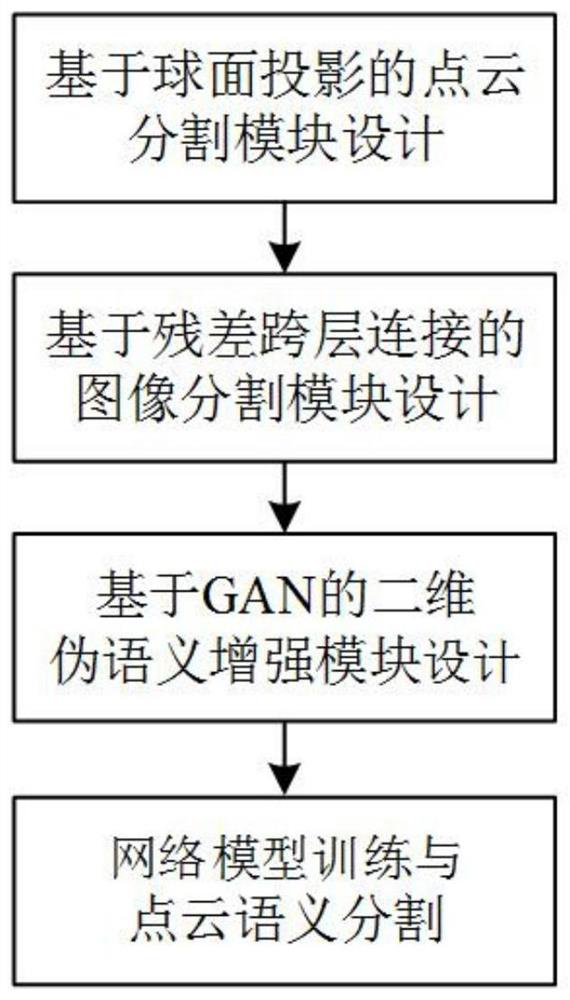

Non-structured environment point cloud semantic segmentation method based on cross-modal semantic enhancement

A point cloud semantic segmentation technique for unstructured environments, applied in the field of intelligent vehicle environment perception. It addresses the limited adaptability of three-dimensional structural information, the reliance on depth and geometric information alone, and the difficulty of distinguishing low-resolution targets or targets with similar geometric features. Its stated effects are reduced indexing and computation time, assured accuracy and real-time performance, and improved adaptability.


AI Technical Summary

Problems solved by technology

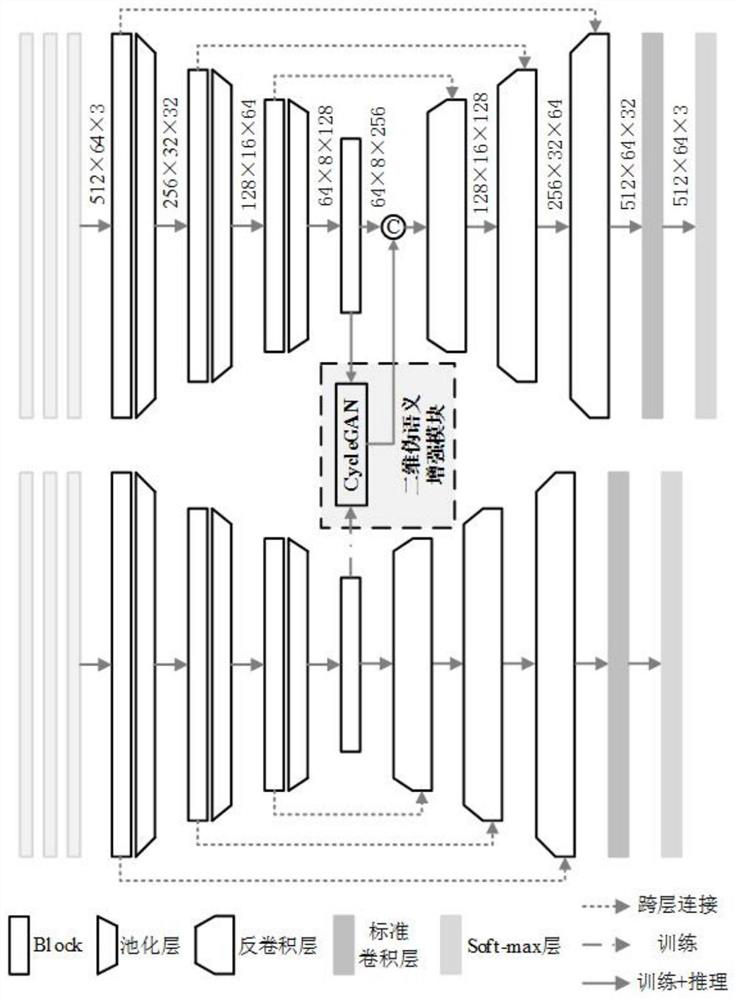

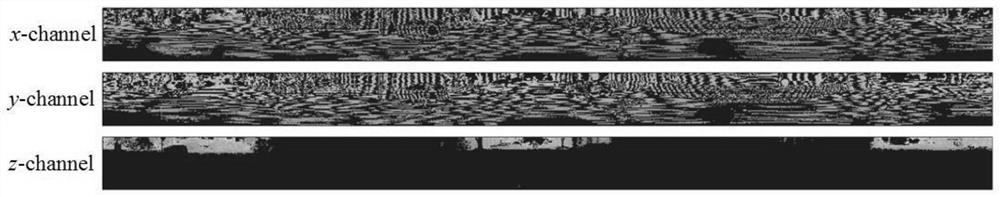

Method used

Image

Examples

Embodiment Construction

[0078] The present invention will be described in further detail below in conjunction with the accompanying drawings and specific embodiments:

[0079] 3D scene understanding is a key technology for unmanned ground systems and a prerequisite for safe, reliable passage through both structured and unstructured environments. The more mature techniques today are designed mainly for structured urban environments; unstructured environments (such as emergency rescue scenarios) have received little study, and the techniques for them are not yet mature. An unstructured environment lacks structural features such as lanes, road surfaces, and guardrails, and its drivable area has blurred boundaries and diverse textures. Existing algorithms designed for structured environments are therefore difficult to apply directly to unstructured environments.

[0080] At present, deep learning-based semantic segmentation tasks mostly use cameras and lidars as their main sensor dat...

