Food volume estimation method based on single depth map deep learning view synthesis

A technique combining view synthesis and deep learning, applied in computing, image analysis, and image enhancement. It addresses problems that degrade the accuracy of feature-point matching and 3D reconstruction, complex operating procedures, and 3D reconstruction failure, with the effect of improving accuracy and helping to address health problems.



Examples

Embodiment 1

[0052] Embodiment 1: A method for estimating food volume based on deep learning view synthesis from a single depth map, as shown in Figures 1 to 5, comprising the following steps:

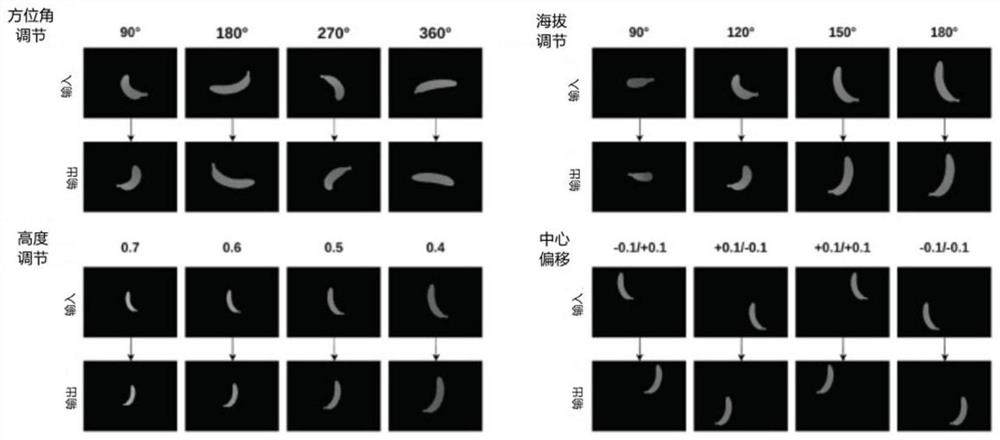

[0053] 1) Place each object item at the origin and capture depth images of it from different viewpoints using four modes of camera motion: azimuth rotation, elevation rotation, height adjustment, and center movement;

[0054] 2) Segment and classify the captured images; using multiple randomly chosen extrinsic camera parameters, render the initial depth images of the object items and the corresponding depth images captured from the opposite viewpoints, and use the rendered depth images as the training dataset;

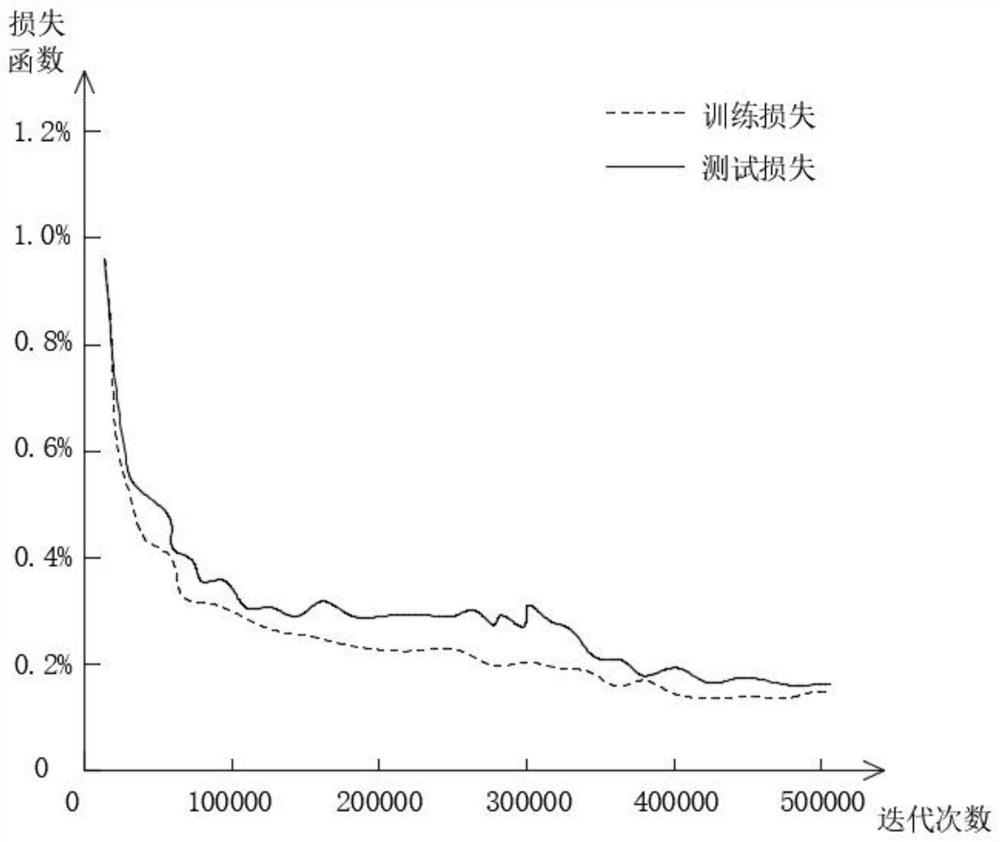

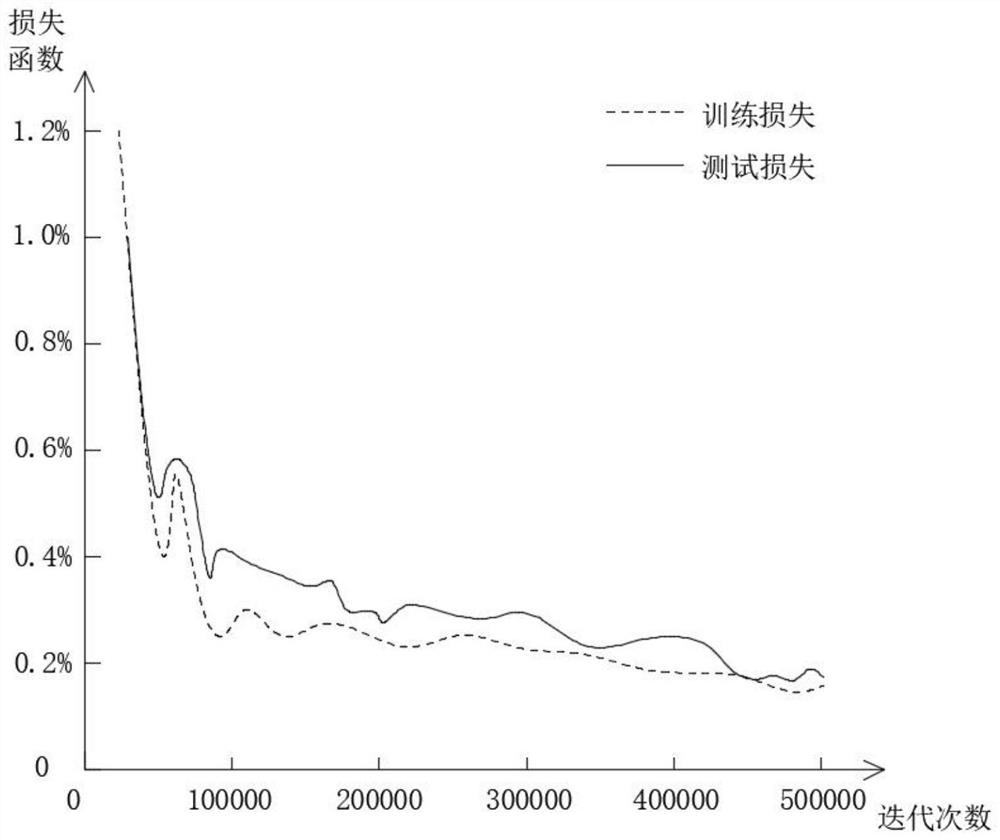

[0055] 3) A view synthesis method based on a deep neural network synthesizes unseen viewpoints and, given an input image, predicts results for previously unseen object items;

[0056] 4) Register the camera coordinates of the initial depth image and the opposite-view depth image into the same world coordinates to obtain a complete 3D...
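The registration idea in step 4 can be illustrated with a minimal sketch. It is not the patent's implementation: it assumes a pinhole camera model, a camera placed on a sphere around the origin and looking at it, and an opposite camera at the antipodal position. The function names, the intrinsics (`fx`, `fy`, `cx`, `cy`), and all numeric values are hypothetical.

```python
import math

def cross(a, b):
    return (a[1] * b[2] - a[2] * b[1],
            a[2] * b[0] - a[0] * b[2],
            a[0] * b[1] - a[1] * b[0])

def normalize(v):
    n = math.sqrt(sum(c * c for c in v))
    return tuple(c / n for c in v)

def camera_pose(azimuth, elevation, radius):
    """Pose of a camera on a sphere around the origin, looking at the origin.

    Returns ((right, down, forward), center): the camera axes and the camera
    center, all expressed in world coordinates (z is up in the world frame).
    """
    center = (radius * math.cos(elevation) * math.cos(azimuth),
              radius * math.cos(elevation) * math.sin(azimuth),
              radius * math.sin(elevation))
    forward = normalize(tuple(-c for c in center))
    # Assumption: the camera is never exactly above or below the object,
    # otherwise forward is parallel to the up vector and right is undefined.
    right = normalize(cross(forward, (0.0, 0.0, 1.0)))
    down = cross(forward, right)
    return (right, down, forward), center

def opposite_pose(azimuth, elevation, radius):
    """Pose of the camera at the antipodal point, i.e. the opposite view."""
    return camera_pose(azimuth + math.pi, -elevation, radius)

def backproject(u, v, depth, fx, fy, cx, cy):
    """Pinhole back-projection of pixel (u, v) with z-depth to camera coordinates."""
    return ((u - cx) * depth / fx, (v - cy) * depth / fy, depth)

def camera_to_world(p_cam, pose):
    """Map a camera-frame point to the world frame: p_w = R p_c + C."""
    (right, down, forward), center = pose
    return tuple(center[i]
                 + p_cam[0] * right[i]
                 + p_cam[1] * down[i]
                 + p_cam[2] * forward[i]
                 for i in range(3))

def register(pixels, pose, fx, fy, cx, cy):
    """Turn (u, v, depth) samples from one depth image into world-frame points."""
    return [camera_to_world(backproject(u, v, d, fx, fy, cx, cy), pose)
            for (u, v, d) in pixels]

if __name__ == "__main__":
    fx = fy = 600.0          # hypothetical focal lengths in pixels
    cx, cy = 320.0, 240.0    # hypothetical principal point
    front = camera_pose(0.3, 0.5, 0.5)
    back = opposite_pose(0.3, 0.5, 0.5)
    # One sample per view: the captured front surface and the synthesized back surface.
    cloud = (register([(320.0, 240.0, 0.45)], front, fx, fy, cx, cy)
             + register([(320.0, 240.0, 0.45)], back, fx, fy, cx, cy))
    print(cloud)
```

Because both point sets end up in one world frame, the front surface from the captured depth image and the back surface from the synthesized opposite view can simply be concatenated into a single point cloud.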

Embodiment 2

[0099] Embodiment 2: Application of the method for estimating food volume based on deep learning view synthesis from a single depth map to the estimation of the nutritional content of dietary intake.

[0100] In this embodiment, as shown in Figure 6, after the volume of each meal is obtained by the volume estimation method, the content of each ingredient in the meal can be looked up in a database, thereby helping users understand their eating behavior.

[0101] Working principle: By integrating depth sensing technology with deep learning view synthesis, a single depth image captured at any convenient angle is sufficient; by adopting the network structure and the point cloud completion algorithm, the 3D structure of various shapes can be learned implicitly, and the occluded part of the food can be restored, which improves the accuracy of volume estimation; applying the food volume estimation method to the nutrient content estimation of dietary intake ...
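As a rough illustration of the database lookup in [0100], the sketch below converts an estimated volume to mass and scales per-100 g nutrient values. The conversion through a density value is an assumption, since the text only says the content is obtained from a database. The names `FoodEntry`, `FOOD_DB`, `nutrient_content`, and `meal_summary` are hypothetical, and the database entries are placeholders, not real nutrition data.

```python
from dataclasses import dataclass

@dataclass(frozen=True)
class FoodEntry:
    density_g_per_cm3: float     # assumed to be stored alongside the nutrients
    nutrients_per_100g: dict     # nutrient name -> amount per 100 g

# Placeholder values for illustration only, not real nutrition data.
FOOD_DB = {
    "placeholder_food_a": FoodEntry(1.0, {"energy_kcal": 100.0, "protein_g": 5.0}),
    "placeholder_food_b": FoodEntry(0.5, {"energy_kcal": 200.0, "protein_g": 2.0}),
}

def nutrient_content(food, volume_cm3, db=FOOD_DB):
    """Scale per-100 g nutrient values by the mass implied by the estimated volume."""
    entry = db[food]
    mass_g = volume_cm3 * entry.density_g_per_cm3
    return {name: per_100g * mass_g / 100.0
            for name, per_100g in entry.nutrients_per_100g.items()}

def meal_summary(items, db=FOOD_DB):
    """Sum nutrient amounts over (food, volume_cm3) pairs that make up one meal."""
    totals = {}
    for food, volume_cm3 in items:
        for name, amount in nutrient_content(food, volume_cm3, db).items():
            totals[name] = totals.get(name, 0.0) + amount
    return totals

if __name__ == "__main__":
    print(meal_summary([("placeholder_food_a", 250.0), ("placeholder_food_b", 100.0)]))
```

In practice the food label would come from the classification step and the volume from the volume estimation method, so the lookup itself stays a simple table operation.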
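To make the link between a completed point cloud and a volume concrete, here is a minimal sketch of one simple estimator. It is a stand-in, not the algorithm described in the patent: it bins points into vertical columns on a grid and sums each column's top-to-bottom extent, which is only reasonable when the completed cloud contains both the upper and lower surfaces and the object has no cavities along the vertical axis. The function name `column_volume` and the `cell` parameter are hypothetical.

```python
import math

def column_volume(points, cell):
    """Approximate volume of a closed surface point cloud by column integration.

    points: iterable of (x, y, z) in consistent length units
    cell:   side length of each square grid column in the x-y plane
    """
    columns = {}
    for x, y, z in points:
        key = (math.floor(x / cell), math.floor(y / cell))
        lo, hi = columns.get(key, (z, z))
        columns[key] = (min(lo, z), max(hi, z))
    # Each column contributes (height extent) x (cell area).
    return sum((hi - lo) * cell * cell for lo, hi in columns.values())

if __name__ == "__main__":
    # Top and bottom faces of a 4 x 4 x 2 box, sampled at the column centers.
    centers = [0.5, 1.5, 2.5, 3.5]
    box = [(x, y, z) for x in centers for y in centers for z in (0.0, 2.0)]
    print(column_volume(box, 1.0))
```

The estimate depends on the grid resolution: columns that are too coarse overestimate the footprint, while columns that are too fine may contain only one surface sample and contribute nothing.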
