Forest unstructured scene segmentation method based on multispectral image fusion

A multispectral image fusion technique for unstructured scenes, applied in fields such as graphic image conversion, image data processing, and neural learning methods. It addresses the problems that RGB images are difficult to apply to complex unstructured scenes and that networks adapt poorly and produce segmentation errors. It does so by optimizing the feature extraction process, with the effect of improving segmentation accuracy and robustness.

Embodiment Construction

[0063] The technical solutions provided by the present invention will be described in detail below with reference to specific embodiments. It should be understood that the following specific embodiments are only used to illustrate the present invention and not to limit the scope of the present invention.

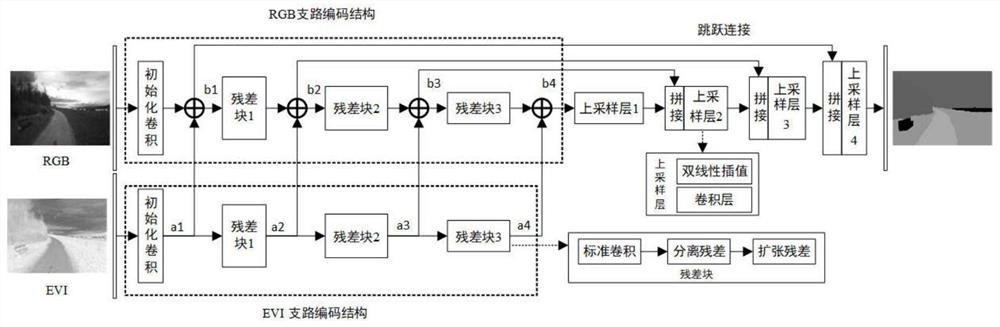

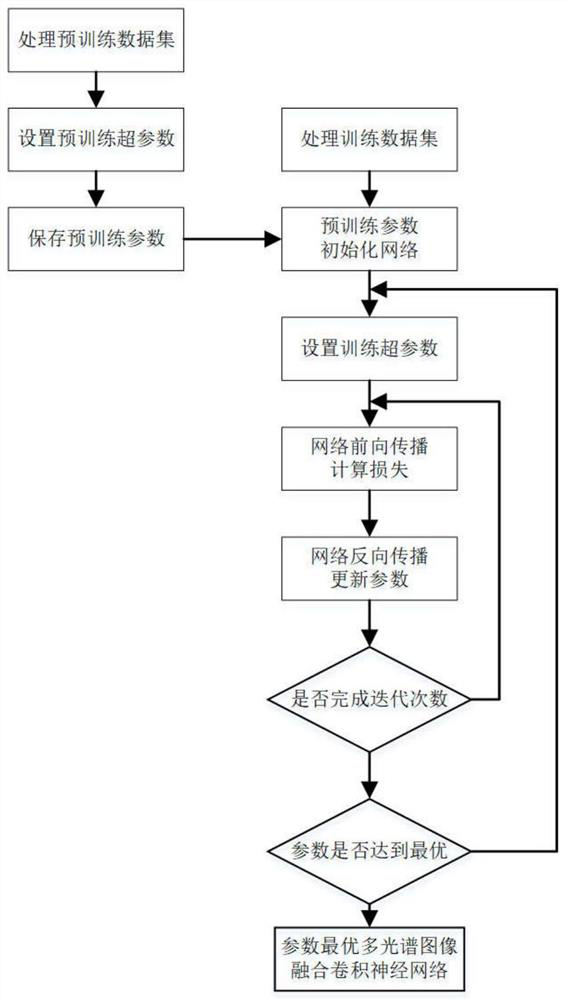

[0064] The invention discloses a method for segmenting unstructured forest scenes based on multispectral image fusion. The method uses a parallel encoder and a two-level fusion strategy, so that features are fused hierarchically during both encoding and decoding. First, in the encoder stage, two encoding branches process the RGB data and the EVI data separately, and the features of the two branches are fused at each layer to obtain informative, complementary features. The encoder also uses dilated convolutions to enlarge the receptive field of the network and optimize feature extraction. Then, the high-semantic features are decoded. During the decoding process, the low-semantic and high...
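The encoder stage described above can be illustrated with a minimal sketch in plain Python. It is not the patented network: it uses toy 1-D signals in place of RGB and EVI feature maps, and it assumes that fusion is an element-wise sum fed back into the RGB branch, since the text does not name the fusion operator. The names `dilated_conv1d`, `receptive_field`, and `parallel_encoder` are hypothetical and chosen only for this illustration.

```python
def dilated_conv1d(signal, kernel, dilation):
    """Zero-padded, same-length 1-D dilated convolution (cross-correlation)."""
    k = len(kernel)
    n = len(signal)
    out = []
    for i in range(n):
        acc = 0.0
        for j, w in enumerate(kernel):
            # Kernel taps are spaced `dilation` samples apart.
            idx = i + (j - k // 2) * dilation
            if 0 <= idx < n:
                acc += w * signal[idx]
        out.append(acc)
    return out


def relu(values):
    return [max(0.0, v) for v in values]


def receptive_field(kernel_size, dilations):
    """Receptive field of stacked stride-1 convolutions with the given dilations."""
    rf = 1
    for d in dilations:
        rf += (kernel_size - 1) * d
    return rf


def parallel_encoder(rgb, evi, kernel, dilations):
    """Two encoding branches with fusion after every layer.

    Assumption: fusion is an element-wise sum of the two branches,
    and the fused result continues along the RGB branch.
    """
    fused_per_layer = []
    rgb_feat, evi_feat = list(rgb), list(evi)
    for d in dilations:
        rgb_feat = relu(dilated_conv1d(rgb_feat, kernel, d))
        evi_feat = relu(dilated_conv1d(evi_feat, kernel, d))
        rgb_feat = [a + b for a, b in zip(rgb_feat, evi_feat)]
        fused_per_layer.append(rgb_feat)
    return fused_per_layer


if __name__ == "__main__":
    rgb = [0.2, 0.4, 0.1, 0.9, 0.3, 0.5, 0.7, 0.6]
    evi = [0.1, 0.0, 0.8, 0.7, 0.2, 0.1, 0.9, 0.4]
    layers = parallel_encoder(rgb, evi, [0.25, 0.5, 0.25], [1, 2, 4])
    print(len(layers), "fused feature levels")
    print("receptive field:", receptive_field(3, [1, 2, 4]))
```

With a kernel of size 3, stacking dilations 1, 2, and 4 widens the receptive field much faster than three undilated layers would, without adding weights. That is the general motivation for dilated convolutions. The per-layer fused features kept in `fused_per_layer` are the kind of multi-level outputs a decoder could later combine.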
