Device and method for generating label objects for the surroundings of a vehicle

A vehicle and label-object technology, applied in the field of devices and methods for generating label objects for the surroundings of a vehicle. It addresses the high cost of manual labeling methods and comparatively time-consuming, computation-intensive processing, and it increases the accuracy of automatically generated reference labels.

Embodiment Construction

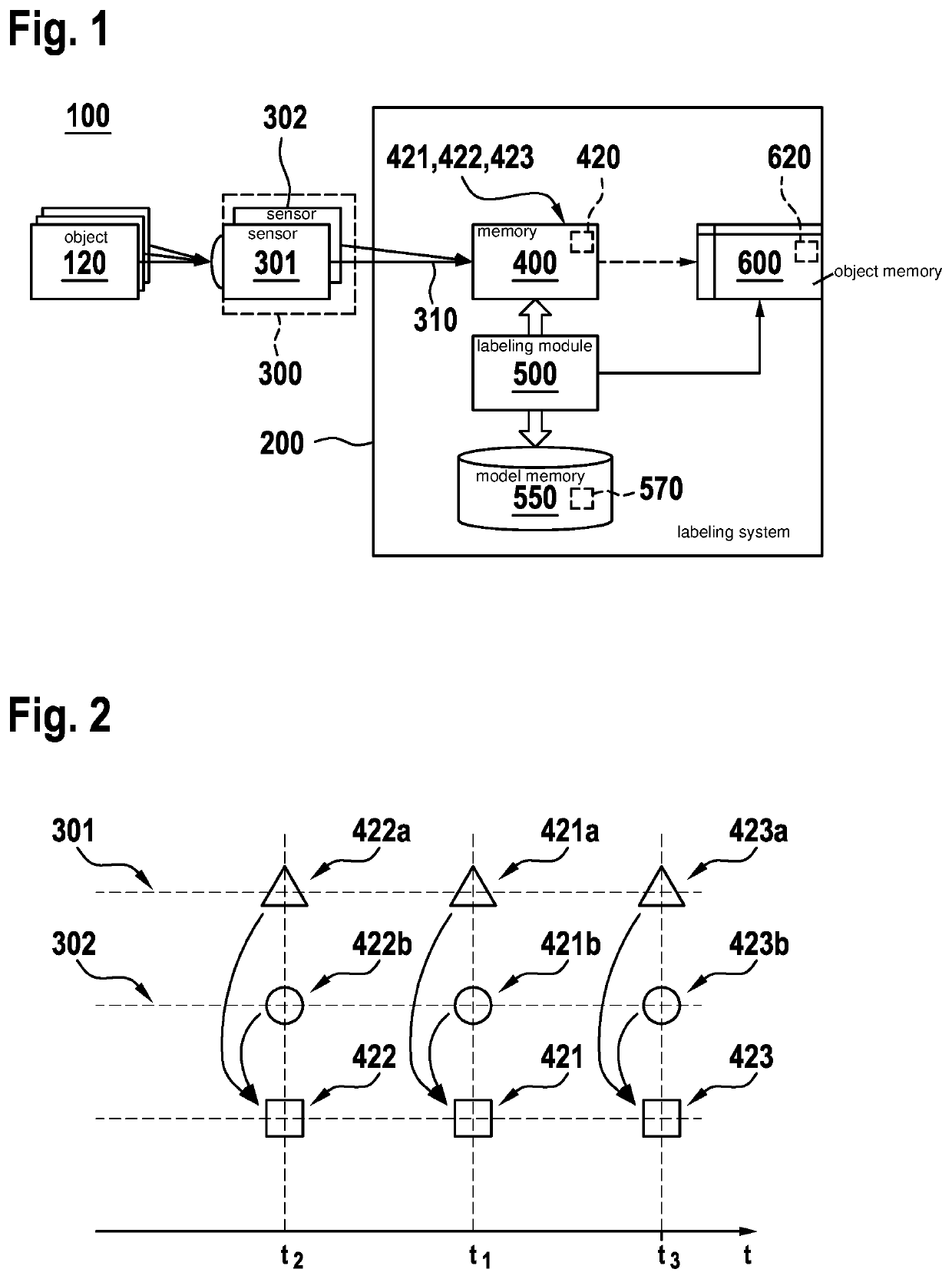

[0065] FIG. 1 schematically shows a system including a labeling system 200 according to a specific embodiment of the present invention. The labeling system 200 is connected to a sensor set 300, which has a plurality of individual sensors 301, 302. The individual sensors 301, 302 may have or use various contrast mechanisms. In the specific embodiment shown, the first individual sensor 301 is designed as a camera (schematically indicated by a lens) and the second individual sensor 302 is designed as a radar sensor; the second individual sensor 302 is thus of a different sensor type than the first individual sensor 301 and uses a different contrast mechanism. The sensor set 300 is designed to detect raw measurement values of an object 120 in an environment or surroundings 100 and to transmit them via the line or interface 310 to a memory 400. The type of raw measurement values is specific to the sensor; these may be, for example, pixel values, positional data (e.g., radar locations) or also specific ...
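The data flow in this paragraph (individual sensors of different types delivering sensor-specific raw measurement values to a memory) can be illustrated with a minimal sketch. This is not the patented implementation: the names `SensorType`, `RawMeasurement`, `Memory`, `store` and `by_type` are hypothetical, the `timestamp` field is an assumption not stated in the text, and the payload values are made-up placeholders. Only the reference numerals 301, 302 and the camera/radar pairing come from the description.

```python
from dataclasses import dataclass, field
from enum import Enum
from typing import Any, List


class SensorType(Enum):
    """Sensor types named in the embodiment of FIG. 1."""
    CAMERA = "camera"
    RADAR = "radar"


@dataclass(frozen=True)
class RawMeasurement:
    """One raw measurement value as delivered by an individual sensor (hypothetical layout)."""
    sensor_id: int           # mirrors the reference numerals, e.g. 301 or 302
    sensor_type: SensorType
    timestamp: float         # assumption: the text shown names no time base
    payload: Any             # sensor-specific: pixel values, radar locations, ...


@dataclass
class Memory:
    """Stand-in for memory 400: keeps raw measurements in arrival order."""
    records: List[RawMeasurement] = field(default_factory=list)

    def store(self, measurement: RawMeasurement) -> None:
        self.records.append(measurement)

    def by_type(self, sensor_type: SensorType) -> List[RawMeasurement]:
        # Select the stored measurements of one sensor type.
        return [m for m in self.records if m.sensor_type is sensor_type]


# Placeholder data: a tiny "image" from the camera and two radar location lists.
memory = Memory()
memory.store(RawMeasurement(301, SensorType.CAMERA, 0.00, [[0, 255], [128, 64]]))
memory.store(RawMeasurement(302, SensorType.RADAR, 0.00, [(12.5, -0.8)]))
memory.store(RawMeasurement(302, SensorType.RADAR, 0.05, [(12.3, -0.8)]))
```

Keeping the payload untyped reflects the statement that the type of raw measurement values is specific to the sensor; a real system would likely define one payload type per sensor.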
