On-chip instruction and data push device for an embedded processor

An instruction and data push technique, applied in electrical digital data processing, instruments, memory systems, and related fields. It addresses processor performance loss, reduced prefetch timeliness, and increased data transmission traffic, with the effects of eliminating Cache pollution, resolving access conflicts, and reducing traffic.

Embodiment Construction

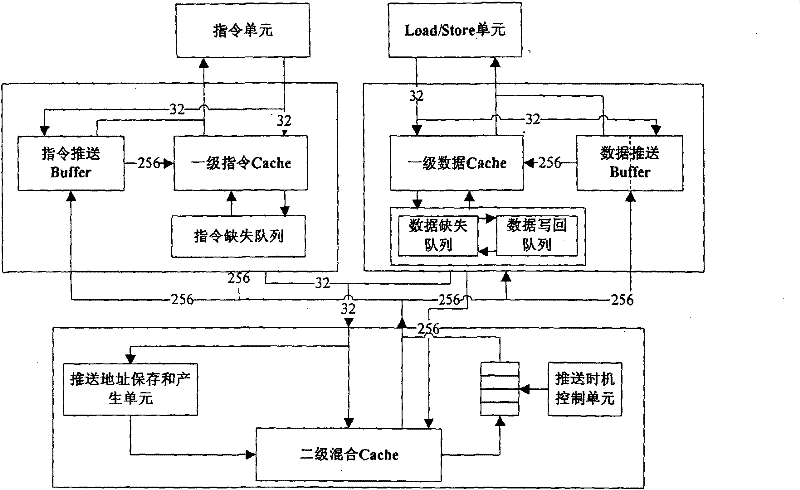

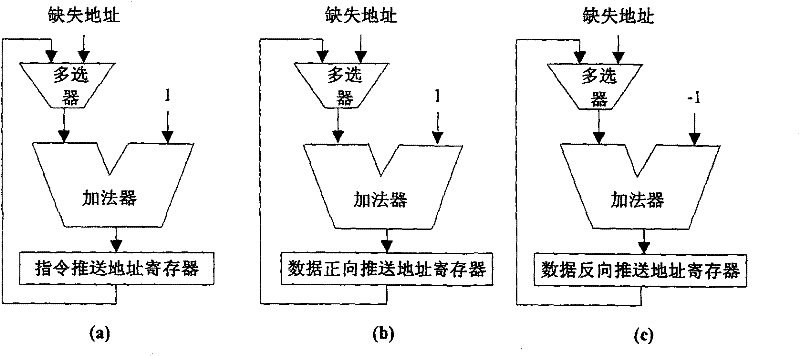

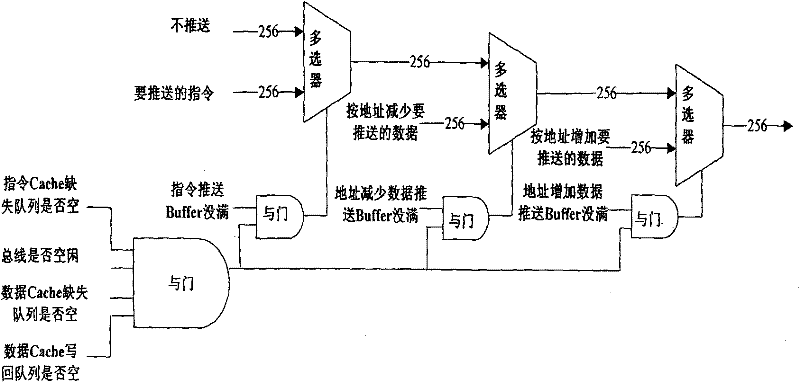

[0015] Referring to Figures 1 to 4, the instruction and data push device of the present invention comprises an instruction unit, a Load/Store unit, a level-1 instruction Cache, a level-1 data Cache, a miss queue, a miss queue/write-back queue, a level-2 mixed (unified) Cache, a push address storage and generation unit, a push timing control unit, an instruction push buffer, and a data push buffer. The push address storage and generation unit includes an instruction push address register, a data forward push address register, and a data reverse push address register. It stores and calculates the address of the next instruction or data that needs to be pushed.
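As a rough illustration of the push address storage and generation unit, the following Python sketch models its three registers as plain integers. The text does not state how the next address is calculated, so the sketch assumes each register advances by one cache line per push, upward for the instruction and data forward registers and downward for the data reverse register. The name `PushAddressUnit`, its methods, and the 32-byte `LINE_BYTES` are hypothetical and do not come from the patent.

```python
from dataclasses import dataclass

# Hypothetical cache-line size in bytes; the source does not give one.
LINE_BYTES = 32


@dataclass
class PushAddressUnit:
    """Toy model of the three push address registers (names are illustrative)."""
    instr_push_addr: int = 0
    data_fwd_push_addr: int = 0
    data_rev_push_addr: int = 0

    def next_instr(self) -> int:
        # Return the current instruction push address, then step one line up
        # (assumed sequential instruction stream).
        addr = self.instr_push_addr
        self.instr_push_addr = addr + LINE_BYTES
        return addr

    def next_data_forward(self) -> int:
        # Return the current forward data push address, then step one line up.
        addr = self.data_fwd_push_addr
        self.data_fwd_push_addr = addr + LINE_BYTES
        return addr

    def next_data_reverse(self) -> int:
        # Return the current reverse data push address, then step one line
        # down, clamping at zero so the model never goes negative.
        addr = self.data_rev_push_addr
        self.data_rev_push_addr = max(addr - LINE_BYTES, 0)
        return addr


if __name__ == "__main__":
    unit = PushAddressUnit(0x1000, 0x2000, 0x2000)
    print(hex(unit.next_instr()), hex(unit.next_data_forward()),
          hex(unit.next_data_reverse()))
```

Keeping separate forward and reverse data registers lets a model like this follow both ascending and descending access patterns without one overwriting the other, which is one plausible reading of why the unit holds two data registers.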

[0016] With these units added, the overall signal flow is as follows:

[0017] When the instruction unit needs to read an instruction, it sends the address to the instruction push buffer and the level-1 instruction Cache at the same time. These two devices re...
