High-fineness urban three-dimensional modeling method

A three-dimensional modeling technology for cities, applied in the field of three-dimensional digital city construction. It addresses poor accuracy and fineness of detail, a low degree of digitalization, and long modeling cycles, and achieves high precision and a high degree of automation.

Detailed Description of the Embodiments

[0044] To make the objects, technical solutions, and advantages of the present invention clearer, the embodiments of the present invention are described in further detail below with reference to the accompanying drawings.

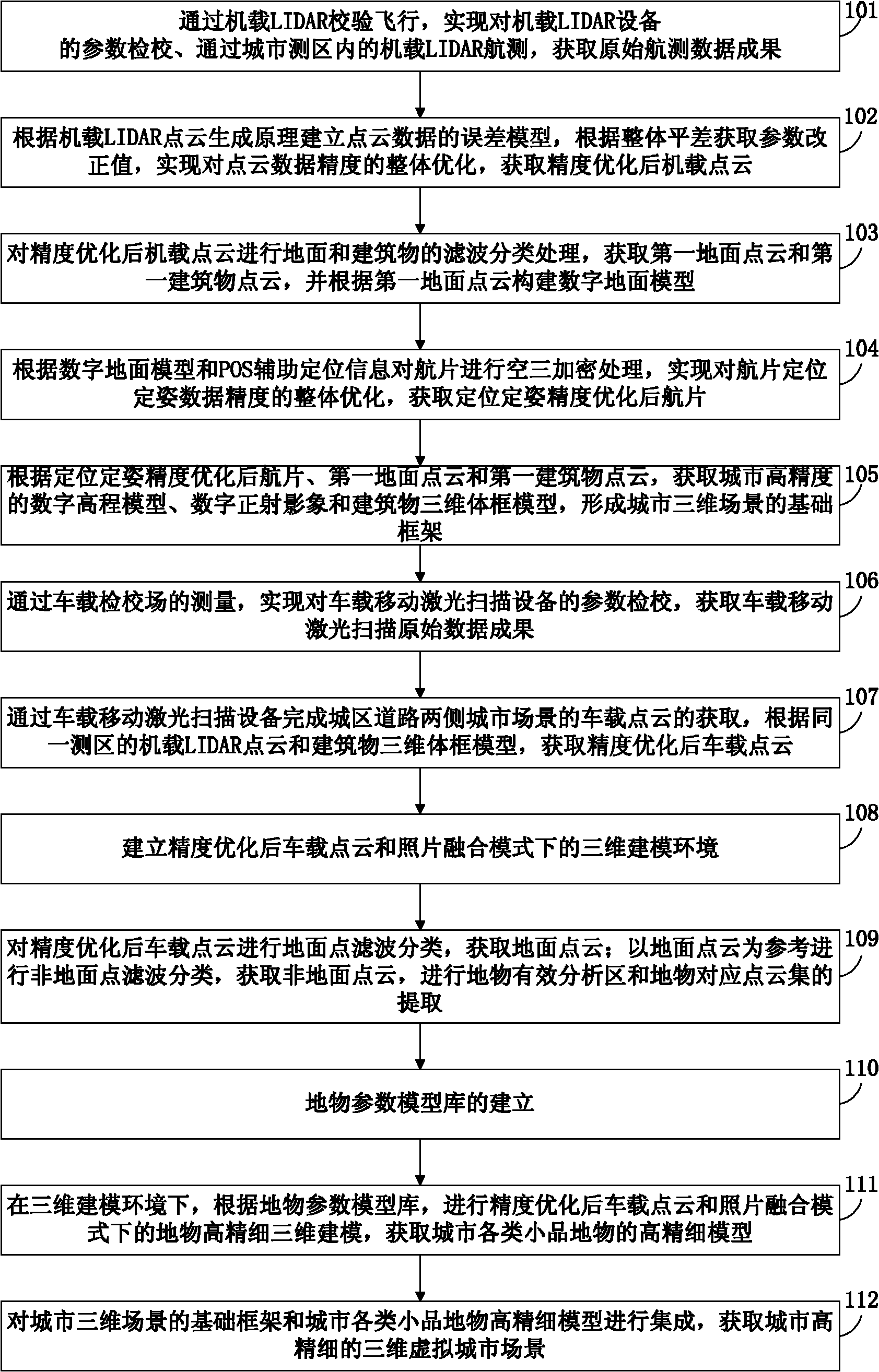

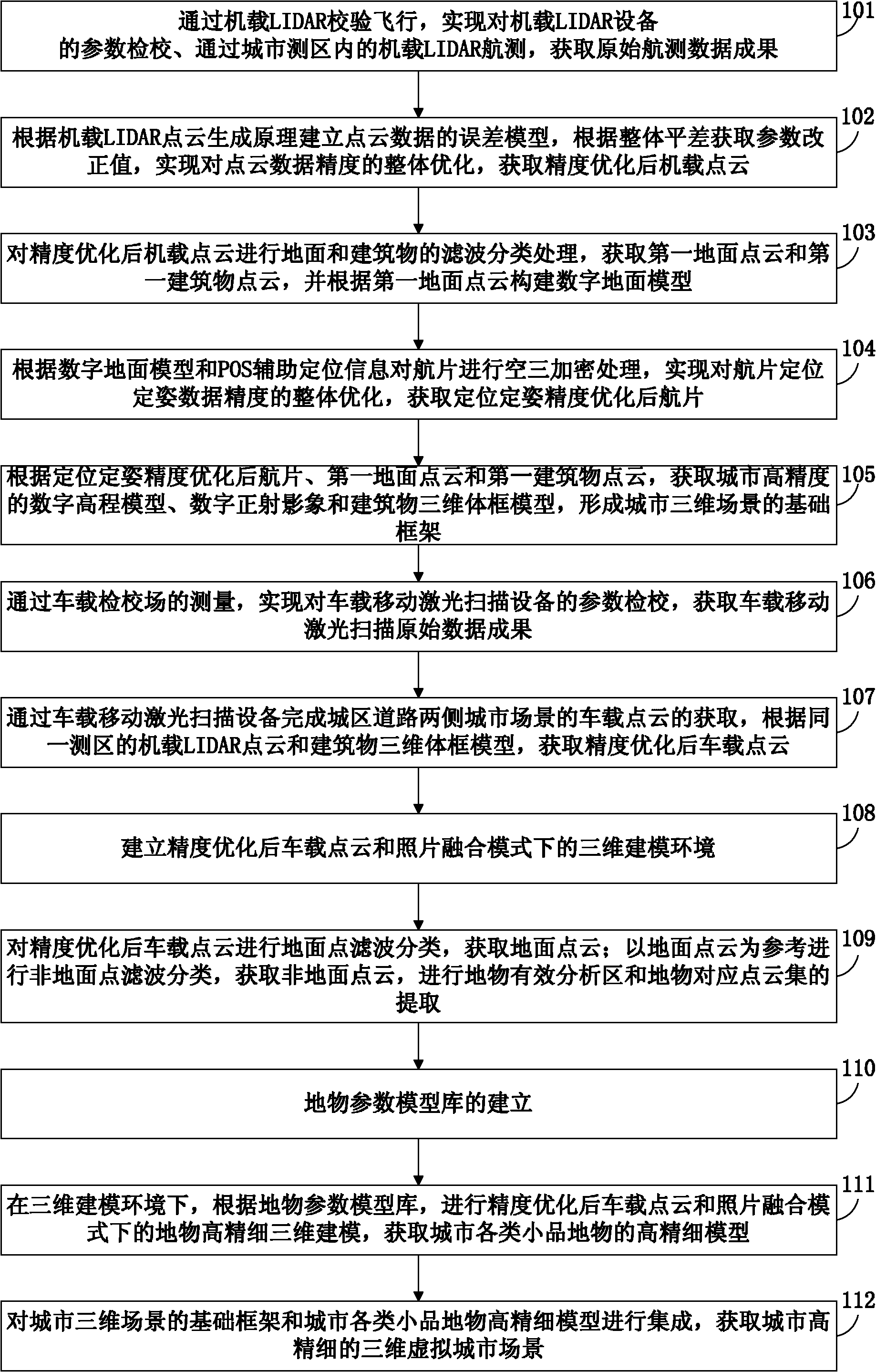

[0045] To improve development efficiency, reduce development cost, shorten the development cycle, and improve modeling precision, an embodiment of the present invention provides a high-precision three-dimensional modeling method for cities. Referring to Figure 1, the method is described as follows:

[0046] 101: Perform an airborne LIDAR (Light Detection and Ranging) verification flight to verify the parameters of the airborne LIDAR equipment, then carry out an airborne LIDAR aerial survey over the urban survey area to obtain the raw aerial survey data;
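
The text does not state which parameters are verified or what acceptance criterion is used. One common way to judge whether a verification flight meets accuracy requirements is to compare LIDAR-derived heights against independently surveyed checkpoints and compute a vertical root-mean-square error (RMSE). The sketch below assumes that approach; the function names and the 0.15 m tolerance are hypothetical illustrations, not values from this patent.

```python
import math
from typing import Sequence


def vertical_rmse(lidar_z: Sequence[float], control_z: Sequence[float]) -> float:
    """RMSE between LIDAR heights and surveyed checkpoint heights (same units)."""
    if len(lidar_z) != len(control_z) or not lidar_z:
        raise ValueError("need equal-length, non-empty height lists")
    squared = [(a - b) ** 2 for a, b in zip(lidar_z, control_z)]
    return math.sqrt(sum(squared) / len(squared))


def verification_passes(lidar_z: Sequence[float], control_z: Sequence[float],
                        tolerance_m: float = 0.15) -> bool:
    # tolerance_m is a hypothetical acceptance threshold, not taken from the patent.
    return vertical_rmse(lidar_z, control_z) <= tolerance_m
```

For example, heights of 10.05, 12.0 and 8.97 m measured against checkpoints at 10.0, 12.1 and 9.0 m give an RMSE of roughly 0.067 m, which would pass under the assumed tolerance. A real project would take its threshold from the applicable accuracy specification.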

[0047] The main components of an airborne LIDAR system are the laser sensor, the digital camera, and the POS (position and orientation system) used for positioning and attitude determination. The laser sensor needs to c...
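
To show how the laser sensor and the POS work together, the sketch below implements generic LIDAR direct georeferencing: a laser range and scan angle are rotated by a scanner-to-IMU boresight matrix, offset by a lever arm, rotated by the POS attitude, and added to the GNSS position. This standard technique is not spelled out in the visible text. The rotation order, the frame conventions (local Cartesian frame, z up, laser pointing down, no map projection or Earth curvature), and all function names are assumptions for illustration only.

```python
import math
from typing import List, Tuple

Vec3 = Tuple[float, float, float]
Mat3 = List[List[float]]


def rot_x(a: float) -> Mat3:
    c, s = math.cos(a), math.sin(a)
    return [[1.0, 0.0, 0.0], [0.0, c, -s], [0.0, s, c]]


def rot_y(a: float) -> Mat3:
    c, s = math.cos(a), math.sin(a)
    return [[c, 0.0, s], [0.0, 1.0, 0.0], [-s, 0.0, c]]


def rot_z(a: float) -> Mat3:
    c, s = math.cos(a), math.sin(a)
    return [[c, -s, 0.0], [s, c, 0.0], [0.0, 0.0, 1.0]]


def matmul(a: Mat3, b: Mat3) -> Mat3:
    return [[sum(a[i][k] * b[k][j] for k in range(3)) for j in range(3)] for i in range(3)]


def matvec(m: Mat3, v: Vec3) -> Vec3:
    return tuple(sum(m[i][k] * v[k] for k in range(3)) for i in range(3))


def attitude_matrix(roll: float, pitch: float, heading: float) -> Mat3:
    # Assumed rotation order R = Rz(heading) * Ry(pitch) * Rx(roll); a real POS
    # documents its own convention, which would have to be followed instead.
    return matmul(rot_z(heading), matmul(rot_y(pitch), rot_x(roll)))


def georeference(gnss_pos: Vec3, roll: float, pitch: float, heading: float,
                 laser_range: float, scan_angle: float,
                 boresight: Vec3 = (0.0, 0.0, 0.0),
                 lever_arm: Vec3 = (0.0, 0.0, 0.0)) -> Vec3:
    """Map one laser return to local mapping-frame coordinates (angles in radians)."""
    # Laser vector in the scanner frame: across-track scan in y, pointing down (-z).
    r_laser = (0.0, laser_range * math.sin(scan_angle), -laser_range * math.cos(scan_angle))
    # Boresight misalignment between scanner and IMU, typically checked in a calibration flight.
    r_body = matvec(attitude_matrix(*boresight), r_laser)
    # Lever arm: offset from the GNSS antenna to the scanner origin, in the body frame.
    r_body = tuple(r_body[i] + lever_arm[i] for i in range(3))
    # Rotate into the mapping frame with the POS attitude, then add the GNSS position.
    offset = matvec(attitude_matrix(roll, pitch, heading), r_body)
    return tuple(gnss_pos[i] + offset[i] for i in range(3))
```

With the aircraft level at 1000 m, zero boresight and lever arm, and a 500 m range at nadir, `georeference((100.0, 200.0, 1000.0), 0.0, 0.0, 0.0, 500.0, 0.0)` places the point at a height of 500 m directly below the antenna. Small boresight errors are amplified by range, which is why such parameters are verified before the production survey.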
