Video space-time feature extraction method

Keywords: feature extraction, space-time. Application areas: image data processing, instrumentation, computing. Problems addressed: the motion state of a target is hard to observe, motion boundaries diffuse, and results are sensitive to noise. Effects: clear motion boundaries and a reduced amount of computation.

Status: Inactive. Publication date: 2011-08-03

SHANGHAI JIAO TONG UNIV

Cites 5 documents; cited by 7.


AI Technical Summary

Problems solved by technology

However, optical flow methods rest on the brightness constancy assumption, and most of them impose either a global spatial smoothness constraint (see: Berthold K. P. Horn and Brian G. Schunck, "Determining optical flow," Artificial Intelligence, vol. 17, no. 1-3, pp. 185-203, 1981) or a local smoothness constraint (see: Bruce D. Lucas and Takeo Kanade, "An iterative image registration technique with an application to stereo vision," in Proceedings of the 1981 DARPA Image Understanding Workshop, April 1981, pp. 121-130). As a result, they face three problems: 1) they are susceptible to noise and illumination changes; 2) motion boundaries diffuse; 3) most optical flow methods compute a vector for every pixel in the frame, which requires a large amount of computation and gives relatively poor real-time performance.
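To make the local smoothness assumption concrete, here is a minimal sketch of the single-window least-squares step commonly associated with Lucas-Kanade. It assumes the spatial derivatives Ix, Iy and the temporal derivative It have already been sampled at the window's pixels; the function name `lucas_kanade_window` and the `eps` threshold are illustrative choices, not taken from the cited paper.

```python
def lucas_kanade_window(ix, iy, it, eps=1e-12):
    """Least-squares flow (u, v) for one window, assuming brightness
    constancy Ix*u + Iy*v + It = 0 holds at every sample in the window.

    ix, iy, it: spatial and temporal derivatives at the window's pixels.
    Returns None when the 2x2 normal matrix is (near-)singular, i.e. the
    gradients in the window are collinear (the aperture problem).
    """
    sxx = sum(a * a for a in ix)
    syy = sum(b * b for b in iy)
    sxy = sum(a * b for a, b in zip(ix, iy))
    sxt = sum(a * t for a, t in zip(ix, it))
    syt = sum(b * t for b, t in zip(iy, it))
    det = sxx * syy - sxy * sxy
    if abs(det) < eps:
        return None
    # Solve [[sxx, sxy], [sxy, syy]] @ [u, v] = -[sxt, syt] by Cramer's rule.
    u = (-syy * sxt + sxy * syt) / det
    v = (sxy * sxt - sxx * syt) / det
    return u, v


# Samples generated from a true flow (u, v) = (0.5, -0.25).
print(lucas_kanade_window([1.0, 0.0, 1.0, 2.0], [0.0, 1.0, 1.0, -1.0],
                          [-0.5, 0.25, -0.25, -1.25]))
```

A dense flow field repeats this solve at every pixel, which is the per-pixel cost listed as problem 3; the `None` branch shows why windows whose gradients all share one orientation yield no reliable estimate.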

Methods based on feature point matching (see: Han Wang and Michael Brady, "Real-time corner detection algorithm for motion estimation," Image and Vision Computing, vol. 13, no. 9, pp. 695-703, 1995) are robust, but the matched points are too sparse: this makes it hard to observe the motion state of the whole target or to extract the structure of moving targets.


Abstract

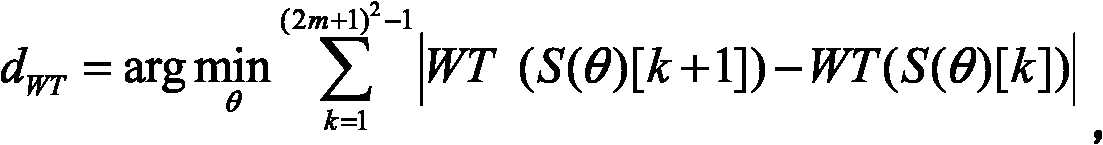

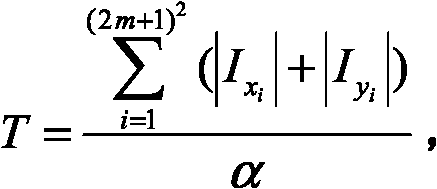

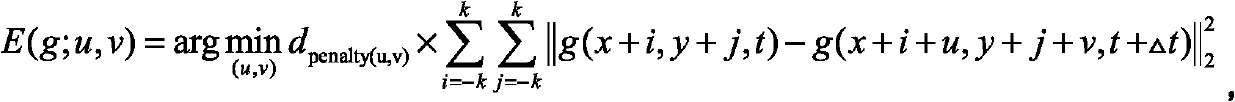

The invention discloses a video space-time feature extraction method in the technical field of computer video processing. The method comprises the following steps: applying Gaussian filtering to two adjacent frames of a video stream; on the smoothed images, computing the geometric regularity and the geometric regularity direction of edge and texture regions to construct a spatial geometric flow field; establishing a motion equation between the two frames and computing the motion vector of each pixel with a block-matching correlation method; removing singular values from the computed geometric flow field with an M×M neighborhood local averaging method; and finally merging the spatial geometric flow field with the temporal geometric motion flow to obtain a space-time feature vector for each pixel. The method offers higher robustness to illumination changes and better computational performance; moving targets keep a clear structure; nearby moving targets can be effectively separated; and the method is robust and efficient for traffic flow estimation and abnormal event detection in traffic surveillance.
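The five steps above can be sketched end to end as follows. This is a minimal, hypothetical illustration, not the patented implementation: the abstract does not define geometric regularity, so a 3x3 structure tensor (orientation along edges, coherence as the regularity) stands in for it; the block-matching "correlation" is replaced by a sum of absolute differences (SAD); and the 3-tap Gaussian, block half-size 1, search range 2, M = 3, applying the averaging to the motion field, the out-of-frame penalty, all function names, and the feature layout `(cos a, sin a, regularity, dx, dy)` are assumptions.

```python
import math

def _clamped(img, y, x):
    """Pixel access with border replication."""
    h, w = len(img), len(img[0])
    return img[min(max(y, 0), h - 1)][min(max(x, 0), w - 1)]

def gaussian_smooth(img, sigma=1.0):
    """Step 1: separable 3-tap Gaussian filter to suppress noise."""
    side = math.exp(-1.0 / (2.0 * sigma * sigma))
    k = [side / (1 + 2 * side), 1 / (1 + 2 * side), side / (1 + 2 * side)]
    h, w = len(img), len(img[0])
    tmp = [[sum(k[j + 1] * _clamped(img, y, x + j) for j in (-1, 0, 1))
            for x in range(w)] for y in range(h)]
    return [[sum(k[j + 1] * _clamped(tmp, y + j, x) for j in (-1, 0, 1))
             for x in range(w)] for y in range(h)]

def spatial_flow(img):
    """Step 2 stand-in: per-pixel (orientation, regularity) from a 3x3
    structure tensor. Orientation runs along edges (perpendicular to the
    dominant gradient); regularity is the coherence in [0, 1]."""
    h, w = len(img), len(img[0])
    gx = [[(_clamped(img, y, x + 1) - _clamped(img, y, x - 1)) / 2
           for x in range(w)] for y in range(h)]
    gy = [[(_clamped(img, y + 1, x) - _clamped(img, y - 1, x)) / 2
           for x in range(w)] for y in range(h)]
    field = []
    for y in range(h):
        row = []
        for x in range(w):
            jxx = jyy = jxy = 0.0
            for yy in range(max(0, y - 1), min(h, y + 2)):
                for xx in range(max(0, x - 1), min(w, x + 2)):
                    jxx += gx[yy][xx] ** 2
                    jyy += gy[yy][xx] ** 2
                    jxy += gx[yy][xx] * gy[yy][xx]
            trace = jxx + jyy
            spread = math.sqrt((jxx - jyy) ** 2 + 4 * jxy ** 2)
            regularity = spread / trace if trace > 1e-12 else 0.0
            angle = 0.5 * math.atan2(2 * jxy, jxx - jyy) + math.pi / 2
            row.append((angle, regularity))
        field.append(row)
    return field

OUT_OF_FRAME_COST = 1e6  # hypothetical penalty for candidates leaving the frame

def block_match(prev, curr, y, x, half=1, search=2):
    """Step 3: (dy, dx) minimising the SAD between the block around (y, x)
    in prev and the displaced block in curr; ties favour small shifts."""
    h, w = len(prev), len(prev[0])
    best, best_key = (0, 0), (math.inf, 0)
    for dy in range(-search, search + 1):
        for dx in range(-search, search + 1):
            cost = 0.0
            for py in range(y - half, y + half + 1):
                for px in range(x - half, x + half + 1):
                    if not (0 <= py < h and 0 <= px < w):
                        continue  # block pixel outside the first frame
                    cy, cx = py + dy, px + dx
                    if 0 <= cy < h and 0 <= cx < w:
                        cost += abs(prev[py][px] - curr[cy][cx])
                    else:
                        cost += OUT_OF_FRAME_COST
            key = (cost, abs(dy) + abs(dx))
            if key < best_key:
                best, best_key = (dy, dx), key
    return best

def local_average(field, m=3):
    """Step 4: mean of each vector over its m x m neighbourhood (clipped)."""
    h, w, r = len(field), len(field[0]), m // 2
    out = []
    for y in range(h):
        row = []
        for x in range(w):
            nb = [field[yy][xx]
                  for yy in range(max(0, y - r), min(h, y + r + 1))
                  for xx in range(max(0, x - r), min(w, x + r + 1))]
            row.append(tuple(sum(c) / len(nb) for c in zip(*nb)))
        out.append(row)
    return out

def spacetime_features(frame0, frame1, m=3):
    """Step 5: per-pixel vector (cos a, sin a, regularity, dx, dy)."""
    s0, s1 = gaussian_smooth(frame0), gaussian_smooth(frame1)
    spatial = spatial_flow(s0)
    motion = [[block_match(s0, s1, y, x) for x in range(len(s0[0]))]
              for y in range(len(s0))]
    motion = local_average(motion, m)  # suppress isolated outlier vectors
    return [[(math.cos(a), math.sin(a), reg, dx, dy)
             for (a, reg), (dy, dx) in zip(srow, mrow)]
            for srow, mrow in zip(spatial, motion)]
```

For example, with a textured 10x10 frame and a copy shifted one pixel to the right, `spacetime_features(frame0, frame1)[5][5]` carries a horizontal motion component of 1.0. The pure-Python loops favour readability over speed; a practical version would vectorise the filtering and matching.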

Description

Technical field: The invention relates to a method in the technical field of computer video processing, in particular to a video space-time feature extraction method. Background art: Computer vision technology plays an increasingly important role in urban traffic monitoring, for example in traffic flow monitoring, congestion estimation, and abnormal event detection. Vehicle motion analysis, an important task in this area, still faces great challenges because of the complex urban traffic environment (for example, lighting and weather changes, and occlusion). Current work on vehicle motion analysis falls mainly into two categories. The first is traditional long-term motion analysis based on detection and tracking, but reliable and stable multi-target tracking algorithms are still lacking. In recent years, more and more researchers have adopted the second approach, performing statistics or modeling directly on low-level motion features and avoiding the detection ...


IPC(8): G06T7/20

Inventors: Yang Hua (杨华), Fan Yawen (樊亚文), Su Hang (苏航), Zheng Shibao (郑世宝)

Owner SHANGHAI JIAO TONG UNIV
