Virtual viewpoint drawing method based on space-time combination in multi-view video

A virtual viewpoint synthesis technology, applied in the field of video and multimedia signal processing, that addresses problems such as inaccurate virtual viewpoint images.


Embodiment Construction

[0041] The invention was tested on the "Mobile" video sequence. This sequence contains video of a scene captured from 9 viewpoints, together with the corresponding depth information and the intrinsic and extrinsic parameters of each camera. In the experiment, viewpoint No. 4 was selected as the reference viewpoint and viewpoint No. 5 as the virtual viewpoint.

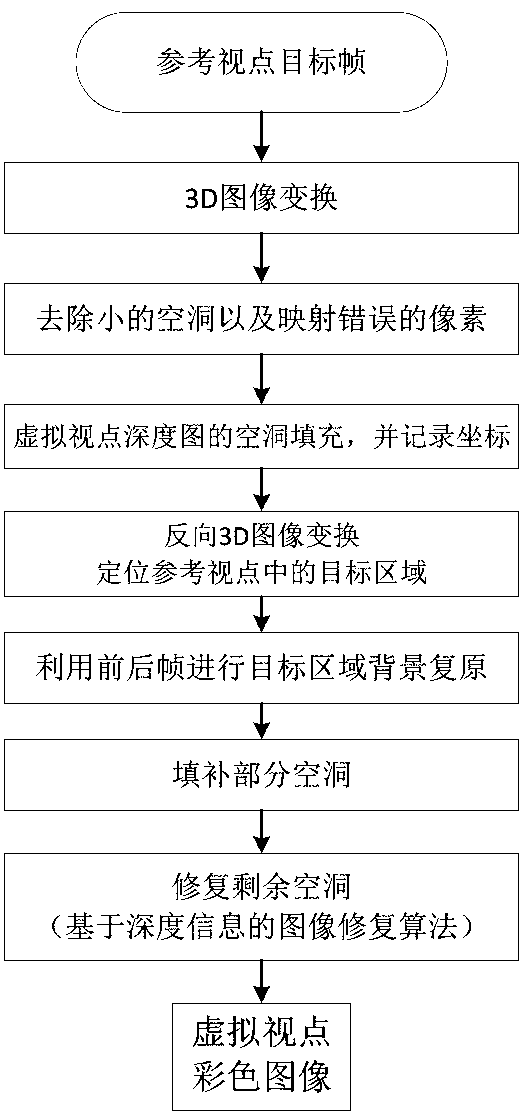

[0042] Figure 1 shows the flowchart of the present invention. The specific implementation is described below, following this flowchart.

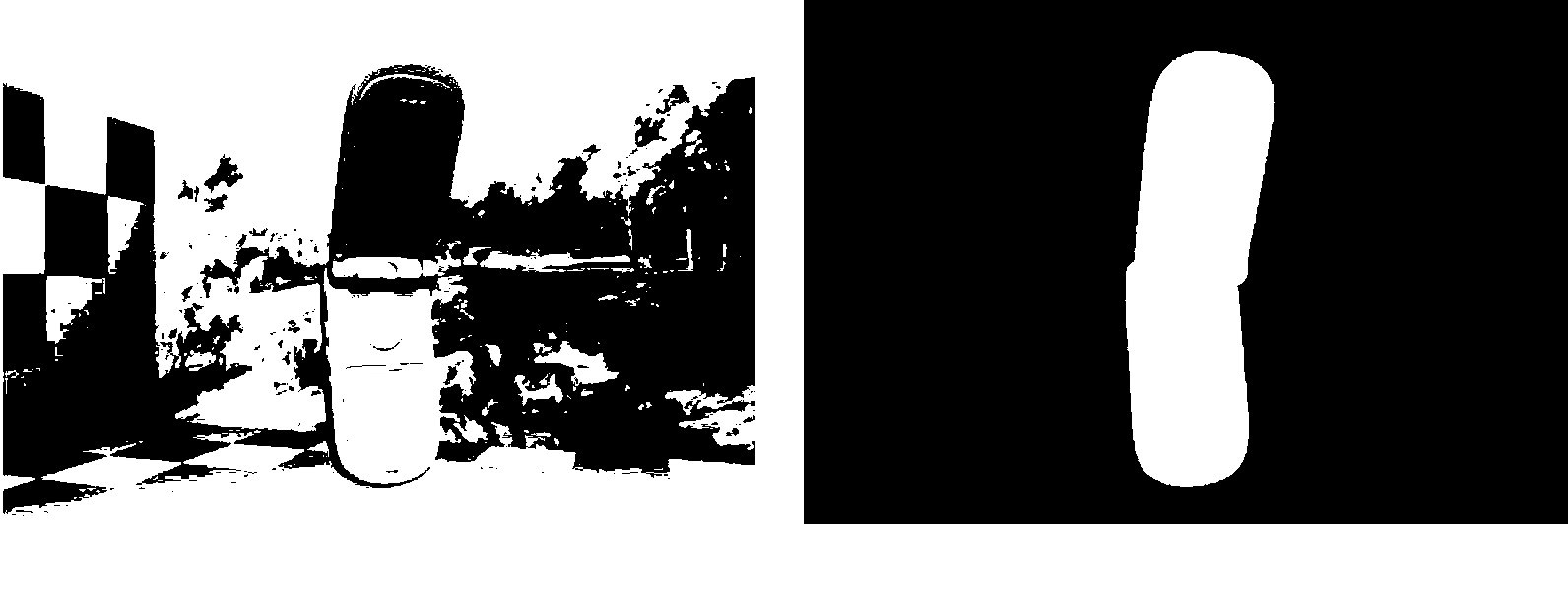

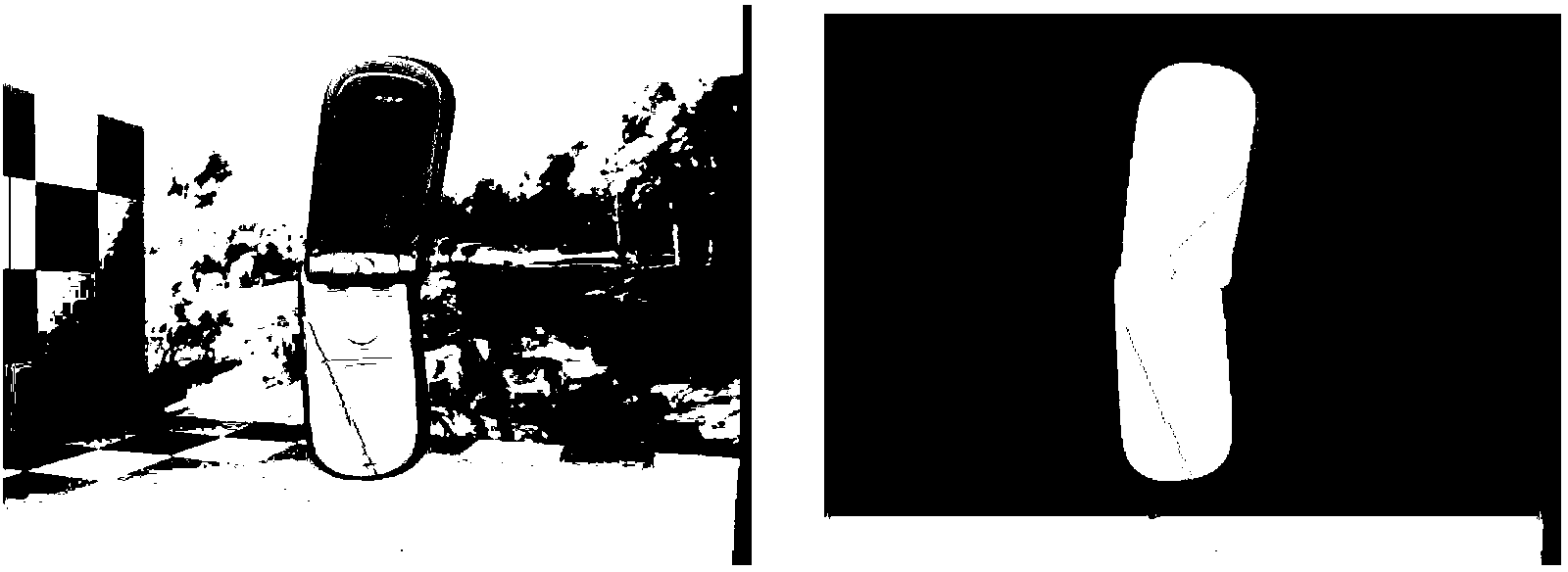

[0043] (1) 3D image transformation. 3D image transformation projects the pixels of the reference viewpoint onto the image plane of the virtual viewpoint according to the camera projection model. The process has two parts: the pixels of the reference viewpoint are first projected into 3D space, and are then projected from 3D space onto the virtual viewpoint's image plane. Figure 2 shows the color image and depth image of the reference viewpoint. Suppose th...
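The two-part projection described in step (1) can be illustrated with a standard pinhole-camera sketch. It is not the patent's own implementation and covers only this warping step, not the later space-time combination. Several assumptions apply. The extrinsics map world to camera coordinates as x_cam = R·X_w + t. The depth map already holds metric camera-space depth; 8-bit quantized depth levels, as used by many multi-view test sequences, would first need converting with the sequence's near/far depth range. Overlapping projections are resolved with a simple z-buffer. The function names `backproject`, `project` and `warp_to_virtual` are hypothetical.

```python
import numpy as np


def backproject(u, v, z, K, R, t):
    """Lift reference pixel (u, v) with camera-space depth z into world coordinates.

    Assumption: extrinsics map world to camera as x_cam = R @ X_w + t.
    """
    ray = np.linalg.solve(K, np.array([u, v, 1.0]))  # K^{-1} [u, v, 1]^T
    x_cam = z * ray                                   # 3D point in the reference camera frame
    return R.T @ (x_cam - t)                          # undo the rigid transform -> world frame


def project(X_w, K, R, t):
    """Project a world point into a camera; returns (u, v, depth in that camera)."""
    x_cam = R @ X_w + t
    p = K @ x_cam
    return p[0] / p[2], p[1] / p[2], x_cam[2]


def warp_to_virtual(color, depth, K_r, R_r, t_r, K_v, R_v, t_v):
    """Forward-warp the reference view into the virtual view using a z-buffer.

    color: (H, W, 3) array; depth: (H, W) metric camera-space depth (assumed).
    Returns the warped image and a boolean mask of filled pixels; unfilled
    pixels are disocclusion holes that a later step would have to fill.
    """
    H, W = depth.shape
    out = np.zeros_like(color)
    zbuf = np.full((H, W), np.inf)
    for v in range(H):
        for u in range(W):
            # Part 1: reference image plane -> 3D space.
            X_w = backproject(u, v, depth[v, u], K_r, R_r, t_r)
            # Part 2: 3D space -> virtual image plane.
            uv, vv, zv = project(X_w, K_v, R_v, t_v)
            ui, vi = int(round(uv)), int(round(vv))
            if 0 <= ui < W and 0 <= vi < H and 0 < zv < zbuf[vi, ui]:
                zbuf[vi, ui] = zv  # keep the point nearest the virtual camera
                out[vi, ui] = color[v, u]
    return out, np.isfinite(zbuf)
```

Consider a virtual camera translated by a baseline b along the x-axis, with the same intrinsics as the reference. Each pixel then shifts horizontally by the disparity f·b/Z. With f = 100, b = 0.1 and Z = 10, every pixel moves one column, leaving a one-column hole at the image border. The per-pixel loop is kept for readability; a practical renderer would vectorize it.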
