System and method for guaranteeing distributed data processing consistency

A technology for consistent distributed data processing, classified under digital transmission systems, transmission systems, and data exchange networks. It addresses problems such as abnormal data processing and results that are inconsistent with expectations, and it achieves reduced delay and guaranteed reliability.


Examples

Embodiment 1

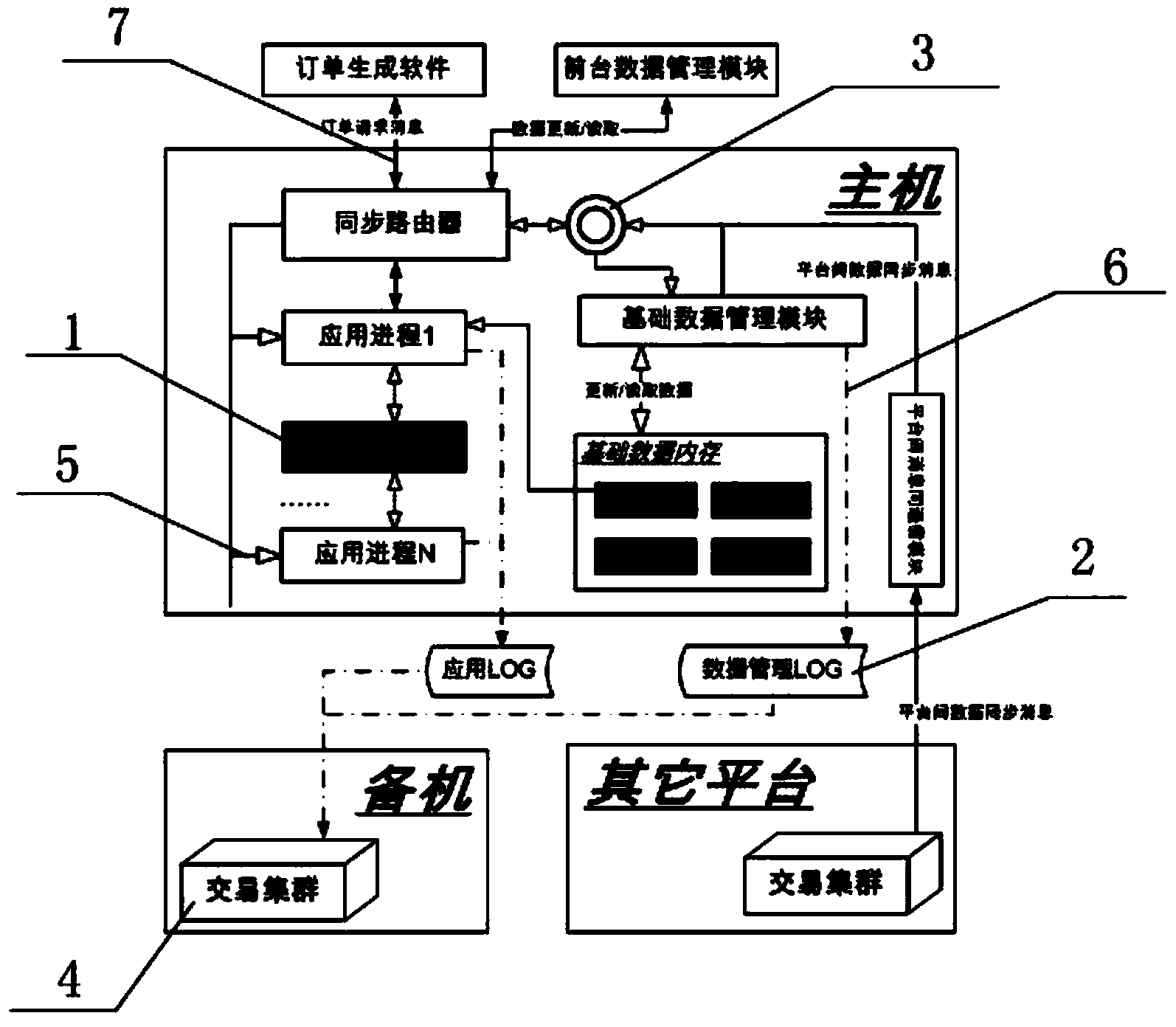

[0057] Figure 1 is a framework diagram of basic data maintenance in the distributed transaction system of the present invention. The computer distributed transaction system platform is composed of several transaction hosts responsible for transaction business processing. Among the transaction hosts, one host acts as the master node and the other nodes act as backup machines (slave nodes). The master node is responsible for all order processing tasks and for log recording and maintenance, and it triggers basic data synchronization on the slave nodes. The slave nodes do not perform real-time order processing, but they keep their basic data memory consistent with the master node through synchronization. Each host contains a synchronization router. The synchronization router obtains order messages and basic data update messages from order generation software, front-end data management software or other plat...

Embodiment 2

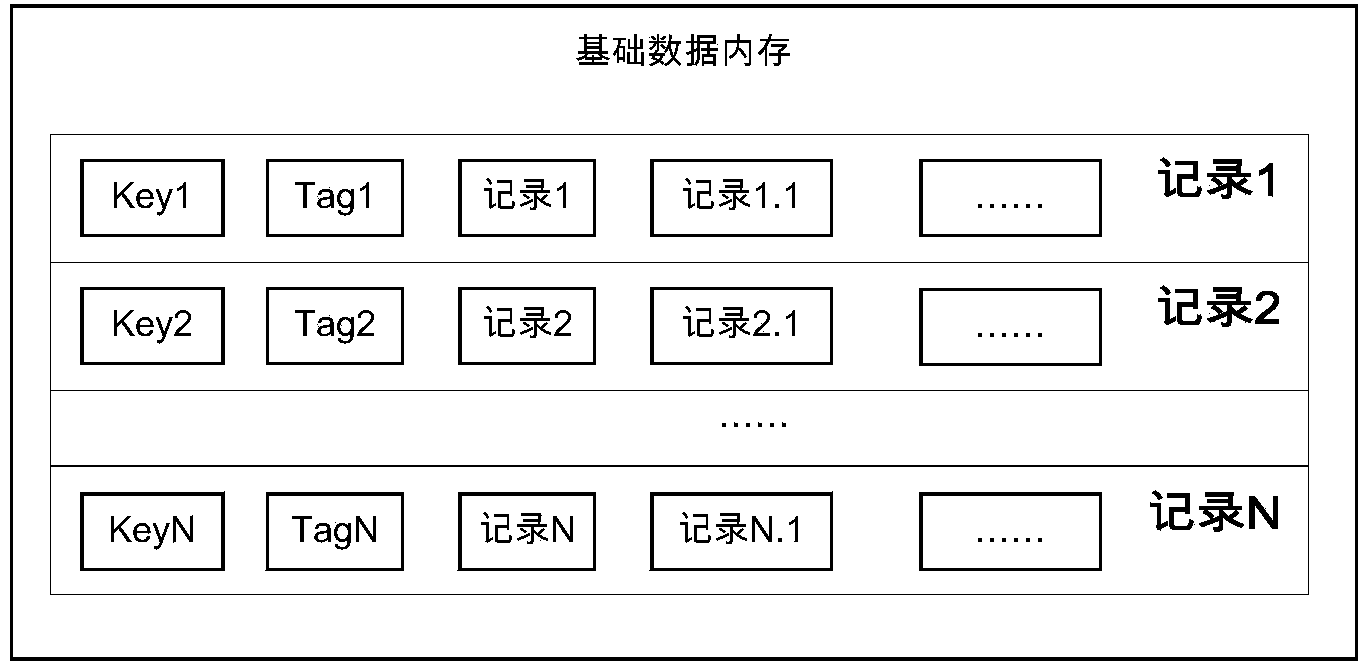

[0059] Figure 2 shows the organization of the basic data memory. Each record in the figure consists of multiple versions. In addition to the KEY value used for indexing, the record header contains a TAG field that records the current version number. The TAG field is of type INT64, and reads and writes of the TAG field are atomic operations without conflicts. The basic data memory supports one writer and multiple readers, and it supports undo commands for write operations. While the HFM (basic data management module) updates basic data records, read operations by the application are not affected.
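The record layout described above can be illustrated with a minimal sketch. It is not the patented implementation: the class name `VersionedRecord`, the append-only version list, and the stored "previous tag" used for undo are all assumptions made for illustration. A plain Python integer stands in for the atomic INT64 TAG field, and the sketch assumes a single writer, as the text states.

```python
class VersionedRecord:
    """Hypothetical multi-version record: a KEY for indexing plus a TAG
    that names the currently published version."""

    def __init__(self, key):
        self.key = key
        # Append-only list of (value, previous_tag). Entries are never
        # removed, so a reader holding an older tag can still resolve it.
        self._versions = []
        # Stand-in for the atomic INT64 TAG field; -1 means "no version yet".
        self._tag = -1

    def write(self, value):
        """Single writer: stage the new version, then publish it via the tag."""
        self._versions.append((value, self._tag))
        self._tag = len(self._versions) - 1  # one assignment publishes it
        return self._tag

    def undo(self):
        """Undo the last write by moving the tag back to the previous version."""
        if self._tag < 0:
            raise ValueError("nothing to undo")
        self._tag = self._versions[self._tag][1]

    def read(self):
        """Readers snapshot the tag once and read that version only."""
        tag = self._tag
        if tag < 0:
            return None
        return self._versions[tag][0]


record = VersionedRecord("ACCT-1")
record.write({"limit": 100})
record.write({"limit": 200})
record.undo()
print(record.read())
```

Because a new version is fully stored before the tag changes, a reader that loaded the old tag keeps reading the old version, which is one way the "reads are not affected during updates" property could be obtained.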

Embodiment 3

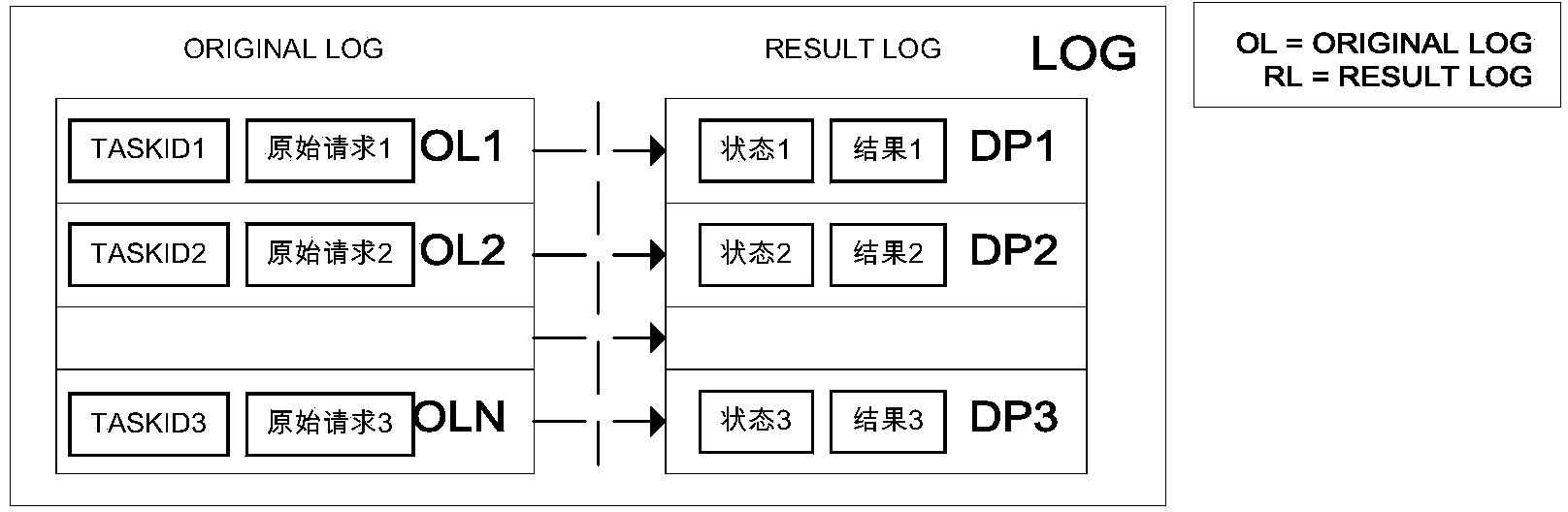

[0061] A method for ensuring the consistency of distributed data processing. The request messages involved in data processing are mainly order requests and basic data update requests. Order messages come from the order generation software, and basic data update messages are generated by the front-end data management software or other platforms. The specific method is as follows:

[0062] a. After a request message arrives at the host, the synchronization router delivers it to the shared message queue for its message category, where the corresponding process handles it;
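Step a can be sketched as a small dispatcher. This is an illustrative sketch only: the `SyncRouter` class, the `category` message field, and the two category names are hypothetical, and in-process `deque` objects stand in for the shared message queues that separate processes would read in the described system.

```python
from collections import deque

# Hypothetical category names for the two request types named in the text.
ORDER = "order"
BASIC_DATA_UPDATE = "basic_data_update"


class SyncRouter:
    """Delivers each incoming message to the queue for its category."""

    def __init__(self):
        # One queue per message category; each would be consumed by the
        # process responsible for that category.
        self.queues = {ORDER: deque(), BASIC_DATA_UPDATE: deque()}

    def route(self, message):
        category = message.get("category")
        if category not in self.queues:
            raise ValueError(f"unknown message category: {category!r}")
        self.queues[category].append(message)
        return category


router = SyncRouter()
router.route({"category": ORDER, "id": 1})
router.route({"category": BASIC_DATA_UPDATE, "key": "ACCT-1"})
router.route({"category": ORDER, "id": 2})
print(len(router.queues[ORDER]), len(router.queues[BASIC_DATA_UPDATE]))
```

Keeping one queue per category preserves arrival order within a category, so the order-processing process and the basic data update process each see their own messages in sequence.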

[0063] b. During order processing, the application process first connects to the basic data memory and then verifies the order against the information recorded there. After the verification succeeds, it writes the order to the order book memory and updates the status information recorded in the application log file. The application proces...
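The part of step b that is visible above (verify against basic data, write to the order book, update the log) can be sketched as follows. Everything specific here is an assumption: the function name `process_order`, the order fields, the verification rule (the instrument must exist in basic data and the quantity must be positive), and the behavior on a failed check. The source text does not state the actual verification criteria, and the remainder of the step is truncated.

```python
def process_order(order, basic_data, order_book, app_log):
    """Verify an order against basic data, then book it and log the status.

    basic_data: dict standing in for the basic data memory.
    order_book: list standing in for the order book memory.
    app_log:    list standing in for the application log file.
    """
    # Hypothetical verification rule; the real criteria are not given.
    record = basic_data.get(order["instrument"])
    if record is None or order["quantity"] <= 0:
        return False  # assumed: a failed check leaves book and log untouched

    # Verification succeeded: write the order, then record its status.
    order_book.append(order)
    app_log.append({"order_id": order["id"], "status": "booked"})
    return True


basic_data = {"XYZ": {"tradable": True}}
order_book, app_log = [], []
accepted = process_order(
    {"id": 1, "instrument": "XYZ", "quantity": 10},
    basic_data, order_book, app_log,
)
print(accepted, len(order_book), app_log)
```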
