GPU (Graphics Processing Unit) virtualization optimization method based on delayed submission

An optimization method for GPU virtualization, applied in the field of virtualization technology. It addresses the frequent, large data transfers in GPU virtualization frameworks and reduces repeated data transmission.



Embodiment Construction

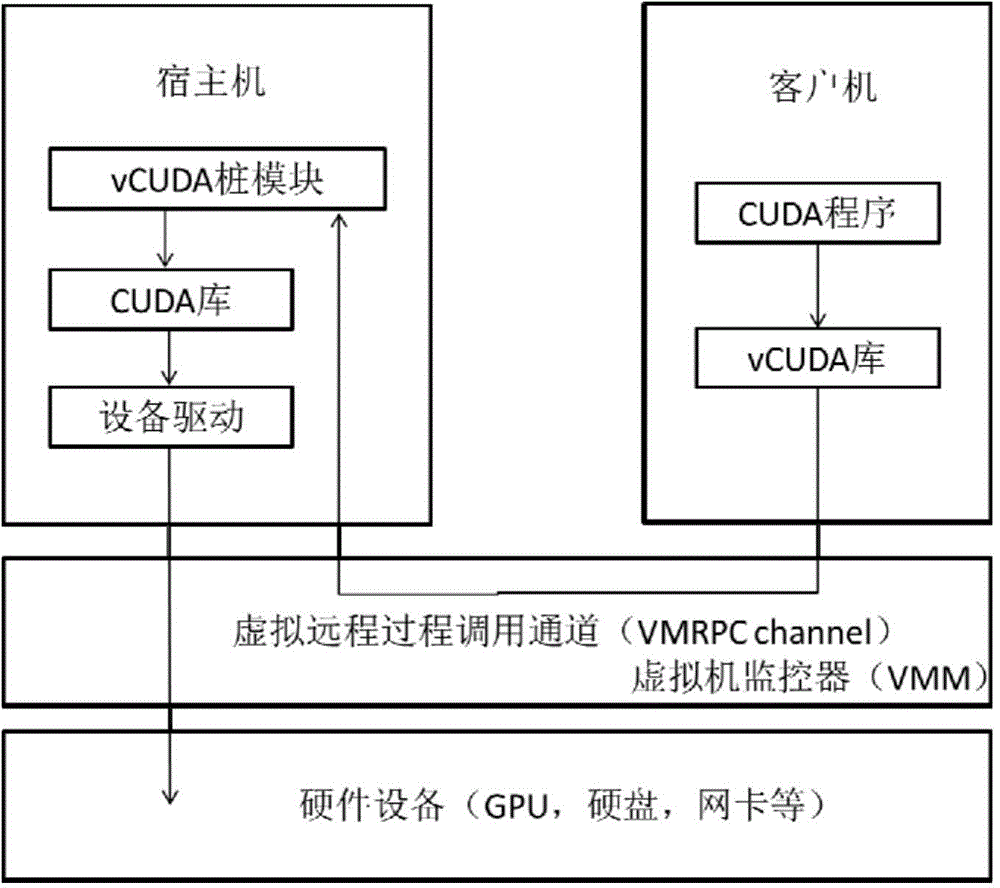

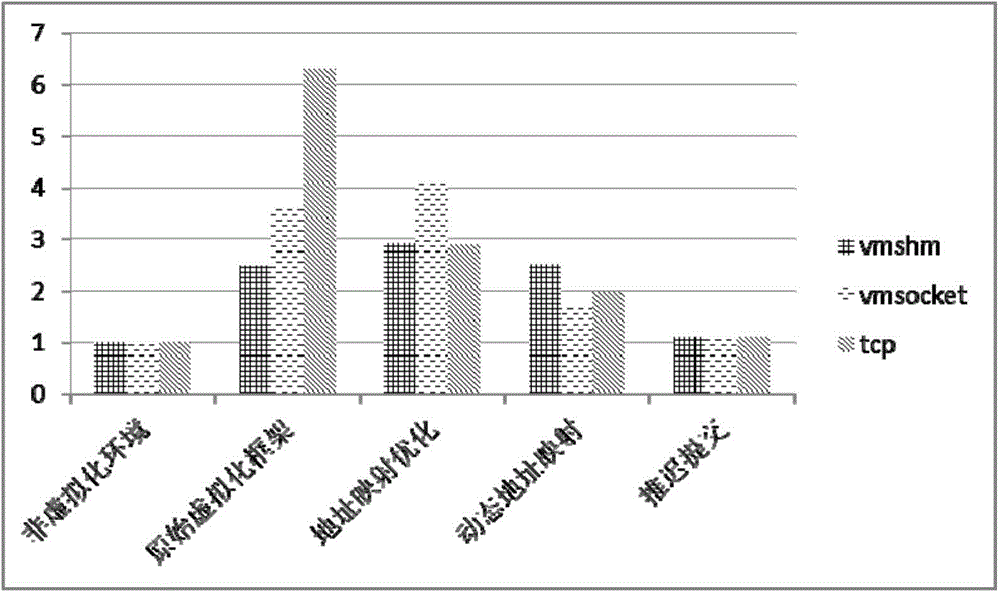

[0081] We implemented a new, optimized GPU virtualization framework (Figure 4) on top of the open-source full-virtualization virtual machine manager KVM, a Linux guest operating system, and the open-source GPU virtualization framework Gvirtus. The description below refers to this figure. The parts drawn with dotted lines are those added or modified by this technology. The application example uses a CUDA application and the CUDA function library (CUDA is a computing platform from the graphics card manufacturer Nvidia; a CUDA program here means an application written for this platform).

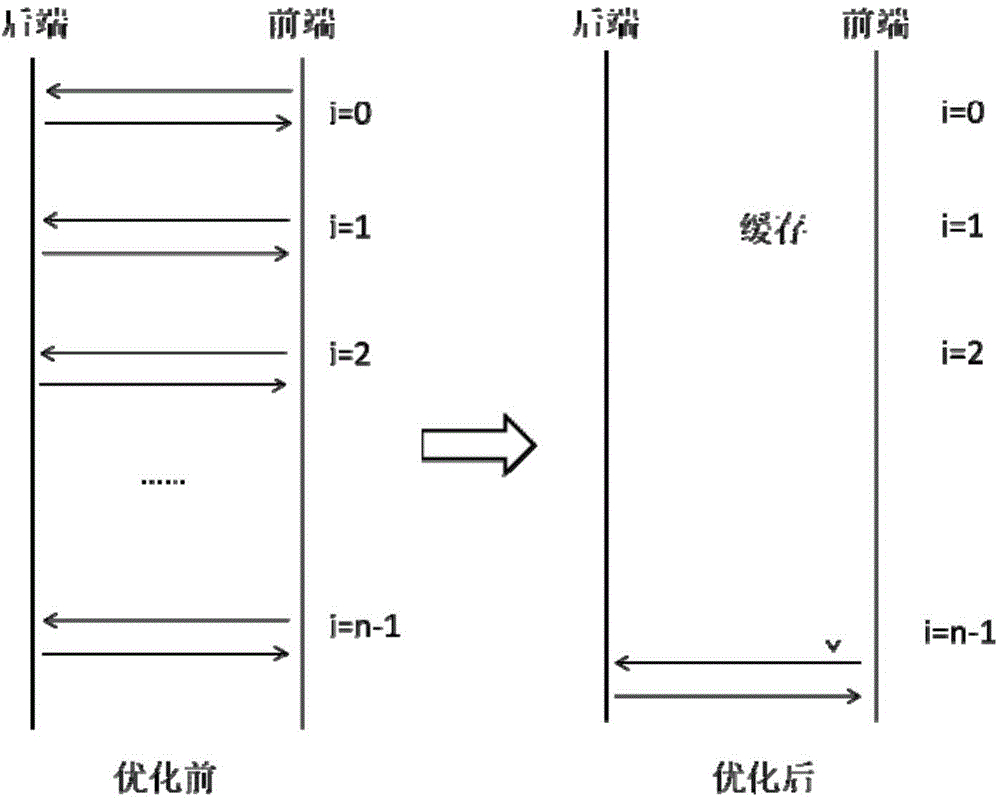

[0082] The open-source Gvirtus virtualization framework has two parts, a front end and a back end. The front end runs in the guest, where it is responsible for rewriting the CUDA library, intercepting the CUDA function calls made by programs in the guest, and sending the call i...
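The front-end role described above, combined with the delayed submission named in the title, can be illustrated with a minimal Python sketch. It is not the patented implementation or Gvirtus code: `DeferredFrontEnd`, `fake_back_end`, `send_batch`, and the `needs_result` flag are hypothetical names, the CUDA function names are used only as string labels, and the rule for which calls may be deferred is an assumption left to the caller.

```python
from dataclasses import dataclass, field
from typing import Any, Callable, List, Tuple

Call = Tuple[str, tuple]  # (function name, arguments) of one intercepted call


@dataclass
class DeferredFrontEnd:
    """Hypothetical guest-side stub that queues intercepted calls
    instead of sending each one to the back end immediately."""
    send_batch: Callable[[List[Call]], List[Any]]  # stand-in for the guest-to-host channel
    pending: List[Call] = field(default_factory=list)
    round_trips: int = 0

    def call(self, name: str, *args: Any, needs_result: bool = False) -> Any:
        # Record the intercepted call; nothing is transmitted yet.
        self.pending.append((name, args))
        if needs_result:
            # The guest needs an answer now, so submit everything queued so far
            # and hand back the result of this (last) call.
            return self.flush()[-1]
        return None

    def flush(self) -> List[Any]:
        # Send all queued calls in a single round trip.
        if not self.pending:
            return []
        batch, self.pending = self.pending, []
        self.round_trips += 1
        return self.send_batch(batch)


def fake_back_end(batch: List[Call]) -> List[Any]:
    """Stand-in for the host side: returns one placeholder result per call."""
    return [f"{name}:ok" for name, _ in batch]


fe = DeferredFrontEnd(send_batch=fake_back_end)
fe.call("cudaMemset", "dev_ptr", 0, 1024)       # queued
fe.call("cudaLaunchKernel", "kernel", "args")   # queued
result = fe.call("cudaDeviceSynchronize", needs_result=True)  # forces submission
print(fe.round_trips, result)
```

In this sketch three intercepted calls cost one guest-to-host round trip instead of three. A real front end would also have to decide how deferred error codes are reported back to the application, which the sketch ignores.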
