Distributed Shared On-Chip Storage Framework Free of a Cache Coherence Protocol

A storage architecture based on distributed technology, applied in the field of processors. It addresses the problems of complex judgment logic and high communication overhead, avoids the need for a cache coherence protocol, and reduces the cache miss rate.

Embodiment Construction

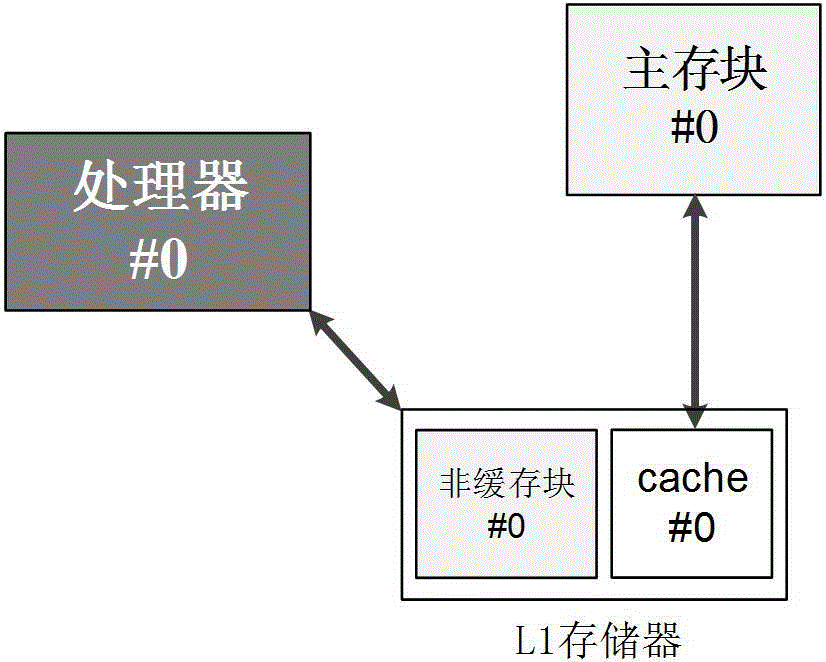

[0025] First, the program is compiled into machine code by a compiler. The generated static initialization data and other shared data are placed in a non-cached block of L1 memory, as shown in Figure 3. All other data are placed in the corresponding main memory block. The addresses of the two regions are contiguous, which simplifies the programming model.
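The placement rule in [0025] can be illustrated with a small linker-style sketch. It is a hypothetical model, not the patent's toolchain. The base address, the region sizes, and the names `Symbol`, `place`, and `region_of` are all assumptions. Shared symbols (static initialization data and other shared data) go to the non-cached L1 block, and all other symbols go to the main memory block. The main memory block starts exactly where the L1 block ends, so the two regions form one contiguous address range.

```python
from dataclasses import dataclass

# Hypothetical layout; the source does not give addresses or sizes.
L1_SHARED_BASE = 0x0000_0000
L1_SHARED_SIZE = 0x0001_0000                      # 64 KiB non-cached L1 block (assumed)
MAIN_MEM_BASE = L1_SHARED_BASE + L1_SHARED_SIZE   # contiguous with the L1 block
MAIN_MEM_SIZE = 0x0010_0000                       # 1 MiB main memory block (assumed)


@dataclass
class Symbol:
    name: str
    size: int
    shared: bool  # True for static initialization data and other shared data


def place(symbols):
    """Assign addresses: shared symbols to the non-cached L1 block,
    everything else to the main memory block. Returns {name: (region, addr)}."""
    next_shared, next_main = L1_SHARED_BASE, MAIN_MEM_BASE
    layout = {}
    for sym in symbols:
        if sym.shared:
            if next_shared + sym.size > MAIN_MEM_BASE:
                raise MemoryError(f"non-cached L1 block full at {sym.name}")
            layout[sym.name] = ("l1_uncached", next_shared)
            next_shared += sym.size
        else:
            if next_main + sym.size > MAIN_MEM_BASE + MAIN_MEM_SIZE:
                raise MemoryError(f"main memory block full at {sym.name}")
            layout[sym.name] = ("main_memory", next_main)
            next_main += sym.size
    return layout


def region_of(addr: int) -> str:
    """Because the regions are contiguous, one linear range check covers both."""
    if L1_SHARED_BASE <= addr < MAIN_MEM_BASE:
        return "l1_uncached"
    if MAIN_MEM_BASE <= addr < MAIN_MEM_BASE + MAIN_MEM_SIZE:
        return "main_memory"
    raise ValueError(f"address {addr:#x} out of range")
```

For example, `place([Symbol("init_table", 256, True), Symbol("local_buf", 1024, False)])` puts `init_table` at the start of the L1 block and `local_buf` at `MAIN_MEM_BASE`. A single address range then covers both regions.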

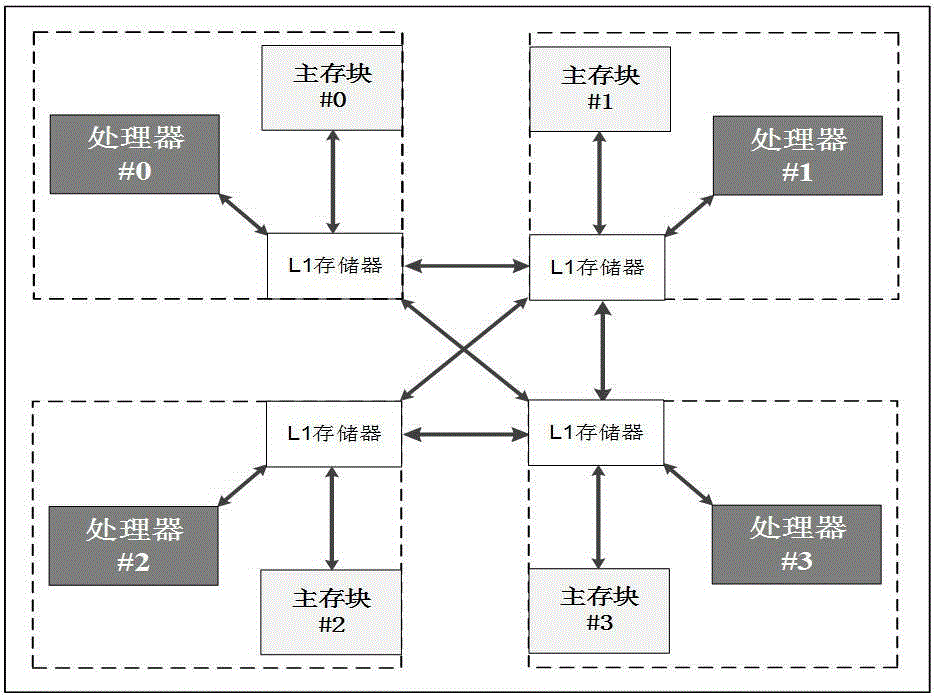

[0026] As shown in Figure 1, consider a cluster of four processors. The four main memory blocks #0, #1, #2, and #3 are placed in the private areas of processors #0, #1, #2, and #3, respectively. Other processors in the cluster cannot access a processor's memory block directly. Together, these blocks form a distributed storage architecture.
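The ownership rule in [0026] can be sketched minimally as follows. The sketch assumes, hypothetically, that blocks #0 to #3 have equal sizes and are laid out back to back in one address space. `BLOCK_SIZE`, `home_processor`, and `PrivateMemoryBlock` are illustrative names, not taken from the source. Integer division finds the home processor of an address, and a direct access from any other processor is rejected.

```python
NUM_PROCESSORS = 4          # cluster size from the example in the text
BLOCK_SIZE = 0x0010_0000    # hypothetical size of each main memory block (1 MiB)


def home_processor(addr: int) -> int:
    """Processor #x whose private area holds the main memory block containing addr."""
    block = addr // BLOCK_SIZE
    if not 0 <= block < NUM_PROCESSORS:
        raise ValueError(f"address {addr:#x} outside the cluster's memory")
    return block


class PrivateMemoryBlock:
    """Main memory block #x, directly accessible only by processor #x."""

    def __init__(self, owner: int):
        self.owner = owner
        self.data = {}  # sparse word store: offset -> value

    def _check(self, requester: int) -> None:
        if requester != self.owner:
            raise PermissionError(
                f"processor #{requester} cannot directly access block #{self.owner}")

    def read(self, requester: int, offset: int) -> int:
        self._check(requester)
        return self.data.get(offset, 0)

    def write(self, requester: int, offset: int, value: int) -> None:
        self._check(requester)
        self.data[offset] = value


blocks = [PrivateMemoryBlock(x) for x in range(NUM_PROCESSORS)]
```

The rejected case is deliberate. In the source, other processors reach a block through the local shared cache described in [0027], not through direct memory access.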

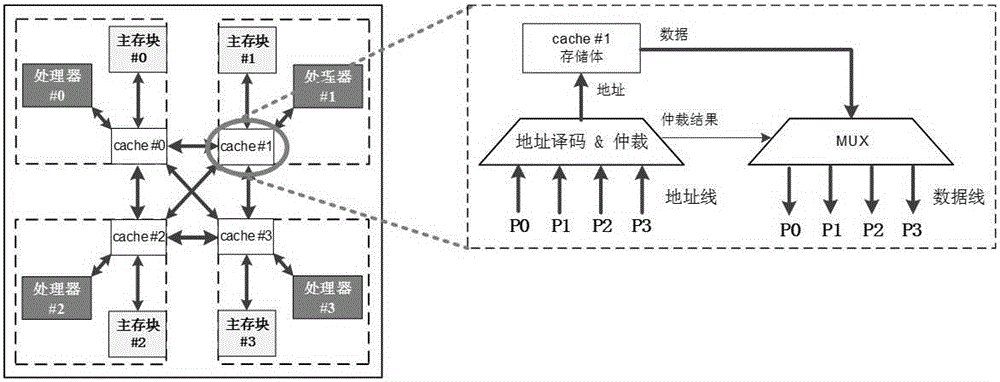

[0027] As shown in Figure 2, each processor in the cluster is also equipped with a local shared cache. In the figure, local shared cache #x maps only main memory block #x (that is, its local block), and can be shared and accessed by all other processors in the cluster in the cl...
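The sketch below models [0027] under stated assumptions. Each local shared cache #x is a direct-mapped, write-back cache that holds lines only from main memory block #x. Every processor's load or store is routed to the cache of the address's home processor. Line size, cache size, block size, and all names (`LocalSharedCache`, `load`, `store`) are hypothetical, and each line stores a single value for brevity. A given line can reside in only one cache, so no other copy exists to invalidate or update. This illustrates why such a design could dispense with a cache coherence protocol.

```python
NUM_PROCESSORS = 4        # cluster size from the example in the text
BLOCK_SIZE = 0x0010_0000  # hypothetical bytes per main memory block
LINE_SIZE = 64            # hypothetical cache line size in bytes
NUM_LINES = 256           # hypothetical lines per local shared cache


def home_processor(addr: int) -> int:
    """Index x of the main memory block (and local shared cache) that owns addr."""
    return addr // BLOCK_SIZE


class LocalSharedCache:
    """Local shared cache #x: direct-mapped, write-back, caching only block #x.

    For brevity each cache line holds a single value instead of LINE_SIZE bytes.
    """

    def __init__(self, home: int):
        self.home = home
        self.memory = {}                 # main memory block #x: line address -> value
        self.lines = [None] * NUM_LINES  # each entry is [tag, value, dirty] or None
        self.misses = 0

    def access(self, addr: int, value=None) -> int:
        if home_processor(addr) != self.home:
            raise ValueError(f"cache #{self.home} maps only main memory block #{self.home}")
        line_addr = addr // LINE_SIZE
        index, tag = line_addr % NUM_LINES, line_addr // NUM_LINES
        entry = self.lines[index]
        if entry is None or entry[0] != tag:
            self.misses += 1
            if entry is not None and entry[2]:
                # Write the dirty victim line back to main memory block #x.
                self.memory[entry[0] * NUM_LINES + index] = entry[1]
            entry = [tag, self.memory.get(line_addr, 0), False]
            self.lines[index] = entry
        if value is not None:
            entry[1], entry[2] = value, True  # store: update the line and mark it dirty
        return entry[1]


caches = [LocalSharedCache(x) for x in range(NUM_PROCESSORS)]


def load(requester: int, addr: int) -> int:
    # Routing depends only on the address, never on the requester, so every
    # processor sees the single copy held by the home cache.
    del requester  # unused: any processor may access any local shared cache
    return caches[home_processor(addr)].access(addr)


def store(requester: int, addr: int, value: int) -> None:
    del requester
    caches[home_processor(addr)].access(addr, value)
```

For example, after `store(0, a, 7)` for an address `a` in block #2, `load(3, a)` returns 7 from cache #2 without any invalidation message. The caches of processors #0 and #3 never hold a copy of that line.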
