Monocular vision ranging method based on edge point information in image

A visual odometry technology based on edge points, applied in the field of UAV navigation and positioning. It addresses problems such as the inability of existing methods to adapt to scenes that lack corner features, contain repetitive structures, or have few features. Its stated effects are enhancing environmental adaptability, reducing dependence, and reducing the amount of computation.
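The summary does not say how edge points are selected. For reference only, one common generic approach keeps the pixels whose intensity-gradient magnitude exceeds a threshold. The sketch below assumes that approach. The function name `extract_edge_points` and the threshold value are hypothetical and not taken from the patent. The usage example shows why edge points can help where corner-based methods fail: a straight step edge has no corners but still yields many edge points.

```python
import numpy as np


def extract_edge_points(image: np.ndarray, threshold: float) -> np.ndarray:
    """Return an (N, 2) array of (row, col) pixels whose gradient magnitude exceeds threshold.

    Hypothetical helper: a generic gradient-magnitude edge selector,
    assumed for illustration; not the patent's own edge-point procedure.
    """
    img = image.astype(np.float64)
    # np.gradient uses central differences inside the image and one-sided
    # differences at the borders; it returns d/drow first, then d/dcol.
    d_row, d_col = np.gradient(img)
    magnitude = np.hypot(d_row, d_col)
    rows, cols = np.nonzero(magnitude > threshold)
    return np.stack([rows, cols], axis=1)


if __name__ == "__main__":
    # Synthetic 8x8 image with one vertical step edge and no corners.
    img = np.zeros((8, 8))
    img[:, 4:] = 100.0
    pts = extract_edge_points(img, threshold=10.0)
    print(len(pts), sorted(set(pts[:, 1].tolist())))  # → 16 [3, 4]
```

A real system would usually add non-maximum suppression or subsampling to thin the edge set. Those steps are omitted here to keep the sketch short.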


Embodiment Construction

[0024] The present invention will be described in detail below in conjunction with the accompanying drawings and embodiments.

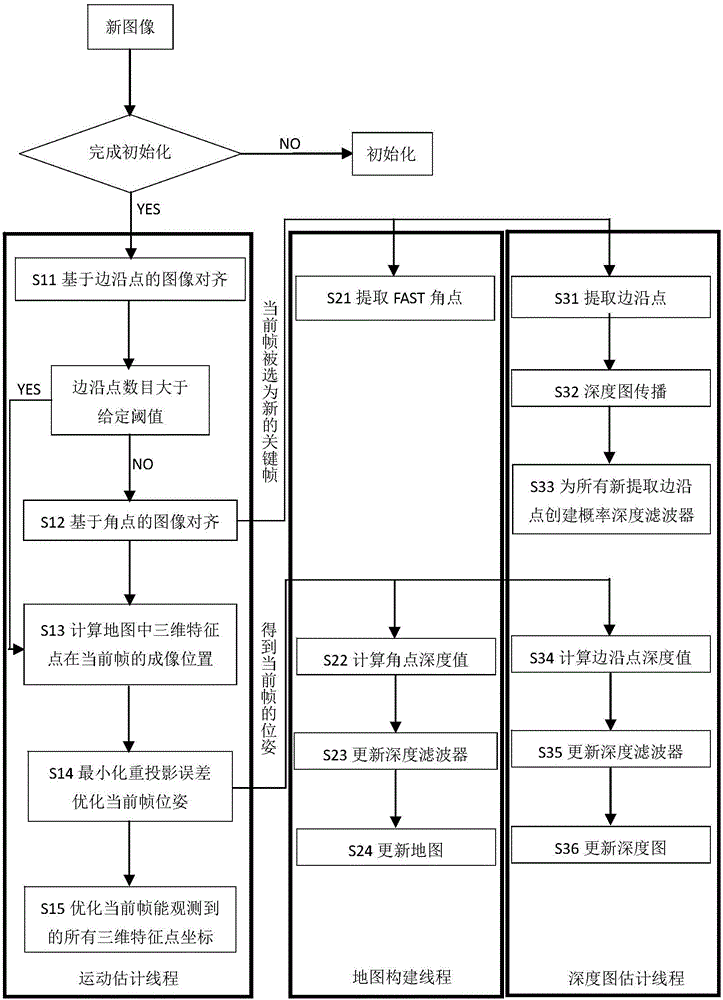

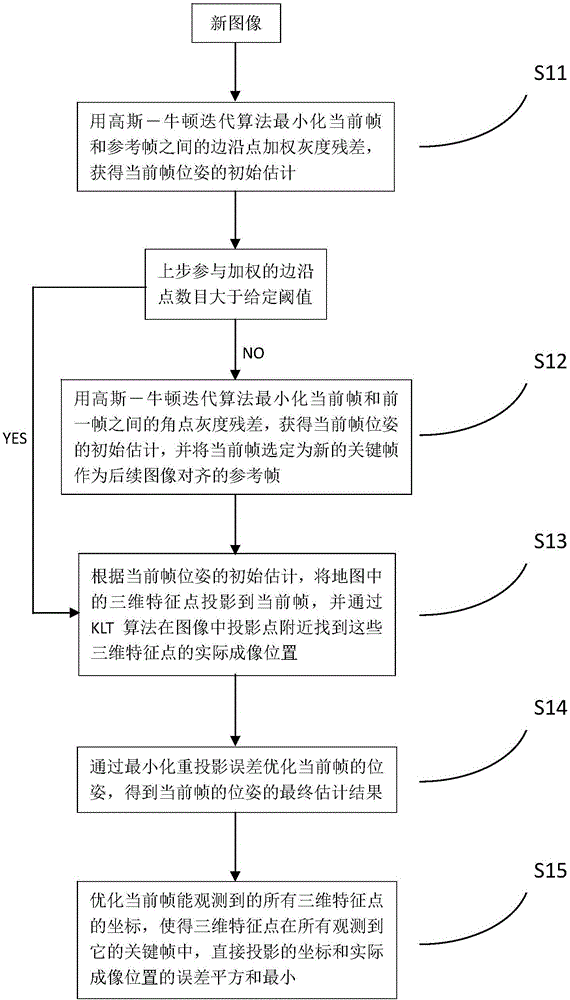

[0025] Figure 2 shows the overall process of the proposed method for monocular visual range measurement (visual odometry) of unmanned aerial vehicles based on edge point information in the image. The method comprises an initialization stage, followed by parallel processing of motion estimation, map construction, and depth map estimation. It specifically includes the following steps:
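The parallel structure described above can be sketched with Python threads and queues. In this sketch, motion estimation runs on the calling thread, while map construction and depth map estimation run on two worker threads. Several choices are illustrative assumptions, not details from the patent: the keyframe rule (every third frame), feeding every frame to the depth thread, and all names such as `run_pipeline`. The actual pose estimation, mapping, and depth updates are replaced by placeholders.

```python
import queue
import threading

_STOP = object()  # sentinel that tells a worker thread to exit


def _worker(name, inbox, log, lock):
    """Consume items until the sentinel arrives; record what was processed."""
    while True:
        item = inbox.get()
        if item is _STOP:
            break
        # Placeholder for real work (map update or depth-map update).
        with lock:
            log.append((name, item))


def run_pipeline(frames, keyframe_every=3):
    """Hypothetical pipeline: motion estimation on the calling thread,
    map construction and depth map estimation on two worker threads."""
    map_q, depth_q = queue.Queue(), queue.Queue()
    log, lock = [], threading.Lock()
    workers = [
        threading.Thread(target=_worker, args=("mapping", map_q, log, lock)),
        threading.Thread(target=_worker, args=("depth", depth_q, log, lock)),
    ]
    for t in workers:
        t.start()

    poses = []
    for idx, frame in enumerate(frames):
        poses.append(("pose", idx))    # stand-in for an estimated camera pose
        depth_q.put(frame)             # assumed: every frame refines the depth map
        if idx % keyframe_every == 0:  # assumed fixed keyframe-selection rule
            map_q.put(frame)           # keyframes extend the map

    for q in (map_q, depth_q):
        q.put(_STOP)
    for t in workers:
        t.join()
    return poses, log


if __name__ == "__main__":
    poses, log = run_pipeline(list(range(10)))
    print(len(poses), sum(n == "mapping" for n, _ in log), sum(n == "depth" for n, _ in log))
    # → 10 4 10
```

The sketch only illustrates the data flow. Under CPython's global interpreter lock, CPU-bound work in these threads would not run truly in parallel.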

[0026] 1) Initialization: Select two frames from the image sequence captured by a downward-looking monocular camera rigidly mounted on the UAV, and use them to construct the initial map and the initial depth map. In this embodiment, the map consists of a set of keyframes $\{r_m\}$ and a set of three-dimensional feature points $\{p_i\}$. Here $r$ denotes a keyframe, the subscript $m$ (a positive integer) indicates the $m$th keyframe, and $p$ represents the th...
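The map representation in step 1) can be sketched as a container holding keyframes $\{r_m\}$ and 3D points $\{p_i\}$. The source text is truncated before it explains how the initial points are computed. The sketch below therefore assumes a standard two-view linear (DLT) triangulation from known 3x4 projection matrices. The names `Keyframe`, `SparseMap`, `project`, `triangulate`, and `initialize_map` are hypothetical, and the initial depth map is not modeled.

```python
from dataclasses import dataclass, field

import numpy as np


@dataclass
class Keyframe:
    """Keyframe r_m. Storing a 3x4 projection matrix P = K[R | t] is an assumption."""
    index: int              # m, a positive integer
    projection: np.ndarray  # 3x4 camera projection matrix


@dataclass
class SparseMap:
    keyframes: list = field(default_factory=list)  # the set {r_m}
    points: list = field(default_factory=list)     # the set {p_i}, each a 3-vector


def project(P: np.ndarray, X: np.ndarray) -> np.ndarray:
    """Project a 3D point X with projection matrix P to image coordinates."""
    x = P @ np.append(X, 1.0)
    return x[:2] / x[2]


def triangulate(P1, P2, x1, x2) -> np.ndarray:
    """Linear (DLT) triangulation of one point observed at x1 in view 1 and x2 in view 2.

    Each observation (u, v) of X under P contributes the two rows
    u * P[2] - P[0] and v * P[2] - P[1]; X is the null vector of the stack.
    """
    A = np.array([
        x1[0] * P1[2] - P1[0],
        x1[1] * P1[2] - P1[1],
        x2[0] * P2[2] - P2[0],
        x2[1] * P2[2] - P2[1],
    ])
    _, _, vt = np.linalg.svd(A)
    X_h = vt[-1]             # right singular vector of the smallest singular value
    return X_h[:3] / X_h[3]  # back from homogeneous coordinates


def initialize_map(P1, P2, matches) -> SparseMap:
    """Hypothetical initialization: two keyframes plus one triangulated point per match."""
    m = SparseMap()
    m.keyframes = [Keyframe(1, P1), Keyframe(2, P2)]
    m.points = [triangulate(P1, P2, a, b) for a, b in matches]
    return m


if __name__ == "__main__":
    # Normalized cameras (K = I); camera 2 is shifted 1 unit along x (t = -R C, R = I).
    P1 = np.hstack([np.eye(3), np.zeros((3, 1))])
    P2 = np.hstack([np.eye(3), np.array([[-1.0], [0.0], [0.0]])])
    X = np.array([0.5, 0.2, 4.0])
    m = initialize_map(P1, P2, [(project(P1, X), project(P2, X))])
    print(len(m.keyframes), np.allclose(m.points[0], X))  # → 2 True
```

With a single monocular camera, a two-view reconstruction is defined only up to scale. In this sketch, the scale comes from the assumed baseline encoded in `P2`.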
