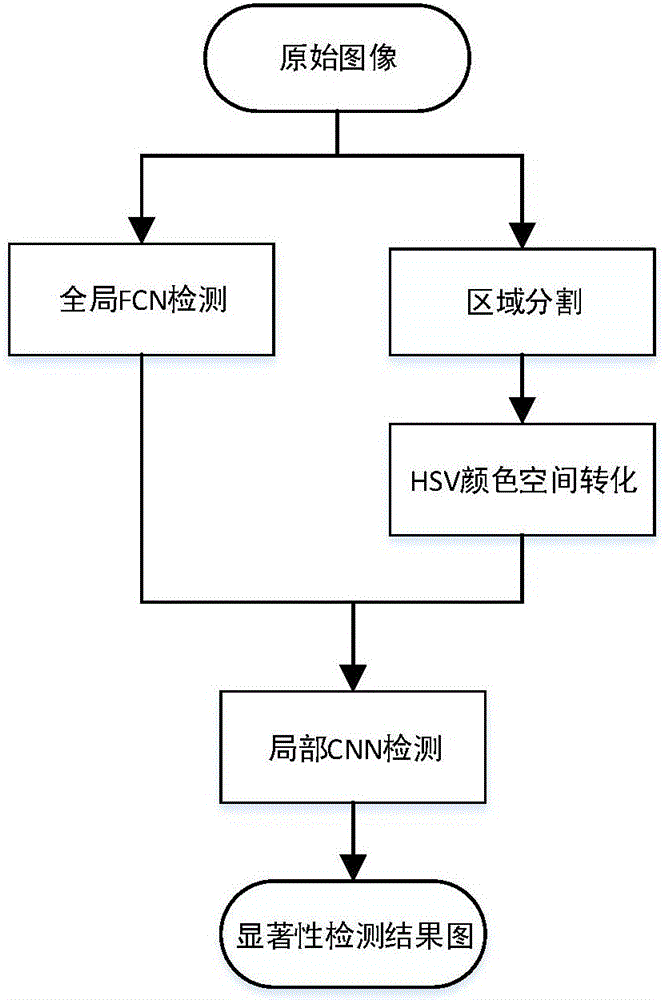

Salient object detection method based on FCN (fully convolutional network) and CNN (convolutional neural network)

A salient object detection technique based on a fully convolutional network, applied in biological neural network models, computer components, character and pattern recognition, etc. It addresses problems such as neglect, inaccurate detection results, and poor results, with the effect of improving accuracy and reducing complexity.

Embodiment Construction

[0021] The present invention is further described below with reference to an embodiment and the accompanying drawings:

[0022] Step 1. Construct the FCN network structure

[0023] The FCN network structure consists of thirteen convolutional layers, five pooling layers, and two deconvolution layers. The model is fine-tuned from the VGG-16 model pre-trained on ImageNet. The fully connected layers of the VGG-16 model are removed, and two bilinear interpolation layers are added as the deconvolution layers. The first deconvolution layer performs 4x interpolation and the second performs 8x interpolation, expanding the network output to the same size as the original image (together they undo the 32x downsampling of the five pooling layers). The number of classes is set to two, so that each pixel undergoes binary classification.
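The layer counts and the two interpolation factors in [0023] can be checked with a small shape trace. This is a minimal sketch, not the patent's implementation. It assumes the standard VGG-16 layout (3x3 convolutions with stride 1 and padding 1, 2x2 max-pooling with stride 2) and input sizes divisible by 32. The names `VGG16_CFG`, `UPSAMPLE_FACTORS`, and `trace_fcn_shape` are hypothetical and introduced only for illustration.

```python
# Assumed standard VGG-16 convolutional layout: integers are the output
# channels of a 3x3 convolution, "M" marks a 2x2 max-pooling layer.
VGG16_CFG = [64, 64, "M", 128, 128, "M", 256, 256, 256, "M",
             512, 512, 512, "M", 512, 512, 512, "M"]

# Interpolation factors of the two bilinear "deconvolution" layers in [0023].
UPSAMPLE_FACTORS = [4, 8]


def trace_fcn_shape(height, width):
    """Trace the spatial size of a feature map through the described FCN.

    Returns (num_conv, num_pool, encoded_hw, output_hw).
    """
    h, w = height, width
    num_conv = 0
    num_pool = 0
    for layer in VGG16_CFG:
        if layer == "M":
            # 2x2 pooling with stride 2 halves each spatial dimension.
            h, w = h // 2, w // 2
            num_pool += 1
        else:
            # 3x3 convolution with stride 1 and padding 1 keeps the size.
            num_conv += 1
    encoded_hw = (h, w)
    for factor in UPSAMPLE_FACTORS:
        # Bilinear interpolation enlarges each dimension by the factor.
        h, w = h * factor, w * factor
    return num_conv, num_pool, encoded_hw, (h, w)


if __name__ == "__main__":
    print(trace_fcn_shape(224, 224))
```

Under these assumptions the five pooling layers shrink the input by a factor of 2^5 = 32, and the 4x and 8x interpolations multiply back to 32, which is why the output matches the original image size. Inputs whose sides are not multiples of 32 would not round-trip exactly in this sketch.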

[0024] Step 2. Train the network structure

[0025] Feed the training samples into the network to classify each pixel in the image according to the output of the logistic regression classifier, use the s...


© 2025 PatSnap. All rights reserved.Legal|Privacy policy|Modern Slavery Act Transparency Statement|Sitemap|About US| Contact US: help@patsnap.com